Jianghong Ma

Diversity Recommendation via Causal Deconfounding of Co-purchase Relations and Counterfactual Exposure

Dec 19, 2025

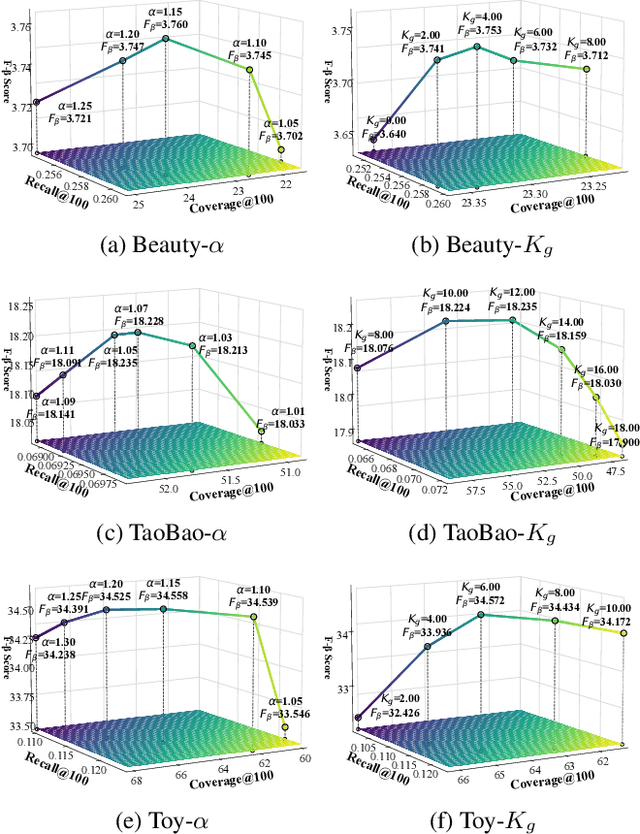

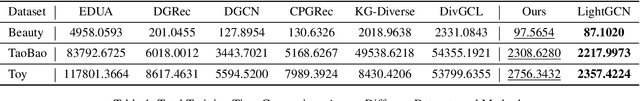

Abstract: Beyond user-item modeling, item-to-item relationships are increasingly used to enhance recommendation. However, common methods largely rely on co-occurrence, making them prone to confounding by item popularity and user attributes, which degrades embedding quality and performance. Meanwhile, although diversity is acknowledged as a key aspect of recommendation quality, existing research pays limited attention to it, with a notable lack of causal perspectives and theoretical grounding. To address these challenges, we propose Cadence (Diversity Recommendation via Causal Deconfounding of Co-purchase Relations and Counterfactual Exposure), a plug-and-play framework built upon LightGCN as the backbone, primarily designed to enhance recommendation diversity while preserving accuracy. First, we compute the Unbiased Asymmetric Co-purchase Relationship (UACR) between items, excluding the effects of item popularity and user attributes, to construct a deconfounded directed item graph, with an aggregation mechanism to refine embeddings. Second, we leverage UACR to identify diverse categories of items that exhibit strong causal relevance to a user's interacted items but have not yet been engaged with. We then simulate their behavior under high-exposure scenarios, thereby significantly enhancing recommendation diversity while preserving relevance. Extensive experiments on real-world datasets demonstrate that our method consistently outperforms state-of-the-art diversity models in both diversity and accuracy, and further validate its effectiveness, transferability, and efficiency over baselines.

RocketEval: Efficient Automated LLM Evaluation via Grading Checklist

Mar 07, 2025

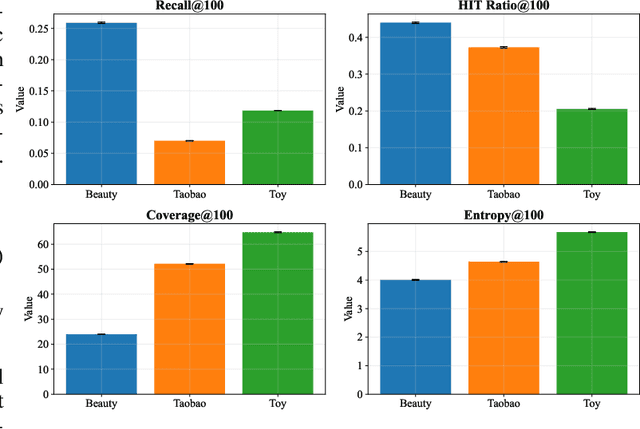

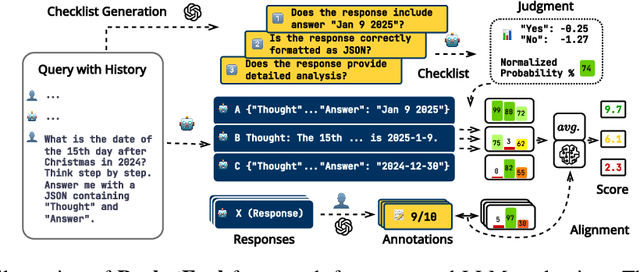

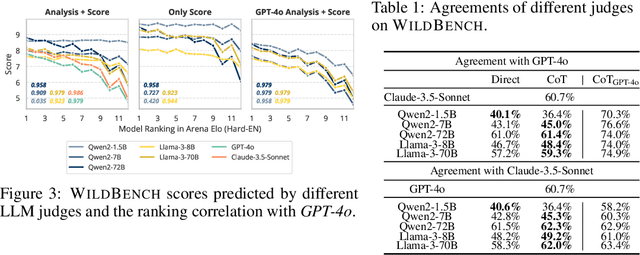

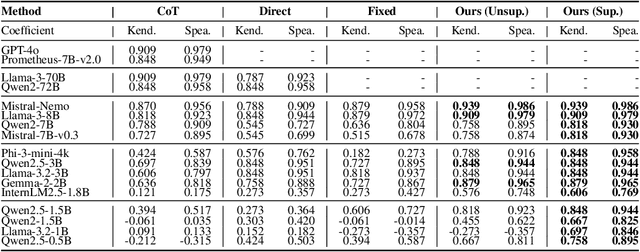

Abstract: Evaluating large language models (LLMs) in diverse and challenging scenarios is essential to align them with human preferences. To mitigate the prohibitive costs associated with human evaluations, utilizing a powerful LLM as a judge has emerged as a favored approach. Nevertheless, this methodology encounters several challenges, including substantial expenses, concerns regarding privacy and security, and limited reproducibility. In this paper, we propose a straightforward, replicable, and accurate automated evaluation method, named RocketEval, that uses a lightweight LLM as the judge. Initially, we identify that the performance disparity between lightweight and powerful LLMs in evaluation tasks primarily stems from their ability to conduct comprehensive analyses, which is not easily enhanced through techniques such as chain-of-thought reasoning. By reframing the evaluation task as a multi-faceted Q&A using an instance-specific checklist, we demonstrate that the limited judgment accuracy of lightweight LLMs is largely attributable to high uncertainty and positional bias. To address these challenges, we introduce an automated evaluation process grounded in checklist grading, which is designed to accommodate a variety of scenarios and questions. This process encompasses the creation of checklists, the grading of these checklists by lightweight LLMs, and the reweighting of checklist items to align with the supervised annotations. Our experiments, carried out on the automated evaluation benchmarks MT-Bench and WildBench, reveal that RocketEval, when using Gemma-2-2B as the judge, achieves a high correlation (0.965) with human preferences, which is comparable to GPT-4o. Moreover, RocketEval provides a cost reduction exceeding 50-fold for large-scale evaluation and comparison scenarios. Our code is available at https://github.com/Joinn99/RocketEval-ICLR.

COutfitGAN: Learning to Synthesize Compatible Outfits Supervised by Silhouette Masks and Fashion Styles

Feb 12, 2025

Abstract: How to recommend outfits has gained considerable attention in both academia and industry in recent years. Many studies have been carried out regarding fashion compatibility learning, to determine whether the fashion items in an outfit are compatible or not. These methods mainly focus on evaluating the compatibility of existing outfits and rarely consider applying such knowledge to 'design' new fashion items. We propose the new task of generating complementary and compatible fashion items based on an arbitrary number of given fashion items. In particular, given some fashion items that can make up an outfit, the aim of this paper is to synthesize photo-realistic images of other, complementary, fashion items that are compatible with the given ones. To achieve this, we propose an outfit generation framework, referred to as COutfitGAN, which includes a pyramid style extractor, an outfit generator, a UNet-based real/fake discriminator, and a collocation discriminator. To train and evaluate this framework, we collected a large-scale fashion outfit dataset with over 200K outfits and 800K fashion items from the Internet. Extensive experiments show that COutfitGAN outperforms other baselines in terms of similarity, authenticity, and compatibility measurements.

FCBoost-Net: A Generative Network for Synthesizing Multiple Collocated Outfits via Fashion Compatibility Boosting

Feb 03, 2025

Abstract: Outfit generation is a challenging task in the field of fashion technology, in which the aim is to create a collocated set of fashion items that complement a given set of items. Previous studies in this area have been limited to generating a unique set of fashion items based on a given set of items, without providing additional options to users. This lack of a diverse range of choices necessitates the development of a more versatile framework. However, when the task of generating collocated and diversified outfits is approached with multimodal image-to-image translation methods, it poses a challenging problem in terms of non-aligned image translation, which is hard to address with existing methods. In this research, we present FCBoost-Net, a new framework for outfit generation that leverages the power of pre-trained generative models to produce multiple collocated and diversified outfits. Initially, FCBoost-Net randomly synthesizes multiple sets of fashion items, and the compatibility of the synthesized sets is then improved in several rounds using a novel fashion compatibility booster. This approach was inspired by boosting algorithms and allows the performance to be gradually improved in multiple steps. Empirical evidence indicates that the proposed strategy can improve the fashion compatibility of randomly synthesized fashion items as well as maintain their diversity. Extensive experiments confirm the effectiveness of our proposed framework with respect to visual authenticity, diversity, and fashion compatibility.

BC-GAN: A Generative Adversarial Network for Synthesizing a Batch of Collocated Clothing

Feb 03, 2025

Abstract: Collocated clothing synthesis using generative networks has become an emerging topic in the field of fashion intelligence, as it has significant potential economic value to increase revenue in the fashion industry. In previous studies, several works have attempted to synthesize visually-collocated clothing based on a given clothing item using generative adversarial networks (GANs) with promising results. These works, however, can only synthesize one collocated clothing item at a time. Users, in contrast, may require multiple clothing items to choose from, owing to their personal tastes and different dressing scenarios. To address this limitation, we introduce a novel batch clothing generation framework, named BC-GAN, which is able to synthesize multiple visually-collocated clothing images simultaneously. In particular, to further improve the fashion compatibility of synthetic results, BC-GAN proposes a new fashion compatibility discriminator from a contrastive learning perspective by fully exploiting the collocation relationship among all clothing items. Our model was evaluated on a large-scale dataset of compatible outfits that we constructed ourselves. Extensive experimental results confirmed the effectiveness of our proposed BC-GAN in comparison to state-of-the-art methods in terms of diversity, visual authenticity, and fashion compatibility.

GiVE: Guiding Visual Encoder to Perceive Overlooked Information

Oct 26, 2024

Abstract: Multimodal Large Language Models have advanced AI in applications like text-to-video generation and visual question answering. These models rely on visual encoders to convert non-text data into vectors, but current encoders either lack semantic alignment or overlook non-salient objects. We propose the Guiding Visual Encoder to Perceive Overlooked Information (GiVE) approach. GiVE enhances visual representation with an Attention-Guided Adapter (AG-Adapter) module and an Object-focused Visual Semantic Learning module. These incorporate three novel loss terms: Object-focused Image-Text Contrast (OITC) loss, Object-focused Image-Image Contrast (OIIC) loss, and Object-focused Image Discrimination (OID) loss, improving object consideration, retrieval accuracy, and comprehensiveness. Our contributions include dynamic visual focus adjustment, novel loss functions to enhance object retrieval, and the Multi-Object Instruction (MOInst) dataset. Experiments show our approach achieves state-of-the-art performance.

Does Faithfulness Conflict with Plausibility? An Empirical Study in Explainable AI across NLP Tasks

Mar 29, 2024

Abstract: Explainability algorithms aimed at interpreting decision-making AI systems usually consider balancing two critical dimensions: 1) faithfulness, where explanations accurately reflect the model's inference process; and 2) plausibility, where explanations are consistent with the judgments of domain experts. However, the question arises: do faithfulness and plausibility inherently conflict? In this study, through a comprehensive quantitative comparison between the explanations from the selected explainability methods and expert-level interpretations across three NLP tasks (sentiment analysis, intent detection, and topic labeling), we demonstrate that the traditional perturbation-based methods Shapley value and LIME could attain greater faithfulness and plausibility. Our findings suggest that rather than optimizing for one dimension at the expense of the other, we could seek to optimize explainability algorithms with dual objectives to achieve high levels of accuracy and user accessibility in their explanations.

DRGame: Diversified Recommendation for Multi-category Video Games with Balanced Implicit Preferences

Aug 30, 2023

Abstract: The growing popularity of subscription services in video game consumption has emphasized the importance of offering diversified recommendations. Providing users with a diverse range of games is essential for ensuring continued engagement and fostering long-term subscriptions. However, existing recommendation models face challenges in effectively handling highly imbalanced implicit feedback in gaming interactions. Additionally, they struggle to take into account the distinctive characteristics of multiple categories and the latent user interests associated with these categories. In response to these challenges, we propose a novel framework, named DRGame, to obtain diversified recommendations. It is centered on multi-category video games and consists of two components: Balance-driven Implicit Preferences Learning for data pre-processing and the Clustering-based Diversified Recommendation Module for final prediction. The first module aims to achieve a balanced representation of implicit feedback in game time, thereby discovering a comprehensive view of player interests across different categories. The second module adopts category-aware representation learning to cluster and select players and games based on balanced implicit preferences, and then employs asymmetric neighbor aggregation to achieve diversified recommendations. Experimental results on a real-world dataset demonstrate the superiority of our proposed method over existing approaches in terms of game diversity recommendations.

IDVT: Interest-aware Denoising and View-guided Tuning for Social Recommendation

Aug 30, 2023

Abstract: In the information age, recommendation systems are vital for efficiently filtering information and identifying user preferences. Online social platforms have enriched these systems by providing valuable auxiliary information. Socially connected users are assumed to share similar preferences, enhancing recommendation accuracy and addressing cold start issues. However, empirical findings challenge the assumption, revealing that certain social connections can actually harm system performance. Our statistical analysis indicates a significant amount of noise in the social network, where many socially connected users do not share common interests. To address this issue, we propose an innovative Interest-aware Denoising and View-guided Tuning (IDVT) method for social recommendation. The first ID part effectively denoises social connections. Specifically, the denoising process considers both social network structure and user interaction interests in a global view. Moreover, in this global view, we also integrate denoised social information (social domain) into the propagation of the user-item interactions (collaborative domain) and aggregate user representations from two domains using a gating mechanism. To tackle potential user interest loss and enhance model robustness within the global view, our second VT part introduces two additional views (local view and dropout-enhanced view) for fine-tuning user representations in the global view through contrastive learning. Extensive evaluations on real-world datasets with varying noise ratios demonstrate the superiority of IDVT over state-of-the-art social recommendation methods.

Asymmetric feature interaction for interpreting model predictions

May 12, 2023

Abstract: In natural language processing (NLP), deep neural networks (DNNs) can model complex contextual interactions and have achieved impressive results on a range of NLP tasks. Prior works on feature interaction attribution mainly focus on studying symmetric interaction that only explains the additional influence of a set of words in combination, which fails to capture asymmetric influence that contributes to model prediction. In this work, we propose an asymmetric feature interaction attribution explanation model that aims to explore asymmetric higher-order feature interactions in the inference of deep neural NLP models. By representing our explanation with a directed interaction graph, we experimentally demonstrate the interpretability of the graph in discovering asymmetric feature interactions. Experimental results on two sentiment classification datasets show the superiority of our model against the state-of-the-art feature interaction attribution methods in identifying influential features for model predictions. Our code is available at https://github.com/StillLu/ASIV.
