Bodong Shang

Enriched K-Tier Heterogeneous Satellite Networks Model with User Association Policies

May 15, 2025

Abstract: With the rapid evolution of non-terrestrial networks (NTNs), satellite communication has become a focal area of research because of its critical role in enabling seamless global connectivity. In this paper, we investigate two representative user association policies (UAPs) for multi-tier heterogeneous satellite networks (HetSatNets), namely the nearest-satellite UAP and the maximum signal-to-interference-plus-noise ratio (max-SINR) satellite UAP, where each tier is characterized by a distinct constellation configuration and transmission pattern. Using stochastic geometry, we analyze several intermediate system quantities, including the probability that a typical user accesses each satellite tier, the aggregate interference power, and their corresponding Laplace transforms (LTs) under both UAPs. Subsequently, we derive explicit expressions for the coverage probability (CP), non-handover probability (NHP), and time delay outage probability (DOP) of the typical user. Furthermore, we propose a novel weighted metric (WM) that integrates CP, NHP, and DOP to explore their trade-offs in system design. The robustness of the theoretical framework is verified through Monte Carlo simulations calibrated with the actual Starlink constellation, affirming the precision of our analytical approach. The empirical findings identify the optimal UAP for various HetSatNet scenarios with respect to CP, NHP, and DOP.
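
A minimal Monte Carlo sketch of how the two UAPs can be compared, assuming two tiers of satellites dropped uniformly on concentric spheres, Rayleigh fading, a single shared band, no elevation mask, and a typical user at the north pole. The tier sizes, altitudes, relative powers, and the helper names `sample_tier` and `coverage_nearest_vs_max_sinr` are hypothetical; this is not the paper's analytical model or its Starlink calibration.

```python
import math
import random

R_EARTH_KM = 6371.0


def sample_tier(n_sats, altitude_km, rng):
    """Drop n_sats points uniformly on a sphere of radius R_E + altitude (binomial point process)."""
    r = R_EARTH_KM + altitude_km
    points = []
    for _ in range(n_sats):
        z = r * (2.0 * rng.random() - 1.0)  # uniform z <=> uniform surface area (Archimedes)
        phi = 2.0 * math.pi * rng.random()
        rho = math.sqrt(r * r - z * z)
        points.append((rho * math.cos(phi), rho * math.sin(phi), z))
    return points


def coverage_nearest_vs_max_sinr(tiers, sinr_threshold, noise, alpha=2.0, trials=2000, seed=1):
    """Monte Carlo CP of the typical user under the nearest and max-SINR association rules.

    tiers: list of (n_sats, altitude_km, tx_power) tuples (hypothetical values).
    The user sits at the north pole (0, 0, R_E); all visible satellites share one band.
    """
    rng = random.Random(seed)
    covered_nearest = covered_max = 0
    for _ in range(trials):
        links = []  # (distance_km, received_power) for every visible satellite, all tiers
        for n_sats, altitude_km, tx_power in tiers:
            for x, y, z in sample_tier(n_sats, altitude_km, rng):
                if z < R_EARTH_KM:  # below the user's local horizon plane
                    continue
                d = math.sqrt(x * x + y * y + (z - R_EARTH_KM) ** 2)
                fading = rng.expovariate(1.0)  # Rayleigh fading: exponential power gain
                links.append((d, tx_power * fading * d ** (-alpha)))
        if not links:
            continue  # nothing visible: outage under both rules
        total = sum(p for _, p in links)
        nearest_p = min(links, key=lambda link: link[0])[1]
        # SINR_i = S_i / (total - S_i + noise) grows with S_i, so max-SINR = strongest link.
        strongest_p = max(p for _, p in links)
        covered_nearest += nearest_p / (total - nearest_p + noise) > sinr_threshold
        covered_max += strongest_p / (total - strongest_p + noise) > sinr_threshold
    return covered_nearest / trials, covered_max / trials


if __name__ == "__main__":
    tiers = [(200, 550.0, 1.0), (50, 1200.0, 4.0)]  # (satellites, altitude in km, relative power)
    cp_near, cp_max = coverage_nearest_vs_max_sinr(tiers, sinr_threshold=0.5, noise=1e-9)
    print(f"CP nearest = {cp_near:.3f}, CP max-SINR = {cp_max:.3f}")
```

Because every visible satellite is assumed to interfere, the max-SINR rule reduces to picking the strongest received signal, so on each realization it covers the user whenever the nearest rule does; the sketch says nothing about NHP or DOP, where the nearest rule may behave differently.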

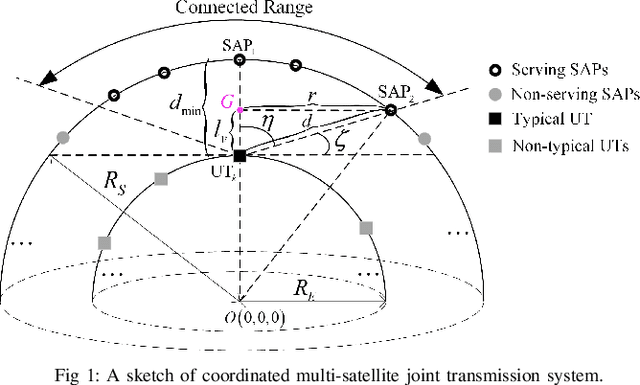

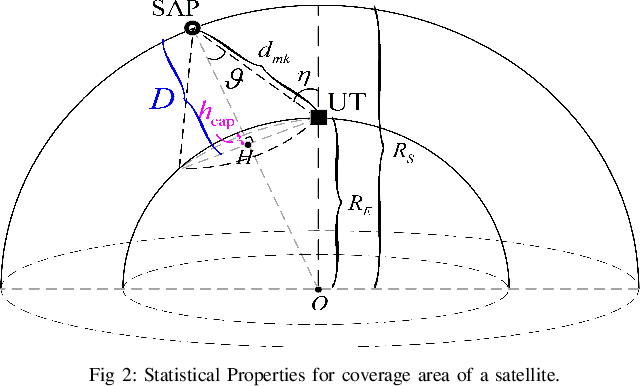

Downlink Performance of Cell-Free Massive MIMO for LEO Satellite Mega-Constellation

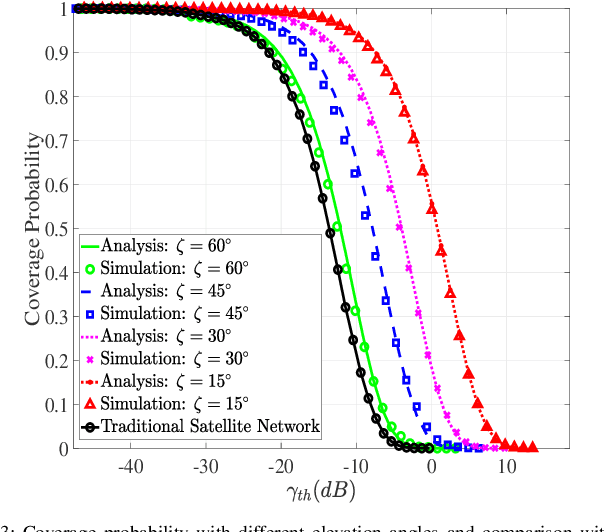

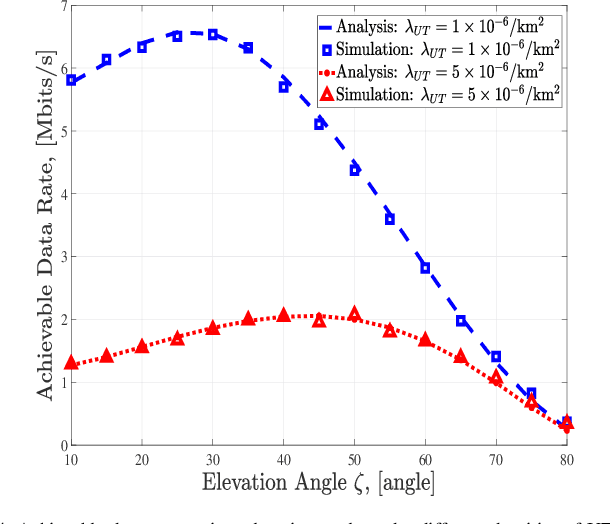

Jan 10, 2025

Abstract: Low-earth orbit (LEO) satellite communication (SatCom) has emerged as a promising technology for improving wireless connectivity worldwide. Cell-free massive multiple-input multiple-output (CF-mMIMO), an architecture recently proposed for next-generation networks, has yet to be fully explored for LEO satellites. In this paper, we investigate the downlink performance of a CF-mMIMO LEO SatCom network, where many satellite access points (SAPs) simultaneously serve the corresponding ground user terminals (UTs). Using tools from stochastic geometry, we model the locations of SAPs and UTs on the surfaces of concentric spheres as Poisson point processes (PPPs) and present expressions based on linear minimum-mean-square-error (LMMSE) channel estimation and conjugate beamforming. We then derive the coverage probabilities in both fading and non-fading scenarios, accounting for key system parameters such as the Nakagami fading parameter, the number of UTs, the number of SAPs, the orbital altitude, and the service range determined by the dome angle. Finally, the analytical model is verified by extensive Monte Carlo simulations. Simulation results show that stronger line-of-sight (LoS) effects and a wider service range for the UT yield higher coverage probability despite multi-user interference. Moreover, we find that there exist optimal numbers of UTs for different orbital altitudes and dome angles, which provides valuable system design insights.
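
The link between orbital altitude, service range, and how many SAPs a UT can reach is pure spherical geometry. The sketch below assumes a minimum elevation angle as a stand-in for the paper's dome angle (whose exact definition is not given here) and satellites spread uniformly over their sphere; for a PPP with the same mean number of SAPs, the expected visible count is the same. The function names are hypothetical.

```python
import math

R_EARTH_KM = 6371.0


def max_central_angle(altitude_km, min_elevation_deg):
    """Largest Earth-central angle between the UT and a SAP seen above the elevation mask.

    From the triangle (Earth centre, UT, SAP): cos(eps + phi) = R cos(eps) / (R + h).
    """
    eps = math.radians(min_elevation_deg)
    r_sat = R_EARTH_KM + altitude_km
    return math.acos(R_EARTH_KM * math.cos(eps) / r_sat) - eps


def max_slant_range_km(altitude_km, min_elevation_deg):
    """UT-to-SAP distance at the edge of the service region (law of cosines)."""
    phi = max_central_angle(altitude_km, min_elevation_deg)
    r_sat = R_EARTH_KM + altitude_km
    return math.sqrt(R_EARTH_KM ** 2 + r_sat ** 2 - 2.0 * R_EARTH_KM * r_sat * math.cos(phi))


def mean_visible_saps(n_saps, altitude_km, min_elevation_deg):
    """Expected number of SAPs inside the visible spherical cap (cap area / sphere area)."""
    phi = max_central_angle(altitude_km, min_elevation_deg)
    return n_saps * (1.0 - math.cos(phi)) / 2.0


for h in (550.0, 1200.0):
    print(f"h = {h:.0f} km: max range {max_slant_range_km(h, 25.0):.0f} km, "
          f"mean visible SAPs {mean_visible_saps(1000, h, 25.0):.1f}")
```

Raising the altitude or lowering the elevation mask enlarges the visible cap, which brings more SAPs into range but also lengthens the worst-case path.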

Coverage and Spectral Efficiency of NOMA-Enabled LEO Satellite Networks with Ordering Schemes

Jan 10, 2025

Abstract: This paper presents an analytical model for low-earth orbit (LEO) multi-satellite downlink non-orthogonal multiple access (NOMA) networks. The satellites transmit data to multiple NOMA user terminals (UTs), each employing successive interference cancellation (SIC) for decoding. Two ordering schemes are adopted for NOMA-enabled LEO satellite networks, i.e., mean signal power (MSP)-based ordering and instantaneous signal-to-inter-satellite-interference-plus-noise ratio (ISINR)-based ordering. For each ordering scheme, we derive the coverage probabilities of UTs under different channel conditions. Moreover, we discuss how coverage is influenced by SIC, main-lobe gain, and the trade-offs between the number of satellites and their altitudes. Additionally, two user fairness-based power allocation (PA) schemes are considered, and we study the PA coefficients and the optimal number of UTs that maximize the sum spectral efficiency (SE). Simulation results show that, for each PA scheme, there exists a maximum signal-to-inter-satellite-interference-plus-noise ratio (SINR) threshold that ensures the operation of NOMA in LEO satellite networks, and that the benefit of NOMA exists only when the target SINR is below a certain threshold. Compared with orthogonal multiple access (OMA), NOMA increases the UTs' sum SE by as much as 35%. Furthermore, for most SINR thresholds, the sum SE increases with the number of UTs up to its highest value, while the maximum sum SE is obtained when there are two UTs.
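
To make the SIC and sum-SE comparison concrete, here is a textbook two-user downlink NOMA sketch against an equal time-division OMA baseline. It assumes a single satellite, deterministic channel gains, noise only (no inter-satellite interference), perfect SIC, and users ordered by channel gain; the function names and numbers are hypothetical and do not reproduce the paper's MSP or ISINR ordering or its PA schemes.

```python
import math


def noma_two_user_rates(p_total, a_far, g_near, g_far, noise):
    """Rates (bit/s/Hz) for two-user downlink NOMA with perfect SIC, assuming g_near >= g_far.

    a_far is the power fraction of the weaker (far) user; the near user gets 1 - a_far.
    """
    a_near = 1.0 - a_far
    # Far user decodes its own signal, treating the near user's signal as interference.
    r_far = math.log2(1 + a_far * p_total * g_far / (a_near * p_total * g_far + noise))
    # Near user decodes and cancels the far user's signal (SIC), then decodes its own.
    r_near = math.log2(1 + a_near * p_total * g_near / noise)
    return r_near, r_far


def oma_two_user_rates(p_total, g_near, g_far, noise):
    """Equal time-division OMA baseline: each user gets half the time at full power."""
    return (0.5 * math.log2(1 + p_total * g_near / noise),
            0.5 * math.log2(1 + p_total * g_far / noise))


r_noma = noma_two_user_rates(p_total=1.0, a_far=0.8, g_near=10.0, g_far=1.0, noise=0.1)
r_oma = oma_two_user_rates(p_total=1.0, g_near=10.0, g_far=1.0, noise=0.1)
print(f"NOMA sum SE: {sum(r_noma):.3f}, OMA sum SE: {sum(r_oma):.3f}")
```

Sweeping `a_far` or the target rate in this toy model shows why NOMA only pays off below some SINR target: a demanding far-user target forces so much power onto the far user that the near user's post-SIC rate collapses.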

Spectrum Sharing in Satellite-Terrestrial Integrated Networks: Frameworks, Approaches, and Opportunities

Jan 06, 2025

Abstract: To accommodate the increasing communication needs in non-terrestrial networks (NTNs), wireless users in remote areas may require access to more spectrum than is currently allocated. Terrestrial networks (TNs), such as cellular networks, are deployed in specific areas, but many licensed spectrum bands remain underused in remote areas. Therefore, sharing spectrum between NTNs and TNs can improve network capacity, provided that interference is reasonably managed. However, in satellite-terrestrial integrated networks (STINs), the wide coverage of a satellite and the unbalanced communication resources of STINs make it challenging to manage mutual interference between NTN and TN effectively. This article presents the fundamentals and prospects of spectrum sharing (SS) in STINs by introducing four SS frameworks, their potential application scenarios, and their technical challenges. Furthermore, advanced SS approaches related to interference management in STINs and performance metrics for SS in STINs are introduced. Moreover, a preliminary performance evaluation showcases the potential of sharing spectrum between NTN and TN. Finally, future research opportunities for SS in STINs are discussed.

Channel Modeling and Rate Analysis of Optical Inter-Satellite Link (OISL)

Jan 06, 2025

Abstract: Optical inter-satellite links (OISLs) improve connectivity between satellites in space. Compared with traditional radio frequency transmission, they offer high-throughput data transfer and reduced size, weight, and power requirements. However, the channel model and communication performance of long-distance inter-satellite laser transmission still require in-depth study. In this paper, we first develop a channel model for OISL communication within non-terrestrial networks (NTN) that accounts for pointing errors caused by satellite jitter and tracking noise. We derive the distributions of the channel state arising from these pointing errors and calculate its average value. Additionally, we determine the average achievable data rate for OISL communication in NTN and design a cooperative OISL system, highlighting a trade-off between concentrating beam energy and balancing misalignment. We calculate the minimum number of satellites required in cooperative OISLs to achieve a targeted data transmission size while adhering to latency constraints, which involves balancing the increased data rate of each link against the cumulative latency across all links. Finally, simulation results validate the proposed analytical model and provide insights into the optimal number of satellites needed for cooperative OISLs and the optimal laser frequency to use.
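
How pointing errors shrink the average channel can be illustrated with a widely used Gaussian-beam approximation, h_p = a0 · exp(−2r²/w²), where r is the radial beam offset at the receiver and w an equivalent beam width. Assuming independent zero-mean Gaussian jitter of standard deviation σ on both axes, r is Rayleigh distributed and averaging over its density gives E[h_p] = a0 · w²/(w² + 4σ²). This model, the parameter values, and the function names are assumptions for illustration; the paper's own channel model (with separate jitter and tracking-noise terms) may differ.

```python
import math
import random


def mean_pointing_gain_closed_form(a0, beam_width, jitter_std):
    """E[h_p] for h_p = a0 * exp(-2 r^2 / w^2) with a Rayleigh(jitter_std) radial offset r."""
    return a0 * beam_width ** 2 / (beam_width ** 2 + 4.0 * jitter_std ** 2)


def mean_pointing_gain_monte_carlo(a0, beam_width, jitter_std, trials=100_000, seed=7):
    """Empirical average of the same pointing-loss model."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        # Independent zero-mean Gaussian x/y offsets give a Rayleigh radial offset.
        r = math.hypot(rng.gauss(0.0, jitter_std), rng.gauss(0.0, jitter_std))
        total += a0 * math.exp(-2.0 * r * r / beam_width ** 2)
    return total / trials


if __name__ == "__main__":
    # Hypothetical values: a0 = 0.5, equivalent beam width 10 m, jitter std 2 m at the receiver.
    print(mean_pointing_gain_closed_form(0.5, 10.0, 2.0))
    print(mean_pointing_gain_monte_carlo(0.5, 10.0, 2.0))
```

The closed form makes the beam-energy trade-off visible: widening w makes the ratio w²/(w² + 4σ²) more tolerant of jitter, but in practice a wider beam also lowers a0, the fraction of power collected at perfect alignment.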

Spectrum Sharing in 6G Space-Ground Integrated Networks: A Ground Protection Zone-Based Design

Jan 06, 2025

Abstract: The space-ground integrated network (SGIN) has been envisioned as a competitive solution for large-scale, wide-coverage future wireless networks. By integrating the non-terrestrial network (NTN) and the terrestrial network (TN), an SGIN can provide high-speed, ubiquitous wireless access to users over predefined licensed spectrum. Given the scarcity of spectrum resources and the low spectrum efficiency of the SGIN, we enable the NTN and TN to share spectrum to improve overall system performance, measured by the weighted-sum area data rate (WS-ADR). However, mutual interference between NTN and TN is often inevitable and degrades SGIN performance. In this work, we consider a ground protection zone around the TN base stations, within which NTN users are allowed to use only the NTN-reserved spectrum, thereby mitigating mutual interference between NTN and TN. We analytically derive the coverage probability and area data rate (ADR) of the typical users and study the performance under various protection zone sizes and spectrum allocation parameter settings. Simulation and numerical results demonstrate that the WS-ADR can be maximized by selecting an appropriate protection zone radius and bandwidth allocation factor in the SGIN.
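
One ingredient of the protection-zone trade-off is how many NTN users the zone pushes onto the reserved band. A minimal sketch, assuming TN base stations form a planar PPP of density λ (per km²) and a zone of radius r_p around each: an NTN user is inside some zone exactly when at least one BS lies within r_p, which by the PPP void probability happens with probability 1 − exp(−λπr_p²). The PPP assumption, window size, and function names are hypothetical and not taken from the paper.

```python
import math
import random


def poisson(mean, rng):
    """Poisson variate: count unit-rate exponential arrivals that fall within [0, mean]."""
    count, t = 0, rng.expovariate(1.0)
    while t < mean:
        count += 1
        t += rng.expovariate(1.0)
    return count


def fraction_in_zone_closed_form(bs_density, zone_radius):
    """P(at least one TN BS within zone_radius of an NTN user) for a planar PPP of TN BSs."""
    return 1.0 - math.exp(-bs_density * math.pi * zone_radius ** 2)


def fraction_in_zone_monte_carlo(bs_density, zone_radius, window=5.0, trials=5000, seed=11):
    """Drop a PPP in a square window and test whether the user at the centre is in any zone."""
    if zone_radius > window / 2.0:
        raise ValueError("window must contain the zone around the user")
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        for _ in range(poisson(bs_density * window * window, rng)):
            x = (rng.random() - 0.5) * window
            y = (rng.random() - 0.5) * window
            if x * x + y * y <= zone_radius ** 2:
                hits += 1  # user restricted to the NTN-reserved band
                break
    return hits / trials


if __name__ == "__main__":
    print(fraction_in_zone_closed_form(1.0, 0.5), fraction_in_zone_monte_carlo(1.0, 0.5))
```

A larger radius protects TN users from NTN interference but confines more NTN users to the reserved share of the band, which is why an interior optimum for the radius and the bandwidth allocation factor is plausible.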

Advancing Multi-Connectivity in Satellite-Terrestrial Integrated Networks: Architectures, Challenges, and Applications

Nov 07, 2024

Abstract: Multi-connectivity (MC) in satellite-terrestrial integrated networks (STINs), which is included in 3GPP standards, is regarded as a promising technology for future networks. The significant advantages of MC in improving coverage, communication, and sensing through satellite-terrestrial collaboration have sparked widespread interest. In this article, we first introduce three fundamental deployment architectures of MC systems in STINs: multi-satellite, single-satellite single-base-station, and multi-satellite multi-base-station configurations. Considering that satellite networking is emerging but still evolving, we explore system design challenges such as satellite networking schemes, e.g., cell-free and multi-tier satellite networks. We then discuss key technical challenges that severely affect the quality of mutual communications, including beamforming, channel estimation, and synchronization. Furthermore, typical applications such as coverage enhancement, traffic offloading, collaborative sensing, and low-altitude communication are demonstrated, followed by a case study comparing coverage performance in MC and single-connectivity (SC) configurations. Finally, several essential future research directions for MC in STINs are presented to facilitate further exploration.
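
The intuition behind an MC-versus-SC coverage comparison can be sketched with independent Rayleigh-faded links and no interference: SC keeps the best link, while MC with ideal maximal-ratio combining (MRC) adds the instantaneous SNRs. This assumes perfect beamforming, channel estimation, and synchronization, exactly the challenges the article lists, so it is an optimistic bound rather than the article's case study; the function names and parameters are hypothetical.

```python
import math
import random


def coverage_sc_vs_mc(mean_snrs, threshold, trials=100_000, seed=5):
    """Coverage with single connectivity (best link) vs multi-connectivity (ideal MRC).

    mean_snrs: average linear SNR of each satellite/BS link; Rayleigh fading makes each
    instantaneous SNR exponential with that mean.
    """
    rng = random.Random(seed)
    covered_sc = covered_mc = 0
    for _ in range(trials):
        snrs = [rng.expovariate(1.0 / m) for m in mean_snrs]
        covered_sc += max(snrs) > threshold
        covered_mc += sum(snrs) > threshold  # MRC output SNR = sum of branch SNRs
    return covered_sc / trials, covered_mc / trials


def closed_form_two_equal_links(mean_snr, threshold):
    """Exact SC (selection) and MC (MRC, Gamma(2) SNR) coverage for two i.i.d. links."""
    p_one = math.exp(-threshold / mean_snr)
    return 1.0 - (1.0 - p_one) ** 2, p_one * (1.0 + threshold / mean_snr)


if __name__ == "__main__":
    print(coverage_sc_vs_mc([2.0, 2.0], threshold=2.0))
    print(closed_form_two_equal_links(2.0, 2.0))
```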

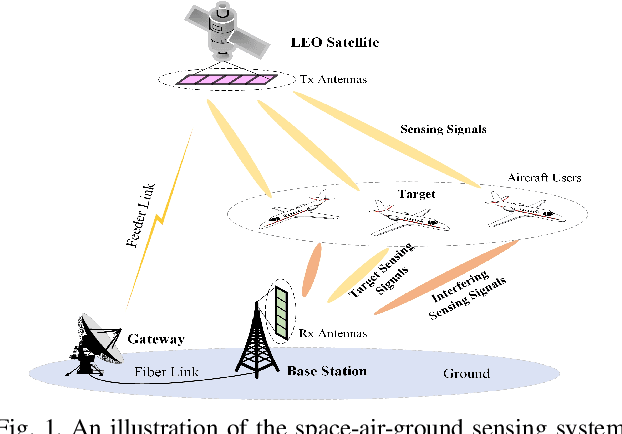

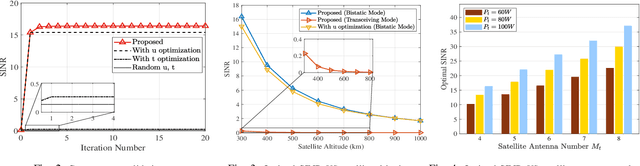

A Bistatic Sensing System in Space-Air-Ground Integrated Networks

Jul 04, 2024

Abstract: Sensing is expected to play a broader role in communication systems with the rapid growth of non-terrestrial networks (NTNs) in recent years. In this paper, we study a bistatic sensing system that maximizes the signal-to-interference-plus-noise ratio (SINR) from the target aircraft in the space-air-ground integrated network (SAGIN). We formulate a joint optimization problem for the transmit beamforming of a low-earth orbit (LEO) satellite and the receive filtering of a ground base station. To tackle this problem, we decompose the original problem into two sub-problems and solve them iteratively using alternating optimization. Using fractional programming and the generalized Rayleigh quotient, we obtain a closed-form solution for each sub-problem. Simulation results show that the proposed algorithm converges well. Moreover, the receive filter optimization dominates the overall optimization gain, especially at higher satellite altitudes, which provides valuable network design insights.
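
For a fixed satellite beamformer, the receive-filter sub-problem is a generalized Rayleigh quotient w^H A w / w^H B w. A minimal sketch, assuming the target's contribution is rank-one (A = a a^H) and B is the interference-plus-noise covariance: the maximizer is w = B^{-1} a, giving the maximum SINR a^H B^{-1} a. The random channels, the rank-one interference model, and all function names are hypothetical; the fractional-programming transmit step and the alternating loop are not shown. A small pure-Python solver keeps it dependency-free (`numpy.linalg.solve` would be the usual choice).

```python
import random


def solve(matrix, rhs):
    """Solve matrix @ x = rhs (small complex system) by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0j] * n
    for r in reversed(range(n)):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def rayleigh_quotient_sinr(w, a, b):
    """SINR = |w^H a|^2 / (w^H B w), a generalized Rayleigh quotient with A = a a^H."""
    signal = abs(sum(wi.conjugate() * ai for wi, ai in zip(w, a))) ** 2
    n = len(w)
    denom = sum(w[i].conjugate() * b[i][j] * w[j] for i in range(n) for j in range(n)).real
    return signal / denom


def max_sinr_filter(a, b):
    """Closed-form maximizer w = B^{-1} a (any nonzero scaling gives the same SINR)."""
    return solve(b, a)


def random_problem(n, interference_power=10.0, noise=0.1, seed=3):
    """Hypothetical target channel a and covariance B = p g g^H + noise * I."""
    rng = random.Random(seed)

    def crandn():
        return complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))

    a = [crandn() for _ in range(n)]
    g = [crandn() for _ in range(n)]
    b = [[interference_power * g[i] * g[j].conjugate() + (noise if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    return a, b


if __name__ == "__main__":
    a, b = random_problem(4)
    print("max-SINR filter:", rayleigh_quotient_sinr(max_sinr_filter(a, b), a, b))
    print("matched filter :", rayleigh_quotient_sinr(a, a, b))
```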

Coverage and Rate Analysis for Cell-Free LEO Satellite Networks

Nov 09, 2023

Abstract: Low-earth orbit (LEO) satellite communication is one of the key enabling technologies for next-generation (6G) networks. However, downlink communication supported by a single satellite may not meet users' needs because of limited signal strength, especially in emergency scenarios. In this letter, we investigate a cell-free (CF) LEO satellite (CFLS) network architecture from a system-level perspective, in which a user can be served by multiple satellites to improve its quality of service (QoS). Furthermore, we analyze the coverage and rate of a typical user in the CFLS network. Simulation and numerical results show that the CFLS network achieves a higher coverage probability than the traditional single-satellite network. Moreover, the user's ergodic rate is maximized by selecting an appropriate number of serving satellites.
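
A minimal sketch of why serving a user from several satellites raises coverage: if the K nearest visible satellites serve the user and their powers add non-coherently, each additional serving satellite moves power from the interference term to the signal term. The non-coherent combining, Rayleigh fading, uniform satellite placement, and the function name `cf_coverage` are assumptions; the letter's exact model may differ, and this sketch does not capture the ergodic-rate trade-off it reports.

```python
import math
import random

R_EARTH_KM = 6371.0


def cf_coverage(n_sats, altitude_km, k_values, sinr_threshold, noise, alpha=2.0, trials=3000, seed=2):
    """Monte Carlo CP when the user at the north pole is served by its K nearest visible satellites."""
    r = R_EARTH_KM + altitude_km
    p_visible = (1.0 - R_EARTH_KM / r) / 2.0  # area of cap above the horizon / sphere area
    rng = random.Random(seed)
    covered = {k: 0 for k in k_values}
    for _ in range(trials):
        links = []
        for _ in range(n_sats):
            if rng.random() >= p_visible:
                continue  # satellite below the user's horizon
            z = rng.uniform(R_EARTH_KM, r)  # uniform height <=> uniform area on the visible cap
            d = math.sqrt(r * r - z * z + (z - R_EARTH_KM) ** 2)
            links.append((d, rng.expovariate(1.0) * d ** (-alpha)))
        links.sort()  # nearest first
        total = sum(p for _, p in links)
        for k in k_values:
            signal = sum(p for _, p in links[:k])  # serving powers add non-coherently
            if signal > 0 and signal / (total - signal + noise) > sinr_threshold:
                covered[k] += 1
    return {k: c / trials for k, c in covered.items()}


if __name__ == "__main__":
    cps = cf_coverage(n_sats=500, altitude_km=550.0, k_values=[1, 2, 3, 4], sinr_threshold=1.0, noise=1e-9)
    for k, cp in cps.items():
        print(f"K = {k}: CP = {cp:.3f}")
```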

UAV Swarm-Enabled Aerial Reconfigurable Intelligent Surface

Mar 10, 2021

Abstract: Reconfigurable intelligent surfaces (RISs) offer tremendous spectrum and energy efficiency gains in wireless networks by adjusting the amplitudes and/or phases of passive reflecting elements to optimize signal reflection. Given the agility and mobility of unmanned aerial vehicles (UAVs), an RIS can be mounted on a UAV to enable three-dimensional signal reflection. Compared with the conventional terrestrial RIS (TRIS), the aerial RIS (ARIS) enjoys higher deployment flexibility, reliable air-to-ground links, and panoramic full-angle reflection. However, because of a UAV's limited payload and battery capacity, it is difficult for a single UAV to carry an RIS with a large number of reflecting elements, so the scalability of the aperture gain cannot be guaranteed. In practice, multiple UAVs can form a UAV swarm to realize the ARIS cooperatively. In this article, we first present an overview of the UAV swarm-enabled ARIS (SARIS), including its motivations and competitive advantages over TRIS and ARIS, as well as its new transformative applications in wireless networks. We then address the critical challenges of designing the SARIS, focusing on beamforming design, SARIS channel estimation, and SARIS deployment and movement. Next, the potential performance enhancement of SARIS is showcased and discussed with preliminary numerical results. Finally, open research opportunities are outlined.
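
The aperture-gain scaling that motivates the swarm can be shown with a passive RIS of N unit-amplitude elements: choosing each phase shift θ_n = −arg(h_n g_n) co-phases all reflected paths, so the received power gain |Σ h_n e^{jθ_n} g_n|² reaches N² for unit-magnitude channels. The sketch assumes the elements of all UAVs in the swarm can be phase-aligned as one aperture (perfect CSI and inter-UAV synchronization), no direct link, and hypothetical per-UAV element counts and function names.

```python
import cmath
import math
import random


def ris_received_gain(h_bs_ris, h_ris_user, phases):
    """|sum_n h_n * exp(j*theta_n) * g_n|^2 for a passive RIS with unit-amplitude elements."""
    return abs(sum(h * cmath.exp(1j * t) * g for h, g, t in zip(h_bs_ris, h_ris_user, phases))) ** 2


def aligned_phases(h_bs_ris, h_ris_user):
    """Phase shifts that co-phase every reflected path: theta_n = -arg(h_n * g_n)."""
    return [-cmath.phase(h * g) for h, g in zip(h_bs_ris, h_ris_user)]


def random_unit_channels(n, rng):
    """Hypothetical unit-magnitude channels with uniformly random phases."""
    return [cmath.exp(1j * rng.uniform(-math.pi, math.pi)) for _ in range(n)]


rng = random.Random(0)
elements_per_uav = 16  # hypothetical payload limit per UAV
for n_uavs in (1, 2, 4):
    n = n_uavs * elements_per_uav
    h, g = random_unit_channels(n, rng), random_unit_channels(n, rng)
    coherent = ris_received_gain(h, g, aligned_phases(h, g))
    unaligned = ris_received_gain(h, g, [0.0] * n)
    print(f"{n_uavs} UAV(s), N = {n}: aligned gain {coherent:.1f}, no phase control {unaligned:.1f}")
```

Doubling the swarm size doubles N and, under these idealized assumptions, quadruples the aligned gain, which is the scaling a single payload-limited UAV cannot provide.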
