Chao Ren

Towards Explainable Quantum AI: Informing the Encoder Selection of Quantum Neural Networks via Visualization

Dec 16, 2025

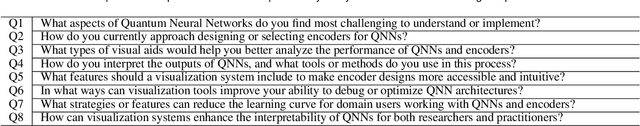

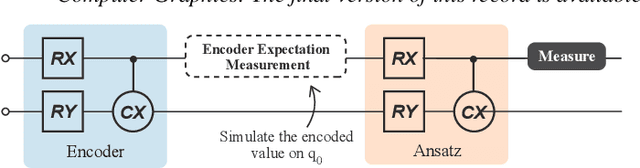

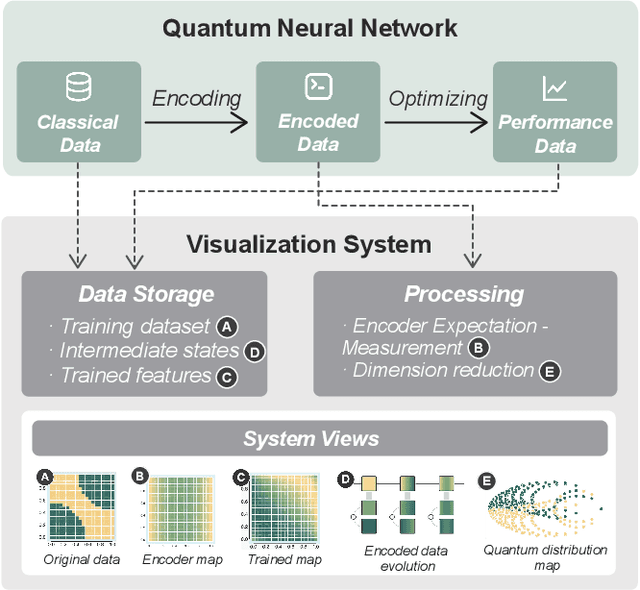

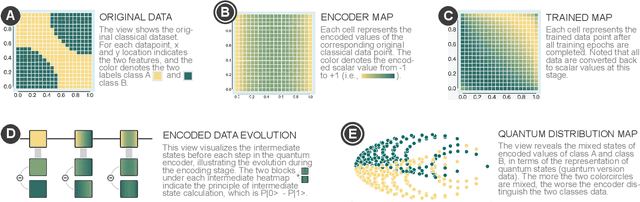

Abstract:Quantum Neural Networks (QNNs) represent a promising fusion of quantum computing and neural network architectures, offering speed-ups and efficient processing of high-dimensional, entangled data. A crucial component of QNNs is the encoder, which maps classical input data into quantum states. However, choosing suitable encoders remains a significant challenge, largely due to the lack of systematic guidance and the trial-and-error nature of current approaches. This process is further impeded by two key challenges: (1) the difficulty in evaluating encoded quantum states prior to training, and (2) the lack of intuitive methods for analyzing an encoder's ability to effectively distinguish data features. To address these issues, we introduce a novel visualization tool, XQAI-Eyes, which enables QNN developers to compare classical data features with their corresponding encoded quantum states and to examine the mixed quantum states across different classes. By bridging classical and quantum perspectives, XQAI-Eyes facilitates a deeper understanding of how encoders influence QNN performance. Evaluations across diverse datasets and encoder designs demonstrate XQAI-Eyes's potential to support the exploration of the relationship between encoder design and QNN effectiveness, offering a holistic and transparent approach to optimizing quantum encoders. Moreover, domain experts used XQAI-Eyes to derive two key practices for quantum encoder selection, grounded in the principles of pattern preservation and feature mapping.
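As a rough illustration of what comparing classical features with their encoded quantum states can mean, the sketch below uses angle encoding, a common encoder that is assumed here and is not necessarily one of the encoders evaluated in the paper. The function names `angle_encode` and `fidelity` are hypothetical, and the code does not reproduce XQAI-Eyes itself.

```python
import math

def angle_encode(features):
    """Encode each feature x as cos(x/2)|0> + sin(x/2)|1> and return the
    amplitude vector of the resulting product state (2**n real amplitudes).
    Angle encoding is an assumption made for illustration."""
    state = [1.0]
    for x in features:
        qubit = (math.cos(x / 2.0), math.sin(x / 2.0))
        # Tensor product of the state built so far with the new qubit.
        state = [amp * q for amp in state for q in qubit]
    return state

def fidelity(state_a, state_b):
    """Squared overlap of two real-amplitude pure states."""
    overlap = sum(a * b for a, b in zip(state_a, state_b))
    return overlap ** 2

# Two samples that are close in feature space and one that is far away.
sample_a = [0.30, 1.20]
sample_b = [0.35, 1.10]
sample_c = [2.90, 0.10]

print(fidelity(angle_encode(sample_a), angle_encode(sample_b)))
print(fidelity(angle_encode(sample_a), angle_encode(sample_c)))
```

Under this encoder the fidelity of two samples equals the product of cos²((xᵢ − yᵢ)/2) over the features, so nearby inputs map to nearly identical states. Inspecting such overlaps per class is one way to judge, before any training, whether an encoder keeps classes distinguishable.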

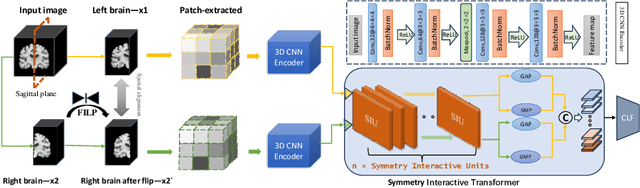

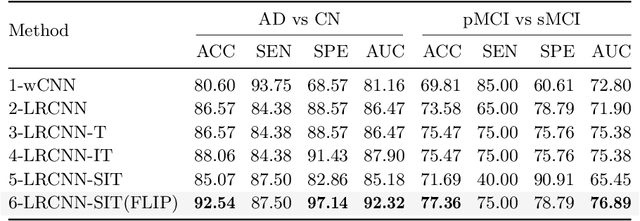

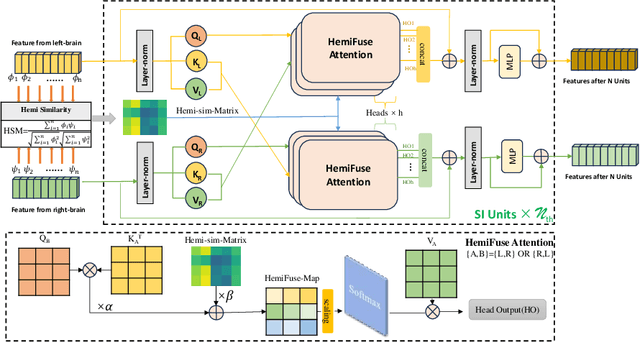

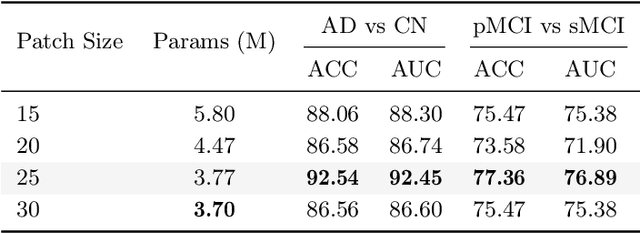

Symmetry Interactive Transformer with CNN Framework for Diagnosis of Alzheimer's Disease Using Structural MRI

Sep 10, 2025

Abstract:Structural magnetic resonance imaging (sMRI) combined with deep learning has achieved remarkable progress in the prediction and diagnosis of Alzheimer's disease (AD). Existing studies have used CNNs and transformers to build well-performing networks, but most of them rely on pretraining or ignore the asymmetry caused by brain disorders. We propose an end-to-end network for detecting the disease-induced asymmetry caused by left and right brain atrophy, which consists of a 3D CNN Encoder and a Symmetry Interactive Transformer (SIT). Following the inter-equal grid block fetch operation, the corresponding left and right hemisphere features are aligned and subsequently fed into the SIT for diagnostic analysis. SIT helps the model focus more on the regions of asymmetry caused by structural changes, thus improving diagnostic performance. We evaluated our method on the ADNI dataset, and the results show that it achieves better diagnostic accuracy (92.5%) than several CNN methods and CNNs combined with a general transformer. The visualization results show that our network pays more attention to regions of brain atrophy, especially the asymmetric pathological characteristics induced by AD, demonstrating the interpretability and effectiveness of the method.

Computation-resource-efficient Task-oriented Communications

Jul 10, 2025

Abstract:The rapid development of deep-learning-enabled task-oriented communications (TOC) significantly shifts the paradigm of wireless communications. However, the high computation demands, particularly in resource-constrained systems such as mobile phones and UAVs, make TOC challenging for many tasks. To address the problem, we propose a novel TOC method with two models: a static and a dynamic model. In the static model, we apply a neural network (NN) as a task-oriented encoder (TOE) when there is no computation budget constraint. The dynamic model is used when device computation resources are limited, and it uses dynamic NNs with multiple exits as the TOE. The dynamic model sorts input data by complexity with thresholds, allowing the efficient allocation of computation resources. Furthermore, we analyze the convergence of the proposed TOC methods and show that the model converges at rate $O\left(\frac{1}{\sqrt{T}}\right)$ with an epoch of length $T$. Experimental results demonstrate that the static model outperforms baseline models in terms of transmitted dimensions, floating-point operations (FLOPs), and accuracy simultaneously. The dynamic model can further improve accuracy and lower computational demand, providing an improved solution for resource-constrained systems.
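The multi-exit idea can be sketched as follows. This is a minimal illustration, not the paper's method: it assumes that input complexity is judged by the softmax confidence at each exit, and the names `early_exit_predict`, `shallow_exit`, and `deep_exit` are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def early_exit_predict(x, exits, thresholds):
    """Evaluate the exits in order and stop at the first one whose top
    softmax probability reaches its threshold. The last exit always answers.
    Returns (predicted_class, exit_index)."""
    if not exits or len(exits) != len(thresholds):
        raise ValueError("need one threshold per exit and at least one exit")
    last = len(exits) - 1
    for idx, (exit_fn, tau) in enumerate(zip(exits, thresholds)):
        probs = softmax(exit_fn(x))
        confidence = max(probs)
        if confidence >= tau or idx == last:
            return probs.index(confidence), idx

# Toy two-class exits (assumptions): a cheap one and a sharper, costlier one.
def shallow_exit(x):
    return [x, -x]

def deep_exit(x):
    return [5.0 * x, -5.0 * x]

exits = [shallow_exit, deep_exit]
thresholds = [0.9, 0.0]

print(early_exit_predict(3.0, exits, thresholds))   # easy input
print(early_exit_predict(0.2, exits, thresholds))   # harder input
```

Inputs that clear the first threshold never reach the deeper exit, which is how thresholds translate into saved computation on easy samples.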

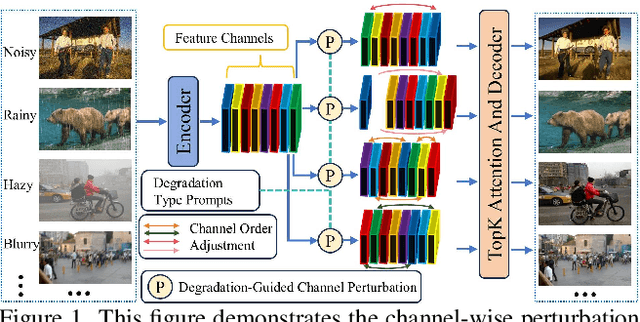

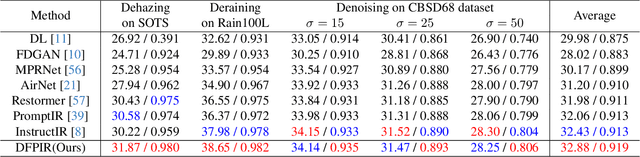

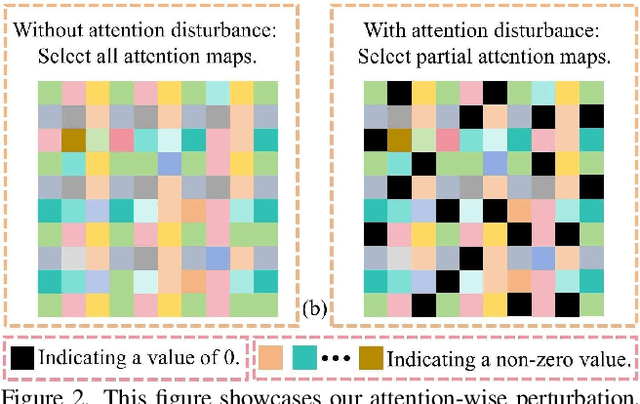

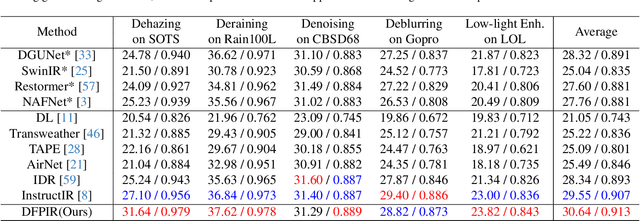

Degradation-Aware Feature Perturbation for All-in-One Image Restoration

May 19, 2025

Abstract:All-in-one image restoration aims to recover clear images from various degradation types and levels with a unified model. Nonetheless, the significant variations among degradation types present challenges for training a universal model, often resulting in task interference, where the gradient update directions of different tasks may diverge due to shared parameters. To address this issue, motivated by the routing strategy, we propose DFPIR, a novel all-in-one image restorer that introduces Degradation-aware Feature Perturbations (DFP) to adjust the feature space to align with the unified parameter space. In this paper, the feature perturbations primarily include channel-wise perturbations and attention-wise perturbations. Specifically, channel-wise perturbations are implemented by shuffling the channels in high-dimensional space guided by degradation types, while attention-wise perturbations are achieved through selective masking in the attention space. To achieve these goals, we propose a Degradation-Guided Perturbation Block (DGPB) to implement these two functions, positioned between the encoding and decoding stages of the encoder-decoder architecture. Extensive experimental results demonstrate that DFPIR achieves state-of-the-art performance on several all-in-one image restoration tasks including image denoising, image dehazing, image deraining, motion deblurring, and low-light image enhancement. Our code is available at https://github.com/TxpHome/DFPIR.
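A degradation-guided channel shuffle could look roughly like the sketch below. The abstract does not say how the degradation type determines the shuffle, so the fixed seed per degradation label is purely an assumption, and `DEGRADATION_SEEDS`, `channel_permutation`, and `perturb_channels` are hypothetical names that do not come from the DFPIR repository.

```python
import random

# Hypothetical mapping from degradation label to a fixed seed (assumption).
DEGRADATION_SEEDS = {"noise": 0, "haze": 1, "rain": 2, "blur": 3, "low_light": 4}

def channel_permutation(num_channels, degradation):
    """Return a permutation of channel indices that depends only on the
    degradation label, so every image of that type is shuffled the same way."""
    rng = random.Random(DEGRADATION_SEEDS[degradation])
    perm = list(range(num_channels))
    rng.shuffle(perm)
    return perm

def perturb_channels(feature_map, degradation):
    """feature_map is a list of channels (each channel any Python object,
    here a flat list of values). Returns the channels in shuffled order."""
    perm = channel_permutation(len(feature_map), degradation)
    return [feature_map[i] for i in perm]

# Toy feature map with 8 channels of 4 values each.
features = [[float(c)] * 4 for c in range(8)]
print(channel_permutation(8, "rain"))
print(channel_permutation(8, "haze"))
```

Because the permutation is a function of the degradation label alone, the shared decoder weights see a consistently rearranged feature space for each task, which is the intuition behind adjusting features instead of parameters.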

Heterogeneity-Aware Client Sampling: A Unified Solution for Consistent Federated Learning

May 16, 2025

Abstract:Federated learning (FL) commonly involves clients with diverse communication and computational capabilities. Such heterogeneity can significantly distort the optimization dynamics and lead to objective inconsistency, where the global model converges to an incorrect stationary point potentially far from the pursued optimum. Despite its critical impact, the joint effect of communication and computation heterogeneity has remained largely unexplored, due to the intrinsic complexity of their interaction. In this paper, we reveal the fundamentally distinct mechanisms through which heterogeneous communication and computation drive inconsistency in FL. To the best of our knowledge, this is the first unified theoretical analysis of general heterogeneous FL, offering a principled understanding of how these two forms of heterogeneity jointly distort the optimization trajectory under arbitrary choices of local solvers. Motivated by these insights, we propose Federated Heterogeneity-Aware Client Sampling, FedACS, a universal method to eliminate all types of objective inconsistency. We theoretically prove that FedACS converges to the correct optimum at a rate of $O(1/\sqrt{R})$, even in dynamic heterogeneous environments. Extensive experiments across multiple datasets show that FedACS outperforms state-of-the-art and category-specific baselines by 4.3%-36%, while reducing communication costs by 22%-89% and computation loads by 14%-105%, respectively.

NTIRE 2025 Challenge on Day and Night Raindrop Removal for Dual-Focused Images: Methods and Results

Apr 19, 2025

Abstract:This paper reviews the NTIRE 2025 Challenge on Day and Night Raindrop Removal for Dual-Focused Images. This challenge received a wide range of impressive solutions, which were developed and evaluated using our collected real-world Raindrop Clarity dataset. Unlike existing deraining datasets, our Raindrop Clarity dataset is more diverse and challenging in degradation types and contents, and includes day raindrop-focused, day background-focused, night raindrop-focused, and night background-focused degradations. This dataset is divided into three subsets for competition: 14,139 images for training, 240 images for validation, and 731 images for testing. The primary objective of this challenge is to establish a new and powerful benchmark for the task of removing raindrops under varying lighting and focus conditions. The competition had a total of 361 participants, and 32 teams submitted valid solutions and fact sheets for the final testing phase. These submissions achieved state-of-the-art (SOTA) performance on the Raindrop Clarity dataset. The project can be found at https://lixinustc.github.io/CVPR-NTIRE2025-RainDrop-Competition.github.io/.

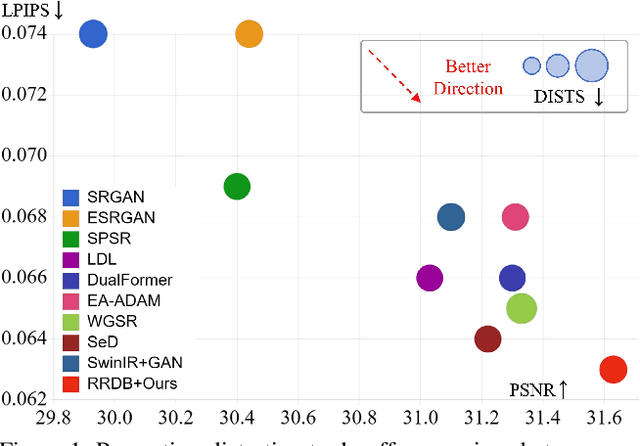

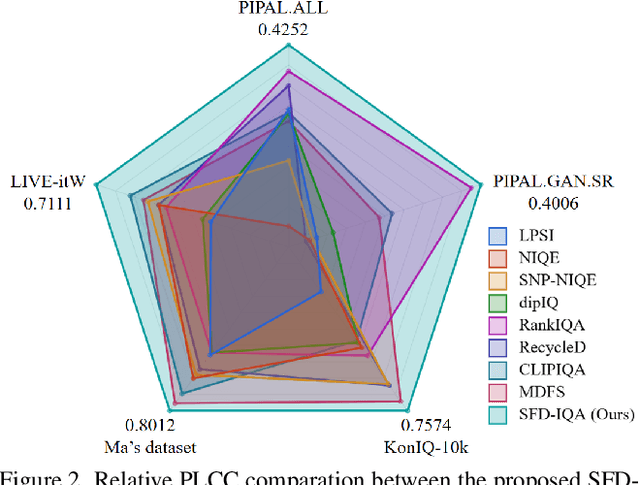

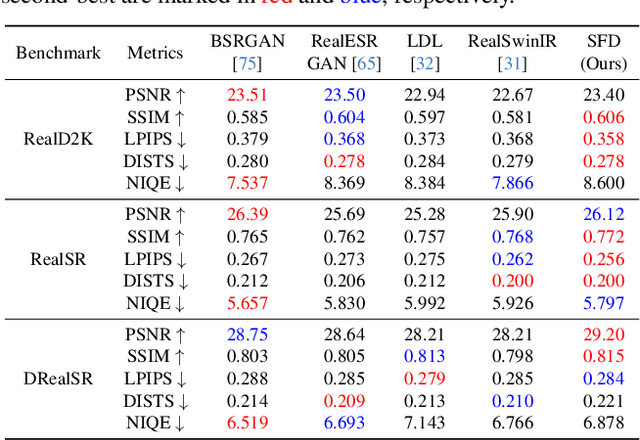

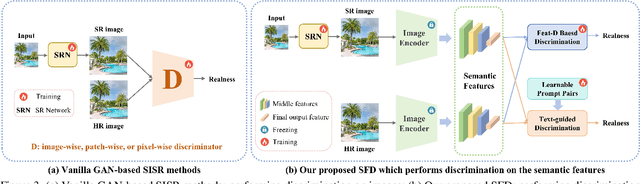

Exploring Semantic Feature Discrimination for Perceptual Image Super-Resolution and Opinion-Unaware No-Reference Image Quality Assessment

Mar 25, 2025

Abstract:Generative Adversarial Networks (GANs) have been widely applied to image super-resolution (SR) to enhance perceptual quality. However, most existing GAN-based SR methods perform coarse-grained discrimination directly on images and ignore the semantic information of images, making it challenging for super-resolution networks (SRNs) to learn fine-grained and semantic-related texture details. To alleviate this issue, we propose a semantic feature discrimination method, SFD, for perceptual SR. Specifically, we first design a feature discriminator (Feat-D) to discriminate the pixel-wise middle semantic features from CLIP, aligning the feature distributions of SR images with those of high-quality images. Additionally, we propose a text-guided discrimination method (TG-D) by introducing learnable prompt pairs (LPP) in an adversarial manner to perform discrimination on the more abstract output feature of CLIP, further enhancing the discriminative ability of our method. With both Feat-D and TG-D, our SFD can effectively distinguish between the semantic feature distributions of low-quality and high-quality images, encouraging the SRN to generate more realistic and semantic-relevant textures. Furthermore, based on the trained Feat-D and LPP, we propose a novel opinion-unaware no-reference image quality assessment (OU NR-IQA) method, SFD-IQA, greatly improving OU NR-IQA performance without any additional targeted training. Extensive experiments on classical SISR, real-world SISR, and OU NR-IQA tasks demonstrate the effectiveness of our proposed methods.

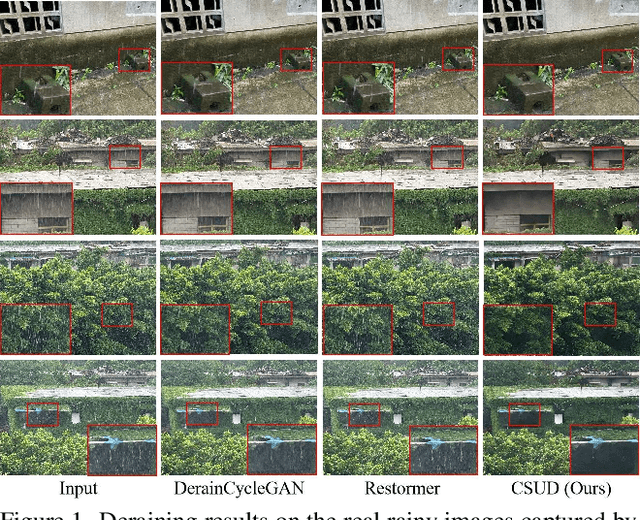

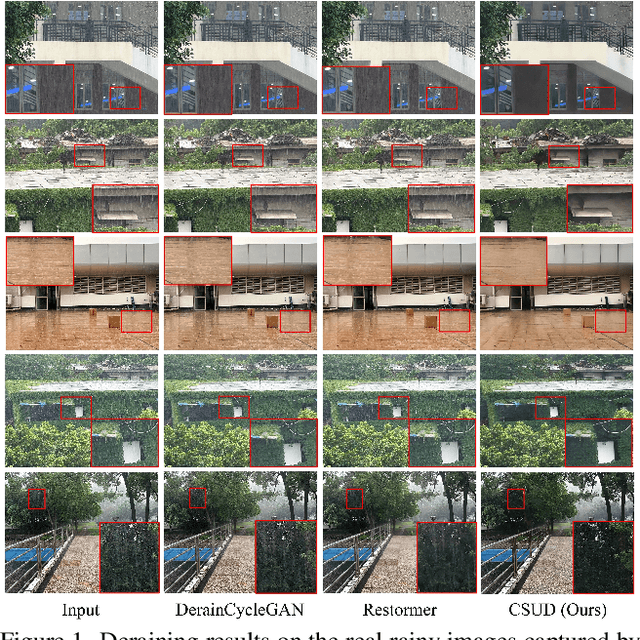

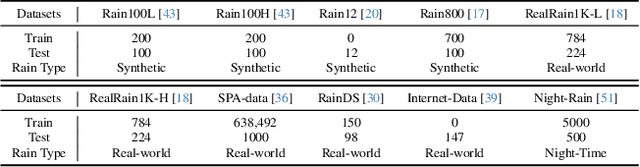

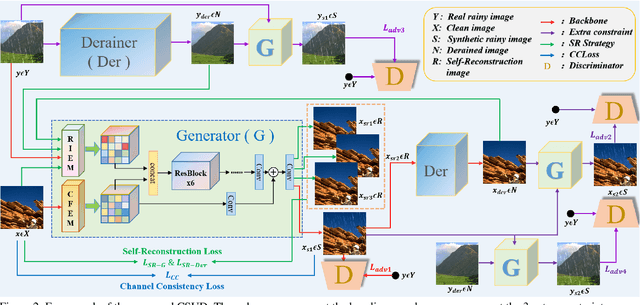

Channel Consistency Prior and Self-Reconstruction Strategy Based Unsupervised Image Deraining

Mar 24, 2025

Abstract:Recently, deep image deraining models based on paired datasets have made remarkable progress. However, they cannot be applied well in real-world settings due to the difficulty of obtaining real paired datasets and their poor generalization performance. In this paper, we propose a novel Channel Consistency Prior and Self-Reconstruction Strategy Based Unsupervised Image Deraining framework, CSUD, to tackle the aforementioned challenges. During training with unpaired data, CSUD is capable of generating high-quality pseudo clean and rainy image pairs, which are used to enhance the performance of the deraining network. Specifically, to preserve more image background details while transferring rain streaks from rainy images to the unpaired clean images, we propose a novel Channel Consistency Loss (CCLoss) by introducing the Channel Consistency Prior (CCP) of rain streaks into the training process, thereby ensuring that the generated pseudo rainy images closely resemble the real ones. Furthermore, we propose a novel Self-Reconstruction (SR) strategy to alleviate the redundant information transfer problem of the generator, further improving the deraining performance and the generalization capability of our method. Extensive experiments on multiple synthetic and real-world datasets demonstrate that the deraining performance of CSUD surpasses other state-of-the-art unsupervised methods and that CSUD exhibits superior generalization capability.

Coverage and Spectral Efficiency of NOMA-Enabled LEO Satellite Networks with Ordering Schemes

Jan 10, 2025

Abstract:This paper investigates an analytical model for low-earth orbit (LEO) multi-satellite downlink non-orthogonal multiple access (NOMA) networks. The satellites transmit data to multiple NOMA user terminals (UTs), each employing successive interference cancellation (SIC) for decoding. Two ordering schemes are adopted for NOMA-enabled LEO satellite networks, i.e., mean signal power (MSP)-based ordering and instantaneous-signal-to-inter-satellite-interference-plus-noise ratio (ISINR)-based ordering. For each ordering scheme, we derive the coverage probabilities of UTs under different channel conditions. Moreover, we discuss how coverage is influenced by SIC, main-lobe gain, and tradeoffs between the number of satellites and their altitudes. Additionally, two user fairness-based power allocation (PA) schemes are considered, and PA coefficients with the optimal number of UTs that maximize their sum spectral efficiency (SE) are studied. Simulation results show that there exists a maximum signal-to-inter-satellite-interference-plus-noise ratio (SINR) threshold for each PA scheme that ensures the operation of NOMA in LEO satellite networks, and the benefit of NOMA only exists when the target SINR is below a certain threshold. Compared with orthogonal multiple access (OMA), NOMA increases UTs' sum SE by as much as 35%. Furthermore, for most SINR thresholds, the sum SE increases with the number of UTs to the highest value, whilst the maximum sum SE is obtained when there are two UTs.
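To make the NOMA versus OMA comparison concrete, the sketch below computes the sum SE for the textbook two-user downlink case with perfect SIC. It is a simplified illustration under stated assumptions: inter-satellite interference, fading, and the paper's ordering and PA schemes are all ignored, and the function names and numeric values are hypothetical.

```python
import math

def noma_sum_se(rho, g_near, g_far, a_near):
    """Sum spectral efficiency (bit/s/Hz) of two-user downlink NOMA.
    rho: transmit SNR (linear), g_near >= g_far: channel power gains,
    a_near: power fraction of the near user (the far user gets 1 - a_near).
    Assumes perfect SIC at the near user and no inter-satellite interference."""
    a_far = 1.0 - a_near
    # The far user decodes its own signal and treats the near user's as noise.
    se_far = math.log2(1.0 + a_far * rho * g_far / (a_near * rho * g_far + 1.0))
    # The near user removes the far user's signal via SIC, then decodes its own.
    se_near = math.log2(1.0 + a_near * rho * g_near)
    return se_near + se_far

def oma_sum_se(rho, g_near, g_far):
    """Sum SE when the two users split the resource equally (OMA baseline)."""
    return 0.5 * math.log2(1.0 + rho * g_near) + 0.5 * math.log2(1.0 + rho * g_far)

# Illustrative values only (assumptions, not taken from the paper).
rho, g_near, g_far, a_near = 10.0, 10.0, 1.0, 0.2
print(noma_sum_se(rho, g_near, g_far, a_near))
print(oma_sum_se(rho, g_near, g_far))
```

With these example values NOMA yields the larger sum SE. The size of the gain depends strongly on the channel gap and the power split, which is why the PA coefficients are studied as a design variable.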

Spectrum Sharing in Satellite-Terrestrial Integrated Networks: Frameworks, Approaches, and Opportunities

Jan 06, 2025

Abstract:To accommodate the increasing communication needs in non-terrestrial networks (NTNs), wireless users in remote areas may require access to more spectrum than is currently allocated. Terrestrial networks (TNs), such as cellular networks, are deployed in specific areas, but many underused licensed spectrum bands remain in remote areas. Therefore, bringing NTNs to a shared spectrum with TNs can improve network capacity under reasonable interference management. However, in satellite-terrestrial integrated networks (STINs), the comprehensive coverage of a satellite and the unbalanced communication resources of STINs make it challenging to effectively manage mutual interference between NTN and TN. This article presents the fundamentals and prospects of spectrum sharing (SS) in STINs by introducing four SS frameworks, their potential application scenarios, and technical challenges. Furthermore, advanced SS approaches related to interference management in STINs and performance metrics of SS in STINs are introduced. Moreover, a preliminary performance evaluation showcases the potential for sharing the spectrum between NTN and TN. Finally, future research opportunities for SS in STINs are discussed.
