Jian Huang

DARE: Aligning LLM Agents with the R Statistical Ecosystem via Distribution-Aware Retrieval

Mar 05, 2026

Abstract:Large Language Model (LLM) agents can automate data-science workflows, but many rigorous statistical methods implemented in R remain underused because LLMs struggle with statistical knowledge and tool retrieval. Existing retrieval-augmented approaches focus on function-level semantics and ignore data distribution, producing suboptimal matches. We propose DARE (Distribution-Aware Retrieval Embedding), a lightweight, plug-and-play retrieval model that incorporates data distribution information into function representations for R package retrieval. Our main contributions are: (i) RPKB, a curated R Package Knowledge Base derived from 8,191 high-quality CRAN packages; (ii) DARE, an embedding model that fuses distributional features with function metadata to improve retrieval relevance; and (iii) RCodingAgent, an R-oriented LLM agent for reliable R code generation and a suite of statistical analysis tasks for systematically evaluating LLM agents in realistic analytical scenarios. Empirically, DARE achieves an NDCG@10 of 93.47%, outperforming state-of-the-art open-source embedding models by up to 17% on package retrieval while using substantially fewer parameters. Integrating DARE into RCodingAgent yields significant gains on downstream analysis tasks. This work helps narrow the gap between LLM automation and the mature R statistical ecosystem.
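For readers unfamiliar with the retrieval metric quoted in this abstract, the following is a minimal sketch of how NDCG at rank 10 is commonly computed. It is not taken from the paper: the function names are hypothetical, the linear-gain form of DCG is assumed, and the ideal ranking is derived from the same list of relevance grades, which assumes that list contains every relevant item.

```python
import math


def dcg_at_k(relevances, k=10):
    """Discounted cumulative gain over the top-k relevance grades (linear gain)."""
    # Rank 0 is the top result; its discount is log2(2) = 1.
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))


def ndcg_at_k(ranked_relevances, k=10):
    """NDCG@k: DCG of the given ranking divided by DCG of the ideal ranking.

    Assumption: `ranked_relevances` holds the grade of every candidate item,
    so sorting it in descending order yields the ideal ranking.
    """
    ideal_dcg = dcg_at_k(sorted(ranked_relevances, reverse=True), k)
    if ideal_dcg == 0:
        return 0.0  # no relevant items at all
    return dcg_at_k(ranked_relevances, k) / ideal_dcg


if __name__ == "__main__":
    # Hypothetical query: the single relevant package is ranked second.
    print(ndcg_at_k([0, 1, 0]))
```

A score of 1.0 means the most relevant items are ranked first; placing a relevant item lower reduces the score through the logarithmic discount.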

SpecAttn: Co-Designing Sparse Attention with Self-Speculative Decoding

Feb 06, 2026

Abstract:Long-context large language model (LLM) inference has become the norm for today's AI applications. However, it is severely bottlenecked by the increasing memory demands of its KV cache. Previous works have shown that self-speculative decoding with sparse attention, where tokens are drafted using a subset of the KV cache and verified in parallel with full KV cache, speeds up inference in a lossless way. However, this approach relies on standalone KV selection algorithms to select the KV entries used for drafting and overlooks that the criticality of each KV entry is inherently computed during verification. In this paper, we propose SpecAttn, a self-speculative decoding method with verification-guided sparse attention. SpecAttn identifies critical KV entries as a byproduct of verification and only loads these entries when drafting subsequent tokens. This not only improves draft token acceptance rate but also incurs low KV selection overhead, thereby improving decoding throughput. SpecAttn achieves 2.81$\times$ higher throughput over vanilla auto-regressive decoding and 1.29$\times$ improvement over state-of-the-art sparsity-based self-speculative decoding methods.
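The draft-then-verify loop that this abstract builds on can be illustrated with a small greedy-decoding sketch. This is a generic illustration under stated assumptions, not SpecAttn itself: `full_next` and `draft_next` are hypothetical callables standing in for the model with full and sparse KV cache, the verification-guided KV selection is not modeled, verification is written as a sequential loop although it runs in parallel in practice, and the usual bonus token after a fully accepted draft is omitted for brevity.

```python
def speculative_decode(full_next, draft_next, prompt, num_new, draft_len=4):
    """Greedy draft-and-verify decoding (illustrative sketch).

    full_next / draft_next: hypothetical functions mapping a token list to the
    next token, standing in for the full-KV and sparse-KV passes of one model.
    """
    tokens = list(prompt)
    target = len(prompt) + num_new
    while len(tokens) < target:
        # Draft phase: the cheap model proposes a short continuation.
        draft, ctx = [], list(tokens)
        for _ in range(min(draft_len, target - len(tokens))):
            tok = draft_next(ctx)
            draft.append(tok)
            ctx.append(tok)
        # Verify phase: keep the full model's token at each position and stop
        # at the first position where the draft disagrees.
        accepted, ctx = [], list(tokens)
        for tok in draft:
            correct = full_next(ctx)
            accepted.append(correct)
            ctx.append(correct)
            if correct != tok:
                break
        tokens.extend(accepted)
    return tokens


def greedy_decode(full_next, prompt, num_new):
    """Plain auto-regressive greedy decoding, used as the reference output."""
    tokens = list(prompt)
    for _ in range(num_new):
        tokens.append(full_next(tokens))
    return tokens


# Toy stand-ins (assumptions for illustration): the draft sees only the last two tokens.
def toy_full(ctx):
    return (sum(ctx) * 31 + len(ctx)) % 7


def toy_draft(ctx):
    return (sum(ctx[-2:]) * 31 + len(ctx)) % 7
```

Because every emitted token is the one the full model would have chosen, the output matches plain greedy decoding exactly; the speed-up in practice comes from how many drafted tokens are accepted per verification pass.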

DSAEval: Evaluating Data Science Agents on a Wide Range of Real-World Data Science Problems

Jan 20, 2026

Abstract:Recent LLM-based data agents aim to automate data science tasks ranging from data analysis to deep learning. However, the open-ended nature of real-world data science problems, which often span multiple taxonomies and lack standard answers, poses a significant challenge for evaluation. To address this, we introduce DSAEval, a benchmark comprising 641 real-world data science problems grounded in 285 diverse datasets, covering both structured and unstructured data (e.g., vision and text). DSAEval incorporates three distinctive features: (1) Multimodal Environment Perception, which enables agents to interpret observations from multiple modalities including text and vision; (2) Multi-Query Interactions, which mirror the iterative and cumulative nature of real-world data science projects; and (3) Multi-Dimensional Evaluation, which provides a holistic assessment across reasoning, code, and results. We systematically evaluate 11 advanced agentic LLMs using DSAEval. Our results show that Claude-Sonnet-4.5 achieves the strongest overall performance, GPT-5.2 is the most efficient, and MiMo-V2-Flash is the most cost-effective. We further demonstrate that multimodal perception consistently improves performance on vision-related tasks, with gains ranging from 2.04% to 11.30%. Overall, while current data science agents perform well on structured data and routine data analysis workflows, substantial challenges remain in unstructured domains. Finally, we offer critical insights and outline future research directions to advance the development of data science agents.

On Conditional Stochastic Interpolation for Generative Nonlinear Sufficient Dimension Reduction

Dec 22, 2025

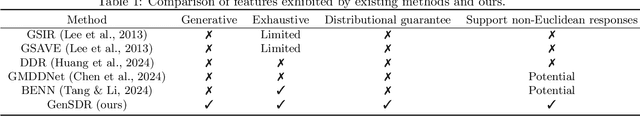

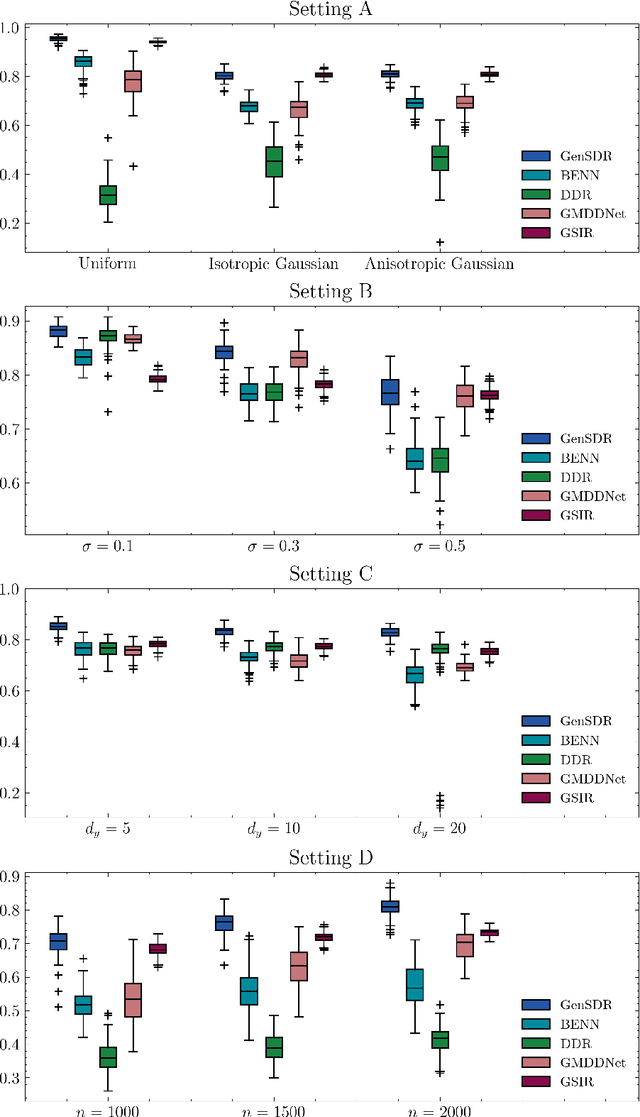

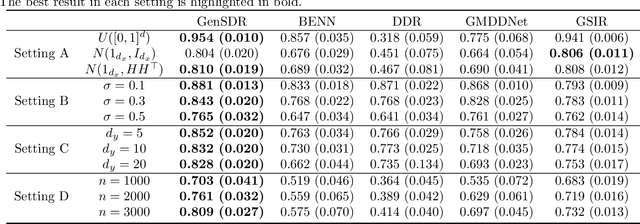

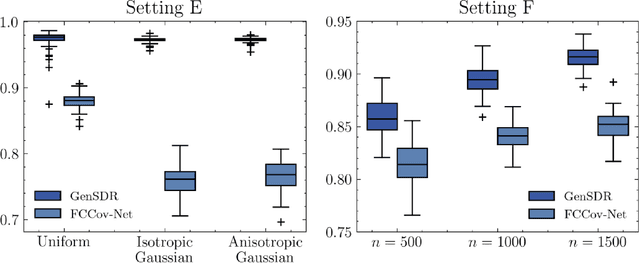

Abstract:Identifying low-dimensional sufficient structures in nonlinear sufficient dimension reduction (SDR) has long been a fundamental yet challenging problem. Most existing methods lack theoretical guarantees of exhaustiveness in identifying lower dimensional structures, either at the population level or at the sample level. We tackle this issue by proposing a new method, generative sufficient dimension reduction (GenSDR), which leverages modern generative models. We show that GenSDR is able to fully recover the information contained in the central $σ$-field at both the population and sample levels. In particular, at the sample level, we establish a consistency property for the GenSDR estimator from the perspective of conditional distributions, capitalizing on the distributional learning capabilities of deep generative models. Moreover, by incorporating an ensemble technique, we extend GenSDR to accommodate scenarios with non-Euclidean responses, thereby substantially broadening its applicability. Extensive numerical results demonstrate the outstanding empirical performance of GenSDR and highlight its strong potential for addressing a wide range of complex, real-world tasks.

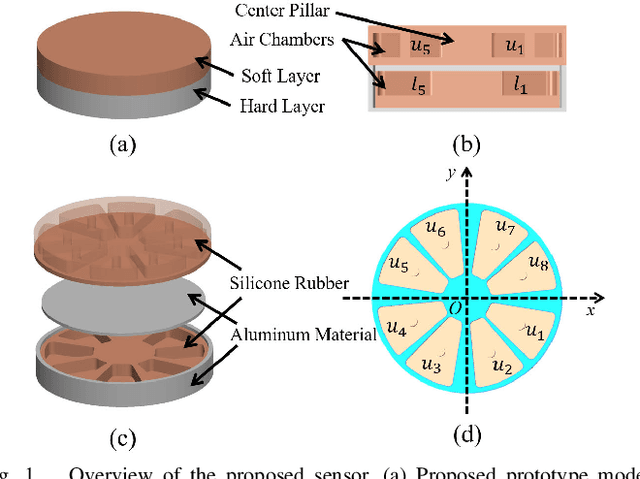

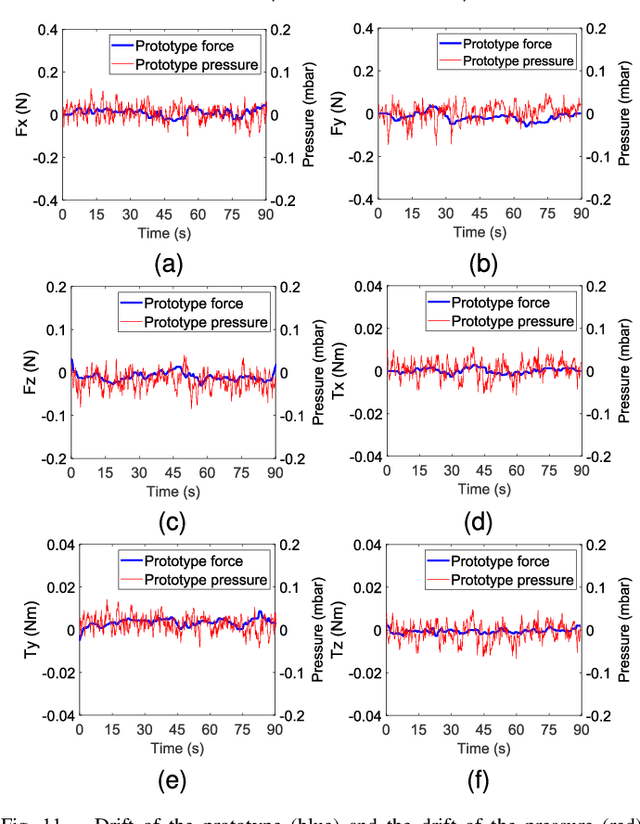

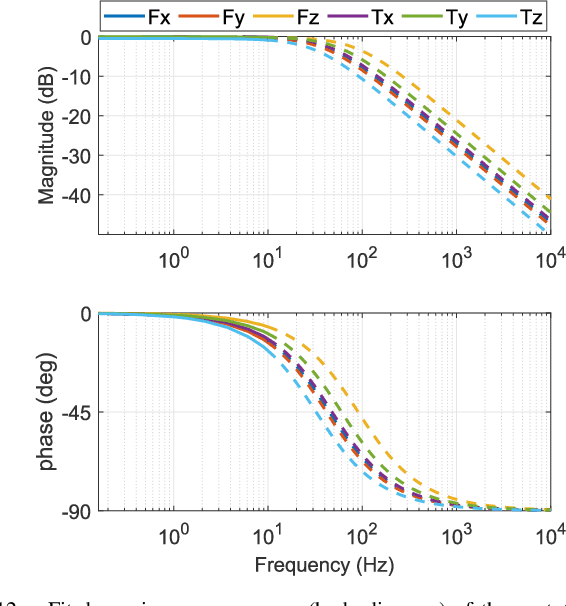

Air-Chamber Based Soft Six-Axis Force/Torque Sensor for Human-Robot Interaction

Nov 17, 2025

Abstract:Soft multi-axis force/torque sensors provide safe and precise force interaction. Capturing all degrees of freedom of force is imperative for accurate force measurement with six-axis force/torque sensors. However, cross-axis coupling can lead to calibration issues and decreased accuracy, which makes developing a soft and accurate six-axis sensor a challenging task. In this paper, a soft air-chamber type six-axis force/torque sensor with 16-channel barometers is introduced; the barometers are housed in hyper-elastic air chambers made of silicone rubber. Additionally, an effective decoupling method is proposed, based on a rigid-soft hierarchical structure, which reduces the six-axis decoupling problem to two three-axis decoupling problems. Finite element model simulation and experiments demonstrate the compatibility of the proposed approach with reality. The prototype's sensing performance is quantitatively measured in terms of static load response, dynamic load response and dynamic response characteristic. It possesses a measuring range of 50 N force and 1 Nm torque, and the average deviation, repeatability, non-linearity and hysteresis are 4.9$\%$, 2.7$\%$, 5.8$\%$ and 6.7$\%$, respectively. The results indicate that the prototype exhibits satisfactory sensing performance while maintaining its softness due to the presence of soft air chambers.

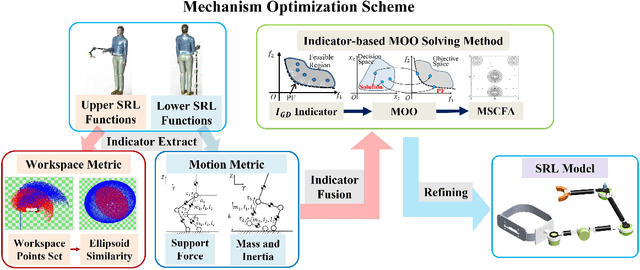

Innovative Design of Multi-functional Supernumerary Robotic Limbs with Ellipsoid Workspace Optimization

Nov 15, 2025

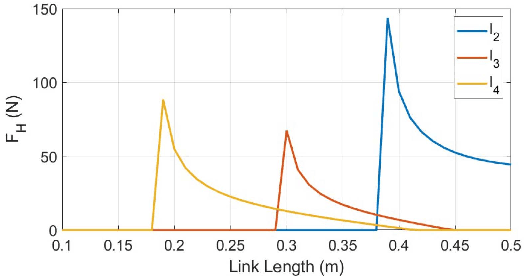

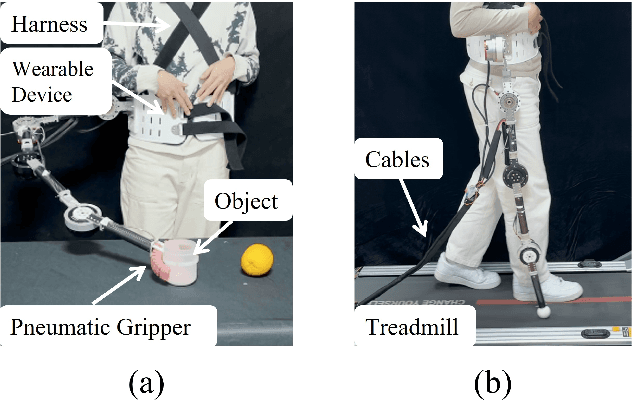

Abstract:Supernumerary robotic limbs (SRLs) offer substantial potential in both the rehabilitation of hemiplegic patients and the enhancement of functional capabilities for healthy individuals. Designing a general-purpose SRL device is inherently challenging, particularly when developing a unified theoretical framework that meets the diverse functional requirements of both upper and lower limbs. In this paper, we propose a multi-objective optimization (MOO) design theory that integrates grasping workspace similarity, walking workspace similarity, braced force for sit-to-stand (STS) movements, and overall mass and inertia. A geometric vector quantification method is developed using an ellipsoid to represent the workspace, aiming to reduce computational complexity and address quantification challenges. The ellipsoid envelope transforms workspace points into ellipsoid attributes, providing a parametric description of the workspace. Furthermore, the STS static braced force assesses the effectiveness of force transmission, and the overall mass and inertia restrict excessive link length. To facilitate rapid and stable convergence of the model to high-dimensional irregular Pareto fronts, we introduce a multi-subpopulation correction firefly algorithm. This algorithm incorporates a strategy involving attractive and repulsive domains to effectively handle the MOO task. The optimized solution is utilized to redesign the prototype for experimentation to meet specified requirements. Six healthy participants and two hemiplegic patients participated in real experiments. Compared to the pre-optimization results, the average grasp success rate improved by 7.2%, while the muscle activity during walking and STS tasks decreased by an average of 12.7% and 25.1%, respectively. The proposed design theory offers an efficient option for the design of multi-functional SRL mechanisms.

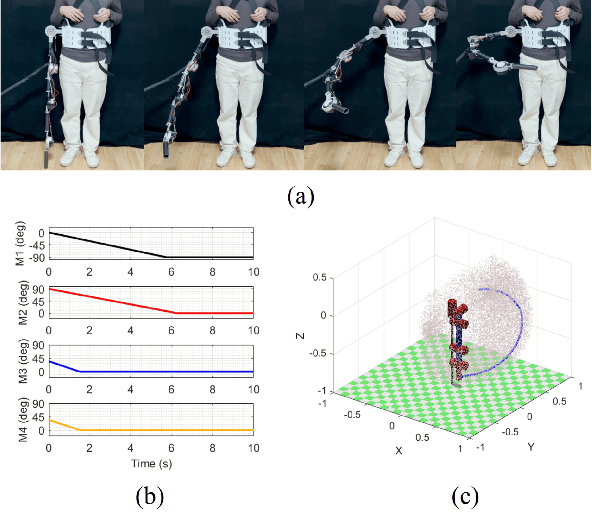

Variable Impedance Control for Floating-Base Supernumerary Robotic Leg in Walking Assistance

Nov 15, 2025

Abstract:In human-robot systems, ensuring safety during force control in the presence of both internal and external disturbances is crucial. As a typical loosely coupled floating-base robot system, the supernumerary robotic leg (SRL) system is particularly susceptible to strong internal disturbances. To address the challenge posed by the floating base, we investigated the dynamics model of the loosely coupled SRL and designed a hybrid position/force impedance controller to fit dynamic torque input. An efficient variable impedance control (VIC) method is developed to enhance human-robot interaction, particularly in scenarios involving external force disturbances. By dynamically adjusting impedance parameters, VIC improves the dynamic switching between rigidity and flexibility, so that it can adapt to unknown environmental disturbances in different states. An efficient, real-time, stability-guaranteed network for generating impedance parameters is specifically designed for the proposed SRL, to achieve shock mitigation and high-rigidity support. Simulations and experiments validate the system's effectiveness, demonstrating its ability to maintain smooth signal transitions in flexible states while providing strong support forces in rigid states. This approach provides a practical solution for accommodating individual gait variations in interaction, and significantly advances the safety and adaptability of human-robot systems.

Elk: Exploring the Efficiency of Inter-core Connected AI Chips with Deep Learning Compiler Techniques

Jul 15, 2025

Abstract:To meet the increasing demand of deep learning (DL) models, AI chips are employing both off-chip memory (e.g., HBM) and high-bandwidth low-latency interconnect for direct inter-core data exchange. However, it is not easy to explore the efficiency of these inter-core connected AI (ICCA) chips, due to a fundamental tussle among compute (per-core execution), communication (inter-core data exchange), and I/O (off-chip data access). In this paper, we develop Elk, a DL compiler framework to maximize the efficiency of ICCA chips by jointly trading off all three performance factors discussed above. Elk structures these performance factors into configurable parameters and forms a global trade-off space in the DL compiler. To systematically explore this space and maximize overall efficiency, Elk employs a new inductive operator scheduling policy and a cost-aware on-chip memory allocation algorithm. It generates globally optimized execution plans that best overlap off-chip data loading and on-chip execution. To examine the efficiency of Elk, we build a full-fledged emulator based on a real ICCA chip, IPU-POD4, and an ICCA chip simulator for sensitivity analysis with different interconnect network topologies. Elk achieves 94% of the ideal roofline performance of ICCA chips on average, showing the benefits of supporting large DL models on ICCA chips. We also show Elk's capability of enabling architecture design space exploration for new ICCA chip development.

* This paper is accepted at the 58th IEEE/ACM International Symposium on Microarchitecture (MICRO'25)
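As context for the "ideal roofline performance" figure in this abstract, the classic roofline bound can be sketched as follows. This is the textbook single-memory-level model with hypothetical function names and made-up numbers; the paper's roofline for ICCA chips may account for inter-core communication differently.

```python
def attainable_throughput(peak_flops, mem_bandwidth, arithmetic_intensity):
    """Classic roofline bound.

    peak_flops:            peak compute rate (FLOP/s)
    mem_bandwidth:         off-chip memory bandwidth (bytes/s)
    arithmetic_intensity:  FLOPs performed per byte moved
    """
    # Performance is capped by whichever is lower: compute or memory traffic.
    return min(peak_flops, mem_bandwidth * arithmetic_intensity)


def roofline_fraction(achieved_flops, peak_flops, mem_bandwidth, arithmetic_intensity):
    """Fraction of the roofline bound that a measured throughput reaches."""
    bound = attainable_throughput(peak_flops, mem_bandwidth, arithmetic_intensity)
    return achieved_flops / bound


if __name__ == "__main__":
    # Made-up numbers: a memory-bound kernel on a hypothetical chip.
    print(attainable_throughput(100.0, 10.0, 5.0))
    print(roofline_fraction(47.0, 100.0, 10.0, 5.0))
```

A kernel with low arithmetic intensity sits on the sloped, memory-bound part of the roofline, so overlapping off-chip loading with on-chip execution matters most there.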

On-Device Training of PV Power Forecasting Models in a Smart Meter for Grid Edge Intelligence

Jul 09, 2025

Abstract:In this paper, an edge-side model training study is conducted on a resource-limited smart meter. The motivation of grid-edge intelligence and the concept of on-device training are introduced. Then, the technical preparation steps for on-device training are described. A case study on the task of photovoltaic power forecasting is presented, where two representative machine learning models are investigated: a gradient boosting tree model and a recurrent neural network model. To adapt to the resource-limited situation in the smart meter, "mixed"- and "reduced"-precision training schemes are also devised. Experimental results demonstrate the feasibility of economically achieving grid-edge intelligence via the existing advanced metering infrastructures.

Mic-hackathon 2024: Hackathon on Machine Learning for Electron and Scanning Probe Microscopy

Jun 10, 2025

Abstract:Microscopy is a primary source of information on materials structure and functionality at nanometer and atomic scales. The data generated is often well-structured, enriched with metadata and sample histories, though not always consistent in detail or format. The adoption of Data Management Plans (DMPs) by major funding agencies promotes preservation and access. However, deriving insights remains difficult due to the lack of standardized code ecosystems, benchmarks, and integration strategies. As a result, data usage is inefficient and analysis time is extensive. In addition to post-acquisition analysis, new APIs from major microscope manufacturers enable real-time, ML-based analytics for automated decision-making and ML-agent-controlled microscope operation. Yet, a gap remains between the ML and microscopy communities, limiting the impact of these methods on physics, materials discovery, and optimization. Hackathons help bridge this divide by fostering collaboration between ML researchers and microscopy experts. They encourage the development of novel solutions that apply ML to microscopy, while preparing a future workforce for instrumentation, materials science, and applied ML. This hackathon produced benchmark datasets and digital twins of microscopes to support community growth and standardized workflows. All related code is available at GitHub: https://github.com/KalininGroup/Mic-hackathon-2024-codes-publication/tree/1.0.0.1
