Joshua C. Agar

Real-Time Cell Sorting with Scalable In Situ FPGA-Accelerated Deep Learning

Mar 16, 2025

Abstract:Precise cell classification is essential in biomedical diagnostics and therapeutic monitoring, particularly for identifying diverse cell types involved in various diseases. Traditional cell classification methods such as flow cytometry depend on molecular labeling which is often costly, time-intensive, and can alter cell integrity. To overcome these limitations, we present a label-free machine learning framework for cell classification, designed for real-time sorting applications using bright-field microscopy images. This approach leverages a teacher-student model architecture enhanced by knowledge distillation, achieving high efficiency and scalability across different cell types. Demonstrated through a use case of classifying lymphocyte subsets, our framework accurately classifies T4, T8, and B cell types with a dataset of 80,000 preprocessed images, accessible via an open-source Python package for easy adaptation. Our teacher model attained 98% accuracy in differentiating T4 cells from B cells and 93% accuracy in zero-shot classification between T8 and B cells. Remarkably, our student model operates with only 0.02% of the teacher model's parameters, enabling field-programmable gate array (FPGA) deployment. Our FPGA-accelerated student model achieves an ultra-low inference latency of just 14.5 $\mu$s and a complete cell detection-to-sorting trigger time of 24.7 $\mu$s, delivering 12x and 40x improvements over the previous state-of-the-art real-time cell analysis algorithm in inference and total latency, respectively, while preserving accuracy comparable to the teacher model. This framework provides a scalable, cost-effective solution for lymphocyte classification, as well as a new SOTA real-time cell sorting implementation for rapid identification of subsets using in situ deep learning on off-the-shelf computing hardware.
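As an illustration of the teacher-student idea mentioned in this abstract, the following is a minimal sketch of a generic knowledge-distillation loss (softened teacher targets blended with hard-label cross-entropy). It is not the authors' implementation: the function names, the temperature of 4.0, and the weighting `alpha` are assumptions chosen only for the example.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over the last axis."""
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, labels,
                      temperature=4.0, alpha=0.5):
    """Generic distillation loss (hypothetical hyperparameters).

    Blends KL(teacher || student) on temperature-softened outputs
    with ordinary cross-entropy against the hard labels.
    """
    eps = 1e-12
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Soft-target term: how far the student is from the teacher.
    kl = np.sum(p_teacher * (np.log(p_teacher + eps) - np.log(p_student + eps)),
                axis=-1)
    # Hard-target term: cross-entropy at temperature 1.
    p_hard = softmax(student_logits)
    ce = -np.log(p_hard[np.arange(len(labels)), labels] + eps)
    # The temperature**2 factor keeps the two terms on a similar scale.
    return float(np.mean(alpha * temperature**2 * kl + (1 - alpha) * ce))
```

A student whose logits match the teacher's incurs only the small cross-entropy term, while a student that disagrees with the teacher is penalized by both terms.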

Low latency optical-based mode tracking with machine learning deployed on FPGAs on a tokamak

Nov 30, 2023

Abstract:Active feedback control in magnetic confinement fusion devices is desirable to mitigate plasma instabilities and enable robust operation. Optical high-speed cameras provide a powerful, non-invasive diagnostic and can be suitable for these applications. In this study, we process fast camera data, at rates exceeding 100 kfps, on $\textit{in situ}$ Field Programmable Gate Array (FPGA) hardware to track magnetohydrodynamic (MHD) mode evolution and generate control signals in real time. Our system utilizes a convolutional neural network (CNN) model which predicts the $n$=1 MHD mode amplitude and phase using camera images with better accuracy than other tested non-deep-learning-based methods. By implementing this model directly within the standard FPGA readout hardware of the high-speed camera diagnostic, our mode tracking system achieves a total trigger-to-output latency of 17.6 $\mu$s and a throughput of up to 120 kfps. This study at the High Beta Tokamak-Extended Pulse (HBT-EP) experiment demonstrates an FPGA-based high-speed camera data acquisition and processing system, enabling application in real-time machine-learning-based tokamak diagnostic and control as well as potential applications in other scientific domains.
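The latency and throughput figures quoted in this abstract can be related with a short calculation. The sketch below only restates the two reported numbers (17.6 $\mu$s and 120 kfps); the helper names are hypothetical, and the conclusion about pipelining is an inference from the arithmetic, not a statement from the paper.

```python
def frame_period_us(fps):
    """Time between consecutive frames, in microseconds."""
    return 1e6 / fps

def frames_in_flight(latency_us, fps):
    """How many frame periods fit inside one trigger-to-output latency."""
    return latency_us / frame_period_us(fps)

# Reported values: 17.6 us latency at up to 120 kfps.
period = frame_period_us(120_000)           # about 8.33 us per frame
overlap = frames_in_flight(17.6, 120_000)   # slightly more than 2 frames
print(f"frame period: {period:.2f} us, frames in flight: {overlap:.2f}")
```

Because the latency exceeds the frame period, sustaining the maximum frame rate would require the processing of successive frames to overlap, as in a pipelined design.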

Deep Learning for Automated Experimentation in Scanning Transmission Electron Microscopy

Apr 04, 2023

Abstract:Machine learning (ML) has become critical for post-acquisition data analysis in (scanning) transmission electron microscopy, (S)TEM, imaging and spectroscopy. An emerging trend is the transition to real-time analysis and closed-loop microscope operation. The effective use of ML in electron microscopy now requires the development of strategies for microscopy-centered experiment workflow design and optimization. Here, we discuss the challenges associated with the transition to active ML, including sequential data analysis and out-of-distribution drift effects, the requirements for edge operation, local and cloud data storage, and theory-in-the-loop operations. Specifically, we discuss the relative contributions of human scientists and ML agents in the ideation, orchestration, and execution of experimental workflows and the need to develop universal hyper languages that can apply across multiple platforms. These considerations will collectively inform the operationalization of ML in next-generation experimentation.

Stacked Generative Machine Learning Models for Fast Approximations of Steady-State Navier-Stokes Equations

Dec 13, 2021

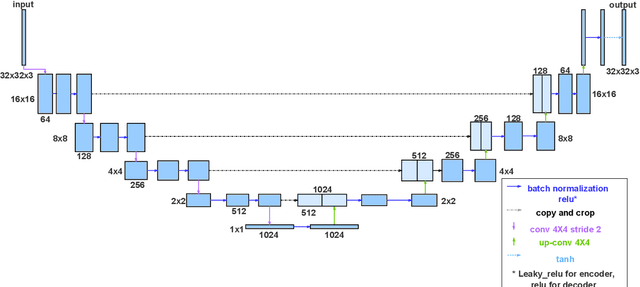

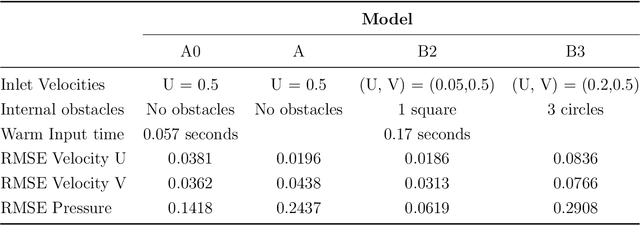

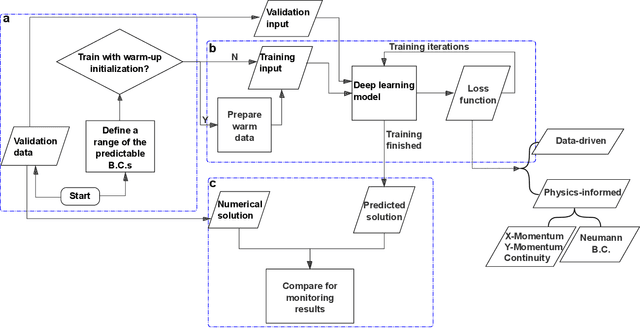

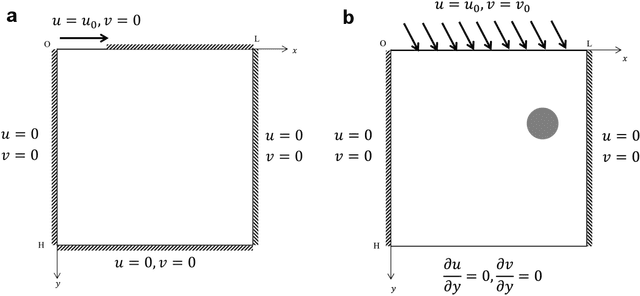

Abstract:Computational fluid dynamics (CFD) simulations are broadly applied in engineering and physics. A standard description of fluid dynamics requires solving the Navier-Stokes (N-S) equations in different flow regimes. However, applications of CFD simulations are computationally limited by the availability, speed, and parallelism of high-performance computing. To improve computational efficiency, machine learning techniques have been used to create accelerated data-driven approximations for CFD. A majority of such approaches rely on large labeled CFD datasets that are expensive to obtain at the scale necessary to build robust data-driven models. We develop a weakly-supervised approach to solve the steady-state N-S equations under various boundary conditions, using a multi-channel input with boundary and geometric conditions. We achieve state-of-the-art results without any labeled simulation data, instead using a custom data-driven and physics-informed loss function and small-scale solutions to prime the model to solve the N-S equations. To improve the resolution and predictability, we train stacked models of increasing complexity that generate the numerical solutions of the N-S equations. Without expensive computations, our model achieves high predictability with a variety of obstacles and boundary conditions. Given its high flexibility, the model can generate a solution on a 64 x 64 domain within 5 ms on a regular desktop computer, which is 1000 times faster than a regular CFD solver. Translation of interactive CFD simulation to local consumer computing hardware enables new applications in real-time predictions on Internet of Things devices where data transfer is prohibitive and can improve the scale, speed, and computational cost of boundary-value fluid problems.
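To make the notion of a physics-informed loss concrete, the sketch below penalizes violations of the continuity equation $\partial u/\partial x + \partial v/\partial y = 0$ on a 2D grid using central differences. This is only one plausible ingredient of such a loss and assumes incompressible flow on a uniform grid; the abstract does not specify the actual loss, and the function names and grid spacing here are hypothetical.

```python
import numpy as np

def divergence(u, v, dx=1.0, dy=1.0):
    """Central-difference divergence on interior points.

    u, v are 2D velocity components indexed as [y, x].
    """
    du_dx = (u[1:-1, 2:] - u[1:-1, :-2]) / (2.0 * dx)
    dv_dy = (v[2:, 1:-1] - v[:-2, 1:-1]) / (2.0 * dy)
    return du_dx + dv_dy

def continuity_loss(u, v, dx=1.0, dy=1.0):
    """Mean squared continuity residual (assumes incompressible flow)."""
    return float(np.mean(divergence(u, v, dx, dy) ** 2))

# Example on a 64 x 64 grid, the domain size quoted in the abstract.
x, y = np.meshgrid(np.arange(64.0), np.arange(64.0))
print(continuity_loss(x, -y))  # divergence-free field, residual is zero
print(continuity_loss(x, y))   # uniformly expanding field, residual is nonzero
```

A term of this kind needs no labeled simulation data, since it is evaluated directly on the predicted velocity field.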
