Li

Tina

Cost-Aware Routing for Efficient Text-To-Image Generation

Jun 17, 2025

Abstract: Diffusion models are well known for their ability to generate a high-fidelity image for an input prompt through an iterative denoising process. Unfortunately, the high fidelity also comes at a high computational cost due to the inherently sequential generative process. In this work, we seek to optimally balance quality and computational cost, and propose a framework to allow the amount of computation to vary for each prompt, depending on its complexity. Each prompt is automatically routed to the most appropriate text-to-image generation function, which may correspond to a distinct number of denoising steps of a diffusion model, or a disparate, independent text-to-image model. Unlike uniform cost reduction techniques (e.g., distillation, model quantization), our approach achieves the optimal trade-off by learning to reserve expensive choices (e.g., 100+ denoising steps) only for a few complex prompts, and employ more economical choices (e.g., a small distilled model) for less sophisticated prompts. We empirically demonstrate on COCO and DiffusionDB that by learning to route to nine already-trained text-to-image models, our approach is able to deliver an average quality that is higher than that achievable by any of these models alone.
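As a rough illustration of the routing idea in this abstract, the following minimal sketch picks, for each prompt, the generation option with the best trade-off between predicted quality and compute cost. It is not the paper's method: the `Candidate` type, the `route` function, the trade-off weight `lam`, and all quality and cost numbers are hypothetical, and the per-prompt quality predictions are assumed to come from some learned estimator that is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """One text-to-image option (hypothetical), e.g. a step count or a separate model."""
    name: str
    cost: float  # assumed relative compute cost; units are arbitrary


def route(predicted_quality: dict[str, float], candidates: list[Candidate], lam: float) -> str:
    """Return the name of the candidate maximizing predicted quality minus lam * cost.

    predicted_quality maps candidate name -> estimated quality for ONE prompt.
    lam >= 0 controls how strongly cost is penalized (lam = 0 ignores cost).
    """
    best = max(candidates, key=lambda c: predicted_quality[c.name] - lam * c.cost)
    return best.name


# Two hypothetical options: a cheap distilled model and an expensive many-step sampler.
candidates = [Candidate("small_distilled", cost=1.0), Candidate("large_100_steps", cost=10.0)]

# Hypothetical per-prompt quality estimates.
simple_prompt = {"small_distilled": 0.80, "large_100_steps": 0.82}
complex_prompt = {"small_distilled": 0.40, "large_100_steps": 0.90}

choice_simple = route(simple_prompt, candidates, lam=0.01)
choice_complex = route(complex_prompt, candidates, lam=0.01)
choice_no_penalty = route(simple_prompt, candidates, lam=0.0)
```

With these made-up numbers, the simple prompt goes to the cheap option because the expensive one adds little quality, while the complex prompt justifies the expensive option. Sweeping `lam` would trace out different average quality and cost operating points.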

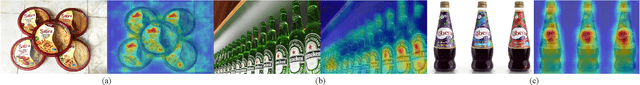

HSLiNets: Evaluating Band Ordering Strategies in Hyperspectral and LiDAR Fusion

Mar 27, 2025

Abstract: The integration of hyperspectral imaging (HSI) and Light Detection and Ranging (LiDAR) data provides complementary spectral and spatial information for remote sensing applications. While previous studies have explored the role of band selection and grouping in HSI classification, little attention has been given to how the spectral sequence or band order affects classification outcomes when fused with LiDAR. In this work, we systematically investigate the influence of band order on HSI-LiDAR fusion performance. Through extensive experiments, we demonstrate that band order significantly impacts classification accuracy, revealing a previously overlooked factor in fusion-based models. Motivated by this observation, we propose a novel fusion architecture that not only integrates HSI and LiDAR data but also learns from multiple band order configurations. The proposed method enhances feature representation by adaptively fusing different spectral sequences, leading to improved classification accuracy. Experimental results on the Houston 2013 and Trento datasets show that our approach outperforms state-of-the-art fusion models. Data and code are available at https://github.com/Judyxyang/HSLiNets.

A Survey: Collaborative Hardware and Software Design in the Era of Large Language Models

Oct 08, 2024

Abstract: The rapid development of large language models (LLMs) has significantly transformed the field of artificial intelligence, demonstrating remarkable capabilities in natural language processing and moving towards multi-modal functionality. These models are increasingly integrated into diverse applications, impacting both research and industry. However, their development and deployment present substantial challenges, including the need for extensive computational resources, high energy consumption, and complex software optimizations. Unlike traditional deep learning systems, LLMs require unique optimization strategies for training and inference, focusing on system-level efficiency. This paper surveys hardware and software co-design approaches specifically tailored to address the unique characteristics and constraints of large language models. This survey analyzes the challenges and impacts of LLMs on hardware and algorithm research, exploring algorithm optimization, hardware design, and system-level innovations. It aims to provide a comprehensive understanding of the trade-offs and considerations in LLM-centric computing systems, guiding future advancements in AI. Finally, we summarize the existing efforts in this space and outline future directions toward realizing production-grade co-design methodologies for the next generation of large language models and AI systems.

MonoSparse-CAM: Harnessing Monotonicity and Sparsity for Enhanced Tree Model Processing on CAMs

Jul 12, 2024

Abstract: Despite significant advancements in AI driven by neural networks, tree-based machine learning (TBML) models excel on tabular data. These models exhibit promising energy efficiency and high performance, particularly when accelerated on analog content-addressable memory (aCAM) arrays. However, optimizing their hardware deployment, especially in leveraging TBML model structure and aCAM circuitry, remains challenging. In this paper, we introduce MonoSparse-CAM, a novel content-addressable memory (CAM) based computing optimization technique. MonoSparse-CAM efficiently leverages TBML model sparsity and CAM array circuits, enhancing processing performance. Our experiments show that MonoSparse-CAM reduces energy consumption by up to 28.56x compared to raw processing and 18.51x compared to existing deployment optimization techniques. Additionally, it consistently achieves at least 1.68x higher computational efficiency than current methods. By enabling energy-efficient CAM-based computing while preserving performance regardless of the array sparsity, MonoSparse-CAM addresses the high energy consumption problem of CAM, which hinders processing of large arrays. Our contributions are twofold: we propose MonoSparse-CAM as an effective deployment optimization solution for CAM-based computing, and we investigate the impact of TBML model structure on array sparsity. This work provides crucial insights for energy-efficient TBML on hardware, highlighting a significant advancement in sustainable AI technologies.

Distilling Text Style Transfer With Self-Explanation From LLMs

Mar 02, 2024

Abstract: Text Style Transfer (TST) seeks to alter the style of text while retaining its core content. Given the constraints of limited parallel datasets for TST, we propose CoTeX, a framework that leverages large language models (LLMs) alongside chain-of-thought (CoT) prompting to facilitate TST. CoTeX distills the complex rewriting and reasoning capabilities of LLMs into more streamlined models capable of working with both non-parallel and parallel data. Through experimentation across four TST datasets, CoTeX is shown to surpass traditional supervised fine-tuning and knowledge distillation methods, particularly in low-resource settings. We conduct a comprehensive evaluation, comparing CoTeX against current unsupervised, supervised, in-context learning (ICL) techniques, and instruction-tuned LLMs. Furthermore, CoTeX distinguishes itself by offering transparent explanations for its style transfer process.

Self Expanding Convolutional Neural Networks

Jan 17, 2024

Abstract: In this paper, we present a novel method for dynamically expanding Convolutional Neural Networks (CNNs) during training, aimed at meeting the increasing demand for efficient and sustainable deep learning models. Our approach, drawing from the seminal work on Self-Expanding Neural Networks (SENN), employs a natural expansion score as an expansion criterion to address the common issue of over-parameterization in deep convolutional neural networks, thereby ensuring that the model's complexity is finely tuned to the task's specific needs. A significant benefit of this method is its eco-friendly nature, as it obviates the necessity of training multiple models of different sizes. We employ a strategy where a single model is dynamically expanded, facilitating the extraction of checkpoints at various complexity levels, effectively reducing computational resource use and energy consumption while also expediting the development cycle by offering diverse model complexities from a single training session. We evaluate our method on the CIFAR-10 dataset and our experimental results validate this approach, demonstrating that dynamically adding layers not only maintains but also improves CNN performance, underscoring the effectiveness of our expansion criterion. This approach marks a considerable advancement in developing adaptive, scalable, and environmentally considerate neural network architectures, addressing key challenges in the field of deep learning.

Foundation Models for Generalist Geospatial Artificial Intelligence

Nov 08, 2023

Abstract: Significant progress in the development of highly adaptable and reusable Artificial Intelligence (AI) models is expected to have a significant impact on Earth science and remote sensing. Foundation models are pre-trained on large unlabeled datasets through self-supervision, and then fine-tuned for various downstream tasks with small labeled datasets. This paper introduces a first-of-a-kind framework for the efficient pre-training and fine-tuning of foundational models on extensive geospatial data. We have utilized this framework to create Prithvi, a transformer-based geospatial foundational model pre-trained on more than 1 TB of multispectral satellite imagery from the Harmonized Landsat-Sentinel 2 (HLS) dataset. Our study demonstrates the efficacy of our framework in successfully fine-tuning Prithvi to a range of Earth observation tasks that have not been tackled by previous work on foundation models, including multi-temporal cloud gap imputation, flood mapping, wildfire scar segmentation, and multi-temporal crop segmentation. Our experiments show that the pre-trained model accelerates the fine-tuning process compared to leveraging randomly initialized weights. In addition, pre-trained Prithvi compares well against the state-of-the-art, e.g., outperforming a conditional GAN model in multi-temporal cloud imputation by up to 5pp (or 5.7%) in the structural similarity index. Finally, due to the limited availability of labeled data in the field of Earth observation, we gradually reduce the quantity of available labeled data for refining the model to evaluate data efficiency and demonstrate that data can be decreased significantly without affecting the model's accuracy. The pre-trained 100 million parameter model and corresponding fine-tuning workflows have been released publicly as open source contributions to the global Earth sciences community through Hugging Face.

Clinical-Inspired Cytological Whole Slide Image Screening with Just Slide-Level Labels

Jun 06, 2023

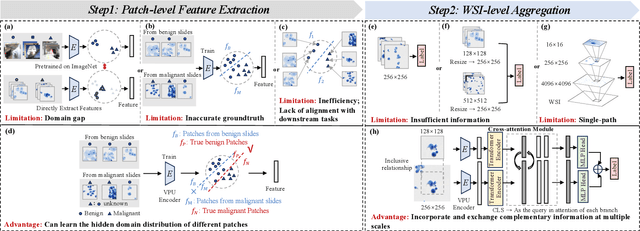

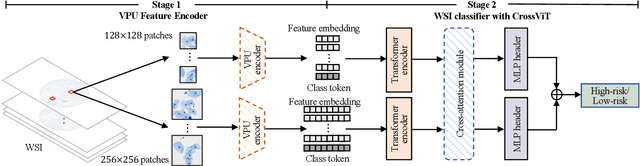

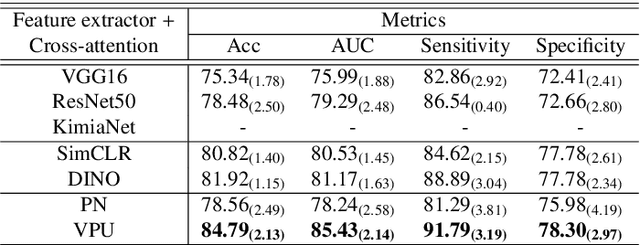

Abstract: Cytology testing is effective, non-invasive, convenient, and inexpensive for clinical cancer screening. ThinPrep, a commonly used liquid-based specimen, can be scanned to generate digital whole slide images (WSIs) for cytology testing. However, classifying WSIs at gigapixel resolution is highly resource-intensive, posing significant challenges for automated medical image analysis. In order to circumvent this computational impasse, existing methods emphasize learning features at the cell or patch level, typically requiring labor-intensive and detailed manual annotations, such as labels at the cell or patch level. Here we propose a novel automated Label-Efficient WSI Screening method, dubbed LESS, for cytology-based diagnosis with only slide-level labels. Firstly, in order to achieve label efficiency, we suggest employing variational positive-unlabeled (VPU) learning, enhancing patch-level feature learning using WSI-level labels. Subsequently, guided by the clinical approach of scrutinizing WSIs at varying fields of view and scales, we employ a cross-attention vision transformer (CrossViT) to fuse multi-scale patch-level data and execute WSI-level classification. We validate the proposed label-efficient method on a urine cytology WSI dataset encompassing 130 samples (13,000 patches) and the FNAC 2019 dataset with 212 samples (21,200 patches). The experiments show that the proposed LESS reaches 84.79%, 85.43%, 91.79%, and 78.30% on the urine cytology WSI dataset, and 96.53%, 96.37%, 99.31%, and 94.95% on the FNAC 2019 dataset in terms of accuracy, AUC, sensitivity, and specificity, respectively. It outperforms state-of-the-art methods and realizes automatic cytology-based bladder cancer screening.

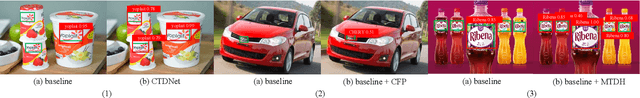

A Cross-direction Task Decoupling Network for Small Logo Detection

May 04, 2023

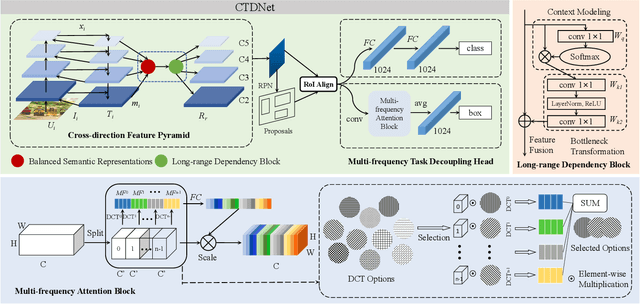

Abstract: Logo detection plays an integral role in many applications. However, handling small logos is still difficult since they occupy too few pixels in the image, which hinders the extraction of discriminative features. The aggregation of small logos also poses a great challenge to the classification and localization of logos. To solve these problems, we propose the Cross-direction Task Decoupling Network (CTDNet) for small logo detection. We first introduce a Cross-direction Feature Pyramid (CFP) to realize cross-direction feature fusion by adopting horizontal transmission and vertical transmission. In addition, a Multi-frequency Task Decoupling Head (MTDH) decouples the classification and localization tasks into two branches. A multi-frequency attention convolution branch is designed to achieve more accurate regression by combining the discrete cosine transform with convolution. Comprehensive experiments on four logo datasets demonstrate the effectiveness and efficiency of the proposed method.

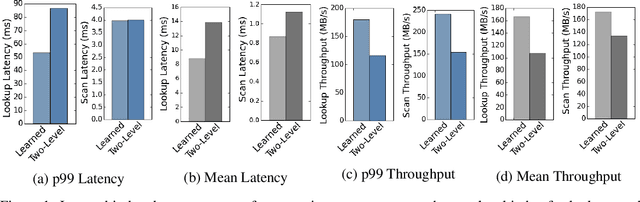

Learned Indexes for a Google-scale Disk-based Database

Dec 23, 2020

Abstract: There is great excitement about learned index structures, but understandable skepticism about the practicality of a new method uprooting decades of research on B-Trees. In this paper, we work to remove some of that uncertainty by demonstrating how a learned index can be integrated in a distributed, disk-based database system: Google's Bigtable. We detail several design decisions we made to integrate learned indexes in Bigtable. Our results show that integrating a learned index significantly improves the end-to-end read latency and throughput for Bigtable.
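For readers unfamiliar with the general idea of a learned index, here is a minimal in-memory sketch: a model predicts where a key sits in a sorted array, and a bounded search corrects the prediction. It does not reflect the Bigtable integration, whose design the abstract does not describe. The `LearnedIndex` class, the choice of a single least-squares linear model, and the sample keys are all assumptions made for illustration.

```python
import bisect


class LearnedIndex:
    """Toy learned index (hypothetical): linear model key -> position plus bounded search."""

    def __init__(self, keys):
        if not keys:
            raise ValueError("keys must be non-empty")
        self.keys = sorted(keys)
        n = len(self.keys)
        # Fit position ~ slope * key + intercept by ordinary least squares.
        mean_k = sum(self.keys) / n
        mean_p = (n - 1) / 2
        var = sum((k - mean_k) ** 2 for k in self.keys)
        cov = sum((k - mean_k) * (i - mean_p) for i, k in enumerate(self.keys))
        self.slope = cov / var if var else 0.0
        self.intercept = mean_p - self.slope * mean_k
        # Worst-case prediction error over stored keys bounds the search window.
        self.max_err = max(abs(i - self._predict(k)) for i, k in enumerate(self.keys))

    def _predict(self, key):
        return int(round(self.slope * key + self.intercept))

    def lookup(self, key):
        """Return the position of key in the sorted array, or None if absent."""
        n = len(self.keys)
        pred = self._predict(key)
        lo = min(max(0, pred - self.max_err), n)
        hi = max(lo, min(n, pred + self.max_err + 1))
        # Binary search only inside the error-bounded window.
        idx = bisect.bisect_left(self.keys, key, lo, hi)
        if idx < n and self.keys[idx] == key:
            return idx
        return None


index = LearnedIndex([3, 8, 15, 16, 23, 42, 99, 100])
pos_present = index.lookup(23)
pos_absent = index.lookup(50)
```

The search window shrinks as the model fits the key distribution better, which is where any lookup savings over a plain binary search or tree traversal would come from in this toy setting.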
