Blair Edwards

IBM Research, Daresbury, UK

Variational Exploration Module (VEM): A Cloud-Native Optimization and Validation Tool for Geospatial Modeling and AI Workflows

Nov 26, 2023

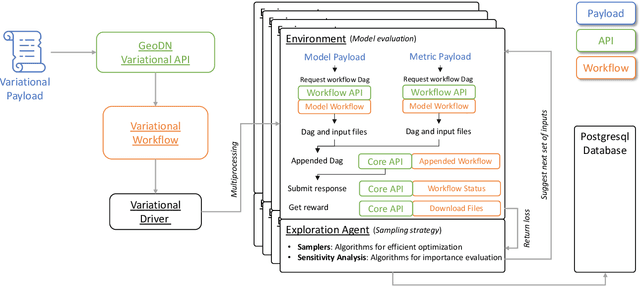

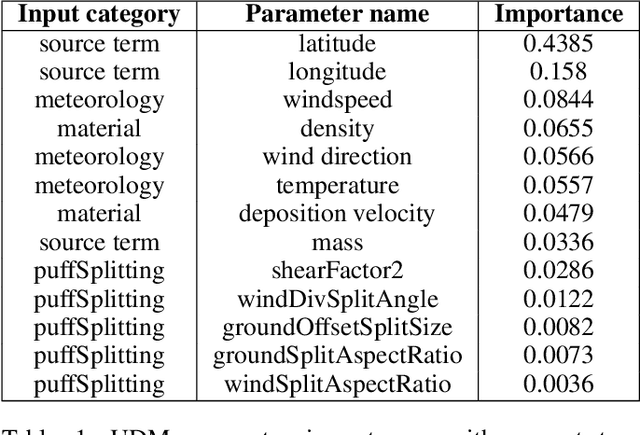

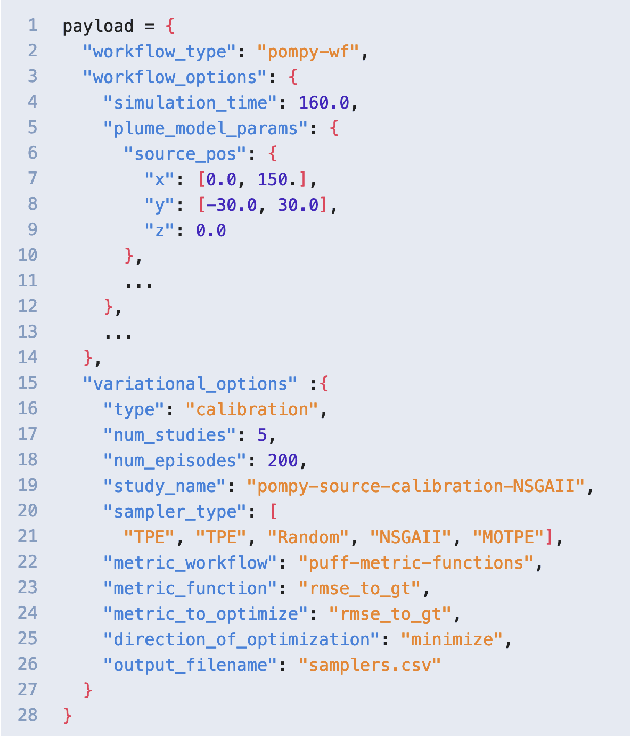

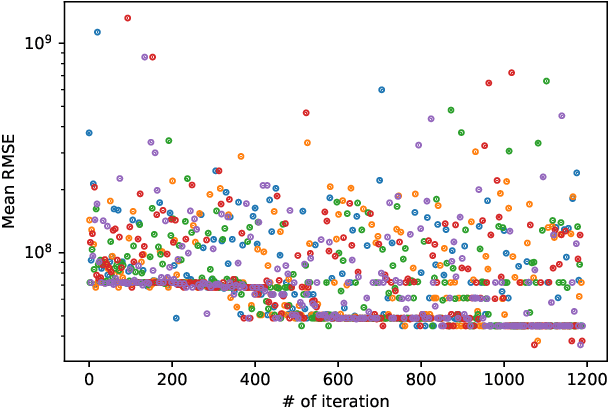

Abstract: Geospatial observations combined with computational models have become key to understanding the physical systems of our environment and enable the design of best practices to reduce societal harm. Cloud-based deployments help to scale up these modeling and AI workflows. Yet, for practitioners to draw robust conclusions, model tuning and testing are crucial, a resource-intensive process that involves varying model input variables. We have developed the Variational Exploration Module, which facilitates the optimization and validation of modeling workflows deployed in the cloud by orchestrating workflow executions and using Bayesian and machine learning-based methods to analyze model behavior. User configurations allow the combination of diverse sampling strategies in multi-agent environments. The flexibility and robustness of the model-agnostic module are demonstrated using real-world applications.

Foundation Models for Generalist Geospatial Artificial Intelligence

Nov 08, 2023

Abstract: Significant progress in the development of highly adaptable and reusable Artificial Intelligence (AI) models is expected to have a major impact on Earth science and remote sensing. Foundation models are pre-trained on large unlabeled datasets through self-supervision, and then fine-tuned for various downstream tasks with small labeled datasets. This paper introduces a first-of-a-kind framework for the efficient pre-training and fine-tuning of foundational models on extensive geospatial data. We have utilized this framework to create Prithvi, a transformer-based geospatial foundational model pre-trained on more than 1TB of multispectral satellite imagery from the Harmonized Landsat-Sentinel 2 (HLS) dataset. Our study demonstrates the efficacy of our framework in successfully fine-tuning Prithvi to a range of Earth observation tasks that have not been tackled by previous work on foundation models, involving multi-temporal cloud gap imputation, flood mapping, wildfire scar segmentation, and multi-temporal crop segmentation. Our experiments show that the pre-trained model accelerates the fine-tuning process compared to leveraging randomly initialized weights. In addition, pre-trained Prithvi compares well against the state-of-the-art, e.g., outperforming a conditional GAN model in multi-temporal cloud imputation by up to 5pp (or 5.7%) in the structural similarity index. Finally, due to the limited availability of labeled data in the field of Earth observation, we gradually reduce the quantity of available labeled data for refining the model to evaluate data efficiency and demonstrate that data can be decreased significantly without affecting the model's accuracy. The pre-trained 100 million parameter model and corresponding fine-tuning workflows have been released publicly as open source contributions to the global Earth sciences community through Hugging Face.

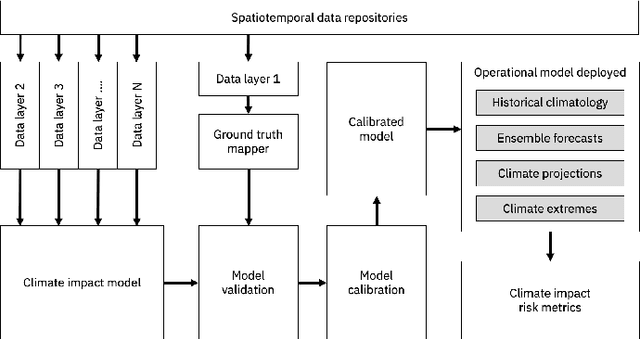

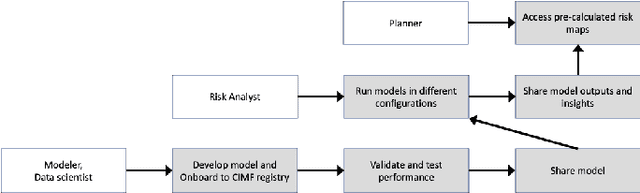

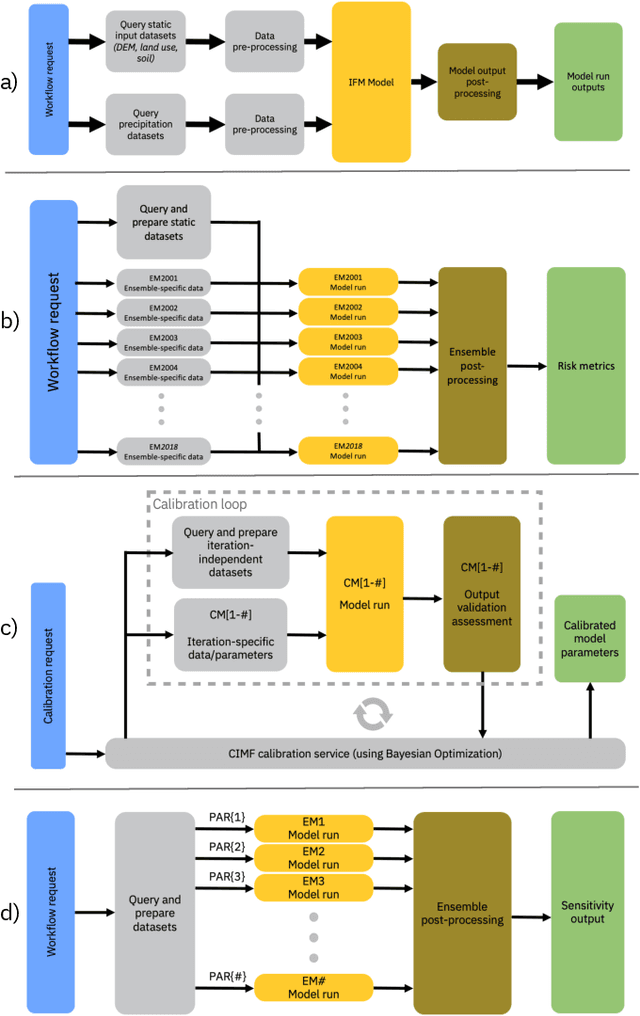

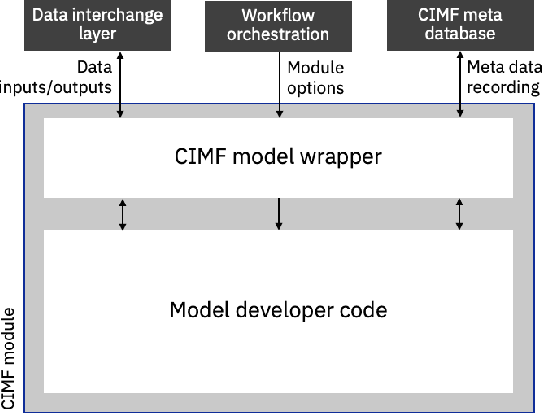

Climate Impact Modelling Framework

Sep 27, 2022

Abstract: The application of models to assess the risk of the physical impacts of weather and climate and their subsequent consequences for society and business is of the utmost importance in our changing climate. The operation of such models is historically bespoke and constrained to specific compute infrastructure, driving datasets and predefined configurations. These constraints introduce challenges with scaling model runs and putting the models in the hands of interested users. Here we present a cloud-based modular framework for the deployment and operation of geospatial models, initially applied to climate impacts. The Climate Impact Modelling Framework (CIMF) enables the deployment of modular workflows in a dynamic and flexible manner. Users can specify workflow components in a streamlined manner; these components can then be easily organised into different configurations to assess risk in different ways and at different scales. This also enables different models (physical simulation or machine learning models) and workflows to be connected to produce a combined risk assessment. Flood modelling is used as an end-to-end example to demonstrate the operation of CIMF.

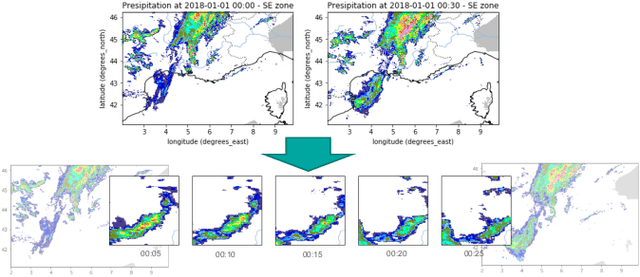

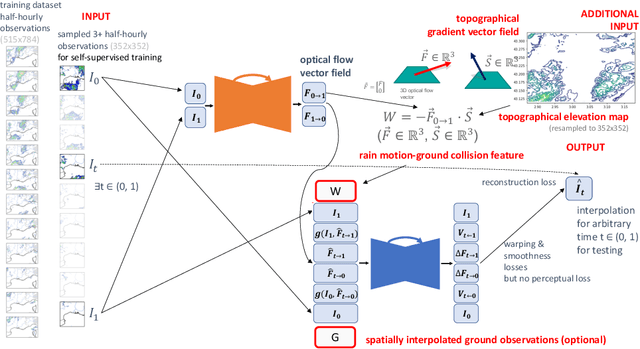

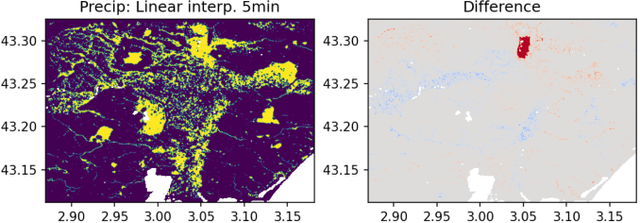

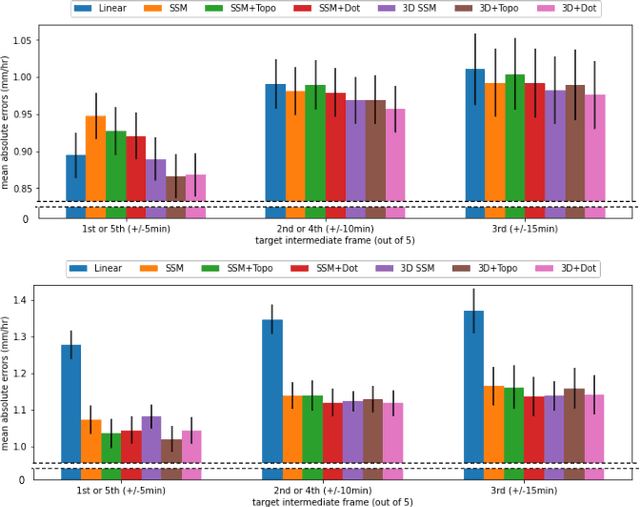

Deep Temporal Interpolation of Radar-based Precipitation

Mar 01, 2022

Abstract: When providing the boundary conditions for hydrological flood models and estimating the associated risk, interpolating precipitation at very high temporal resolutions (e.g. 5 minutes) is essential so as not to miss the cause of flooding in local regions. In this paper, we study optical flow-based interpolation of globally available weather radar images from satellites. The proposed approach uses deep neural networks for the interpolation of multiple video frames, while terrain information is combined with temporally coarse-grained precipitation radar observations as inputs for self-supervised training. An experiment with the Meteonet radar precipitation dataset for the flood risk simulation in Aude, a department in Southern France (2018), demonstrated the advantage of the proposed method over a linear interpolation baseline, with up to 20% error reduction.
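
To make the linear interpolation baseline mentioned in the abstract concrete, here is a minimal sketch of pixelwise linear interpolation between two precipitation frames. It illustrates the baseline only, not the paper's optical flow-based deep network. The function name, the 2x2 grids, and the 15-minute gap are hypothetical values chosen for illustration and do not come from the paper or the Meteonet dataset.

```python
from typing import List

Frame = List[List[float]]  # one precipitation grid, e.g. rain rate per pixel


def linear_interpolate_frames(start: Frame, end: Frame, n_intermediate: int) -> List[Frame]:
    """Return n_intermediate frames evenly spaced in time between start and end."""
    if n_intermediate < 1:
        raise ValueError("n_intermediate must be at least 1")
    if len(start) != len(end) or any(len(a) != len(b) for a, b in zip(start, end)):
        raise ValueError("frames must have the same shape")

    frames = []
    for k in range(1, n_intermediate + 1):
        w = k / (n_intermediate + 1)  # fraction of the time gap that has elapsed
        frames.append([
            [(1 - w) * a + w * b for a, b in zip(row_start, row_end)]
            for row_start, row_end in zip(start, end)
        ])
    return frames


# Hypothetical 2x2 rain-rate grids observed 15 minutes apart (illustrative values)
f0 = [[0.0, 2.0], [4.0, 0.0]]
f1 = [[3.0, 2.0], [1.0, 6.0]]

# Two intermediate frames: estimates at +5 and +10 minutes
mid = linear_interpolate_frames(f0, f1, 2)
```

Because each pixel is blended independently, a rain cell that moves between observations fades out at its old position and fades in at its new one rather than travelling across the grid. Motion-aware approaches such as optical flow aim to address that limitation.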
