Anne Jones

IBM Research, Daresbury, UK

Variational Exploration Module VEM: A Cloud-Native Optimization and Validation Tool for Geospatial Modeling and AI Workflows

Nov 26, 2023

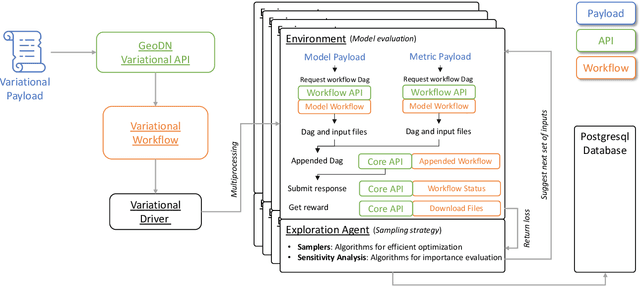

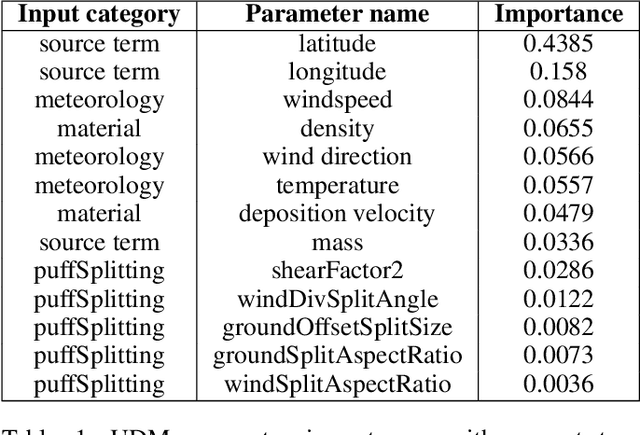

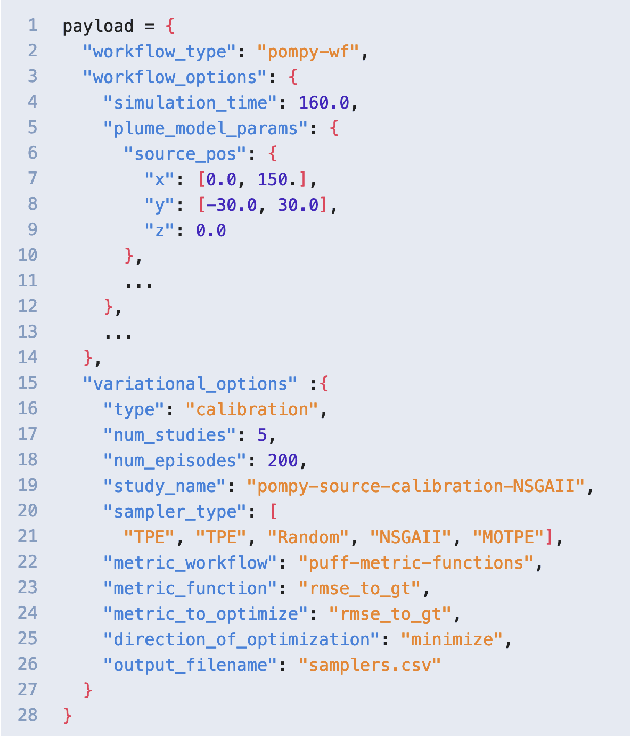

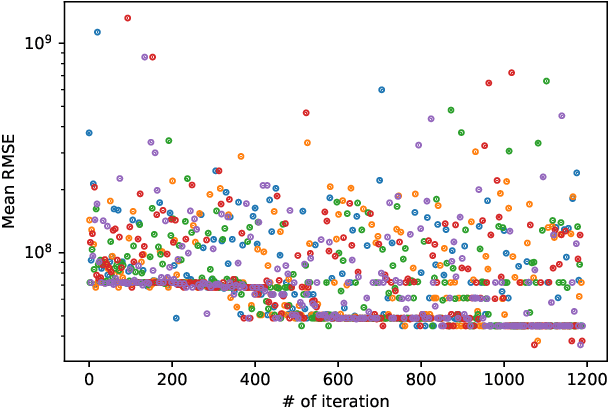

Abstract: Geospatial observations combined with computational models have become key to understanding the physical systems of our environment and enable the design of best practices to reduce societal harm. Cloud-based deployments help to scale up these modeling and AI workflows. Yet, for practitioners to draw robust conclusions, model tuning and testing is crucial, a resource-intensive process which involves the variation of model input variables. We have developed the Variational Exploration Module, which facilitates the optimization and validation of modeling workflows deployed in the cloud by orchestrating workflow executions and using Bayesian and machine learning-based methods to analyze model behavior. User configurations allow the combination of diverse sampling strategies in multi-agent environments. The flexibility and robustness of the model-agnostic module are demonstrated using real-world applications.

AI Foundation Models for Weather and Climate: Applications, Design, and Implementation

Sep 20, 2023

Abstract: Machine learning and deep learning methods have been widely explored in understanding the chaotic behavior of the atmosphere and furthering weather forecasting. There has been increasing interest from technology companies, government institutions, and meteorological agencies in building digital twins of the Earth. Recent approaches using transformers, physics-informed machine learning, and graph neural networks have demonstrated state-of-the-art performance on relatively narrow spatiotemporal scales and specific tasks. With the recent success of generative artificial intelligence (AI) using pre-trained transformers for language modeling and vision with prompt engineering and fine-tuning, we are now moving towards generalizable AI. In particular, we are witnessing the rise of AI foundation models that can perform competitively on multiple domain-specific downstream tasks. Despite this progress, we are still in the nascent stages of a generalizable AI model for global Earth system models, regional climate models, and mesoscale weather models. Here, we review current state-of-the-art AI approaches, primarily from transformer and operator learning literature in the context of meteorology. We provide our perspective on criteria for success towards a family of foundation models for nowcasting and forecasting weather and climate predictions. We also discuss how such models can perform competitively on downstream tasks such as downscaling (super-resolution), identifying conditions conducive to the occurrence of wildfires, and predicting consequential meteorological phenomena across various spatiotemporal scales such as hurricanes and atmospheric rivers. In particular, we examine current AI methodologies and contend they have matured enough to design and implement a weather foundation model.

Climate Impact Modelling Framework

Sep 27, 2022

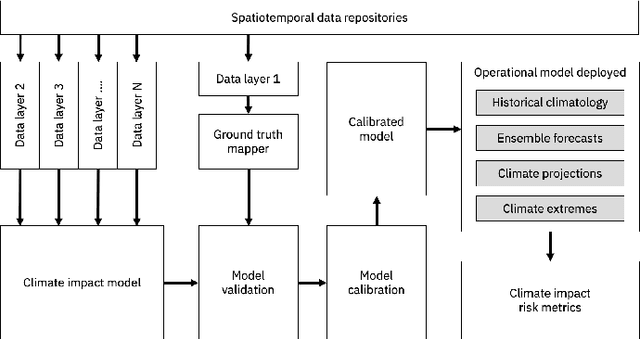

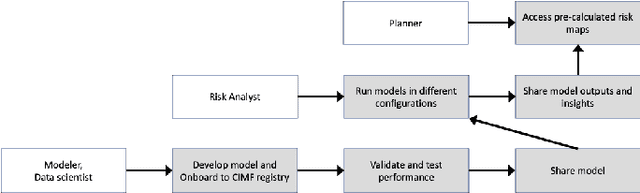

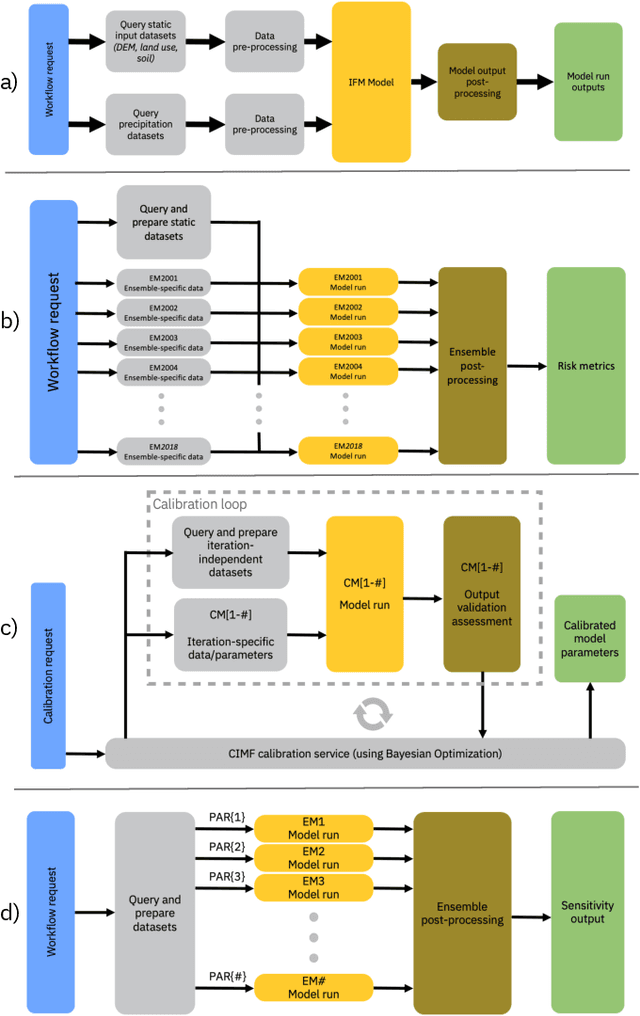

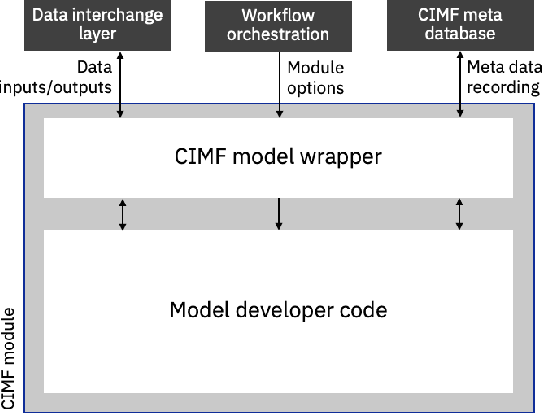

Abstract: The application of models to assess the risk of the physical impacts of weather and climate and their subsequent consequences for society and business is of the utmost importance in our changing climate. The operation of such models is historically bespoke and constrained to specific compute infrastructure, driving datasets and predefined configurations. These constraints introduce challenges with scaling model runs and putting the models in the hands of interested users. Here we present a cloud-based modular framework for the deployment and operation of geospatial models, initially applied to climate impacts. The Climate Impact Modelling Framework (CIMF) enables the deployment of modular workflows in a dynamic and flexible manner. Users can specify workflow components in a streamlined manner; these components can then be easily organised into different configurations to assess risk in different ways and at different scales. This also enables different models (physical simulation or machine learning models) and workflows to be connected to produce combined risk assessments. Flood modelling is used as an end-to-end example to demonstrate the operation of CIMF.

Surrogate Ensemble Forecasting for Dynamic Climate Impact Models

Apr 12, 2022

Abstract: As acute climate change impacts weather and climate variability, there is increased demand for robust climate impact model predictions from which forecasts of the impacts can be derived. The quality of those predictions is limited by the climate drivers for the impact models, which are nonlinear and highly variable in nature. One way to estimate the uncertainty of the model drivers is to assess the distribution of ensembles of climate forecasts. To capture the uncertainty in the impact model outputs associated with the distribution of the input climate forecasts, each individual forecast ensemble member has to be propagated through the physical model, which can imply high computational costs. It is therefore desirable to train a surrogate model which allows predictions of the uncertainties of the output distribution in ensembles of climate drivers, thus reducing resource demands. This study considers a climate-driven disease model, the Liverpool Malaria Model (LMM), which predicts the malaria transmission coefficient R0. Seasonal ensemble forecasts of temperature and precipitation with a 6-month horizon are propagated through the model, predicting the distribution of transmission time series. The input and output data are used to train surrogate models in the form of a Random Forest Quantile Regression (RFQR) model and a Bayesian Long Short-Term Memory (BLSTM) neural network. Comparing the predictive performance, the RFQR better predicts the time series of the individual ensemble members, while the BLSTM offers a direct way to construct a combined distribution for all ensemble members. An important element of the proposed methodology is that non-normal distributions of climate forecast ensembles can be captured naturally by a Bayesian formulation.
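As an illustration of the brute-force step that a surrogate is meant to replace, the sketch below propagates every member of a synthetic forecast ensemble through a toy impact model and summarises the output spread per time step. The function `toy_impact_model`, its coefficients, and the synthetic ensemble are hypothetical stand-ins chosen for this example; they are not the LMM, the RFQR, or the BLSTM described in the abstract.

```python
import random
from statistics import quantiles


def toy_impact_model(temperature, precipitation):
    """Hypothetical nonlinear impact model (NOT the Liverpool Malaria Model)."""
    # Response peaks near 25 degrees C and saturates with rainfall.
    thermal = max(0.0, 1.0 - ((temperature - 25.0) / 10.0) ** 2)
    moisture = precipitation / (precipitation + 50.0)
    return 3.0 * thermal * moisture


def propagate_ensemble(members):
    """Run each member, a list of (temperature, precipitation) steps, through the model."""
    return [[toy_impact_model(t, p) for t, p in member] for member in members]


def output_quantiles(outputs, n=4):
    """Cut points of the output distribution across members, one list per time step."""
    steps = len(outputs[0])
    return [quantiles([member[i] for member in outputs], n=n) for i in range(steps)]


# Synthetic ensemble: 20 members, 6 monthly steps (assumed values, for illustration only).
rng = random.Random(0)
members = [
    [(rng.gauss(24.0, 3.0), max(0.0, rng.gauss(80.0, 30.0))) for _ in range(6)]
    for _ in range(20)
]
outputs = propagate_ensemble(members)
bands = output_quantiles(outputs)  # quartile cut points for each of the 6 steps
```

The cost of this loop grows with the number of members times the cost of one model run, which is the expense a trained surrogate would aim to avoid.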

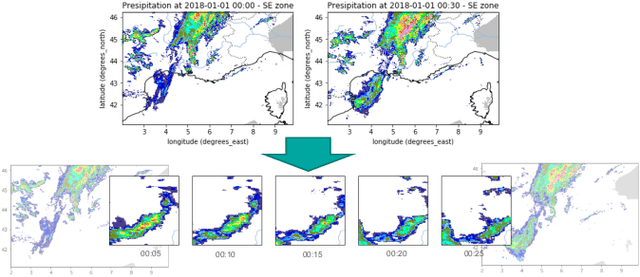

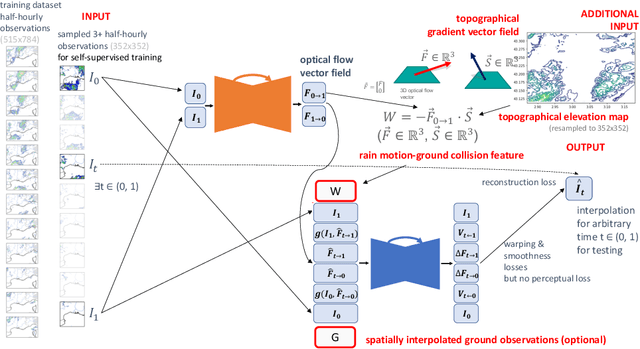

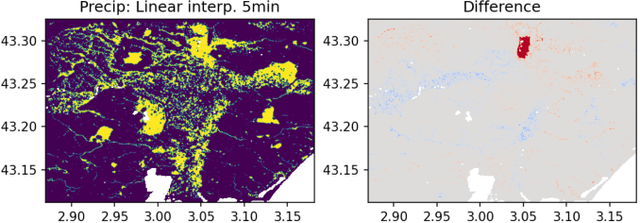

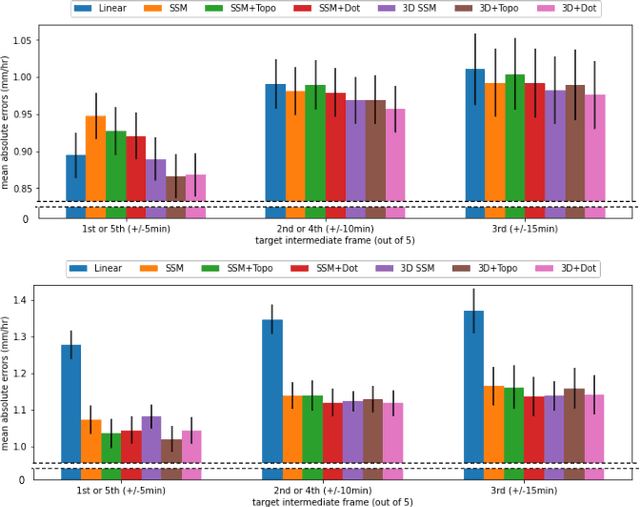

Deep Temporal Interpolation of Radar-based Precipitation

Mar 01, 2022

Abstract: When providing the boundary conditions for hydrological flood models and estimating the associated risk, interpolating precipitation at very high temporal resolutions (e.g. 5 minutes) is essential to avoid missing the cause of flooding in local regions. In this paper, we study optical flow-based interpolation of globally available weather radar images from satellites. The proposed approach uses deep neural networks for the interpolation of multiple video frames, while terrain information is combined with temporally coarse-grained precipitation radar observations as inputs for self-supervised training. An experiment with the Meteonet radar precipitation dataset for flood risk simulation in Aude, a department in Southern France (2018), demonstrated the advantage of the proposed method over a linear interpolation baseline, with up to 20% error reduction.
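For reference, a linear interpolation baseline of the kind mentioned in the abstract can be sketched as a per-pixel blend of two consecutive radar frames. The function name `linear_interpolate`, the nested-list frame layout, and the 15-minute input spacing are assumptions made for this example, not details taken from the paper.

```python
def linear_interpolate(frame_a, frame_b, n_intermediate):
    """Return n_intermediate frames blended per pixel between frame_a and frame_b."""
    frames = []
    for k in range(1, n_intermediate + 1):
        w = k / (n_intermediate + 1)  # blend weight, 0 < w < 1
        frames.append([
            [(1.0 - w) * a + w * b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(frame_a, frame_b)
        ])
    return frames


# Two frames assumed to be 15 minutes apart; a rain cell moves from the left pixel to the right.
frame_t0 = [[3.0, 0.0]]
frame_t15 = [[0.0, 3.0]]

# Two intermediate frames give an assumed 5-minute spacing.
intermediate = linear_interpolate(frame_t0, frame_t15, 2)
```

In this toy case the rain cell fades out on the left and fades in on the right instead of moving, which is the kind of artefact that motion-aware (optical flow-based) interpolation is intended to reduce.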
