Campbell Watson

Prithvi-EO-2.0: A Versatile Multi-Temporal Foundation Model for Earth Observation Applications

Dec 03, 2024

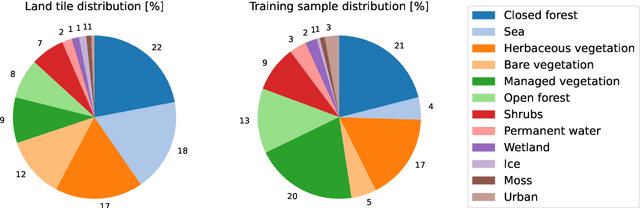

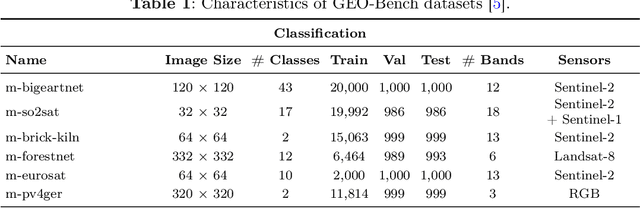

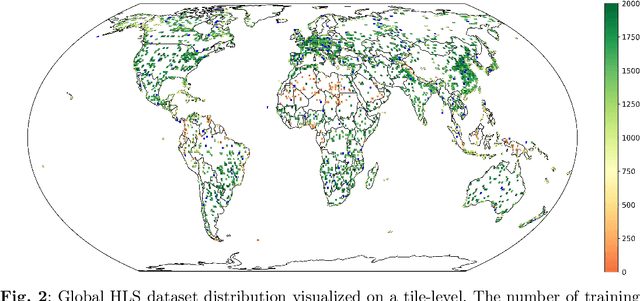

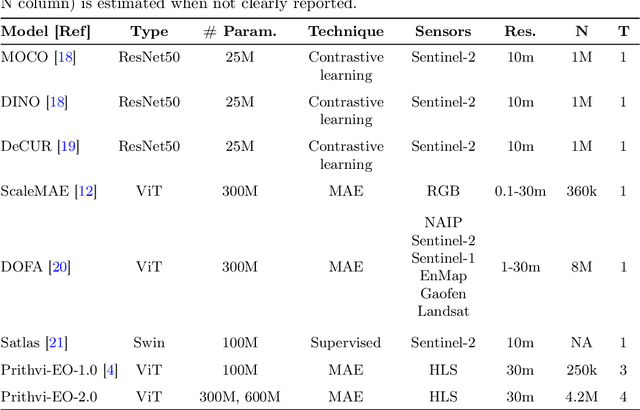

Abstract: This technical report presents Prithvi-EO-2.0, a new geospatial foundation model that offers significant improvements over its predecessor, Prithvi-EO-1.0. Trained on 4.2M global time series samples from NASA's Harmonized Landsat and Sentinel-2 data archive at 30m resolution, the new 300M and 600M parameter models incorporate temporal and location embeddings for enhanced performance across various geospatial tasks. Through extensive benchmarking with GEO-Bench, the 600M version outperforms the previous Prithvi-EO model by 8% across a range of tasks. It also outperforms six other geospatial foundation models when benchmarked on remote sensing tasks from different domains and resolutions (i.e., from 0.1m to 15m). The results demonstrate the versatility of the model in both classical earth observation and high-resolution applications. Early involvement of end-users and subject matter experts (SMEs) was among the key factors that contributed to the project's success. In particular, SME involvement allowed for constant feedback on model and dataset design, as well as successful customization for diverse SME-led applications in disaster response, land use and crop mapping, and ecosystem dynamics monitoring. Prithvi-EO-2.0 is available on Hugging Face and IBM terratorch, with additional resources on GitHub. The project exemplifies the Trusted Open Science approach embraced by all involved organizations.

Multi-modal graph neural networks for localized off-grid weather forecasting

Oct 16, 2024

Abstract: Urgent applications like wildfire management and renewable energy generation require precise, localized weather forecasts near the Earth's surface. However, weather forecast products from machine learning or numerical weather models are currently generated on a global regular grid, on which a naive interpolation cannot accurately reflect fine-grained weather patterns close to the ground. In this work, we train a heterogeneous graph neural network (GNN) end-to-end to downscale gridded forecasts to off-grid locations of interest. This multi-modal GNN takes advantage of local historical weather observations (e.g., wind, temperature) to correct the gridded weather forecast at different lead times towards locally accurate forecasts. Each data modality is modeled as a different type of node in the graph. Using message passing, the node at the prediction location aggregates information from its heterogeneous neighbor nodes. Experiments using weather stations across the Northeastern United States show that our model outperforms a range of data-driven and non-data-driven off-grid forecasting methods. Our approach demonstrates how the gap between global large-scale weather models and locally accurate predictions can be bridged to inform localized decision-making.

Evaluating the transferability potential of deep learning models for climate downscaling

Jul 17, 2024

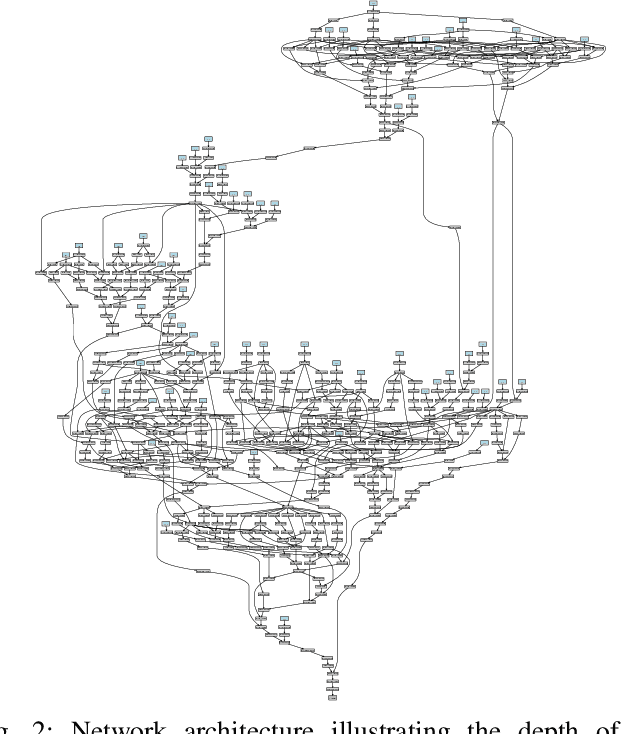

Abstract: Climate downscaling, the process of generating high-resolution climate data from low-resolution simulations, is essential for understanding and adapting to climate change at regional and local scales. Deep learning approaches have proven useful in tackling this problem. However, existing studies usually focus on training models for one specific task, location and variable, and are therefore limited in their generalizability and transferability. In this paper, we evaluate the efficacy of training deep learning downscaling models on multiple diverse climate datasets to learn more robust and transferable representations. We evaluate the zero-shot transferability of three architecture types: CNNs, Fourier Neural Operators (FNOs), and vision Transformers (ViTs). We assess the spatial, variable, and product transferability of downscaling models experimentally, to understand the generalizability of these different architecture types.

Fine-tuning of Geospatial Foundation Models for Aboveground Biomass Estimation

Jun 28, 2024

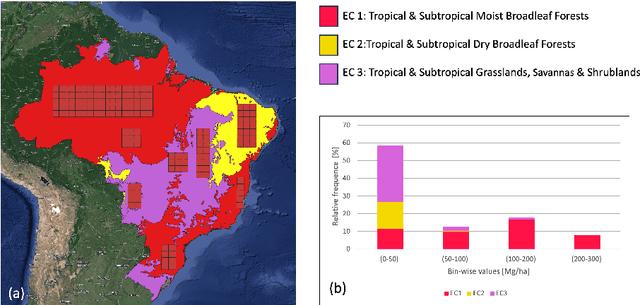

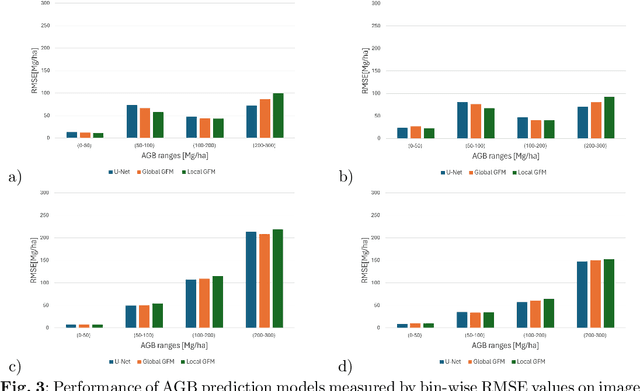

Abstract: Global vegetation structure mapping is critical for understanding the global carbon cycle and maximizing the efficacy of nature-based carbon sequestration initiatives. Moreover, vegetation structure mapping can help reduce the impacts of climate change by, for example, guiding actions to improve water security, increase biodiversity and reduce flood risk. Global satellite measurements provide an important set of observations for monitoring and managing deforestation and degradation of existing forests, natural forest regeneration, reforestation, biodiversity restoration, and the implementation of sustainable agricultural practices. In this paper, we explore the effectiveness of fine-tuning a geospatial foundation model to estimate above-ground biomass (AGB) using space-borne data collected across different eco-regions in Brazil. The fine-tuned model architecture consisted of a Swin-B transformer as the encoder (i.e., backbone) and a single convolutional layer for the decoder head. All results were compared to a U-Net, which was trained as the baseline model. Experimental results of this sparse-label prediction task demonstrate that the fine-tuned geospatial foundation model with a frozen encoder has comparable performance to a U-Net trained from scratch. This is despite the fine-tuned model having 13 times fewer parameters requiring optimization, which saves both time and compute resources. Further, we explore the transfer-learning capabilities of the geospatial foundation models by fine-tuning on satellite imagery with sparse labels from different eco-regions in Brazil.

AI Foundation Models for Weather and Climate: Applications, Design, and Implementation

Sep 20, 2023

Abstract: Machine learning and deep learning methods have been widely explored in understanding the chaotic behavior of the atmosphere and furthering weather forecasting. There has been increasing interest from technology companies, government institutions, and meteorological agencies in building digital twins of the Earth. Recent approaches using transformers, physics-informed machine learning, and graph neural networks have demonstrated state-of-the-art performance on relatively narrow spatiotemporal scales and specific tasks. With the recent success of generative artificial intelligence (AI) using pre-trained transformers for language modeling and vision with prompt engineering and fine-tuning, we are now moving towards generalizable AI. In particular, we are witnessing the rise of AI foundation models that can perform competitively on multiple domain-specific downstream tasks. Despite this progress, we are still in the nascent stages of a generalizable AI model for global Earth system models, regional climate models, and mesoscale weather models. Here, we review current state-of-the-art AI approaches, primarily from transformer and operator learning literature in the context of meteorology. We provide our perspective on criteria for success towards a family of foundation models for nowcasting and forecasting weather and climate predictions. We also discuss how such models can perform competitively on downstream tasks such as downscaling (super-resolution), identifying conditions conducive to the occurrence of wildfires, and predicting consequential meteorological phenomena across various spatiotemporal scales such as hurricanes and atmospheric rivers. In particular, we examine current AI methodologies and contend they have matured enough to design and implement a weather foundation model.

Generating physically-consistent high-resolution climate data with hard-constrained neural networks

Aug 08, 2022

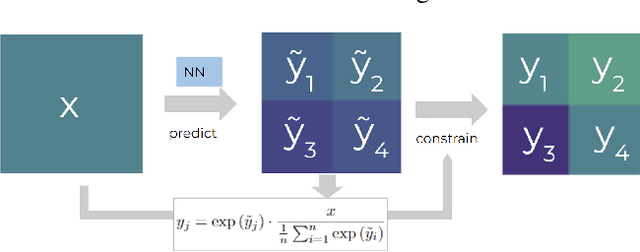

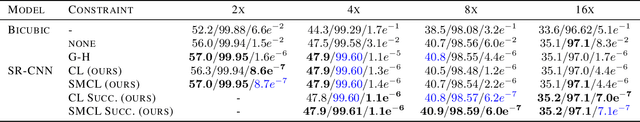

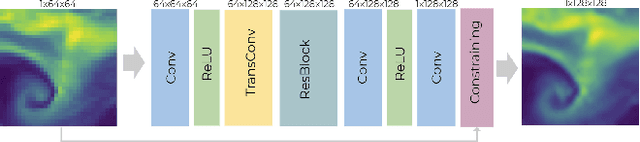

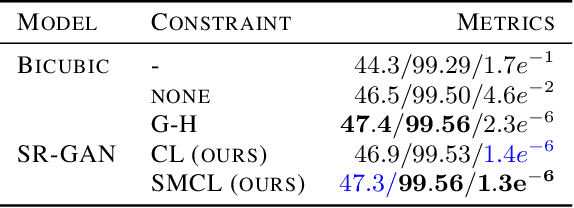

Abstract: The availability of reliable, high-resolution climate and weather data is important to inform long-term decisions on climate adaptation and mitigation and to guide rapid responses to extreme events. Forecasting models are limited by computational costs and therefore often predict quantities at a coarse spatial resolution. Statistical downscaling can provide an efficient method of upsampling low-resolution data. In this field, deep learning has been applied successfully, often using methods from the super-resolution domain in computer vision. Despite often achieving visually compelling results, such models often violate conservation laws when predicting physical variables. In order to conserve important physical quantities, we develop methods that guarantee physical constraints are satisfied by a deep downscaling model while also increasing its performance according to traditional metrics. We introduce two ways of constraining the network: a renormalization layer added to the end of the neural network and a successive approach that scales with increasing upsampling factors. We show the applicability of our methods across different popular architectures and upsampling factors using ERA5 reanalysis data.
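The renormalization idea can be illustrated with a short sketch. The abstract does not specify the layer, so the version below is an assumption: it treats the constraint as "the mean of each high-resolution block must equal the matching low-resolution pixel" and enforces it by multiplicative rescaling, which only makes sense for strictly positive fields. The function name `renormalize` and the random inputs are hypothetical.

```python
import numpy as np

def renormalize(sr, lr, factor):
    """Rescale each factor-by-factor block of `sr` so that its mean
    equals the corresponding pixel of `lr` (assumed constraint)."""
    h, w = lr.shape
    # View the high-resolution field as an (h, w) grid of small blocks.
    blocks = sr.reshape(h, factor, w, factor)
    block_mean = blocks.mean(axis=(1, 3), keepdims=True)
    # Multiplicative correction; requires block_mean != 0.
    scale = lr[:, None, :, None] / block_mean
    return (blocks * scale).reshape(h * factor, w * factor)

# Hypothetical data: a 4x4 coarse field and an unconstrained 8x8 prediction.
rng = np.random.default_rng(0)
lr = rng.uniform(0.5, 2.0, size=(4, 4))
sr = rng.uniform(0.1, 3.0, size=(8, 8))
constrained = renormalize(sr, lr, factor=2)
```

Because the correction is applied as the final operation, the constraint holds by construction for any upstream network output, rather than being encouraged through a penalty term in the loss.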

Wildfire risk forecast: An optimizable fire danger index

Mar 28, 2022

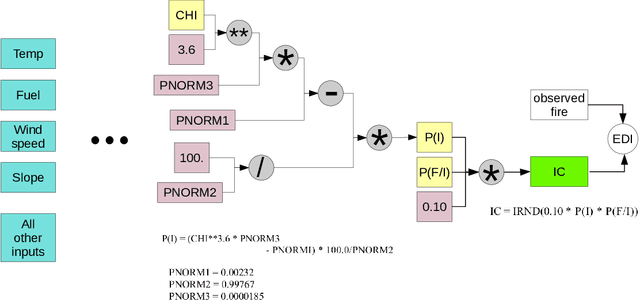

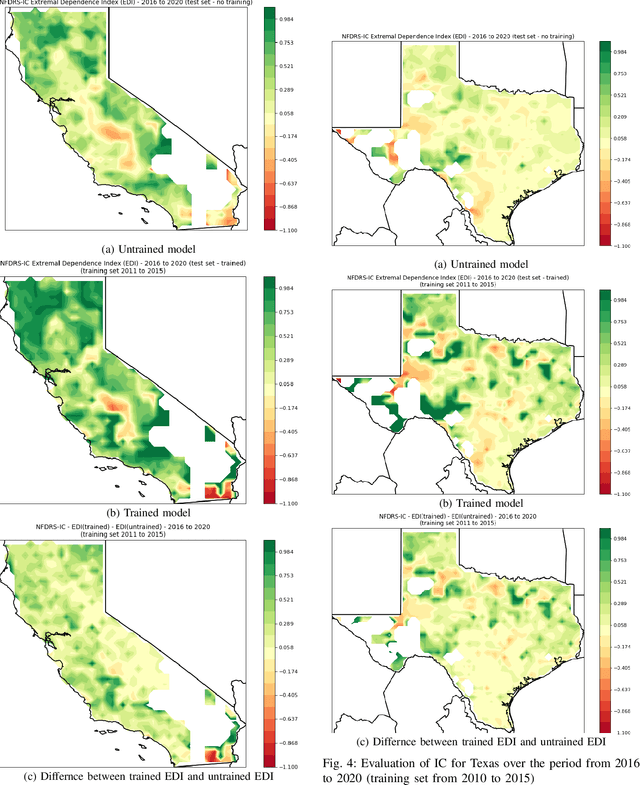

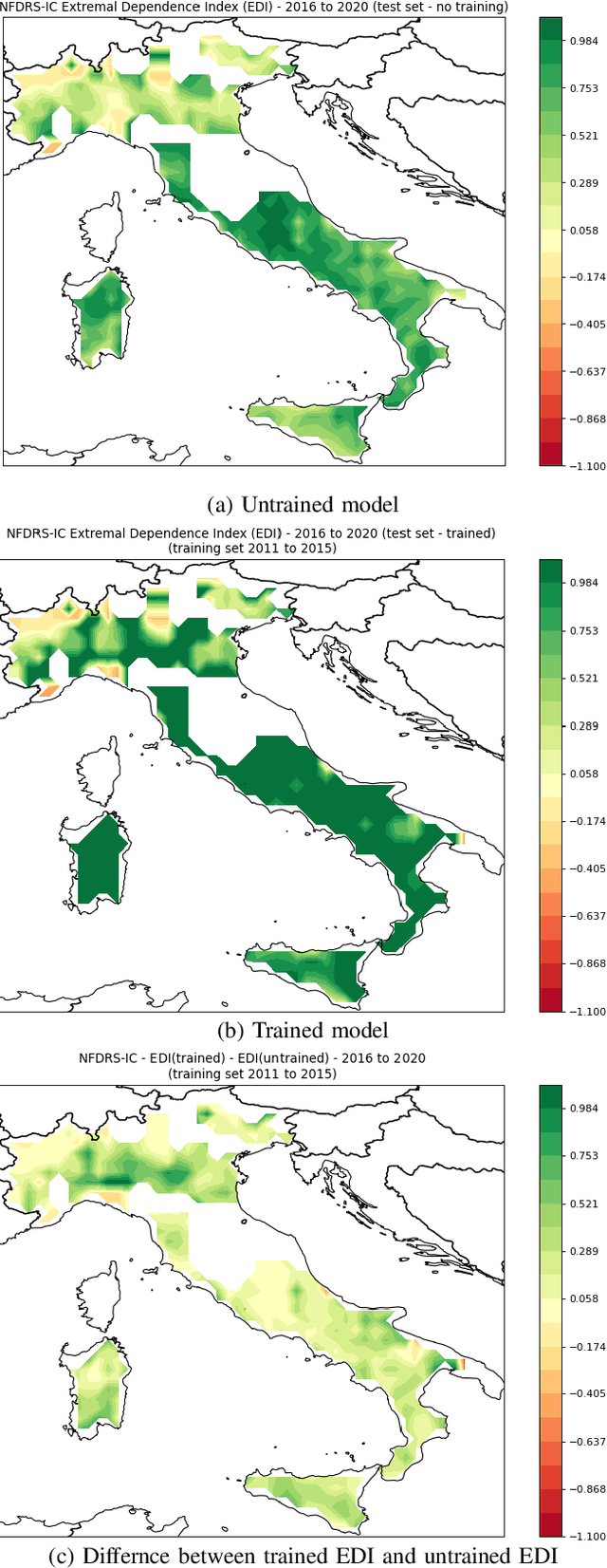

Abstract: Wildfire events have caused severe losses in many places around the world and are expected to increase with climate change. Over the years, many technologies have been developed to identify fire events early on and to simulate fire behavior once they have started. Another particularly helpful technology is fire risk indices, which use weather forcing to make advance predictions of the risk of fire. Predictions of fire risk indices can be used, for instance, to allocate resources in places with high risk. These indices have been developed over the years as empirical models with parameters that were estimated in lab experiments and field tests. These parameters, however, may not fit well in all places where these models are used. In this paper we propose a novel implementation of one index (NFDRS IC) as a differentiable function in which one can optimize its internal parameters via gradient descent. We leverage existing machine learning frameworks (PyTorch) to construct our model. This approach has two benefits: (1) the NFDRS IC parameters can be improved for each region using actual observed fire events, and (2) the internal variables remain intact for interpretation by specialists, instead of the meaningless hidden layers of traditional neural networks. In this paper we evaluate our strategy with actual fire events for locations in the USA and Europe.
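As a rough illustration of fitting an interpretable index parameter by gradient descent, the sketch below uses a deliberately simple, hypothetical one-parameter index, not the NFDRS IC formula. It also swaps PyTorch autograd for a hand-derived gradient in NumPy to stay dependency-light. The names `toy_index` and `fit_k`, the input `x`, and the synthetic targets are all assumptions.

```python
import numpy as np

def toy_index(x, k):
    """Hypothetical danger index in [0, 1); NOT the NFDRS IC formula.
    `x` is an assumed non-negative weather-derived driver."""
    return 1.0 - np.exp(-k * x)

def fit_k(x, observed, k0=0.1, lr=0.5, steps=2000):
    """Fit the single parameter k by gradient descent on the mean squared error."""
    k = k0
    for _ in range(steps):
        residual = toy_index(x, k) - observed
        # d(index)/dk = x * exp(-k * x), so the MSE gradient is:
        grad = np.mean(2.0 * residual * x * np.exp(-k * x))
        k -= lr * grad
    return k

# Synthetic "observations" generated with k = 0.8, then recovered from k0 = 0.1.
x = np.linspace(0.0, 3.0, 200)
observed = toy_index(x, 0.8)
k_fit = fit_k(x, observed)
```

The fitted quantity is still the named parameter `k` of the index, so it keeps its meaning for a domain specialist, which is the interpretability benefit the abstract describes.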

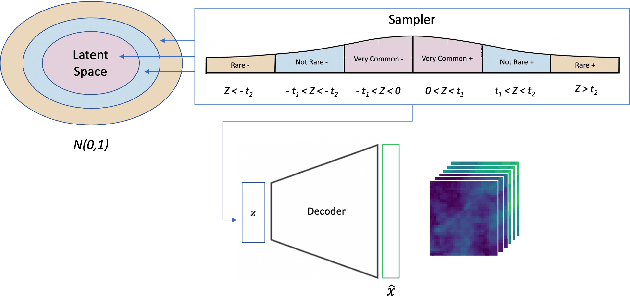

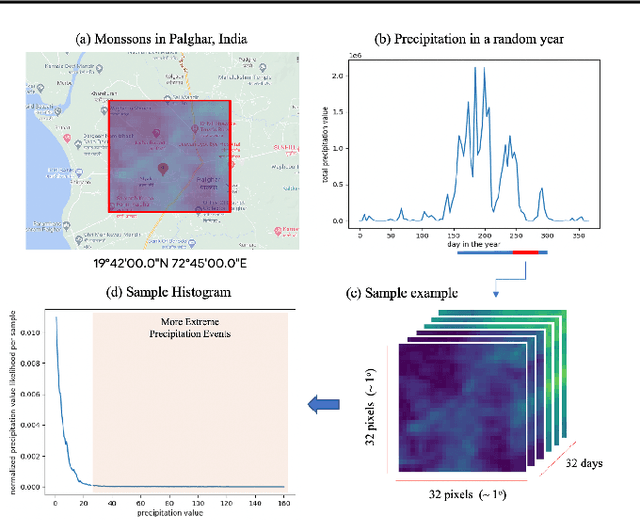

Controlling Weather Field Synthesis Using Variational Autoencoders

Jul 30, 2021

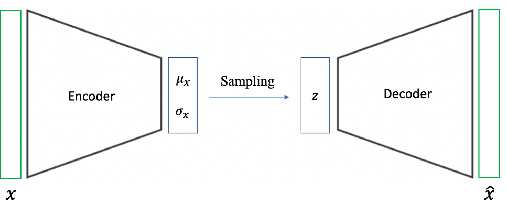

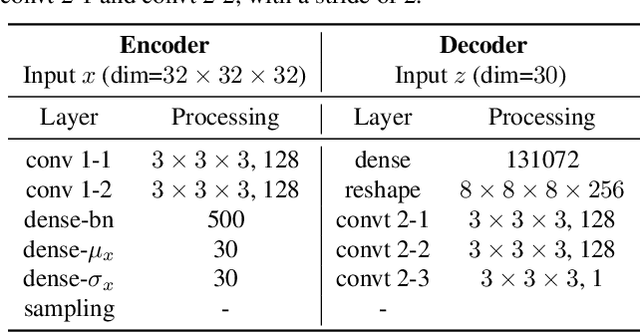

Abstract: One of the consequences of climate change is an observed increase in the frequency of extreme climate events. That poses a challenge for weather forecast and generation algorithms, which learn from historical data but should embed an often uncertain bias to create correct scenarios. This paper investigates how mapping climate data to a known distribution using variational autoencoders might help explore such biases and control the synthesis of weather fields towards more extreme climate scenarios. We experimented using a monsoon-affected precipitation dataset from southwest India, which should give a roughly stable pattern of rainy days and ease our investigation. We report compelling results showing that mapping complex weather data to a known distribution implements an efficient control for weather field synthesis towards more (or less) extreme scenarios.

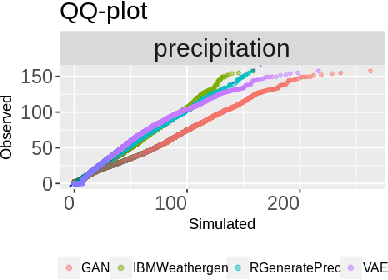

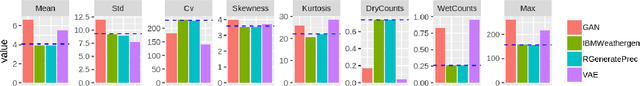

A comparative study of stochastic and deep generative models for multisite precipitation synthesis

Jul 16, 2021

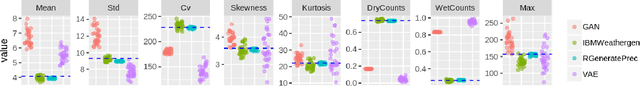

Abstract: Future climate change scenarios are usually hypothesized using simulations from weather generators. However, there are only a few works comparing and evaluating promising deep learning models for weather generation against classical approaches. This study shows preliminary results from such evaluations for the multisite precipitation synthesis task. We compared two open-source weather generators, IBMWeathergen (an extension of the Weathergen library) and RGeneratePrec, and two deep generative models, GAN and VAE, on a variety of metrics. Our preliminary results can serve as a guide for improving the design of deep learning architectures and algorithms for the multisite precipitation synthesis task.

Extreme Precipitation Seasonal Forecast Using a Transformer Neural Network

Jul 14, 2021

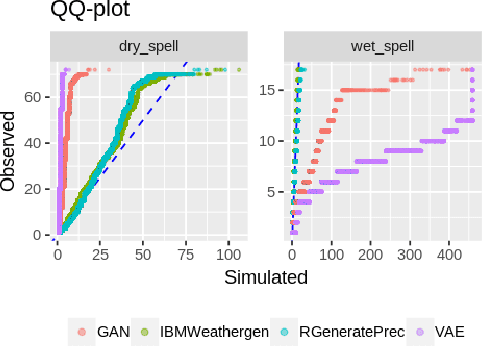

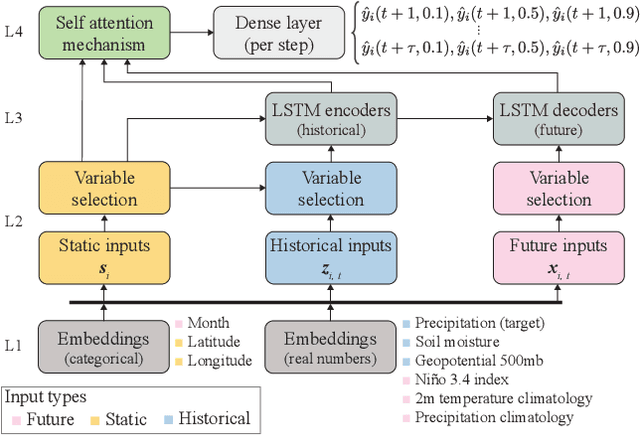

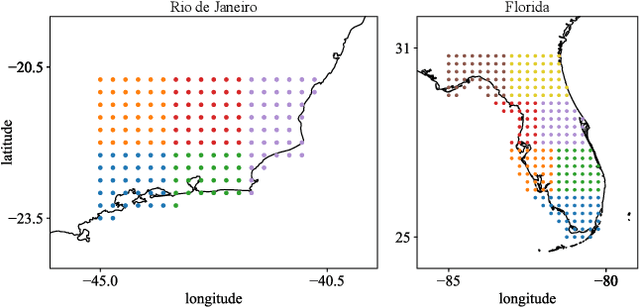

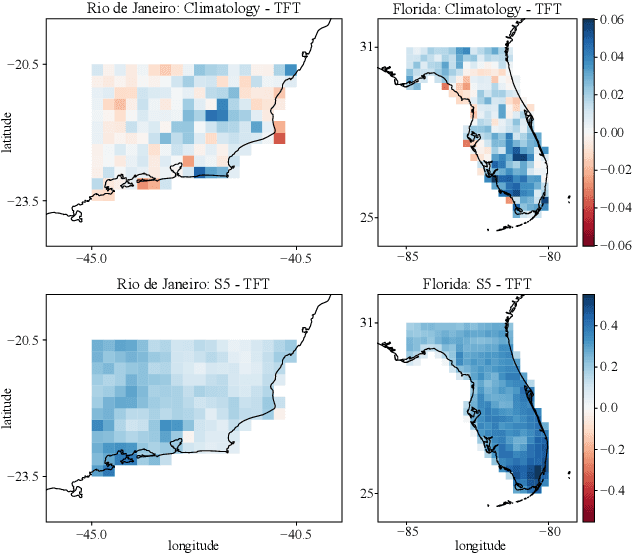

Abstract: An impact of climate change is the increase in frequency and intensity of extreme precipitation events. However, confidently predicting the likelihood of extreme precipitation at seasonal scales remains an outstanding challenge. Here, we present an approach to forecasting the quantiles of the maximum daily precipitation in each week up to six months ahead using the temporal fusion transformer (TFT) model. Through experiments in two regions, we compare TFT predictions with those of two baselines: climatology and a calibrated ECMWF SEAS5 ensemble forecast (S5). Our results show that, in terms of quantile risk at six-month lead time, the TFT predictions significantly outperform those from S5 and show an overall small improvement compared to climatology. The TFT also responds positively to departures from normal, which climatology cannot do.
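The abstract does not define quantile risk. A minimal sketch, assuming the normalized form commonly used to evaluate quantile forecasts (twice the summed pinball loss divided by the summed absolute targets), is given below. The function names and sample values are hypothetical.

```python
import numpy as np

def quantile_loss(y, y_hat, q):
    """Pinball loss: under-prediction is weighted by q, over-prediction by 1 - q."""
    diff = y - y_hat
    return np.maximum(q * diff, (q - 1.0) * diff)

def q_risk(y, y_hat, q):
    """Normalized quantile risk (assumed definition): 2 * sum(loss) / sum(|y|)."""
    return 2.0 * quantile_loss(y, y_hat, q).sum() / np.abs(y).sum()

# Hypothetical weekly maxima and a constant forecast of the 0.9 quantile.
y = np.array([1.0, 2.0, 3.0])
y_hat = np.array([2.0, 2.0, 2.0])
risk_90 = q_risk(y, y_hat, q=0.9)
```

For a high quantile such as 0.9, forecasts that fall below the observed value are penalized nine times more heavily than forecasts that exceed it by the same amount, which suits a metric aimed at extreme precipitation.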
