Paula Harder

Evaluating the transferability potential of deep learning models for climate downscaling

Jul 17, 2024

Abstract:Climate downscaling, the process of generating high-resolution climate data from low-resolution simulations, is essential for understanding and adapting to climate change at regional and local scales. Deep learning approaches have proven useful in tackling this problem. However, existing studies usually focus on training models for one specific task, location and variable, which are therefore limited in their generalizability and transferability. In this paper, we evaluate the efficacy of training deep learning downscaling models on multiple diverse climate datasets to learn more robust and transferable representations. We evaluate the zero-shot transferability of several architectures: CNNs, Fourier Neural Operators (FNOs), and vision Transformers (ViTs). We assess the spatial, variable, and product transferability of downscaling models experimentally, to understand the generalizability of these different architecture types.

Climate Variable Downscaling with Conditional Normalizing Flows

May 31, 2024

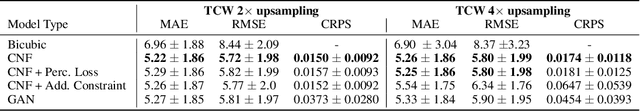

Abstract:Predictions of global climate models typically operate on coarse spatial scales due to the large computational costs of climate simulations. This has led to a considerable interest in methods for statistical downscaling, a similar process to super-resolution in the computer vision context, to provide more local and regional climate information. In this work, we apply conditional normalizing flows to the task of climate variable downscaling. We showcase its successful performance on an ERA5 water content dataset for different upsampling factors. Additionally, we show that the method allows us to assess the predictive uncertainty in terms of standard deviation from the fitted conditional distribution mean.

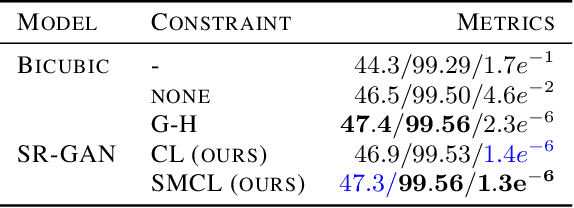

Multi-variable Hard Physical Constraints for Climate Model Downscaling

Aug 02, 2023

Abstract:Global Climate Models (GCMs) are the primary tool to simulate climate evolution and assess the impacts of climate change. However, they often operate at a coarse spatial resolution that limits their accuracy in reproducing local-scale phenomena. Statistical downscaling methods leveraging deep learning offer a solution to this problem by approximating local-scale climate fields from coarse variables, thus enabling regional GCM projections. Typically, climate fields of different variables of interest are downscaled independently, resulting in violations of fundamental physical properties across interconnected variables. This study investigates the scope of this problem and, through an application on temperature, lays the foundation for a framework introducing multi-variable hard constraints that guarantees physical relationships between groups of downscaled climate variables.

Fourier Neural Operators for Arbitrary Resolution Climate Data Downscaling

May 23, 2023

Abstract:Climate simulations are essential in guiding our understanding of climate change and responding to its effects. However, it is computationally expensive to resolve complex climate processes at high spatial resolution. As one way to speed up climate simulations, neural networks have been used to downscale climate variables from fast-running low-resolution simulations, but high-resolution training data are often unobtainable or scarce, greatly limiting accuracy. In this work, we propose a downscaling method based on the Fourier neural operator. It trains with data of a small upsampling factor and then can zero-shot downscale its input to arbitrary unseen high resolution. Evaluated both on ERA5 climate model data and on the Navier-Stokes equation solution data, our downscaling model significantly outperforms state-of-the-art convolutional and generative adversarial downscaling models, both in standard single-resolution downscaling and in zero-shot generalization to higher upsampling factors. Furthermore, we show that our method also outperforms state-of-the-art data-driven partial differential equation solvers on Navier-Stokes equations. Overall, our work bridges the gap between simulation of a physical process and interpolation of low-resolution output, showing that it is possible to combine both approaches and significantly improve upon each other.

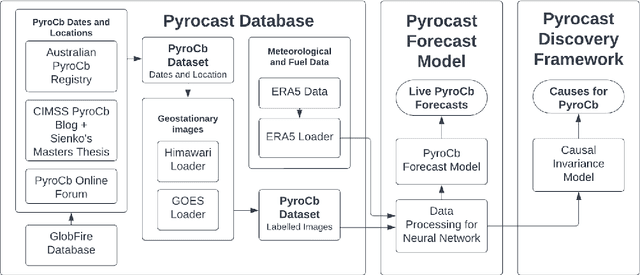

Pyrocast: a Machine Learning Pipeline to Forecast Pyrocumulonimbus (PyroCb) Clouds

Nov 22, 2022

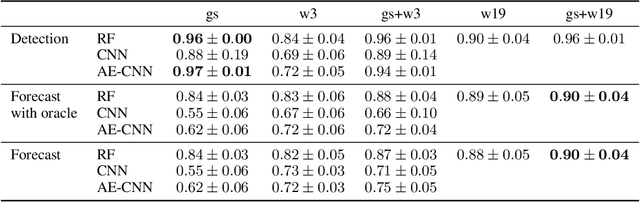

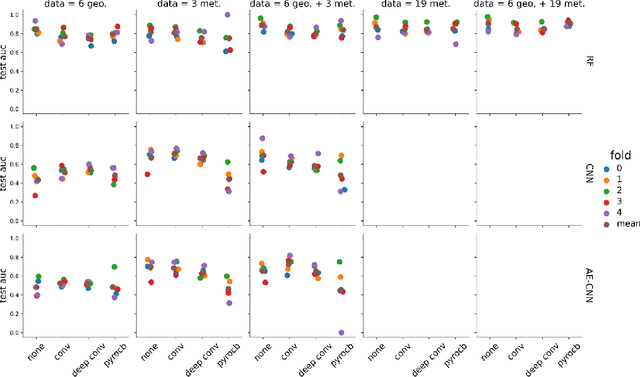

Abstract:Pyrocumulonimbus (pyroCb) clouds are storm clouds generated by extreme wildfires. PyroCbs are associated with unpredictable, and therefore dangerous, wildfire spread. They can also inject smoke particles and trace gases into the upper troposphere and lower stratosphere, affecting the Earth's climate. As global temperatures increase, these previously rare events are becoming more common. Being able to predict which fires are likely to generate pyroCb is therefore key to climate adaptation in wildfire-prone areas. This paper introduces Pyrocast, a pipeline for pyroCb analysis and forecasting. The pipeline's first two components, a pyroCb database and a pyroCb forecast model, are presented. The database brings together geostationary imagery and environmental data for over 148 pyroCb events across North America, Australia, and Russia between 2018 and 2022. Random Forests, Convolutional Neural Networks (CNNs), and CNNs pretrained with Auto-Encoders were tested to predict the generation of pyroCb for a given fire six hours in advance. The best model predicted pyroCb with an AUC of $0.90 \pm 0.04$.

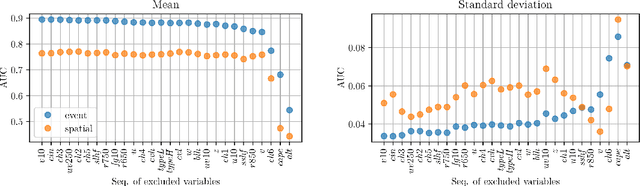

Identifying the Causes of Pyrocumulonimbus (PyroCb)

Nov 18, 2022

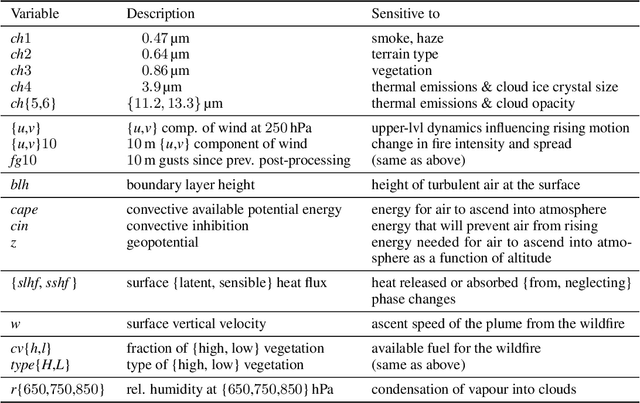

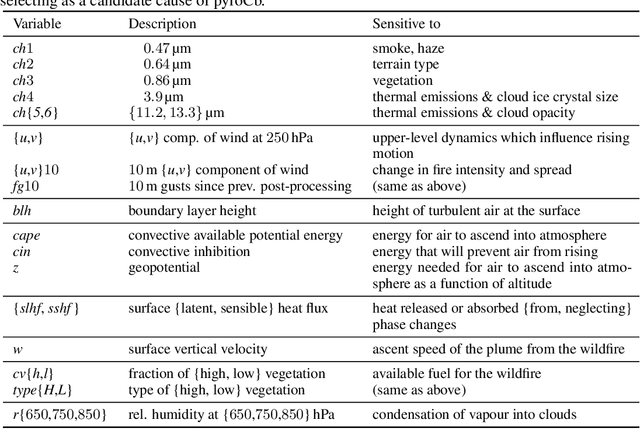

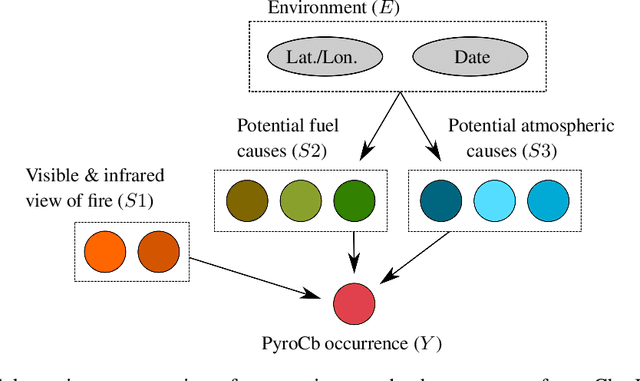

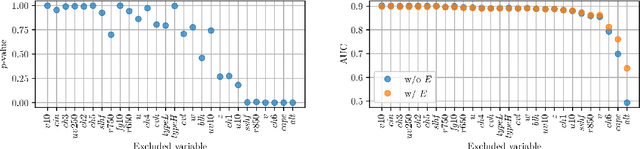

Abstract:A first causal discovery analysis from observational data of pyroCb (storm clouds generated from extreme wildfires) is presented. Invariant Causal Prediction was used to develop tools to understand the causal drivers of pyroCb formation. This includes a conditional independence test for testing $Y$ conditionally independent of $E$ given $X$ for binary variable $Y$ and multivariate, continuous variables $X$ and $E$, and a greedy-ICP search algorithm that relies on fewer conditional independence tests to obtain a smaller, more manageable set of causal predictors. With these tools, we identified a subset of seven causal predictors which are plausible when contrasted with domain knowledge: surface sensible heat flux, relative humidity at $850$ hPa, a component of wind at $250$ hPa, $13.3$ micro-meters, thermal emissions, convective available potential energy, and altitude.

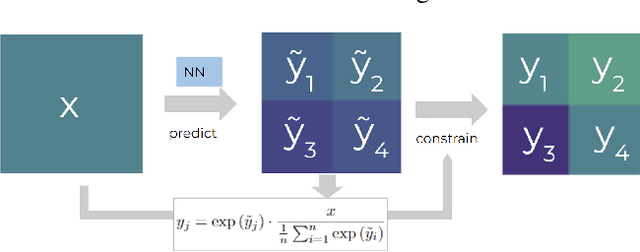

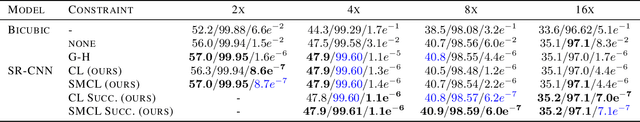

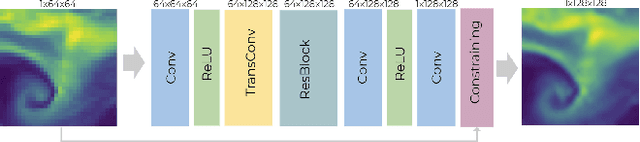

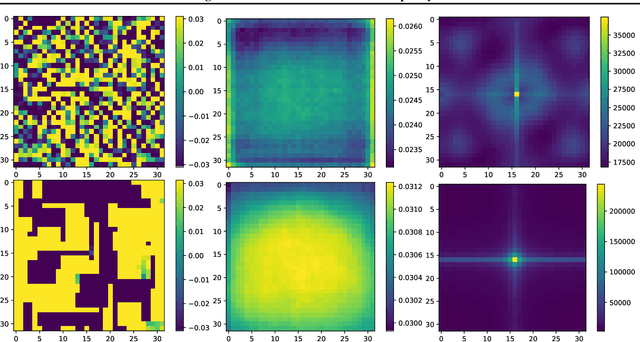

Generating physically-consistent high-resolution climate data with hard-constrained neural networks

Aug 08, 2022

Abstract:The availability of reliable, high-resolution climate and weather data is important to inform long-term decisions on climate adaptation and mitigation and to guide rapid responses to extreme events. Forecasting models are limited by computational costs and therefore often predict quantities at a coarse spatial resolution. Statistical downscaling can provide an efficient method of upsampling low-resolution data. In this field, deep learning has been applied successfully, often using methods from the super-resolution domain in computer vision. Despite often achieving visually compelling results, such models often violate conservation laws when predicting physical variables. In order to conserve important physical quantities, we develop methods that guarantee physical constraints are satisfied by a deep downscaling model while also increasing its performance according to traditional metrics. We introduce two ways of constraining the network: a renormalization layer added to the end of the neural network and a successive approach that scales with increasing upsampling factors. We show the applicability of our methods across different popular architectures and upsampling factors using ERA5 reanalysis data.
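The renormalization idea in this abstract can be illustrated with a minimal NumPy sketch. It is not the paper's implementation: it assumes the constraint is that each block of high-resolution pixels must average to the matching low-resolution pixel, and that the raw predictions are strictly positive so the rescaling is well defined. The function name `renormalize` and its parameters are hypothetical.

```python
import numpy as np

def renormalize(pred_hr, lr, factor):
    """Rescale each factor x factor block of pred_hr so that its mean
    equals the corresponding pixel of lr (assumed constraint).

    pred_hr: (h * factor, w * factor) raw high-resolution prediction, assumed > 0
    lr:      (h, w) low-resolution input field
    """
    h, w = lr.shape
    # View the prediction as an (h, w) grid of factor x factor blocks.
    blocks = pred_hr.reshape(h, factor, w, factor)
    block_means = blocks.mean(axis=(1, 3))   # shape (h, w)
    scale = lr / block_means                 # per-block correction factor
    # Apply the correction to every pixel of each block.
    return (blocks * scale[:, None, :, None]).reshape(h * factor, w * factor)
```

Because the correction is applied as a final, fixed operation on the output, the constraint holds by construction for any input rather than being encouraged through a loss term.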

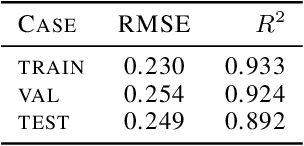

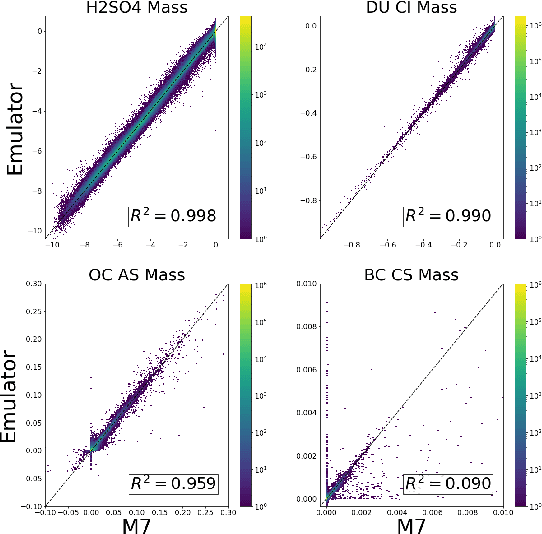

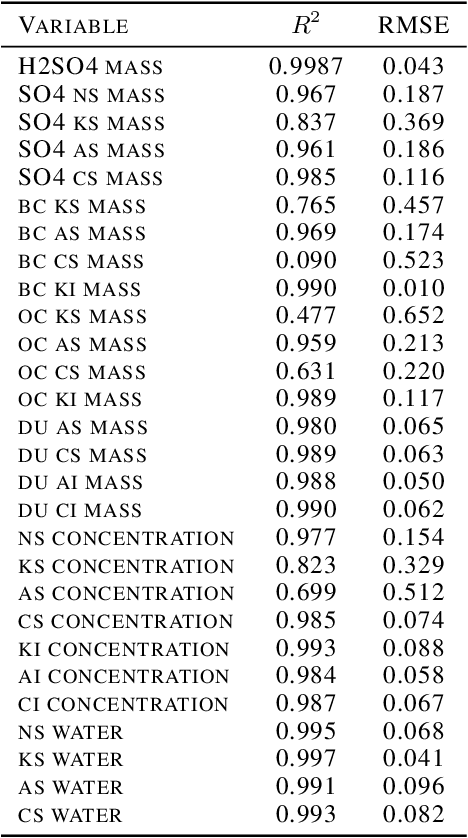

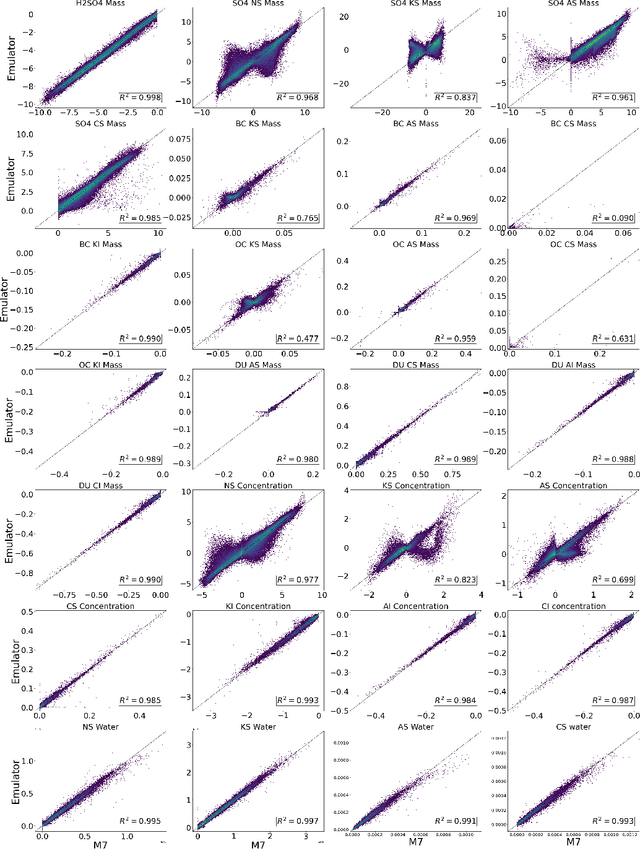

Physics-Informed Learning of Aerosol Microphysics

Jul 24, 2022

Abstract:Aerosol particles play an important role in the climate system by absorbing and scattering radiation and influencing cloud properties. They are also one of the biggest sources of uncertainty for climate modeling. Many climate models do not include aerosols in sufficient detail due to computational constraints. In order to represent key processes, aerosol microphysical properties and processes have to be accounted for. This is done in the ECHAM-HAM global climate aerosol model using the M7 microphysics, but high computational costs make it very expensive to run with finer resolution or for a longer time. We aim to use machine learning to emulate the microphysics model at sufficient accuracy and reduce the computational cost by being fast at inference time. The original M7 model is used to generate data of input-output pairs to train a neural network on it. We are able to learn the variables' tendencies achieving an average $R^2$ score of $77.1\%$. We further explore methods to inform and constrain the neural network with physical knowledge to reduce mass violation and enforce mass positivity. On a GPU we achieve a speed-up of up to over 64x compared to the original model.

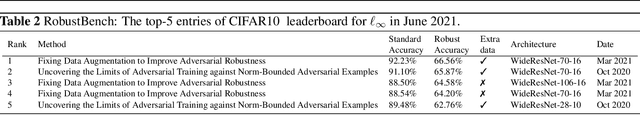

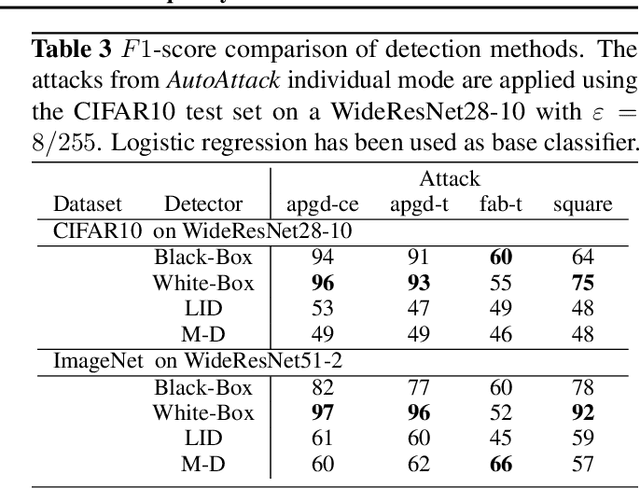

Detecting AutoAttack Perturbations in the Frequency Domain

Nov 16, 2021

Abstract:Recently, adversarial attacks on image classification networks by the AutoAttack (Croce and Hein, 2020b) framework have drawn a lot of attention. While AutoAttack has shown a very high attack success rate, most defense approaches are focusing on network hardening and robustness enhancements, like adversarial training. This way, the currently best-reported method can withstand about 66% of adversarial examples on CIFAR10. In this paper, we investigate the spatial and frequency domain properties of AutoAttack and propose an alternative defense. Instead of hardening a network, we detect adversarial attacks during inference, rejecting manipulated inputs. Based on a rather simple and fast analysis in the frequency domain, we introduce two different detection algorithms. First, a black box detector that only operates on the input images and achieves a detection accuracy of 100% on the AutoAttack CIFAR10 benchmark and 99.3% on ImageNet, for epsilon = 8/255 in both cases. Second, a whitebox detector using an analysis of CNN feature maps, leading to a detection rate of also 100% and 98.7% on the same benchmarks.
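As a rough illustration of what an analysis in the frequency domain of an input image can look like, the sketch below computes a centered log-magnitude Fourier spectrum with NumPy. This is only an assumed feature-extraction step, not the detectors described in the abstract; the function name `log_magnitude_spectrum` is hypothetical, and the classifier that would consume these features is left out because the abstract does not specify it.

```python
import numpy as np

def log_magnitude_spectrum(image):
    """Return the centered log-magnitude 2D Fourier spectrum of a
    single-channel image given as an (H, W) array."""
    # 2D FFT, then shift the zero-frequency term to the array center.
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    # log1p compresses the dynamic range and is safe at zero magnitude.
    return np.log1p(np.abs(spectrum))
```

A detector in this spirit would compare such spectra for clean and perturbed inputs, since small pixel-space perturbations can leave a more visible trace in the higher-frequency bands.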

Emulating Aerosol Microphysics with Machine Learning

Sep 29, 2021

Abstract:Aerosol particles play an important role in the climate system by absorbing and scattering radiation and influencing cloud properties. They are also one of the biggest sources of uncertainty for climate modeling. Many climate models do not include aerosols in sufficient detail. In order to achieve higher accuracy, aerosol microphysical properties and processes have to be accounted for. This is done in the ECHAM-HAM global climate aerosol model using the M7 microphysics model, but increased computational costs make it very expensive to run at higher resolutions or for a longer time. We aim to use machine learning to approximate the microphysics model at sufficient accuracy and reduce the computational cost by being fast at inference time. The original M7 model is used to generate data of input-output pairs to train a neural network on it. By using a special logarithmic transform we are able to learn the variables' tendencies, achieving an average $R^2$ score of $89\%$. On a GPU we achieve a speed-up of 120 compared to the original model.
