Nis Meinert

Is Epistemic Uncertainty Faithfully Represented by Evidential Deep Learning Methods?

Feb 20, 2024

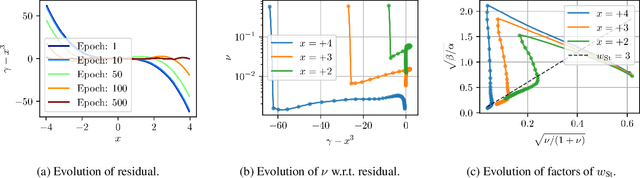

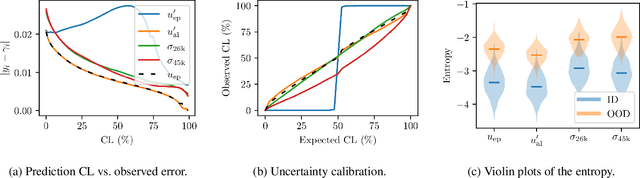

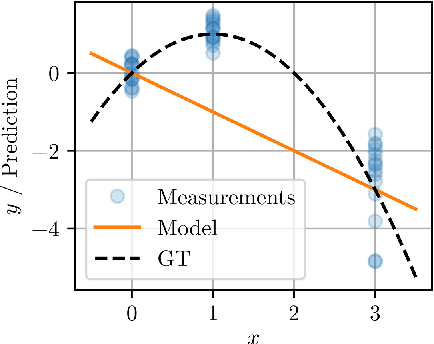

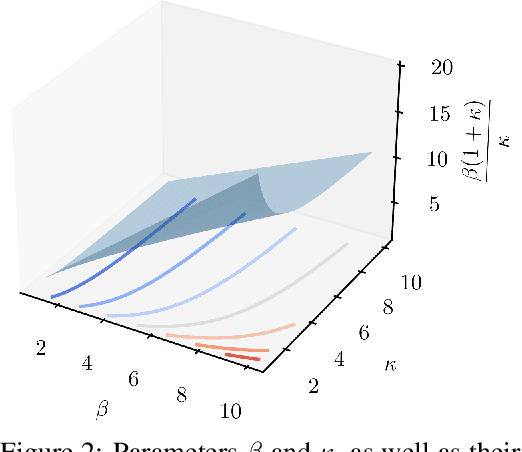

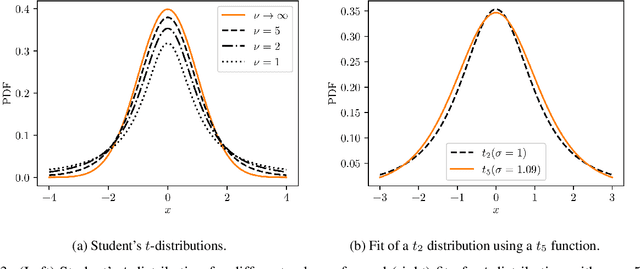

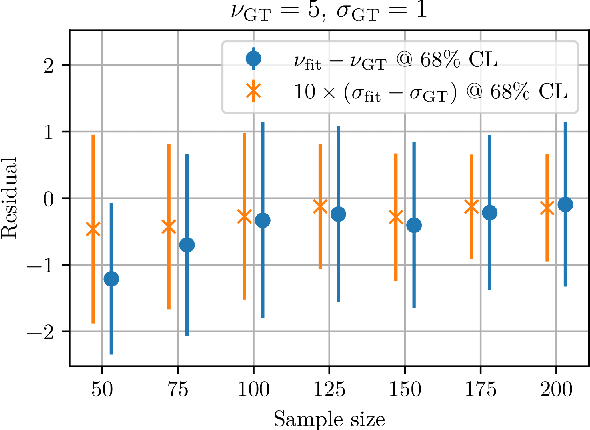

Abstract:Trustworthy ML systems should not only return accurate predictions, but also a reliable representation of their uncertainty. Bayesian methods are commonly used to quantify both aleatoric and epistemic uncertainty, but alternative approaches, such as evidential deep learning methods, have become popular in recent years. The latter group of methods in essence extends empirical risk minimization (ERM) for predicting second-order probability distributions over outcomes, from which measures of epistemic (and aleatoric) uncertainty can be extracted. This paper presents novel theoretical insights of evidential deep learning, highlighting the difficulties in optimizing second-order loss functions and interpreting the resulting epistemic uncertainty measures. With a systematic setup that covers a wide range of approaches for classification, regression and counts, it provides novel insights into issues of identifiability and convergence in second-order loss minimization, and the relative (rather than absolute) nature of epistemic uncertainty measures.

Pyrocast: a Machine Learning Pipeline to Forecast Pyrocumulonimbus (PyroCb) Clouds

Nov 22, 2022

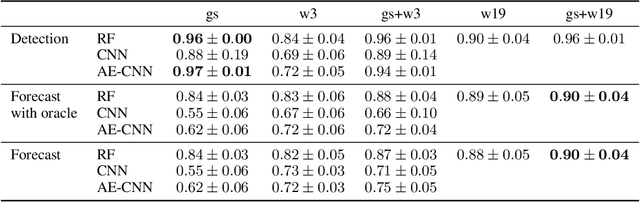

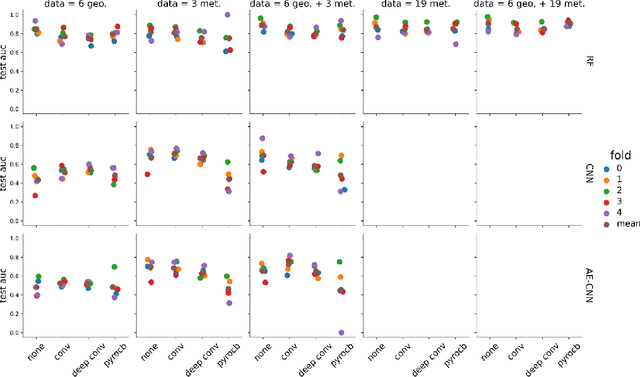

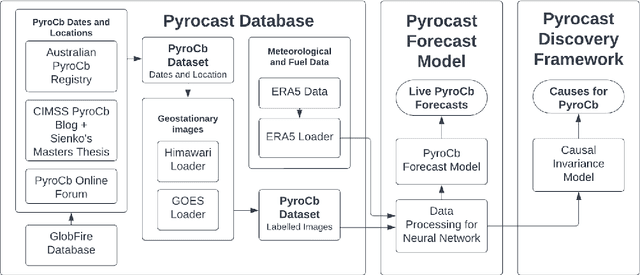

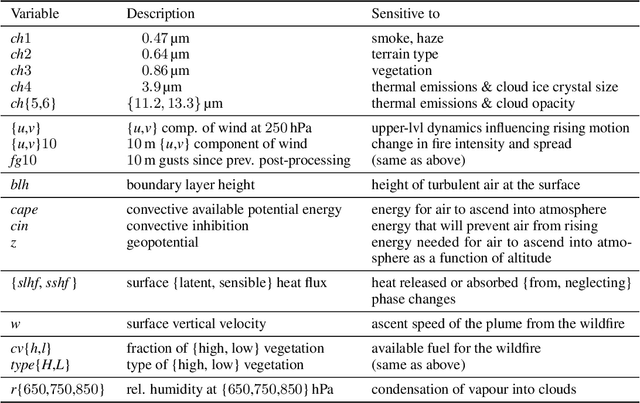

Abstract:Pyrocumulonimbus (pyroCb) clouds are storm clouds generated by extreme wildfires. PyroCbs are associated with unpredictable, and therefore dangerous, wildfire spread. They can also inject smoke particles and trace gases into the upper troposphere and lower stratosphere, affecting the Earth's climate. As global temperatures increase, these previously rare events are becoming more common. Being able to predict which fires are likely to generate pyroCb is therefore key to climate adaptation in wildfire-prone areas. This paper introduces Pyrocast, a pipeline for pyroCb analysis and forecasting. The pipeline's first two components, a pyroCb database and a pyroCb forecast model, are presented. The database brings together geostationary imagery and environmental data for over 148 pyroCb events across North America, Australia, and Russia between 2018 and 2022. Random Forests, Convolutional Neural Networks (CNNs), and CNNs pretrained with Auto-Encoders were tested to predict the generation of pyroCb for a given fire six hours in advance. The best model predicted pyroCb with an AUC of $0.90 \pm 0.04$.

Identifying the Causes of Pyrocumulonimbus (PyroCb)

Nov 18, 2022

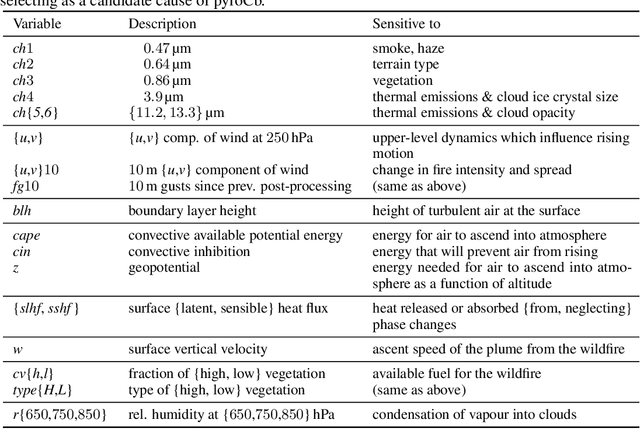

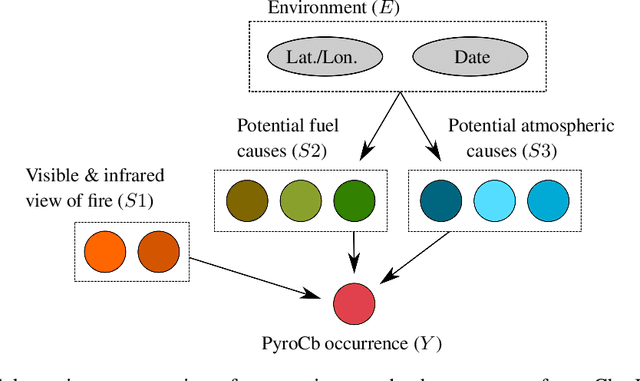

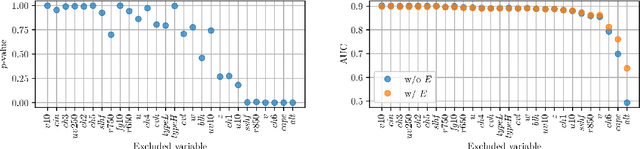

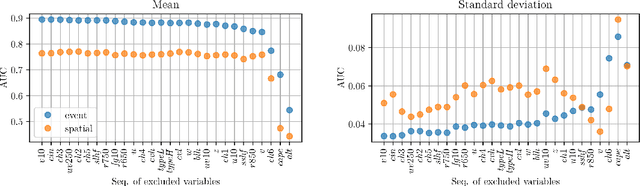

Abstract:A first causal discovery analysis from observational data of pyroCb (storm clouds generated from extreme wildfires) is presented. Invariant Causal Prediction was used to develop tools to understand the causal drivers of pyroCb formation. This includes a conditional independence test for testing $Y$ conditionally independent of $E$ given $X$ for binary variable $Y$ and multivariate, continuous variables $X$ and $E$, and a greedy-ICP search algorithm that relies on fewer conditional independence tests to obtain a smaller more manageable set of causal predictors. With these tools, we identified a subset of seven causal predictors which are plausible when contrasted with domain knowledge: surface sensible heat flux, relative humidity at $850$ hPa, a component of wind at $250$ hPa, $13.3$ micro-meters, thermal emissions, convective available potential energy, and altitude.

The Unreasonable Effectiveness of Deep Evidential Regression

May 20, 2022

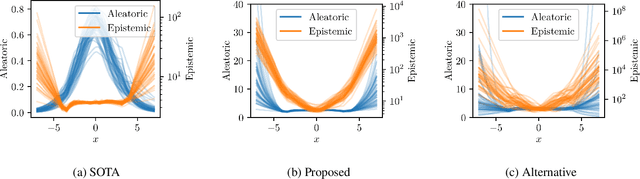

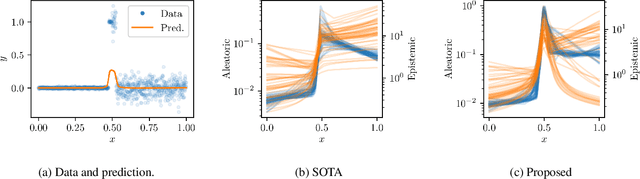

Abstract:There is a significant need for principled uncertainty reasoning in machine learning systems as they are increasingly deployed in safety-critical domains. A new approach with uncertainty-aware regression-based neural networks (NNs), based on learning evidential distributions for aleatoric and epistemic uncertainties, shows promise over traditional deterministic methods and typical Bayesian NNs, notably with the capabilities to disentangle aleatoric and epistemic uncertainties. Despite some empirical success of Deep Evidential Regression (DER), there are important gaps in the mathematical foundation that raise the question of why the proposed technique seemingly works. We detail the theoretical shortcomings and analyze the performance on synthetic and real-world data sets, showing that Deep Evidential Regression is a heuristic rather than an exact uncertainty quantification. We go on to propose corrections and redefinitions of how aleatoric and epistemic uncertainties should be extracted from NNs.

Multivariate Deep Evidential Regression

Apr 15, 2021

Abstract:There is significant need for principled uncertainty reasoning in machine learning systems as they are increasingly deployed in safety-critical domains. A new approach with uncertainty-aware neural networks shows promise over traditional deterministic methods, yet several important gaps in the theory and implementation of these networks remain. We discuss three issues with a proposed solution to extract aleatoric and epistemic uncertainties from regression-based neural networks. The aforementioned proposal derives a technique by placing evidential priors over the original Gaussian likelihood function and training the neural network to infer the hyperparemters of the evidential distribution. Doing so allows for the simultaneous extraction of both uncertainties without sampling or utilization of out-of-distribution data for univariate regression tasks. We describe the outstanding issues in detail, provide a possible solution, and generalize the technique for the multivariate case.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge