Multivariate Deep Evidential Regression

Paper and Code

Apr 15, 2021

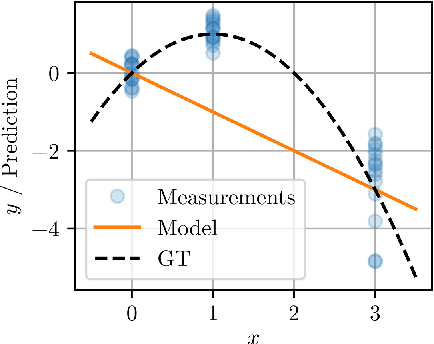

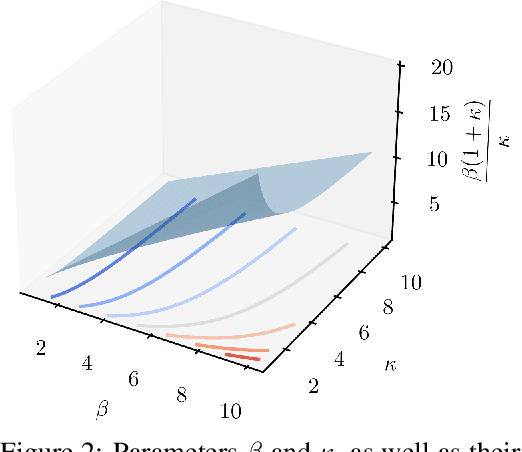

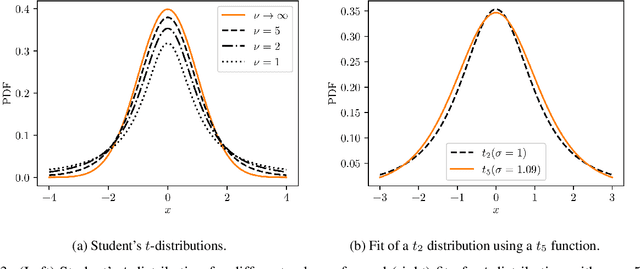

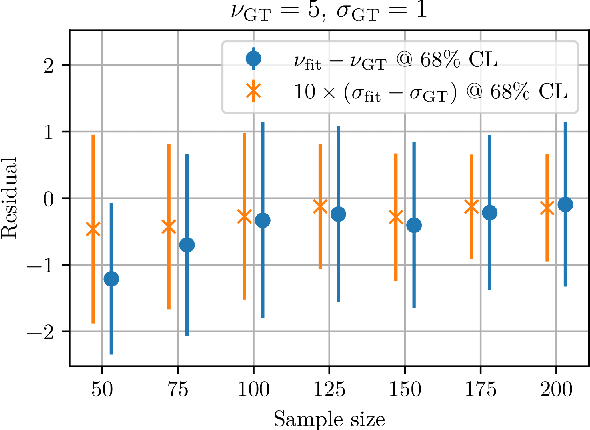

There is significant need for principled uncertainty reasoning in machine learning systems as they are increasingly deployed in safety-critical domains. A new approach with uncertainty-aware neural networks shows promise over traditional deterministic methods, yet several important gaps in the theory and implementation of these networks remain. We discuss three issues with a proposed solution to extract aleatoric and epistemic uncertainties from regression-based neural networks. The aforementioned proposal derives a technique by placing evidential priors over the original Gaussian likelihood function and training the neural network to infer the hyperparemters of the evidential distribution. Doing so allows for the simultaneous extraction of both uncertainties without sampling or utilization of out-of-distribution data for univariate regression tasks. We describe the outstanding issues in detail, provide a possible solution, and generalize the technique for the multivariate case.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge