Eyke Hüllermeier

Evolutionary Mapping of Neural Networks to Spatial Accelerators

Feb 04, 2026

Abstract:Spatial accelerators, composed of arrays of compute-memory integrated units, offer an attractive platform for deploying inference workloads with low latency and low energy consumption. However, fully exploiting their architectural advantages typically requires careful, expert-driven mapping of computational graphs to distributed processing elements. In this work, we automate this process by framing the mapping challenge as a black-box optimization problem. We introduce the first evolutionary, hardware-in-the-loop mapping framework for neuromorphic accelerators, enabling users without deep hardware knowledge to deploy workloads more efficiently. We evaluate our approach on Intel Loihi 2, a representative spatial accelerator featuring 152 cores per chip in a 2D mesh. Our method achieves up to a 35% reduction in total latency compared to default heuristics on two sparse multi-layer perceptron networks. Furthermore, we demonstrate the scalability of our approach to multi-chip systems and observe up to a 40% improvement in energy efficiency, without explicitly optimizing for it.

ResponseRank: Data-Efficient Reward Modeling through Preference Strength Learning

Dec 31, 2025

Abstract:Binary choices, as often used for reinforcement learning from human feedback (RLHF), convey only the direction of a preference. A person may choose apples over oranges and bananas over grapes, but which preference is stronger? Strength is crucial for decision-making under uncertainty and generalization of preference models, but hard to measure reliably. Metadata such as response times and inter-annotator agreement can serve as proxies for strength, but are often noisy and confounded. We propose ResponseRank to address the challenge of learning from noisy strength signals. Our method uses relative differences in proxy signals to rank responses to pairwise comparisons by their inferred preference strength. To control for systemic variation, we compare signals only locally within carefully constructed strata. This enables robust learning of utility differences consistent with strength-derived rankings while making minimal assumptions about the strength signal. Our contributions are threefold: (1) ResponseRank, a novel method that robustly learns preference strength by leveraging locally valid relative strength signals; (2) empirical evidence of improved sample efficiency and robustness across diverse tasks: synthetic preference learning (with simulated response times), language modeling (with annotator agreement), and RL control tasks (with simulated episode returns); and (3) the Pearson Distance Correlation (PDC), a novel metric that isolates cardinal utility learning from ordinal accuracy.

Distribution Matching for Graph Quantification Under Structural Covariate Shift

Dec 30, 2025

Abstract:Graphs are commonly used in machine learning to model relationships between instances. Consider the task of predicting the political preferences of users in a social network; to solve this task, one should consider both the features of each individual user and the relationships between them. However, one is often not interested in the label of a single instance but rather in the distribution of labels over a set of instances; e.g., when predicting the political preferences of users, the overall prevalence of a given opinion might be of higher interest than the opinion of a specific person. This label prevalence estimation task is commonly referred to as quantification learning (QL). Current QL methods for tabular data are typically based on the so-called prior probability shift (PPS) assumption, which states that the label-conditional instance distributions should remain equal across the training and test data. In the graph setting, PPS generally does not hold if the shift between training and test data is structural, i.e., if the training data comes from a different region of the graph than the test data. To address such structural shifts, an importance sampling variant of the popular adjusted count quantification approach has previously been proposed. In this work, we extend the idea of structural importance sampling to the state-of-the-art KDEy quantification approach. We show that our proposed method adapts to structural shifts and outperforms standard quantification approaches.

* 17 pages, presented at ECML-PKDD 2025
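
For readers unfamiliar with the adjusted count approach mentioned in the abstract above, the following is a minimal sketch of its standard binary form, which relies on the PPS assumption. It is not the importance sampling variant or the KDEy extension from the paper. The function names are hypothetical, and `tpr` and `fpr` are assumed to have been estimated on held-out labeled data.

```python
def classify_and_count(predictions):
    """Raw prevalence estimate: fraction of instances predicted positive (0/1 labels)."""
    return sum(predictions) / len(predictions)


def adjusted_count(predictions, tpr, fpr):
    """Binary adjusted count.

    Under PPS, the expected classify-and-count value is
        cc = p * tpr + (1 - p) * fpr,
    where p is the true positive prevalence. Solving for p gives the estimate.
    """
    if tpr == fpr:
        raise ValueError("tpr and fpr must differ for the correction to be defined")
    cc = classify_and_count(predictions)
    p = (cc - fpr) / (tpr - fpr)
    # Clip, since estimation noise can push the value outside [0, 1].
    return min(1.0, max(0.0, p))
```

For example, if 38% of test instances are predicted positive by a classifier with `tpr = 0.8` and `fpr = 0.2`, the corrected prevalence estimate is (0.38 - 0.2) / (0.8 - 0.2) = 0.3.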

Co-Exploration and Co-Exploitation via Shared Structure in Multi-Task Bandits

Dec 14, 2025

Abstract:We propose a novel Bayesian framework for efficient exploration in contextual multi-task multi-armed bandit settings, where the context is only observed partially and dependencies between reward distributions are induced by latent context variables. In order to exploit these structural dependencies, our approach integrates observations across all tasks and learns a global joint distribution, while still allowing personalised inference for new tasks. In this regard, we identify two key sources of epistemic uncertainty, namely structural uncertainty in the latent reward dependencies across arms and tasks, and user-specific uncertainty due to incomplete context and limited interaction history. To put our method into practice, we represent the joint distribution over tasks and rewards using a particle-based approximation of a log-density Gaussian process. This representation enables flexible, data-driven discovery of both inter-arm and inter-task dependencies without prior assumptions on the latent variables. Empirically, we demonstrate that our method outperforms baselines such as hierarchical model bandits, especially in settings with model misspecification or complex latent heterogeneity.

Efficient Online Learning with Predictive Coding Networks: Exploiting Temporal Correlations

Oct 29, 2025

Abstract:Robotic systems operating at the edge require efficient online learning algorithms that can continuously adapt to changing environments while processing streaming sensory data. Traditional backpropagation, while effective, conflicts with biological plausibility principles and may be suboptimal for continuous adaptation scenarios. The Predictive Coding (PC) framework offers a biologically plausible alternative with local, Hebbian-like update rules, making it suitable for neuromorphic hardware implementation. However, PC's main limitation is its computational overhead due to multiple inference iterations during training. We present Predictive Coding Network with Temporal Amortization (PCN-TA), which preserves latent states across temporal frames. By leveraging temporal correlations, PCN-TA significantly reduces computational demands while maintaining learning performance. Our experiments on the COIL-20 robotic perception dataset demonstrate that PCN-TA requires 10% fewer weight updates than backpropagation and 50% fewer inference steps than baseline PC networks. These efficiency gains translate directly into reduced computational overhead, a further step toward edge deployment and real-time adaptation in resource-constrained robotic systems. The biologically inspired nature of our approach also makes it a promising candidate for future neuromorphic hardware implementations, enabling efficient online learning at the edge.

Fine-Grained Uncertainty Decomposition in Large Language Models: A Spectral Approach

Sep 26, 2025

Abstract:As Large Language Models (LLMs) are increasingly integrated into diverse applications, obtaining reliable measures of their predictive uncertainty has become critically important. A precise distinction between aleatoric uncertainty, arising from inherent ambiguities within input data, and epistemic uncertainty, originating exclusively from model limitations, is essential to effectively address each uncertainty source. In this paper, we introduce Spectral Uncertainty, a novel approach to quantifying and decomposing uncertainties in LLMs. Leveraging the Von Neumann entropy from quantum information theory, Spectral Uncertainty provides a rigorous theoretical foundation for separating total uncertainty into distinct aleatoric and epistemic components. Unlike existing baseline methods, our approach incorporates a fine-grained representation of semantic similarity, enabling nuanced differentiation among various semantic interpretations in model responses. Empirical evaluations demonstrate that Spectral Uncertainty outperforms state-of-the-art methods in estimating both aleatoric and total uncertainty across diverse models and benchmark datasets.

Explaining Similarity in Vision-Language Encoders with Weighted Banzhaf Interactions

Aug 07, 2025

Abstract:Language-image pre-training (LIP) enables the development of vision-language models capable of zero-shot classification, localization, multimodal retrieval, and semantic understanding. Various explanation methods have been proposed to visualize the importance of input image-text pairs on the model's similarity outputs. However, popular saliency maps are limited by capturing only first-order attributions, overlooking the complex cross-modal interactions intrinsic to such encoders. We introduce faithful interaction explanations of LIP models (FIxLIP) as a unified approach to decomposing the similarity in vision-language encoders. FIxLIP is rooted in game theory, where we analyze how using the weighted Banzhaf interaction index offers greater flexibility and improves computational efficiency over the Shapley interaction quantification framework. From a practical perspective, we propose how to naturally extend explanation evaluation metrics, like the pointing game and area between the insertion/deletion curves, to second-order interaction explanations. Experiments on MS COCO and ImageNet-1k benchmarks validate that second-order methods like FIxLIP outperform first-order attribution methods. Beyond delivering high-quality explanations, we demonstrate the utility of FIxLIP in comparing different models like CLIP vs. SigLIP-2 and ViT-B/32 vs. ViT-L/16.

Uncertainty Quantification with Proper Scoring Rules: Adjusting Measures to Prediction Tasks

May 28, 2025

Abstract:We address the problem of uncertainty quantification and propose measures of total, aleatoric, and epistemic uncertainty based on a known decomposition of (strictly) proper scoring rules, a specific type of loss function, into a divergence and an entropy component. This leads to a flexible framework for uncertainty quantification that can be instantiated with different losses (scoring rules), which makes it possible to tailor uncertainty quantification to the use case at hand. We show that this flexibility is indeed advantageous. In particular, we analyze the task of selective prediction and show that the scoring rule should ideally match the task loss. In addition, we perform experiments on two other common tasks. For out-of-distribution detection, our results confirm that a widely used measure of epistemic uncertainty, mutual information, performs best. Moreover, in the setting of active learning, our measure of epistemic uncertainty based on the zero-one loss consistently outperforms other uncertainty measures.
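
As an illustration of the kind of decomposition discussed in the abstract above, the sketch below computes the widely used entropy-based measures, which are commonly associated with the log score: total uncertainty as the entropy of the averaged prediction, aleatoric uncertainty as the average entropy, and epistemic uncertainty as their difference (the mutual information). It assumes, purely for illustration, that second-order uncertainty is represented by a finite, equally weighted ensemble of class-probability vectors. The function names are hypothetical and this is not the paper's general framework.

```python
import math


def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution given as a list."""
    return -sum(q * math.log(q) for q in p if q > 0)


def decompose(ensemble):
    """Return (total, aleatoric, epistemic) for an equally weighted ensemble.

    ensemble: list of class-probability vectors, one per ensemble member.
    """
    n = len(ensemble)
    k = len(ensemble[0])
    # Averaged (first-order) prediction of the ensemble.
    mean = [sum(member[c] for member in ensemble) / n for c in range(k)]
    total = entropy(mean)                                  # entropy of the mean
    aleatoric = sum(entropy(m) for m in ensemble) / n      # mean of the entropies
    epistemic = total - aleatoric                          # mutual information
    return total, aleatoric, epistemic
```

Two members that confidently disagree, `[[1, 0], [0, 1]]`, yield purely epistemic uncertainty, whereas two members that both predict `[0.5, 0.5]` yield purely aleatoric uncertainty.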

Credal Prediction based on Relative Likelihood

May 28, 2025

Abstract:Predictions in the form of sets of probability distributions, so-called credal sets, provide a suitable means to represent a learner's epistemic uncertainty. In this paper, we propose a theoretically grounded approach to credal prediction based on the statistical notion of relative likelihood: The target of prediction is the set of all (conditional) probability distributions produced by the collection of plausible models, namely those models whose relative likelihood exceeds a specified threshold. This threshold has an intuitive interpretation and allows for controlling the trade-off between correctness and precision of credal predictions. We tackle the problem of approximating credal sets defined in this way by means of suitably modified ensemble learning techniques. We validate our approach through experiments on benchmark datasets, which demonstrate superior uncertainty representation without compromising predictive performance. We also compare our method against several state-of-the-art baselines in credal prediction.
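
The selection rule described in the abstract above can be sketched as follows, assuming a finite pool of candidate models with known log-likelihoods on the training data. The function names and the finite-pool setup are illustrative assumptions; the paper's ensemble-based approximation is not reproduced here. The relative likelihood of a model is its likelihood divided by the maximum likelihood in the pool, and the sketch treats a model as plausible when this ratio is at least the threshold `alpha`.

```python
import math


def plausible_models(log_likelihoods, alpha):
    """Indices of models whose relative likelihood L(h) / max L is at least alpha."""
    best = max(log_likelihoods)
    # exp(ll - best) is the relative likelihood, computed stably in log space.
    return [i for i, ll in enumerate(log_likelihoods)
            if math.exp(ll - best) >= alpha]


def credal_prediction(predictions, log_likelihoods, alpha):
    """Finite stand-in for a credal set: predictions of all plausible models.

    predictions: one class-probability vector per model, for a single query.
    """
    keep = plausible_models(log_likelihoods, alpha)
    return [predictions[i] for i in keep]
```

A threshold close to 1 keeps only near-optimal models and produces small, precise sets, while a threshold close to 0 admits more models and produces larger, more cautious sets.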

Optimal Conformal Prediction under Epistemic Uncertainty

May 25, 2025

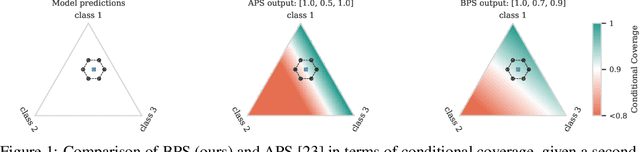

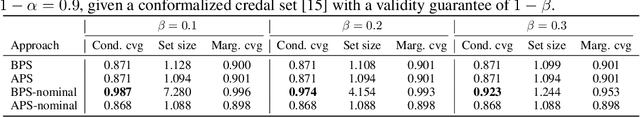

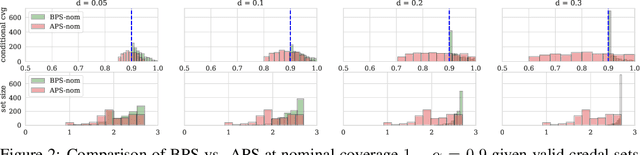

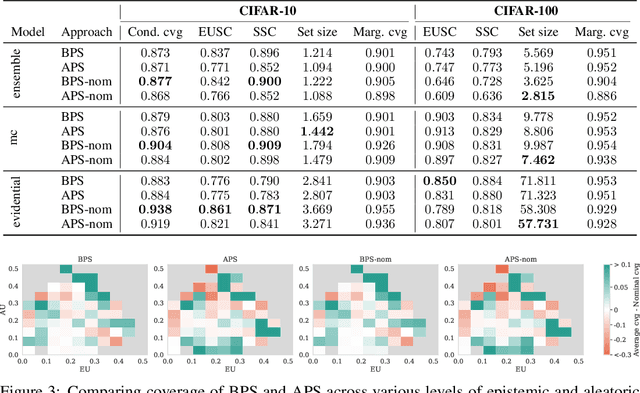

Abstract:Conformal prediction (CP) is a popular frequentist framework for representing uncertainty by providing prediction sets that guarantee coverage of the true label with a user-adjustable probability. In most applications, CP operates on confidence scores coming from a standard (first-order) probabilistic predictor (e.g., softmax outputs). Second-order predictors, such as credal set predictors or Bayesian models, are also widely used for uncertainty quantification and are known for their ability to represent both aleatoric and epistemic uncertainty. Despite their popularity, it remains an open question how they can be incorporated into CP. In this paper, we discuss the desiderata for CP when valid second-order predictions are available. We then introduce Bernoulli prediction sets (BPS), which produce the smallest prediction sets that ensure conditional coverage in this setting. When given first-order predictions, BPS reduces to the well-known adaptive prediction sets (APS). Furthermore, when the validity assumption on the second-order predictions is compromised, we apply conformal risk control to obtain a marginal coverage guarantee while still accounting for epistemic uncertainty.
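
For context, the adaptive prediction sets (APS) baseline that the abstract above refers to can be sketched as below. This is a simplified, non-randomized split-conformal version with hypothetical function names. It does not implement BPS or conformal risk control.

```python
import math


def aps_score(probs, label):
    """Cumulative probability of all classes ranked at or above `label`."""
    order = sorted(range(len(probs)), key=lambda c: -probs[c])
    total = 0.0
    for c in order:
        total += probs[c]
        if c == label:
            return total
    raise ValueError("label is not a valid class index")


def calibrate(cal_probs, cal_labels, alpha):
    """Conformal quantile of APS scores on a held-out calibration set."""
    scores = sorted(aps_score(p, y) for p, y in zip(cal_probs, cal_labels))
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        # Too few calibration points for this alpha: include every class.
        return float("inf")
    return scores[rank - 1]


def prediction_set(probs, qhat):
    """All classes whose APS score does not exceed the calibrated threshold."""
    return [c for c in range(len(probs)) if aps_score(probs, c) <= qhat]
```

With `probs = [0.6, 0.3, 0.1]` and a threshold of 0.95, the resulting set contains classes 0 and 1: the set grows in order of decreasing probability until the cumulative mass would exceed the threshold.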
