Thomas Decker

Improving Perturbation-based Explanations by Understanding the Role of Uncertainty Calibration

Nov 13, 2025

Abstract: Perturbation-based explanations are widely utilized to enhance the transparency of machine-learning models in practice. However, their reliability is often compromised by the unknown model behavior under the specific perturbations used. This paper investigates the relationship between uncertainty calibration - the alignment of model confidence with actual accuracy - and perturbation-based explanations. We show that models systematically produce unreliable probability estimates when subjected to explainability-specific perturbations and theoretically prove that this directly undermines global and local explanation quality. To address this, we introduce ReCalX, a novel approach to recalibrate models for improved explanations while preserving their original predictions. Empirical evaluations across diverse models and datasets demonstrate that ReCalX consistently reduces perturbation-specific miscalibration most effectively while enhancing explanation robustness and the identification of globally important input features.
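The abstract describes calibration as the alignment of model confidence with actual accuracy. One common way to quantify that gap is the binned Expected Calibration Error (ECE). The sketch below is a generic illustration only: it is not ReCalX, and the listing does not say which calibration metric the paper uses. The function name, the equal-width binning, and the default of 10 bins are all assumptions made for this example.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE: weighted mean of |accuracy - mean confidence| over bins.

    confidences: predicted top-class probabilities in [0, 1]
    correct:     booleans, True where the prediction was right
    n_bins:      number of equal-width confidence bins (assumed default)
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        return 0.0

    # Assign every prediction to an equal-width confidence bin.
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[idx].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        # Weight each bin's gap by the fraction of samples it holds.
        ece += (len(members) / n) * abs(accuracy - avg_conf)
    return ece


if __name__ == "__main__":
    # Four predictions at 90% confidence, three of them correct.
    print(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, True, True, False]))
```

To probe calibration under explanation-specific perturbations in the spirit of the abstract, the same function could be evaluated on model outputs for perturbed inputs rather than clean ones.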

Fine-Grained Uncertainty Decomposition in Large Language Models: A Spectral Approach

Sep 26, 2025

Abstract: As Large Language Models (LLMs) are increasingly integrated in diverse applications, obtaining reliable measures of their predictive uncertainty has become critically important. A precise distinction between aleatoric uncertainty, arising from inherent ambiguities within input data, and epistemic uncertainty, originating exclusively from model limitations, is essential to effectively address each uncertainty source. In this paper, we introduce Spectral Uncertainty, a novel approach to quantifying and decomposing uncertainties in LLMs. Leveraging the Von Neumann entropy from quantum information theory, Spectral Uncertainty provides a rigorous theoretical foundation for separating total uncertainty into distinct aleatoric and epistemic components. Unlike existing baseline methods, our approach incorporates a fine-grained representation of semantic similarity, enabling nuanced differentiation among various semantic interpretations in model responses. Empirical evaluations demonstrate that Spectral Uncertainty outperforms state-of-the-art methods in estimating both aleatoric and total uncertainty across diverse models and benchmark datasets.
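For orientation, the Von Neumann entropy named in the abstract has a standard textbook definition. For a density matrix $\rho$ (positive semidefinite with unit trace) with eigenvalues $\lambda_i$, and with the convention $0 \log 0 = 0$:

```latex
S(\rho) = -\operatorname{Tr}(\rho \log \rho) = -\sum_i \lambda_i \log \lambda_i
```

This is only the general definition. How the paper constructs $\rho$ from model responses, and how it splits the total into aleatoric and epistemic parts, is not stated in this listing and is not reproduced here.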

Why Uncertainty Calibration Matters for Reliable Perturbation-based Explanations

Jun 24, 2025

Abstract: Perturbation-based explanations are widely utilized to enhance the transparency of modern machine-learning models. However, their reliability is often compromised by the unknown model behavior under the specific perturbations used. This paper investigates the relationship between uncertainty calibration - the alignment of model confidence with actual accuracy - and perturbation-based explanations. We show that models frequently produce unreliable probability estimates when subjected to explainability-specific perturbations and theoretically prove that this directly undermines explanation quality. To address this, we introduce ReCalX, a novel approach to recalibrate models for improved perturbation-based explanations while preserving their original predictions. Experiments on popular computer vision models demonstrate that our calibration strategy produces explanations that are more aligned with human perception and actual object locations.

Incremental Uncertainty-aware Performance Monitoring with Active Labeling Intervention

May 11, 2025

Abstract: We study the problem of monitoring machine learning models under gradual distribution shifts, where circumstances change slowly over time, often leading to unnoticed yet significant declines in accuracy. To address this, we propose Incremental Uncertainty-aware Performance Monitoring (IUPM), a novel label-free method that estimates performance changes by modeling gradual shifts using optimal transport. In addition, IUPM quantifies the uncertainty in the performance prediction and introduces an active labeling procedure to restore a reliable estimate under a limited labeling budget. Our experiments show that IUPM outperforms existing performance estimation baselines in various gradual shift scenarios and that its uncertainty awareness guides label acquisition more effectively than other strategies.

Explanatory Model Monitoring to Understand the Effects of Feature Shifts on Performance

Aug 24, 2024

Abstract: Monitoring and maintaining machine learning models are among the most critical challenges in translating recent advances in the field into real-world applications. However, current monitoring methods lack the capability to provide actionable insights that answer the question of why the performance of a particular model really degraded. In this work, we propose a novel approach to explain the behavior of a black-box model under feature shifts by attributing an estimated performance change to interpretable input characteristics. We refer to our method, which combines concepts from Optimal Transport and Shapley Values, as Explanatory Performance Estimation (XPE). We analyze the underlying assumptions and demonstrate the superiority of our approach over several baselines on different data sets across various data modalities such as images, audio, and tabular data. We also indicate how the generated results can lead to valuable insights, enabling explanatory model monitoring by revealing potential root causes for model deterioration and guiding toward actionable countermeasures.

Provably Better Explanations with Optimized Aggregation of Feature Attributions

Jun 07, 2024

Abstract: Using feature attributions for post-hoc explanations is a common practice to understand and verify the predictions of opaque machine learning models. Despite the numerous techniques available, individual methods often produce inconsistent and unstable results, putting their overall reliability into question. In this work, we aim to systematically improve the quality of feature attributions by combining multiple explanations across distinct methods or their variations. For this purpose, we propose a novel approach to derive optimal convex combinations of feature attributions that yield provable improvements of desired quality criteria such as robustness or faithfulness to the model behavior. Through extensive experiments involving various model architectures and popular feature attribution techniques, we demonstrate that our combination strategy consistently outperforms individual methods and existing baselines.
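To make the term "convex combination of feature attributions" concrete, the minimal sketch below blends several attribution vectors using weights that are non-negative and sum to one. It does not implement the paper's optimization: the weights are simply supplied by the caller, and the function name and input layout are assumptions made for this illustration.

```python
def combine_attributions(attributions, weights, tol=1e-9):
    """Return the convex combination sum_k w_k * a_k of attribution vectors.

    attributions: list of equal-length lists, one per explanation method
    weights:      non-negative floats summing to 1 (given here, not optimized)
    """
    if len(attributions) != len(weights) or not attributions:
        raise ValueError("need exactly one weight per attribution vector")
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > tol:
        raise ValueError("weights must be non-negative and sum to 1")
    n_features = len(attributions[0])
    if any(len(a) != n_features for a in attributions):
        raise ValueError("all attribution vectors must have the same length")

    # Weighted sum per feature across all explanation methods.
    return [
        sum(w * a[j] for w, a in zip(weights, attributions))
        for j in range(n_features)
    ]


if __name__ == "__main__":
    # Two hypothetical attribution vectors over two features.
    print(combine_attributions([[1.0, 0.0], [0.0, 1.0]], [0.25, 0.75]))
```

In the setting the abstract describes, the weights would be chosen to optimize a quality criterion such as robustness or faithfulness; that selection step is deliberately left out here.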

Does Your Model Think Like an Engineer? Explainable AI for Bearing Fault Detection with Deep Learning

Oct 19, 2023

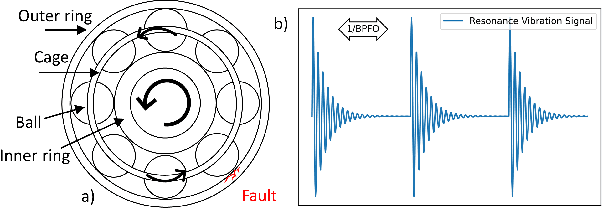

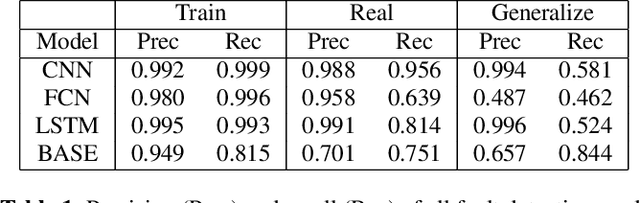

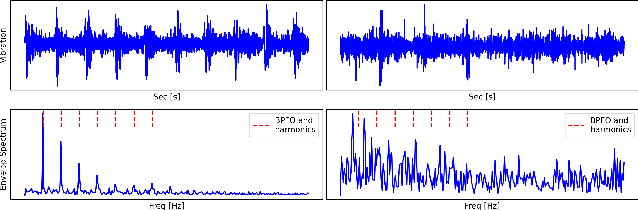

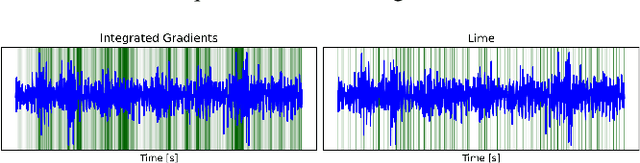

Abstract: Deep Learning has already been successfully applied to analyze industrial sensor data in a variety of relevant use cases. However, the opaque nature of many well-performing methods poses a major obstacle for real-world deployment. Explainable AI (XAI) and especially feature attribution techniques promise to enable insights about how such models form their decisions. But the plain application of such methods often fails to provide truly informative and problem-specific insights to domain experts. In this work, we focus on the specific task of detecting faults in rolling element bearings from vibration signals. We propose a novel and domain-specific feature attribution framework that allows us to evaluate how well the underlying logic of a model corresponds with expert reasoning. Using the framework, we are able to validate the trustworthiness and successfully anticipate the generalization ability of different well-performing deep learning models. Our methodology demonstrates how signal processing tools can effectively be used to enhance Explainable AI techniques and acts as a template for similar problems.

Explaining Deep Neural Networks for Bearing Fault Detection with Vibration Concepts

Oct 17, 2023

Abstract: Concept-based explanation methods, such as Concept Activation Vectors, are potent means to quantify how abstract or high-level characteristics of input data influence the predictions of complex deep neural networks. However, applying them to industrial prediction problems is challenging as it is not immediately clear how to define and access appropriate concepts for individual use cases and specific data types. In this work, we investigate how to leverage established concept-based explanation techniques in the context of bearing fault detection with deep neural networks trained on vibration signals. Since bearings are prevalent in almost all rotating equipment, ensuring the reliability of non-transparent fault detection models is crucial to prevent costly repairs and downtimes of industrial machinery. Our evaluations demonstrate that explaining opaque models in terms of vibration concepts enables human-comprehensible and intuitive insights about their inner workings, but the underlying assumptions need to be carefully validated first.

Towards Scenario-based Safety Validation for Autonomous Trains with Deep Generative Models

Oct 16, 2023

Abstract: Modern AI techniques open up ever-increasing possibilities for autonomous vehicles, but how to appropriately verify the reliability of such systems remains unclear. A common approach is to conduct safety validation based on a predefined Operational Design Domain (ODD) describing specific conditions under which a system under test is required to operate properly. However, collecting sufficient realistic test cases to ensure comprehensive ODD coverage is challenging. In this paper, we report our practical experiences regarding the utility of data simulation with deep generative models for scenario-based ODD validation. We consider the specific use case of a camera-based rail-scene segmentation system designed to support autonomous train operation. We demonstrate the capabilities of semantically editing railway scenes with deep generative models to make a limited amount of test data more representative. We also show how our approach helps to analyze the degree to which a system complies with typical ODD requirements. Specifically, we focus on evaluating proper operation under different lighting and weather conditions as well as while transitioning between them.

The Thousand Faces of Explainable AI Along the Machine Learning Life Cycle: Industrial Reality and Current State of Research

Oct 11, 2023

Abstract: In this paper, we investigate the practical relevance of explainable artificial intelligence (XAI) with a special focus on the producing industries and relate it to the current state of academic XAI research. Our findings are based on an extensive series of interviews regarding the role and applicability of XAI along the Machine Learning (ML) lifecycle in current industrial practice and its expected relevance in the future. The interviews were conducted among a great variety of roles and key stakeholders from different industry sectors. On top of that, we outline the state of XAI research by providing a concise review of the relevant literature. This enables us to provide an encompassing overview covering the opinions of the surveyed persons as well as the current state of academic research. By comparing our interview results with the current research approaches, we reveal several discrepancies. While a multitude of different XAI approaches exists, most of them are centered around the model evaluation phase and data scientists. Their versatile capabilities for other stages are currently either not sufficiently explored or not popular among practitioners. In line with existing work, our findings also confirm that more efforts are needed to enable non-expert users to interpret and understand opaque AI models with existing methods and frameworks.
