Lutz Terfloth

Investigating Co-Constructive Behavior of Large Language Models in Explanation Dialogues

Apr 25, 2025

Abstract: The ability to generate explanations that are understood by explainees is the quintessence of explainable artificial intelligence. Since understanding depends on the explainee's background and needs, recent research has focused on co-constructive explanation dialogues, where the explainer continuously monitors the explainee's understanding and adapts explanations dynamically. We investigate the ability of large language models (LLMs) to engage as explainers in co-constructive explanation dialogues. In particular, we present a user study in which explainees interact with LLMs, of which some have been instructed to explain a predefined topic co-constructively. We evaluate the explainees' understanding before and after the dialogue, as well as their perception of the LLMs' co-constructive behavior. Our results indicate that current LLMs show some co-constructive behaviors, such as asking verification questions, that foster the explainees' engagement and can improve understanding of a topic. However, their ability to effectively monitor the current understanding and scaffold the explanations accordingly remains limited.

Explainers' Mental Representations of Explainees' Needs in Everyday Explanations

Nov 13, 2024

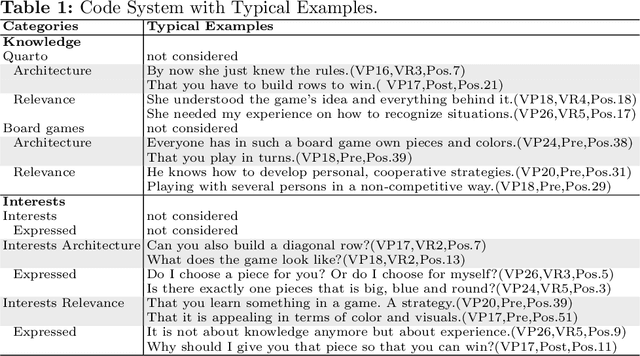

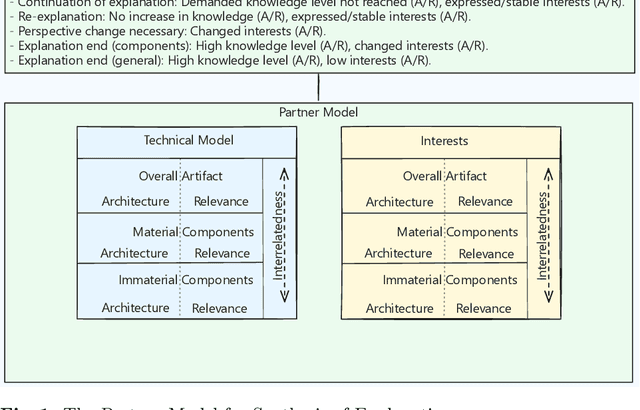

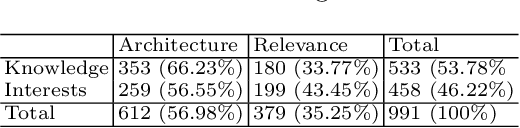

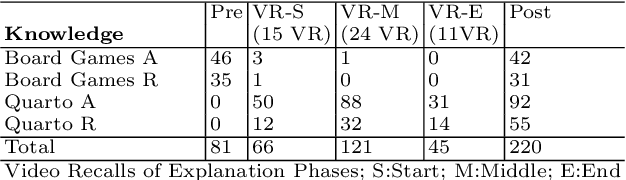

Abstract: In explanations, explainers have mental representations of explainees' developing knowledge and shifting interests regarding the explanandum. These mental representations are dynamic in nature and develop over time, thereby enabling explainers to react to explainees' needs by adapting and customizing the explanation. XAI should be able to react to explainees' needs in a similar manner. Therefore, a component that incorporates aspects of explainers' mental representations of explainees is required. In this study, we took first steps by investigating explainers' mental representations in everyday explanations of technological artifacts. According to the dual nature theory, technological artifacts require explanations with two distinct perspectives, namely observable and measurable features addressing "Architecture" and interpretable aspects addressing "Relevance". We conducted extended semi-structured pre-, post-, and video-recall interviews with explainers (N=9) in the context of an explanation. The transcribed interviews were analyzed using qualitative content analysis. The explainers' answers regarding the explainees' knowledge of and interest in the technological artifact indicated a shift from vague early assumptions toward strong beliefs over the course of the explanations. The knowledge explainers assumed explainees to have was initially centered on Architecture and later extended to both Architecture and Relevance. In contrast, explainers initially assumed a greater interest in Relevance, which shifted to interest in both Architecture and Relevance as the explanation progressed. Further, explainers often finished the explanation despite their perception that explainees still had gaps in knowledge. These findings are translated into practical implications for user models in adaptive explainable systems.

What you need to know about a learning robot: Identifying the enabling architecture of complex systems

Nov 24, 2023

Abstract: Nowadays, we are dealing more and more with robots and AI in everyday life. However, their behavior is not always apparent to most lay users, especially in error situations. As a result, there can be misconceptions about the behavior of the technologies in use. This, in turn, can lead to misuse and rejection by users. Explanation, for example, through transparency, can address these misconceptions. However, it would be confusing and overwhelming for users if the entire software or hardware was explained. Therefore, this paper looks at the 'enabling' architecture. It describes those aspects of a robotic system that might need to be explained to enable someone to use the technology effectively. Furthermore, this paper is concerned with the 'explanandum', which is the corresponding misunderstanding or missing concepts of the enabling architecture that needs to be clarified. We have thus developed and present an approach for determining this 'enabling' architecture and the resulting 'explanandum' of complex technologies.

Forms of Understanding of XAI-Explanations

Nov 15, 2023

Abstract: Explainability has become an important topic in computer science and artificial intelligence, leading to a subfield called Explainable Artificial Intelligence (XAI). The goal of providing or seeking explanations is to achieve (better) 'understanding' on the part of the explainee. However, what it means to 'understand' is still not clearly defined, and the concept itself is rarely the subject of scientific investigation. This conceptual article aims to present a model of forms of understanding in the context of XAI and beyond. From an interdisciplinary perspective bringing together computer science, linguistics, sociology, and psychology, a definition of understanding and its forms, assessment, and dynamics during the process of giving everyday explanations are explored. Two types of understanding are considered as possible outcomes of explanations, namely enabledness, 'knowing how' to do or decide something, and comprehension, 'knowing that' -- both in different degrees (from shallow to deep). Explanations regularly start with shallow understanding in a specific domain and can lead to deep comprehension and enabledness of the explanandum, which we see as a prerequisite for human users to gain agency. In this process, the increases in comprehension and enabledness are highly interdependent. Against the background of this systematization, special challenges of understanding in XAI are discussed.

Adding Why to What? Analyses of an Everyday Explanation

Aug 08, 2023

Abstract: In XAI it is important to consider that, in contrast to explanations for professional audiences, one cannot assume common expertise when explaining for laypeople. But such explanations between humans vary greatly, making it difficult to research commonalities across explanations. We used the dual nature theory, a techno-philosophical approach, to cope with these challenges. According to it, one can explain, for example, an XAI's decision by addressing its dual nature: by focusing on the Architecture (e.g., the logic of its algorithms) or the Relevance (e.g., the severity of a decision, the implications of a recommendation). We investigated 20 game explanations using the theory as an analytical framework. We elaborate how we used the theory to quickly structure and compare explanations of technological artifacts. We supplemented results from analyzing the explanation contents with results from a video recall to explore how explainers justified their explanation. We found that explainers were focusing on the physical aspects of the game first (Architecture) and only later on aspects of the Relevance. Reasoning in the video recalls indicated that explainers regarded the focus on the Architecture as important for structuring the explanation initially by explaining the basic components before focusing on more complex, intangible aspects. Shifting between addressing the two sides was justified by explanation goals, emerging misunderstandings, and the knowledge needs of the explainee. We discovered several commonalities that inspire future research questions which, if further generalizable, provide first ideas for the construction of synthetic explanations.
