Phillip Richter

Improving Human-Robot Teaching by Quantifying and Reducing Mental Model Mismatch

Jan 08, 2025

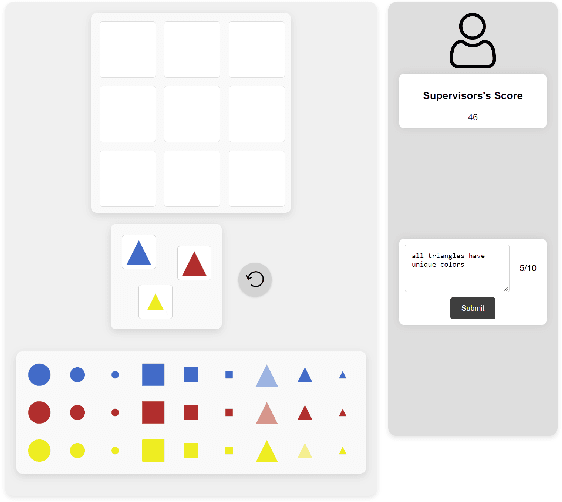

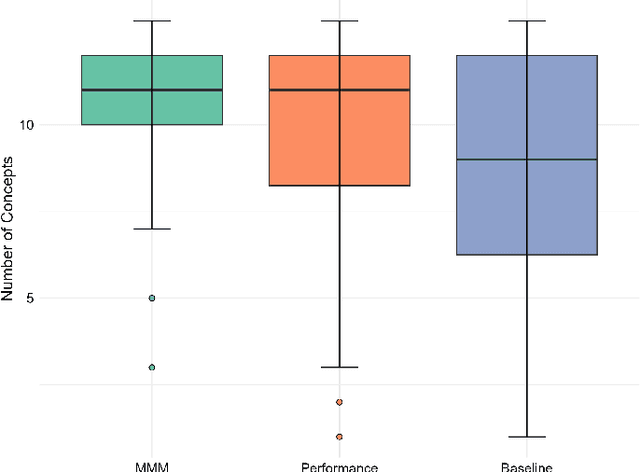

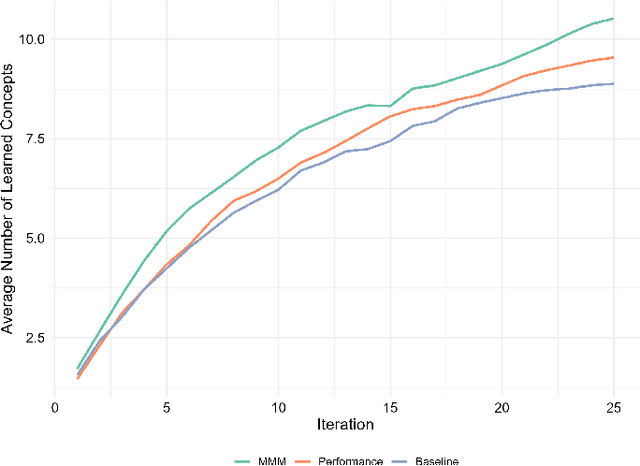

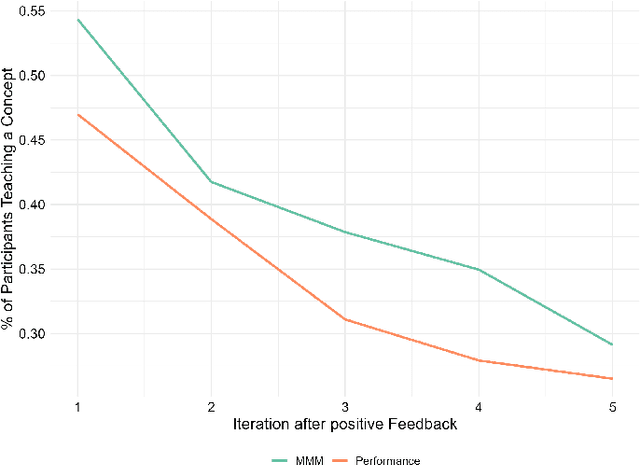

Abstract: The rapid development of artificial intelligence and robotics has had a significant impact on our lives, with intelligent systems increasingly performing tasks traditionally done by humans. Efficient knowledge transfer requires matching the mental model of the human teacher with the capabilities of the robot learner. This paper introduces the Mental Model Mismatch (MMM) Score, a feedback mechanism designed to quantify and reduce mismatches by aligning human teaching behavior with robot learning behavior. Using Large Language Models (LLMs), we analyze teacher intentions in natural language to generate adaptive feedback. A study with 150 participants teaching a virtual robot to solve a puzzle game shows that intention-based feedback significantly outperforms both traditional performance-based feedback and no feedback. The results suggest that intention-based feedback improves instructional outcomes, deepens understanding of the robot's learning process, and reduces misconceptions. This research addresses a critical gap in human-robot interaction (HRI) by providing a method to quantify and mitigate discrepancies between human mental models and robot capabilities, with the goal of improving robot learning and human teaching effectiveness.

What you need to know about a learning robot: Identifying the enabling architecture of complex systems

Nov 24, 2023

Abstract: Nowadays, we are dealing more and more with robots and AI in everyday life. However, their behavior is not always apparent to most lay users, especially in error situations. As a result, there can be misconceptions about the behavior of the technologies in use. This, in turn, can lead to misuse and rejection by users. Explanation, for example through transparency, can address these misconceptions. However, it would be confusing and overwhelming for users if the entire software or hardware were explained. Therefore, this paper looks at the 'enabling' architecture. It describes those aspects of a robotic system that might need to be explained to enable someone to use the technology effectively. Furthermore, this paper is concerned with the 'explanandum', that is, the corresponding misunderstandings or missing concepts of the enabling architecture that need to be clarified. We have thus developed, and present here, an approach for determining this 'enabling' architecture and the resulting 'explanandum' of complex technologies.
