José Manuel Gutiérrez

Transformer based super-resolution downscaling for regional reanalysis: Full domain vs tiling approaches

Oct 16, 2024

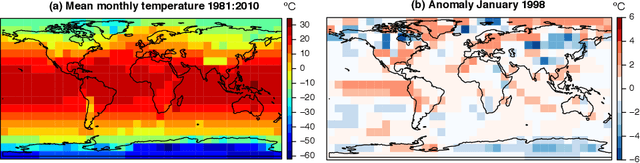

Abstract: Super-resolution (SR) is a promising cost-effective downscaling methodology for producing high-resolution climate information from coarser counterparts. A particular application is downscaling regional reanalysis outputs (predictand) from the driving global counterparts (predictor). This study conducts an intercomparison of various SR downscaling methods focusing on temperature and using the CERRA reanalysis (5.5 km resolution, produced with a regional atmospheric model driven by ERA5) as an example. The method proposed in this work is the Swin transformer, and two alternative methods are used as benchmarks (fully convolutional U-Net and convolutional and dense DeepESD), as well as simple bicubic interpolation. We compare two approaches: the standard one using the full domain as input and a more scalable tiling approach, dividing the full domain into tiles that are used as input. The methods are trained to downscale CERRA surface temperature, based on temperature information from the driving ERA5; in addition, the tiling approach includes static orographic information. We show that the tiling approach, which requires spatial transferability, comes at the cost of a lower performance (although it outperforms some full-domain benchmarks), but provides an efficient scalable solution that allows SR reduction on a pan-European scale and is valuable for real-time applications.
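The tiling idea described in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the non-overlapping square tiles, the function names (`split_into_tiles`, `merge_tiles`, `downscale_by_tiles`) and the `nearest_upsample` placeholder, which stands in for a trained network and ignores the orography it receives, are all assumptions made for illustration.

```python
import numpy as np


def split_into_tiles(field, tile):
    """Split a 2D field into non-overlapping square tiles of side `tile`."""
    ny, nx = field.shape
    if ny % tile or nx % tile:
        raise ValueError("domain size must be a multiple of the tile size")
    # (block_y, in_y, block_x, in_x) -> (block_y, block_x, in_y, in_x)
    blocks = field.reshape(ny // tile, tile, nx // tile, tile).swapaxes(1, 2)
    return blocks.reshape(-1, tile, tile)


def merge_tiles(tiles, shape):
    """Inverse of split_into_tiles: reassemble tiles into a field of `shape`."""
    ny, nx = shape
    tile = tiles.shape[-1]
    blocks = tiles.reshape(ny // tile, nx // tile, tile, tile).swapaxes(1, 2)
    return blocks.reshape(ny, nx)


def nearest_upsample(t_tile, oro_tile, factor):
    """Hypothetical placeholder for a trained SR model (orography unused)."""
    return np.repeat(np.repeat(t_tile, factor, axis=0), factor, axis=1)


def downscale_by_tiles(coarse, orography, tile, factor, model=nearest_upsample):
    """Apply `model` to each coarse tile and its matching orography tile."""
    lo_tiles = split_into_tiles(coarse, tile)
    oro_tiles = split_into_tiles(orography, tile * factor)
    hi_tiles = np.stack(
        [model(t, o, factor) for t, o in zip(lo_tiles, oro_tiles)]
    )
    ny, nx = coarse.shape
    return merge_tiles(hi_tiles, (ny * factor, nx * factor))


coarse = np.arange(16.0).reshape(4, 4)   # toy coarse temperature field
orography = np.zeros((8, 8))             # toy high-resolution static field
fine = downscale_by_tiles(coarse, orography, tile=2, factor=2)
```

Because the same model is applied to every tile, the model has to transfer across locations, which is why static inputs such as orography become useful in the tiled setting.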

Multi-variable Hard Physical Constraints for Climate Model Downscaling

Aug 02, 2023

Abstract: Global Climate Models (GCMs) are the primary tool to simulate climate evolution and assess the impacts of climate change. However, they often operate at a coarse spatial resolution that limits their accuracy in reproducing local-scale phenomena. Statistical downscaling methods leveraging deep learning offer a solution to this problem by approximating local-scale climate fields from coarse variables, thus enabling regional GCM projections. Typically, climate fields of different variables of interest are downscaled independently, resulting in violations of fundamental physical properties across interconnected variables. This study investigates the scope of this problem and, through an application on temperature, lays the foundation for a framework introducing multi-variable hard constraints that guarantee physical relationships between groups of downscaled climate variables.

Using Explainability to Inform Statistical Downscaling Based on Deep Learning Beyond Standard Validation Approaches

Feb 03, 2023

Abstract: Deep learning (DL) has emerged as a promising tool to downscale climate projections at regional-to-local scales from large-scale atmospheric fields following the perfect-prognosis (PP) approach. Given their complexity, it is crucial to properly evaluate these methods, especially when applied to changing climatic conditions where the ability to extrapolate/generalise is key. In this work, we intercompare several DL models extracted from the literature for the same challenging use-case (downscaling temperature in the CORDEX North America domain) and expand standard evaluation methods building on eXplainable artificial intelligence (XAI) techniques. We show how these techniques can be used to unravel the internal behaviour of these models, providing new evaluation dimensions and aiding in their diagnostic and design. These results show the usefulness of incorporating XAI techniques into statistical downscaling evaluation frameworks, especially when working with large regions and/or under climate change conditions.

Learning complex dependency structure of gene regulatory networks from high dimensional micro-array data with Gaussian Bayesian networks

Jun 28, 2021

Abstract: Gene expression datasets consist of thousands of genes with relatively small sample sizes (i.e. are large-$p$-small-$n$). Moreover, dependencies of various orders co-exist in the datasets. In the Undirected probabilistic Graphical Model (UGM) framework the Glasso algorithm has been proposed to deal with high dimensional micro-array datasets forcing sparsity. Also, modifications of the default Glasso algorithm have been developed to overcome the problem of complex interaction structure. In this work we advocate the use of a simple score-based Hill Climbing algorithm (HC) that learns Gaussian Bayesian Networks (BNs) leaning on Directed Acyclic Graphs (DAGs). We compare HC with Glasso and its modifications in the UGM framework on their capability to reconstruct GRNs from micro-array data belonging to the Escherichia coli genome. We benefit from the analytical properties of the Joint Probability Density (JPD) function on which both directed and undirected PGMs build to convert DAGs to UGMs. We conclude that dependencies in complex data are learned best by the HC algorithm, presenting them most accurately and efficiently, simultaneously modelling strong local and weaker but significant global connections coexisting in the gene expression dataset. The HC algorithm adapts intrinsically to the complex dependency structure of the dataset, without forcing a specific structure in advance. On the contrary, Glasso and its modifications model unnecessary dependencies at the expense of the probabilistic information in the network and of a structural bias in the JPD function that can only be relieved by including many parameters.
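One standard way to relate a Gaussian BN to an undirected model goes through the joint precision matrix; the sketch below illustrates that relationship and is not necessarily the procedure used in the paper. It assumes a linear Gaussian BN written as $X_j = \sum_i w_{ij} X_i + e_j$ with independent noise $e_j \sim N(0, d_j)$, for which the precision matrix is $K = (I - W) D^{-1} (I - W)^\top$. The function names and the toy collider network are hypothetical.

```python
import numpy as np


def gbn_precision(weights, noise_var):
    """Precision matrix of a linear Gaussian BN.

    weights[i, j] is the coefficient of the edge i -> j, i.e.
    X_j = sum_i weights[i, j] * X_i + e_j with e_j ~ N(0, noise_var[j]).
    """
    weights = np.asarray(weights, dtype=float)
    a = np.eye(weights.shape[0]) - weights
    d_inv = np.diag(1.0 / np.asarray(noise_var, dtype=float))
    return a @ d_inv @ a.T


def undirected_edges(precision, tol=1e-10):
    """Edges of the undirected model: non-zero off-diagonal precision entries."""
    n = precision.shape[0]
    return {
        (i, j)
        for i in range(n)
        for j in range(i + 1, n)
        if abs(precision[i, j]) > tol
    }


# Toy collider: 0 -> 2 <- 1, unit noise variances.
W = np.zeros((3, 3))
W[0, 2] = 0.8
W[1, 2] = -0.5
K = gbn_precision(W, noise_var=[1.0, 1.0, 1.0])
edges = undirected_edges(K)
cov = np.linalg.inv(K)
```

In this toy case the two parents are marginally independent (`cov[0, 1]` is zero up to rounding), yet the undirected model needs an extra edge between them (`K[0, 1]` equals the product of the two weights). This is the kind of additional dependency an undirected representation has to carry for a v-structure.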

Who Learns Better Bayesian Network Structures: Constraint-Based, Score-based or Hybrid Algorithms?

Aug 01, 2018

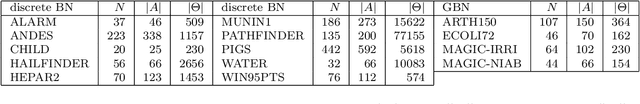

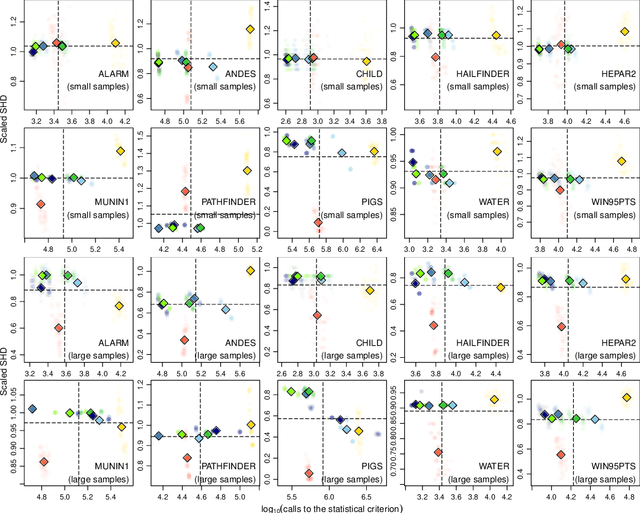

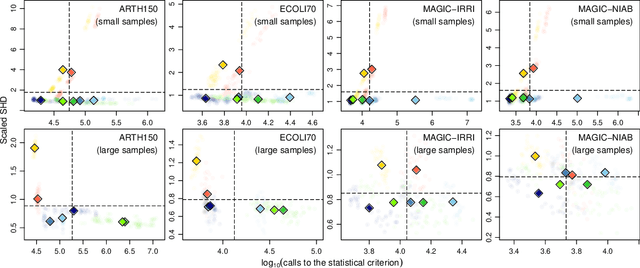

Abstract: The literature groups algorithms to learn the structure of Bayesian networks from data in three separate classes: constraint-based algorithms, which use conditional independence tests to learn the dependence structure of the data; score-based algorithms, which use goodness-of-fit scores as objective functions to maximise; and hybrid algorithms that combine both approaches. Famously, Cowell (2001) showed that algorithms in the first two classes learn the same structures when the topological ordering of the network is known and we use entropy to assess conditional independence and goodness of fit. In this paper we address the complementary question: how do these classes of algorithms perform outside of the assumptions above? We approach this question by recognising that structure learning is defined by the combination of a statistical criterion and an algorithm that determines how the criterion is applied to the data. Removing the confounding effect of different choices for the statistical criterion, we find using both simulated and real-world data that constraint-based algorithms do not appear to be more efficient or more sensitive to errors than score-based algorithms; and that hybrid algorithms are not faster or more accurate than constraint-based algorithms. This suggests that commonly held beliefs on structure learning in the literature are strongly influenced by the choice of particular statistical criteria rather than just properties of the algorithms themselves.
