Jorge Baño-Medina

Harnessing AI data-driven global weather models for climate attribution: An analysis of the 2017 Oroville Dam extreme atmospheric river

Sep 17, 2024

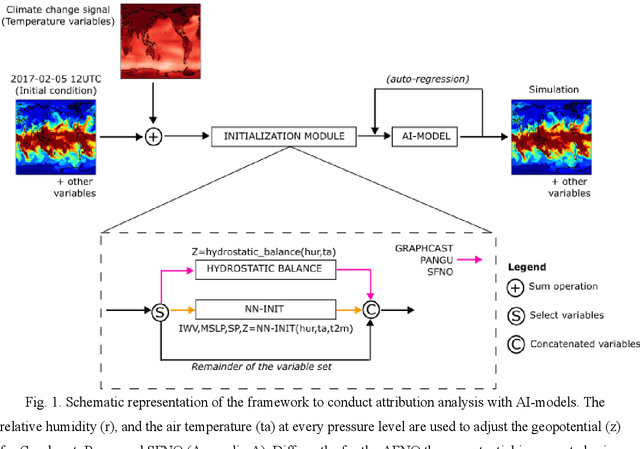

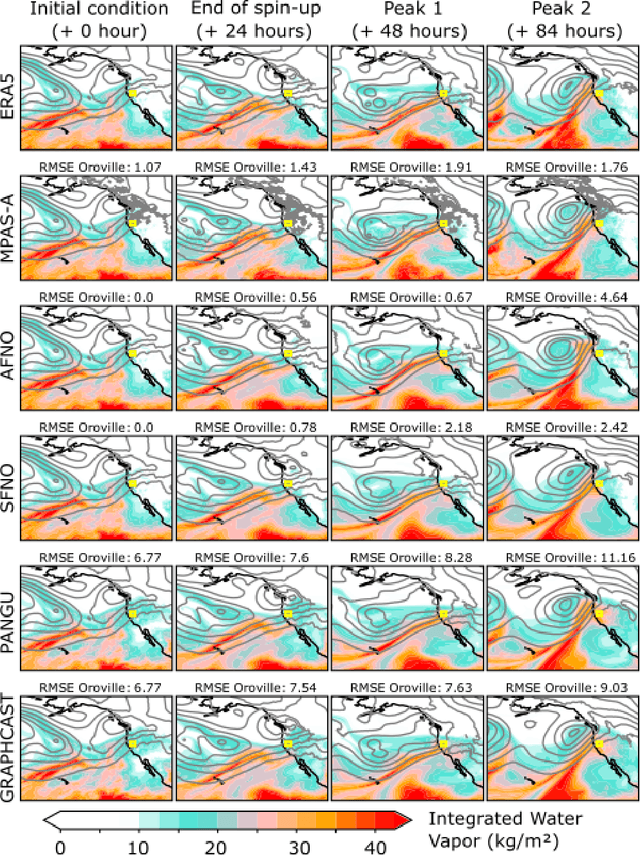

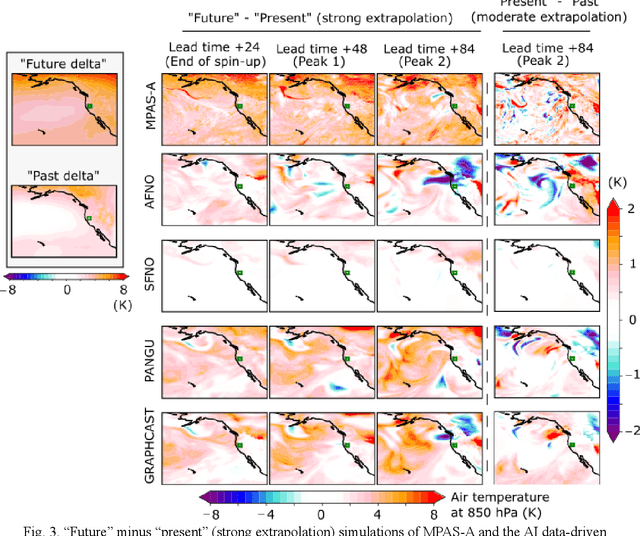

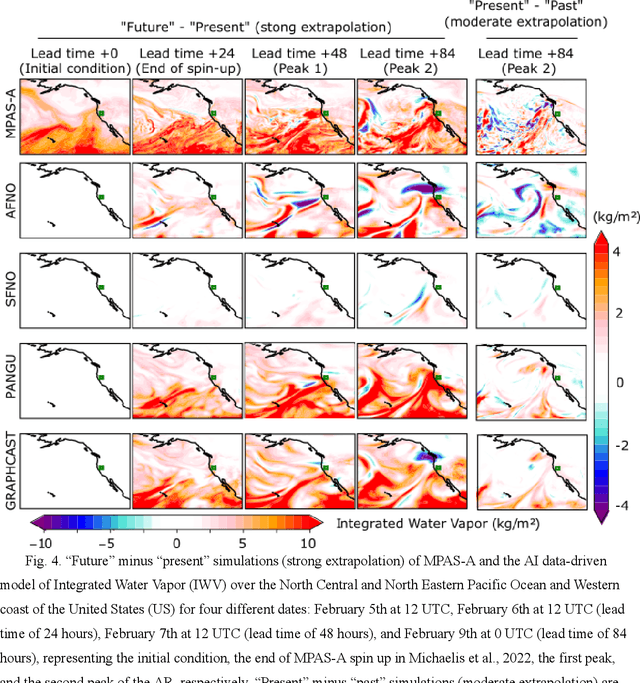

Abstract: AI data-driven models (Graphcast, Pangu Weather, Fourcastnet, and SFNO) are explored for storyline-based climate attribution due to their short inference times, which can accelerate the number of events studied, and provide real time attributions when public attention is heightened. The analysis is framed on the extreme atmospheric river episode of February 2017 that contributed to the Oroville dam spillway incident in Northern California. Past and future simulations are generated by perturbing the initial conditions with the pre-industrial and the late-21st century temperature climate change signals, respectively. The simulations are compared to results from a dynamical model which represents plausible pseudo-realities under both climate environments. Overall, the AI models show promising results, projecting a 5-6% increase in the integrated water vapor over the Oroville dam in the present day compared to the pre-industrial, in agreement with the dynamical model. Different geopotential-moisture-temperature dependencies are unveiled for each of the AI-models tested, providing valuable information for understanding the physicality of the attribution response. However, the AI models tend to simulate weaker attribution values than the pseudo-reality imagined by the dynamical model, suggesting some reduced extrapolation skill, especially for the late-21st century regime. Large ensembles generated with an AI model (>500 members) produced statistically significant present-day to pre-industrial attribution results, unlike the >20-member ensemble from the dynamical model. This analysis highlights the potential of AI models to conduct attribution analysis, while emphasizing future lines of work on explainable artificial intelligence to gain confidence in these tools, which can enable reliable attribution studies in real-time.

On the use of Deep Generative Models for Perfect Prognosis Climate Downscaling

Apr 27, 2023

Abstract: Deep Learning has recently emerged as a perfect prognosis downscaling technique to compute high-resolution fields from large-scale coarse atmospheric data. Despite promising results in reproducing the observed local variability, these models estimate independent distributions at each location, which leads to deficient spatial structures, especially when downscaling precipitation. This study proposes the use of generative models to improve the spatial consistency of the high-resolution fields, which is in high demand in some sectoral applications (e.g., hydrology) for tackling climate change.

Deep Ensembles to Improve Uncertainty Quantification of Statistical Downscaling Models under Climate Change Conditions

Apr 27, 2023

Abstract: Recently, deep learning has emerged as a promising tool for statistical downscaling, the set of methods for generating high-resolution climate fields from coarse low-resolution variables. Nevertheless, their ability to generalize to climate change conditions remains questionable, mainly due to the stationarity assumption. We propose deep ensembles as a simple method to improve the uncertainty quantification of statistical downscaling models. By better capturing uncertainty, statistical downscaling models allow for superior planning against extreme weather events, a source of various negative social and economic impacts. Since no observational future data exists, we rely on a pseudo reality experiment to assess the suitability of deep ensembles for quantifying the uncertainty of climate change projections. Deep ensembles allow for a better risk assessment, highly demanded by sectoral applications to tackle climate change.
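
The abstract does not say how the ensemble members are combined, so the following is only a minimal sketch of one common deep-ensemble formulation, not the authors' implementation. It assumes each independently trained network outputs a Gaussian mean and variance for the same target, and treats the ensemble as a uniformly weighted mixture. The function name `combine_gaussian_members` and the numbers are hypothetical, chosen purely for illustration.

```python
import math


def combine_gaussian_members(means, variances):
    """Combine per-member Gaussian predictions into one mean and variance.

    Assumption: the ensemble is a uniformly weighted mixture of Gaussians.
    """
    if not means or len(means) != len(variances):
        raise ValueError("means and variances must be non-empty and equally long")
    n_members = len(means)
    mix_mean = sum(means) / n_members
    # Average of the variances predicted by the members themselves.
    within = sum(variances) / n_members
    # Spread of the member means around the ensemble mean (disagreement).
    between = sum((mu - mix_mean) ** 2 for mu in means) / n_members
    return mix_mean, within + between


# Hypothetical predictions (e.g. temperature in degrees C) from three members.
member_means = [14.0, 15.0, 16.0]
member_vars = [1.0, 1.0, 1.0]

mean, var = combine_gaussian_members(member_means, member_vars)
print(f"ensemble mean = {mean:.2f}, ensemble std = {math.sqrt(var):.2f}")
```

In this formulation the total variance grows when the members disagree, which is the property that makes such ensembles attractive under conditions outside the training climate: disagreement between members shows up as wider predictive intervals.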

Using Explainability to Inform Statistical Downscaling Based on Deep Learning Beyond Standard Validation Approaches

Feb 03, 2023

Abstract: Deep learning (DL) has emerged as a promising tool to downscale climate projections at regional-to-local scales from large-scale atmospheric fields following the perfect-prognosis (PP) approach. Given their complexity, it is crucial to properly evaluate these methods, especially when applied to changing climatic conditions where the ability to extrapolate/generalise is key. In this work, we intercompare several DL models extracted from the literature for the same challenging use-case (downscaling temperature in the CORDEX North America domain) and expand standard evaluation methods building on eXplainable artificial intelligence (XAI) techniques. We show how these techniques can be used to unravel the internal behaviour of these models, providing new evaluation dimensions and aiding in their diagnostic and design. These results show the usefulness of incorporating XAI techniques into statistical downscaling evaluation frameworks, especially when working with large regions and/or under climate change conditions.
