Maciel Zortea

Fine-tuning of Geospatial Foundation Models for Aboveground Biomass Estimation

Jun 28, 2024

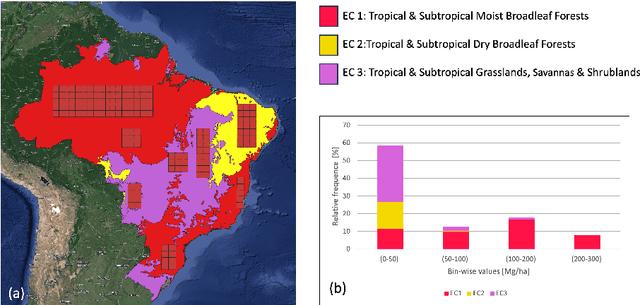

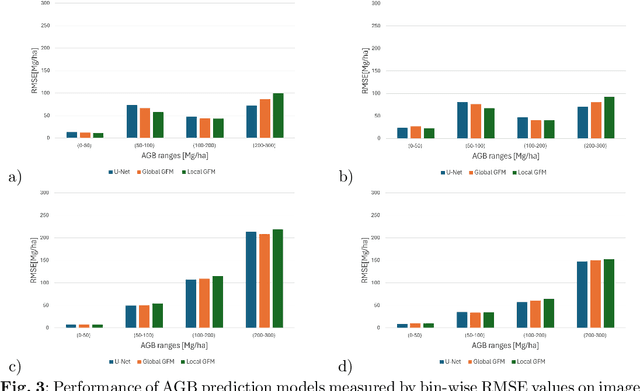

Abstract: Global vegetation structure mapping is critical for understanding the global carbon cycle and maximizing the efficacy of nature-based carbon sequestration initiatives. Moreover, vegetation structure mapping can help reduce the impacts of climate change by, for example, guiding actions to improve water security, increase biodiversity and reduce flood risk. Global satellite measurements provide an important set of observations for monitoring and managing deforestation and degradation of existing forests, natural forest regeneration, reforestation, biodiversity restoration, and the implementation of sustainable agricultural practices. In this paper, we explore the effectiveness of fine-tuning a geospatial foundation model to estimate above-ground biomass (AGB) using space-borne data collected across different eco-regions in Brazil. The fine-tuned model architecture consisted of a Swin-B transformer as the encoder (i.e., backbone) and a single convolutional layer for the decoder head. All results were compared to a U-Net trained as the baseline model. Experimental results of this sparse-label prediction task demonstrate that the fine-tuned geospatial foundation model with a frozen encoder has comparable performance to a U-Net trained from scratch. This is despite the fine-tuned model having 13 times fewer parameters requiring optimization, which saves both time and compute resources. Further, we explore the transfer-learning capabilities of the geospatial foundation models by fine-tuning on satellite imagery with sparse labels from different eco-regions in Brazil.

Fast-Slow Streamflow Model Using Mass-Conserving LSTM

Jul 13, 2021

Abstract: Streamflow forecasting is key to effectively managing water resources and preparing for natural calamities that are being exacerbated by climate change. Here we use the concept of fast and slow flow components to create a new mass-conserving Long Short-Term Memory (LSTM) neural network model. It uses hydrometeorological time series and catchment attributes to predict daily river discharges. Preliminary results show improved skill across several scores compared with the recent literature.

Geostatistical Learning: Challenges and Opportunities

Feb 17, 2021

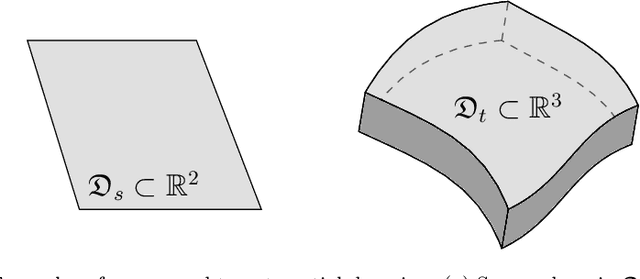

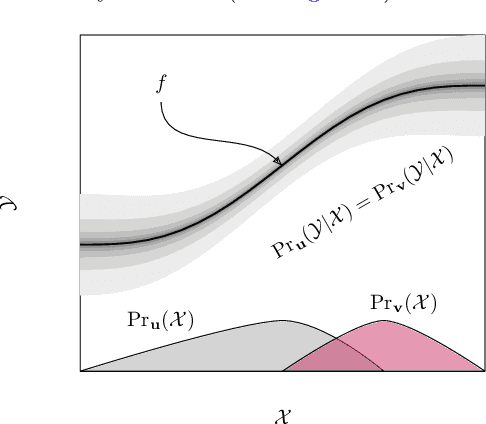

Abstract: Statistical learning theory provides the foundation for applied machine learning and its various successful applications in computer vision, natural language processing and other scientific domains. The theory, however, does not take into account the unique challenges of performing statistical learning in geospatial settings. For instance, it is well known that model errors cannot be assumed to be independent and identically distributed in geospatial (a.k.a. regionalized) variables due to spatial correlation; and trends caused by geophysical processes lead to covariate shifts between the domain where the model was trained and the domain where it will be applied, which in turn harm the use of classical learning methodologies that rely on random samples of the data. In this work, we introduce the geostatistical (transfer) learning problem, and illustrate the challenges of learning from geospatial data by assessing widely used methods for estimating the generalization error of learning models under covariate shift and spatial correlation. Experiments with synthetic Gaussian process data as well as with real data from geophysical surveys in New Zealand indicate that none of the methods are adequate for model selection in a geospatial context. We provide general guidelines regarding the choice of these methods in practice while new methods are being actively researched.

Comparison of computer systems and ranking criteria for automatic melanoma detection in dermoscopic images

Feb 05, 2018

Abstract: Melanoma is the deadliest form of skin cancer. Computer systems can assist in melanoma detection, but are not widespread in clinical practice. In 2016, an open challenge in classification of dermoscopic images of skin lesions was announced. A training set of 900 images with corresponding class labels and semi-automatic/manual segmentation masks was released for the challenge. An independent test set of 379 images was used to rank the participants. This article demonstrates the impact of ranking criteria, segmentation method and classifier, and highlights the clinical perspective. We compare five different measures for diagnostic accuracy by analysing the resulting ranking of the computer systems in the challenge. Choice of performance measure had a great impact on the ranking. Systems that were ranked among the top three for one measure dropped to the bottom half when the performance measure was changed. Nevus Doctor, a computer system previously developed by the authors, was used to investigate the impact of segmentation and classifier. The unexpectedly small impact of automatic versus semi-automatic/manual segmentation suggests that improvements of the automatic segmentation method w.r.t. resemblance to semi-automatic/manual segmentation will not improve diagnostic accuracy substantially. A small set of similar classification algorithms was used to investigate the impact of classifier on the diagnostic accuracy. The variability in diagnostic accuracy for different classifier algorithms was larger than the variability for segmentation methods, which suggests a focus for future investigations. From a clinical perspective, the misclassification of a melanoma as benign has far greater cost than the misclassification of a benign lesion. For computer systems to have clinical impact, their performance should be ranked by a high-sensitivity measure.
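
The dependence of a ranking on the chosen performance measure can be illustrated with a small sketch. The two systems and their confusion counts below are hypothetical and are not taken from the challenge; the function names are likewise illustrative. The sketch only shows how ranking by overall accuracy and ranking by sensitivity can disagree when melanomas are the minority class.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Confusion counts for one system, with melanoma as the positive class."""
    tp: int  # melanomas correctly flagged
    fn: int  # melanomas missed (classified as benign)
    tn: int  # benign lesions correctly cleared
    fp: int  # benign lesions flagged as melanoma


def accuracy(c: Confusion) -> float:
    return (c.tp + c.tn) / (c.tp + c.fn + c.tn + c.fp)


def sensitivity(c: Confusion) -> float:
    return c.tp / (c.tp + c.fn)


def specificity(c: Confusion) -> float:
    return c.tn / (c.tn + c.fp)


# Hypothetical test set: 20 melanomas and 80 benign lesions.
systems = {
    "A": Confusion(tp=8, fn=12, tn=78, fp=2),    # cautious about flagging
    "B": Confusion(tp=18, fn=2, tn=60, fp=20),   # flags aggressively
}


def rank(systems: dict, measure) -> list:
    """Return system names ordered from best to worst under `measure`."""
    return sorted(systems, key=lambda name: measure(systems[name]), reverse=True)


rank_by_accuracy = rank(systems, accuracy)
rank_by_sensitivity = rank(systems, sensitivity)

for name, c in systems.items():
    print(name, f"acc={accuracy(c):.2f}",
          f"sens={sensitivity(c):.2f}", f"spec={specificity(c):.2f}")
print("by accuracy:   ", rank_by_accuracy)
print("by sensitivity:", rank_by_sensitivity)
```

In this hypothetical case, system A ranks first by accuracy while missing most melanomas, and system B ranks first by sensitivity, which is the kind of reversal the abstract describes.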
