Hou

When Segmentation Meets Hyperspectral Image: New Paradigm for Hyperspectral Image Classification

Feb 18, 2025

Abstract:Hyperspectral image (HSI) classification is a cornerstone of remote sensing, enabling precise material and land-cover identification through rich spectral information. While deep learning has driven significant progress in this task, small patch-based classifiers, which account for over 90% of the progress, face limitations: (1) the small patch (e.g., 7x7, 9x9)-based sampling approach considers a limited receptive field, resulting in insufficient spatial structural information critical for object-level identification and noise-like misclassifications even within uniform regions; (2) undefined optimal patch sizes lead to coarse label predictions, which degrade performance; and (3) a lack of multi-shape awareness around objects. To address these challenges, we draw inspiration from large-scale image segmentation techniques, which excel at handling object boundaries, a capability essential for semantic labeling in HSI classification. However, their application remains under-explored in this task due to (1) the prevailing notion that larger patch sizes degrade performance, (2) the extensive unlabeled regions in HSI ground truth, and (3) the misalignment of input shapes between HSI data and segmentation models. Thus, in this study, we propose a novel paradigm and baseline, HSIseg, for HSI classification that leverages segmentation techniques combined with a novel Dynamic Shifted Regional Transformer (DSRT) to overcome these challenges. We also introduce an intuitive progressive learning framework with adaptive pseudo-labeling to iteratively incorporate unlabeled regions into the training process, thereby advancing the application of segmentation techniques. Additionally, we incorporate auxiliary data through multi-source data collaboration, promoting better feature interaction. Validated on five public HSI datasets, our proposal outperforms state-of-the-art methods.
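For readers unfamiliar with the small patch-based sampling the abstract criticizes, the following is a minimal sketch of how one such patch might be cut from an HSI cube. It is not taken from the paper: the function name `extract_patch`, the (height, width, bands) array layout, and the reflect padding at the borders are all assumptions made for illustration.

```python
import numpy as np

def extract_patch(cube, row, col, size=7):
    """Return the size x size spatial patch centered on (row, col), keeping all bands.

    Hypothetical helper: assumes `cube` has shape (height, width, bands)
    and that `size` is odd.
    """
    half = size // 2
    # Reflect-pad only the two spatial axes so border pixels also get full patches.
    padded = np.pad(cube, ((half, half), (half, half), (0, 0)), mode="reflect")
    # Pixel (row, col) of the original sits at (row + half, col + half) in `padded`,
    # so this slice is centered on it.
    return padded[row:row + size, col:col + size, :]

# Synthetic cube standing in for a real scene: 20 x 30 pixels, 50 spectral bands.
cube = np.random.default_rng(0).random((20, 30, 50))
corner_patch = extract_patch(cube, 0, 0, size=7)
inner_patch = extract_patch(cube, 10, 15, size=9)
```

Each patch covers only a few pixels around its center, which is the limited receptive field the abstract refers to; a classifier fed such patches predicts one label for the center pixel at a time.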

Roadmap to Neuromorphic Computing with Emerging Technologies

Jul 02, 2024

Abstract:The roadmap is organized into several thematic sections, outlining current computing challenges, discussing the neuromorphic computing approach, analyzing mature and currently utilized technologies, providing an overview of emerging technologies, addressing material challenges, exploring novel computing concepts, and finally examining the maturity level of emerging technologies while determining the next essential steps for their advancement.

Mamba-in-Mamba: Centralized Mamba-Cross-Scan in Tokenized Mamba Model for Hyperspectral Image Classification

May 20, 2024

Abstract:Hyperspectral image (HSI) classification is pivotal in the remote sensing (RS) field, particularly with the advancement of deep learning techniques. Sequential models adapted from natural language processing (NLP), such as Recurrent Neural Networks (RNNs) and Transformers, have been tailored to this task, offering a unique viewpoint. However, several challenges persist: 1) RNNs struggle with centric feature aggregation and are sensitive to interfering pixels, 2) Transformers require significant computational resources and often underperform with limited HSI training samples, and 3) current scanning methods for converting images into sequence data are simplistic and inefficient. In response, this study introduces the Mamba-in-Mamba (MiM) architecture for HSI classification, the first attempt to deploy a State Space Model (SSM) in this task. The MiM model includes 1) a novel centralized Mamba-Cross-Scan (MCS) mechanism for transforming images into sequence data, 2) a Tokenized Mamba (T-Mamba) encoder that incorporates a Gaussian Decay Mask (GDM), a Semantic Token Learner (STL), and a Semantic Token Fuser (STF) for enhanced feature generation and concentration, and 3) a Weighted MCS Fusion (WMF) module coupled with a Multi-Scale Loss Design to improve decoding efficiency. Experimental results on three public HSI datasets with fixed and disjoint training-testing samples demonstrate that our method outperforms existing baselines and state-of-the-art approaches, highlighting its efficacy and potential in HSI applications.
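The abstract names a Gaussian Decay Mask (GDM) but does not define it. As a rough illustration of the general idea of down-weighting pixels far from a patch center, the sketch below builds a 2D Gaussian weight map. The function `gaussian_decay_mask`, the `sigma` parameter, and the way the mask is applied are assumptions for illustration and may differ from the paper's actual formulation.

```python
import numpy as np

def gaussian_decay_mask(size, sigma=1.0):
    """Return a size x size map of weights that decay with distance from the center.

    Hypothetical sketch: assumes `size` is odd so there is a single center pixel.
    """
    half = size // 2
    # Integer offsets of every pixel from the center, along rows (ys) and columns (xs).
    ys, xs = np.mgrid[-half:half + 1, -half:half + 1]
    # Unnormalized Gaussian: weight 1 at the center, shrinking with squared distance.
    return np.exp(-(xs ** 2 + ys ** 2) / (2.0 * sigma ** 2))

mask = gaussian_decay_mask(7, sigma=2.0)

# Apply the mask to a synthetic 7 x 7 patch with 50 bands by broadcasting over bands.
patch = np.random.default_rng(0).random((7, 7, 50))
weighted_patch = patch * mask[:, :, None]
```

With this weighting the center pixel keeps its full spectrum while surrounding pixels contribute less, which is one plausible way to reduce the influence of interfering pixels mentioned in the abstract.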

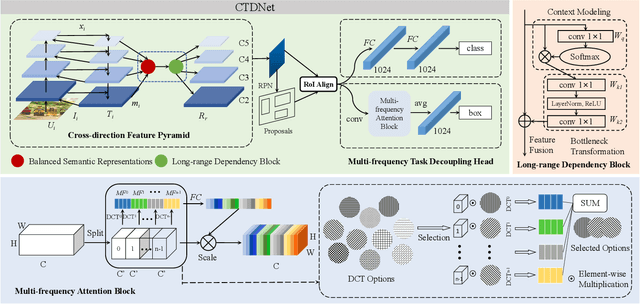

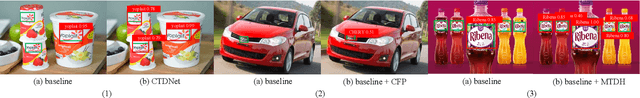

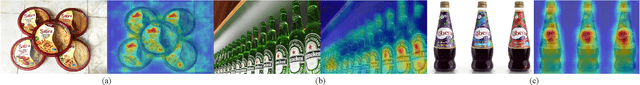

A Cross-direction Task Decoupling Network for Small Logo Detection

May 04, 2023

Abstract:Logo detection plays an integral role in many applications. However, handling small logos is still difficult since they occupy too few pixels in the image, which hinders the extraction of discriminative features. The aggregation of small logos also poses a great challenge to the classification and localization of logos. To solve these problems, we propose the Cross-direction Task Decoupling Network (CTDNet) for small logo detection. We first introduce a Cross-direction Feature Pyramid (CFP) to realize cross-direction feature fusion by adopting horizontal transmission and vertical transmission. In addition, a Multi-frequency Task Decoupling Head (MTDH) decouples the classification and localization tasks into two branches. A multi-frequency attention convolution branch is designed to achieve more accurate regression by combining the discrete cosine transform with convolution. Comprehensive experiments on four logo datasets demonstrate the effectiveness and efficiency of the proposed method.
