Adnan Mehonic

Alex

Nonideality-aware training makes memristive networks more robust to adversarial attacks

Sep 29, 2024

Abstract:Neural networks are now deployed in a wide number of areas from object classification to natural language systems. Implementations using analog devices like memristors promise better power efficiency, potentially bringing these applications to a greater number of environments. However, such systems suffer from more frequent device faults and overall, their exposure to adversarial attacks has not been studied extensively. In this work, we investigate how nonideality-aware training - a common technique to deal with physical nonidealities - affects adversarial robustness. We find that adversarial robustness is significantly improved, even with limited knowledge of what nonidealities will be encountered during test time.

Roadmap to Neuromorphic Computing with Emerging Technologies

Jul 02, 2024

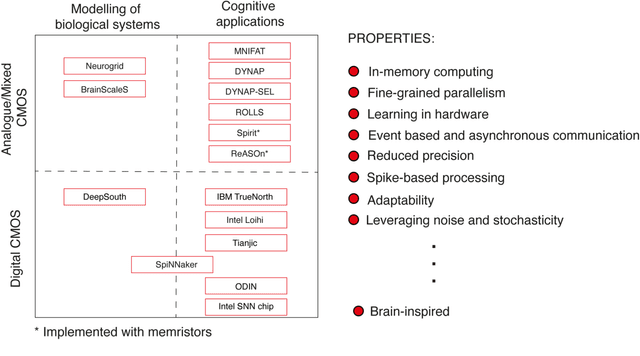

Abstract:The roadmap is organized into several thematic sections, outlining current computing challenges, discussing the neuromorphic computing approach, analyzing mature and currently utilized technologies, providing an overview of emerging technologies, addressing material challenges, exploring novel computing concepts, and finally examining the maturity level of emerging technologies while determining the next essential steps for their advancement.

Brain-inspired computing: We need a master plan

Apr 29, 2021

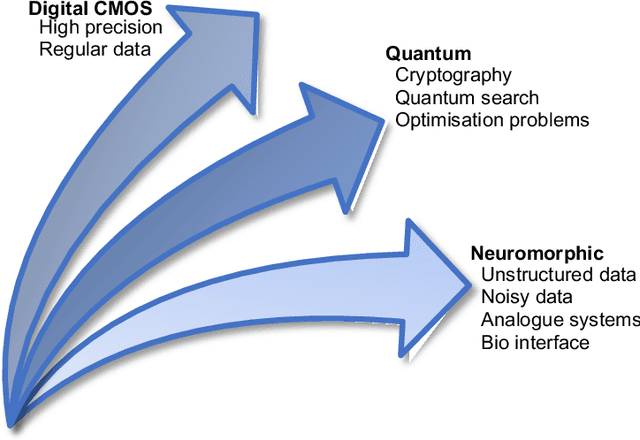

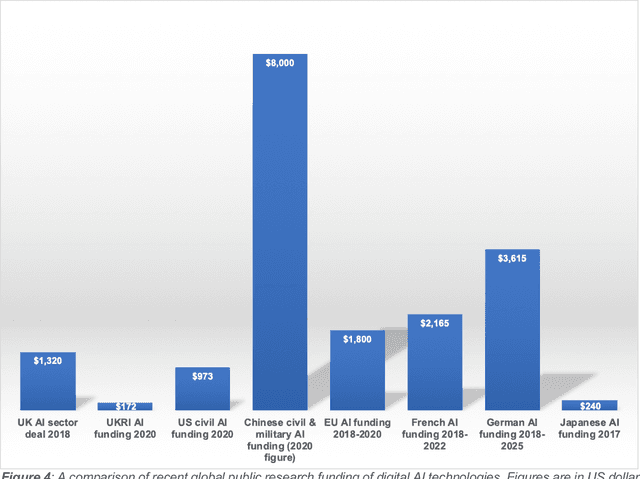

Abstract:New computing technologies inspired by the brain promise fundamentally different ways to process information with extreme energy efficiency and the ability to handle the avalanche of unstructured and noisy data that we are generating at an ever-increasing rate. To realise this promise requires a brave and coordinated plan to bring together disparate research communities and to provide them with the funding, focus and support needed. We have done this in the past with digital technologies; we are in the process of doing it with quantum technologies; can we now do it for brain-inspired computing?

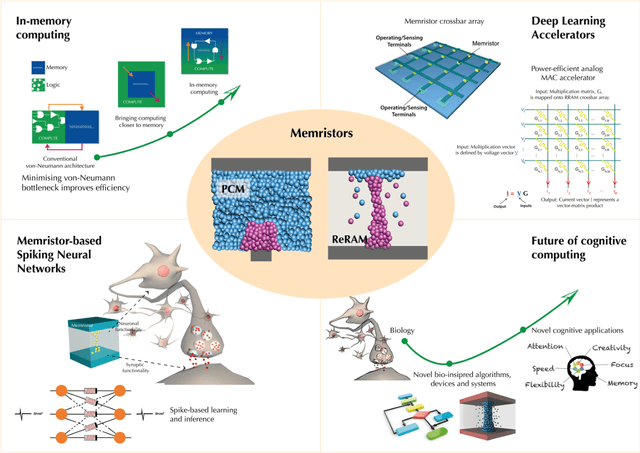

Memristors -- from In-memory computing, Deep Learning Acceleration, Spiking Neural Networks, to the Future of Neuromorphic and Bio-inspired Computing

Apr 30, 2020

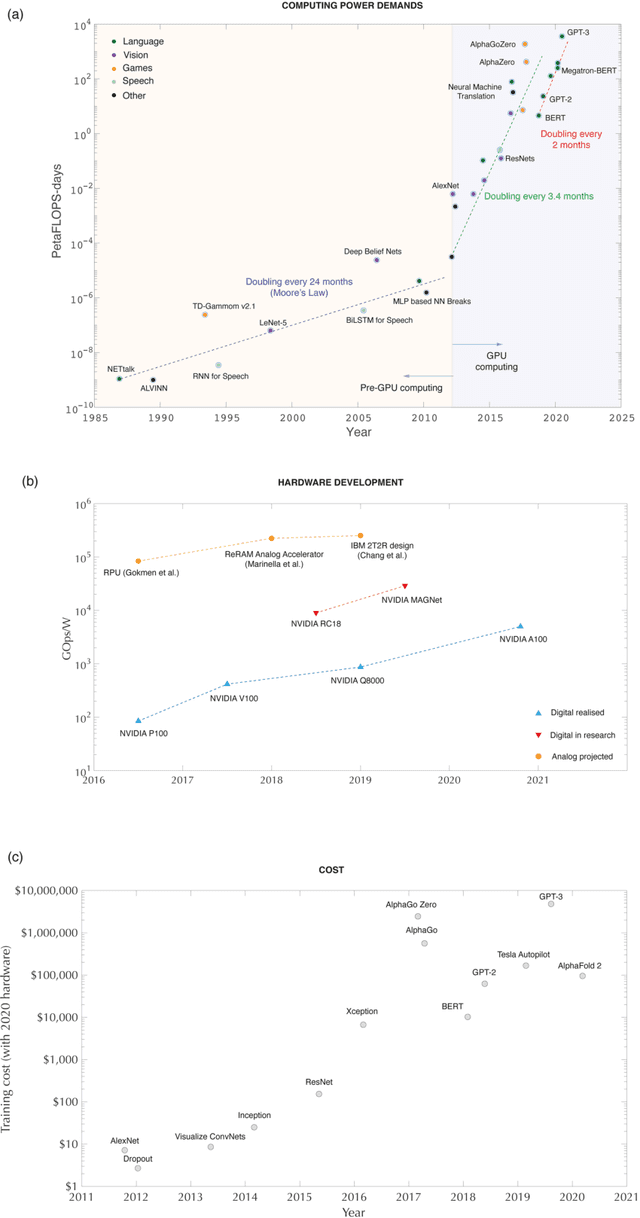

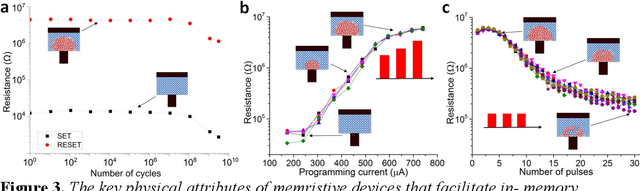

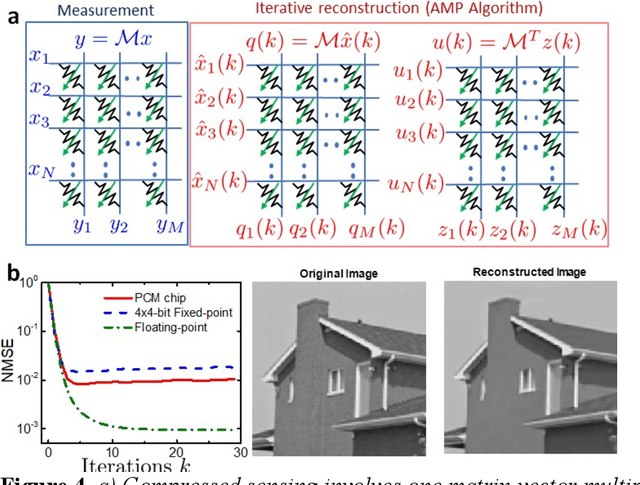

Abstract:Machine learning, particularly in the form of deep learning, has driven most of the recent fundamental developments in artificial intelligence. Deep learning is based on computational models that are, to a certain extent, bio-inspired, as they rely on networks of connected simple computing units operating in parallel. Deep learning has been successfully applied in areas such as object/pattern recognition, speech and natural language processing, self-driving vehicles, intelligent self-diagnostics tools, autonomous robots, knowledgeable personal assistants, and monitoring. These successes have been mostly supported by three factors: availability of vast amounts of data, continuous growth in computing power, and algorithmic innovations. The approaching demise of Moore's law, and the consequent expected modest improvements in computing power that can be achieved by scaling, raise the question of whether the described progress will be slowed or halted due to hardware limitations. This paper reviews the case for a novel beyond-CMOS hardware technology, memristors, as a potential solution for the implementation of power-efficient in-memory computing, deep learning accelerators, and spiking neural networks. Central themes are the reliance on non-von-Neumann computing architectures and the need for developing tailored learning and inference algorithms. To argue that lessons from biology can be useful in providing directions for further progress in artificial intelligence, we briefly discuss an example based on reservoir computing. We conclude the review by speculating on the big picture view of future neuromorphic and brain-inspired computing systems.
