Emil Lupu

Hallucination-Resistant Security Planning with a Large Language Model

Feb 05, 2026

Abstract: Large language models (LLMs) are promising tools for supporting security management tasks, such as incident response planning. However, their unreliability and tendency to hallucinate remain significant challenges. In this paper, we address these challenges by introducing a principled framework for using an LLM as decision support in security management. Our framework integrates the LLM in an iterative loop where it generates candidate actions that are checked for consistency with system constraints and lookahead predictions. When consistency is low, we abstain from the generated actions and instead collect external feedback, e.g., by evaluating actions in a digital twin. This feedback is then used to refine the candidate actions through in-context learning (ICL). We prove that this design allows the hallucination risk to be controlled by tuning the consistency threshold. Moreover, we establish a bound on the regret of ICL under certain assumptions. To evaluate our framework, we apply it to an incident response use case where the goal is to generate a response and recovery plan based on system logs. Experiments on four public datasets show that our framework reduces recovery times by up to 30% compared to frontier LLMs.
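The generate, check, abstain, and refine loop described in this abstract can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function `plan_with_abstention`, its parameters, and the toy stand-ins for the LLM, the consistency check, and the digital twin are all hypothetical names introduced here for illustration only.

```python
from typing import Callable, List, Optional


def plan_with_abstention(
    generate: Callable[[List[str]], List[str]],      # hypothetical LLM call: context -> candidate actions
    consistency: Callable[[List[str]], float],       # hypothetical score in [0, 1]
    collect_feedback: Callable[[List[str]], str],    # hypothetical digital-twin evaluation
    threshold: float,
    max_rounds: int = 5,
) -> Optional[List[str]]:
    """Return candidate actions once they pass the consistency threshold.

    When consistency is below the threshold, the candidates are rejected
    (abstention), feedback is collected, and the feedback is appended to
    the context used for the next generation round.
    """
    context: List[str] = []
    for _ in range(max_rounds):
        candidates = generate(context)
        if consistency(candidates) >= threshold:
            return candidates
        # Abstain: do not use the candidates; gather external feedback instead.
        context.append(collect_feedback(candidates))
    return None  # no sufficiently consistent plan within the round budget


# Toy stand-ins (assumptions, for demonstration only).
def toy_generate(context: List[str]) -> List[str]:
    return ["reboot server"] if not context else ["isolate host", "restore backup"]


def toy_consistency(actions: List[str]) -> float:
    return 0.9 if "isolate host" in actions else 0.2


def toy_feedback(actions: List[str]) -> str:
    return "twin: '" + ", ".join(actions) + "' did not contain the intrusion"


plan = plan_with_abstention(toy_generate, toy_consistency, toy_feedback, threshold=0.5)
strict_plan = plan_with_abstention(toy_generate, toy_consistency, toy_feedback, threshold=1.0)
```

In this toy run the first candidate is rejected, one round of feedback is collected, and the second candidate is accepted. Raising the threshold to 1.0 makes the loop abstain in every round, which shows the trade-off the threshold controls: stricter thresholds accept fewer plans.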

Nonideality-aware training makes memristive networks more robust to adversarial attacks

Sep 29, 2024

Abstract: Neural networks are now deployed in a wide range of areas, from object classification to natural language systems. Implementations using analog devices like memristors promise better power efficiency, potentially bringing these applications to a greater number of environments. However, such systems suffer from more frequent device faults and, overall, their exposure to adversarial attacks has not been studied extensively. In this work, we investigate how nonideality-aware training, a common technique for dealing with physical nonidealities, affects adversarial robustness. We find that adversarial robustness is significantly improved, even with limited knowledge of what nonidealities will be encountered at test time.

Exact Inference Techniques for the Analysis of Bayesian Attack Graphs

Nov 04, 2016

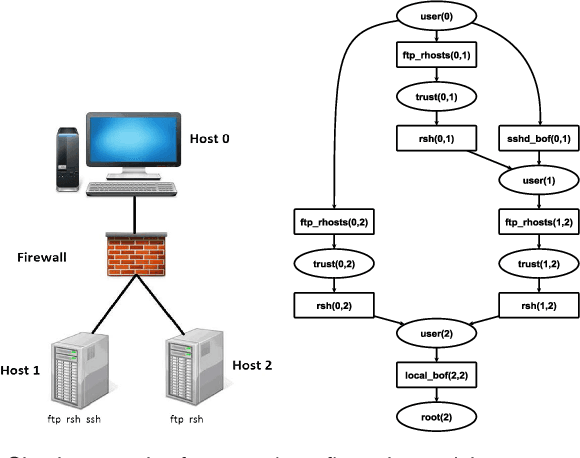

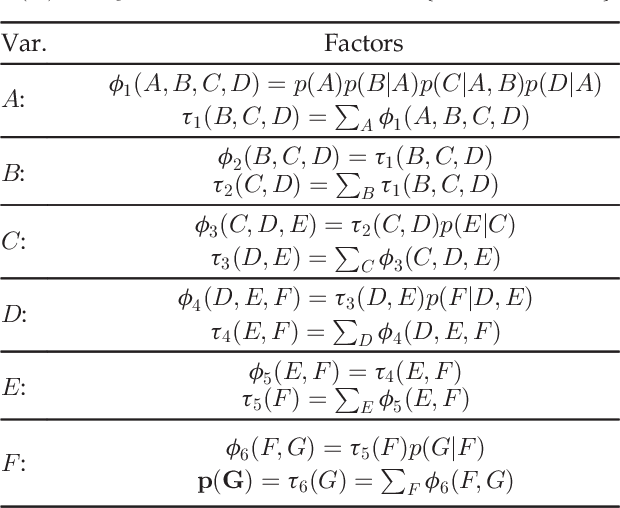

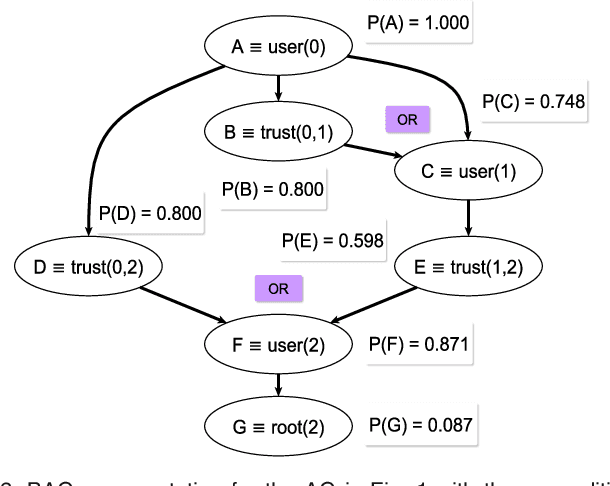

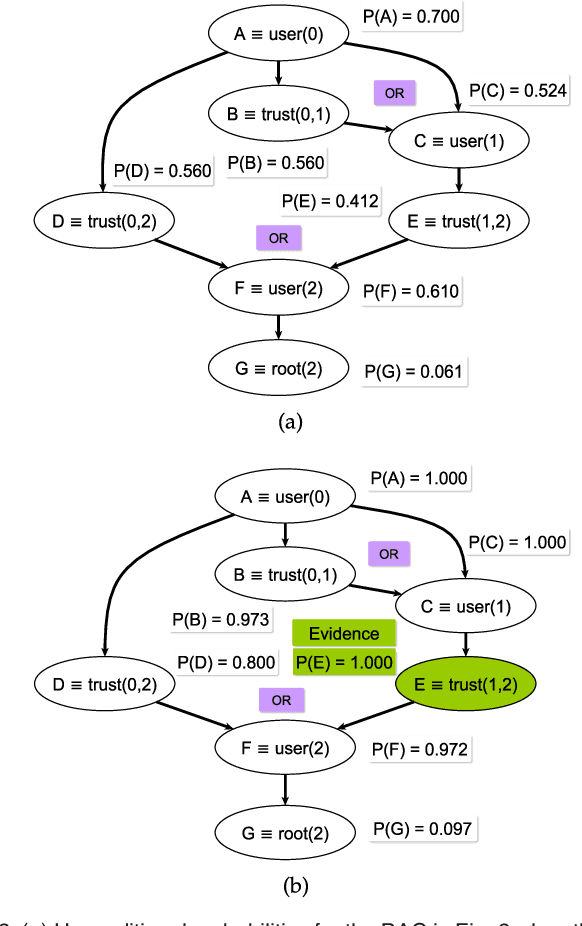

Abstract: Attack graphs are a powerful tool for security risk assessment, analysing network vulnerabilities and the paths attackers can use to compromise network resources. The uncertainty about the attacker's behaviour makes Bayesian networks suitable for modelling attack graphs and performing static and dynamic analysis. Previous approaches have focused on the formalization of attack graphs into a Bayesian model rather than proposing mechanisms for their analysis. In this paper, we propose to use efficient algorithms to perform exact inference in Bayesian attack graphs, enabling static and dynamic network risk assessment. To support the validity of our approach, we have performed an extensive experimental evaluation on synthetic Bayesian attack graphs with different topologies, showing the computational advantages in terms of time and memory use of the proposed techniques when compared to existing approaches.
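To make the static and dynamic queries concrete, here is a small Python sketch of exact inference on a three-node Bayesian attack graph. It uses brute-force enumeration over all joint states, not the efficient algorithms the paper proposes, so it only scales to tiny graphs. The graph structure, the node names `A`, `B`, `C`, and all probabilities are made-up assumptions for illustration.

```python
from itertools import product

# Hypothetical graph: exploits A and B are independent root nodes, and the
# target C is compromised through a noisy-OR of the two exploits.
P_A = 0.3  # assumed prior probability that exploit A succeeds
P_B = 0.4  # assumed prior probability that exploit B succeeds


def p_c_given(a: int, b: int) -> float:
    """Noisy-OR: A alone compromises C with 0.8, B alone with 0.5 (assumed)."""
    return 1.0 - (1.0 - 0.8 * a) * (1.0 - 0.5 * b)


def joint(a: int, b: int, c: int) -> float:
    """Joint probability P(A=a, B=b, C=c) from the factorisation above."""
    pa = P_A if a else 1.0 - P_A
    pb = P_B if b else 1.0 - P_B
    pc1 = p_c_given(a, b)
    pc = pc1 if c else 1.0 - pc1
    return pa * pb * pc


def query(target: str, evidence: dict) -> float:
    """Exact P(target = 1 | evidence), summing over every consistent world."""
    numerator = denominator = 0.0
    for a, b, c in product((0, 1), repeat=3):
        world = {"A": a, "B": b, "C": c}
        if any(world[name] != value for name, value in evidence.items()):
            continue  # world contradicts the observed evidence
        p = joint(a, b, c)
        denominator += p
        if world[target] == 1:
            numerator += p
    return numerator / denominator


# Static analysis: prior risk that the target is compromised.
static_risk = query("C", {})
# Dynamic analysis: belief that exploit A was used, after observing C compromised.
posterior_a = query("A", {"C": 1})
```

Enumeration visits every joint state, so its cost doubles with each added node. That exponential growth is why dedicated exact-inference algorithms matter for attack graphs of realistic size.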
