Jared Strader

Language-Grounded Hierarchical Planning and Execution with Multi-Robot 3D Scene Graphs

Jun 09, 2025

Abstract:In this paper, we introduce a multi-robot system that integrates mapping, localization, and task and motion planning (TAMP) enabled by 3D scene graphs to execute complex instructions expressed in natural language. Our system builds a shared 3D scene graph incorporating an open-set object-based map, which is leveraged for multi-robot 3D scene graph fusion. This representation supports real-time, view-invariant relocalization (via the object-based map) and planning (via the 3D scene graph), allowing a team of robots to reason about their surroundings and execute complex tasks. Additionally, we introduce a planning approach that translates operator intent into Planning Domain Definition Language (PDDL) goals using a Large Language Model (LLM) by leveraging context from the shared 3D scene graph and robot capabilities. We provide an experimental assessment of the performance of our system on real-world tasks in large-scale, outdoor environments.

Belief Roadmaps with Uncertain Landmark Evanescence

Jan 29, 2025

Abstract:We would like a robot to navigate to a goal location while minimizing state uncertainty. To aid the robot in this endeavor, maps provide a prior belief over the location of objects and regions of interest. To localize itself within the map, a robot identifies mapped landmarks using its sensors. However, as the time between map creation and robot deployment increases, portions of the map can become stale, and landmarks, once believed to be permanent, may disappear. We refer to the propensity of a landmark to disappear as landmark evanescence. Reasoning about landmark evanescence during path planning, and the associated impact on localization accuracy, requires analyzing the presence or absence of each landmark, leading to an exponential number of possible outcomes of a given motion plan. To address this complexity, we develop BRULE, an extension of the Belief Roadmap. During planning, we replace the belief over future robot poses with a Gaussian mixture which is able to capture the effects of landmark evanescence. Furthermore, we show that belief updates can be made efficient, and that maintaining a random subset of mixture components is sufficient to find high quality solutions. We demonstrate performance in simulated and real-world experiments. Software is available at https://bit.ly/BRULE.
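The branching described in this abstract can be illustrated with a minimal sketch. This is not the BRULE implementation: it assumes a 1D pose, and the names `observe_landmark`, `subsample`, `p_present`, and `meas_var` are hypothetical. Each landmark splits every mixture component into a "present" branch (variance reduced by a Kalman-style update) and an "absent" branch (unchanged), so the component count doubles per landmark. Uniform random subsampling is one possible reading of "maintaining a random subset of mixture components".

```python
import random

def observe_landmark(mixture, p_present, meas_var):
    """Branch each 1D Gaussian component (weight, mean, var) on landmark presence."""
    branched = []
    for weight, mean, var in mixture:
        # Landmark still present: a Kalman-style update shrinks the variance.
        # The mean is left as-is because the expected innovation is zero at planning time.
        updated_var = var * meas_var / (var + meas_var)
        branched.append((weight * p_present, mean, updated_var))
        # Landmark gone: no measurement arrives, so the component is unchanged.
        branched.append((weight * (1.0 - p_present), mean, var))
    return branched

def subsample(mixture, max_components, rng):
    """Keep a uniformly random subset of components and renormalize the weights."""
    if len(mixture) > max_components:
        mixture = rng.sample(mixture, max_components)
    total = sum(w for w, _, _ in mixture)
    return [(w / total, m, v) for w, m, v in mixture]

# Hypothetical persistence probabilities for four landmarks along a path.
rng = random.Random(0)
belief = [(1.0, 0.0, 4.0)]
for p in [0.9, 0.6, 0.8, 0.7]:
    belief = observe_landmark(belief, p, meas_var=1.0)
    belief = subsample(belief, max_components=4, rng=rng)
```

Without the subsampling step, four landmarks would already produce 16 components. Capping the mixture keeps the cost of each belief update bounded.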

Indoor and Outdoor 3D Scene Graph Generation via Language-Enabled Spatial Ontologies

Dec 18, 2023

Abstract:This paper proposes an approach to build 3D scene graphs in arbitrary (indoor and outdoor) environments. Such an extension is challenging; the hierarchy of concepts that describe an outdoor environment is more complex than for indoors, and manually defining such a hierarchy is time-consuming and does not scale. Furthermore, the lack of training data prevents the straightforward application of learning-based tools used in indoor settings. To address these challenges, we propose two novel extensions. First, we develop methods to build a spatial ontology defining concepts and relations relevant for indoor and outdoor robot operation. In particular, we use a Large Language Model (LLM) to build such an ontology, thus largely reducing the amount of manual effort required. Second, we leverage the spatial ontology for 3D scene graph construction using Logic Tensor Networks (LTN) to add logical rules, or axioms (e.g., "a beach contains sand"), which provide additional supervisory signals at training time, thus reducing the need for labelled data, providing better predictions, and even allowing predicting concepts unseen at training time. We test our approach in a variety of datasets, including indoor, rural, and coastal environments, and show that it leads to a significant increase in the quality of the 3D scene graph generation with sparsely annotated data.

Aggressive Aerial Grasping using a Soft Drone with Onboard Perception

Aug 11, 2023

Abstract:Contrary to the stunning feats observed in birds of prey, aerial manipulation and grasping with flying robots still lack versatility and agility. Conventional approaches using rigid manipulators require precise positioning and are subject to large reaction forces at grasp, which limit performance at high speeds. The few reported examples of aggressive aerial grasping rely on motion capture systems, or fail to generalize across environments and grasp targets. We describe the first example of a soft aerial manipulator equipped with a fully onboard perception pipeline, capable of robustly localizing and grasping visually and morphologically varied objects. The proposed system features a novel passively closing tendon-actuated soft gripper that enables fast closure at grasp, while compensating for position errors, complying to the target-object morphology, and dampening reaction forces. The system includes an onboard perception pipeline that combines a neural-network-based semantic keypoint detector with a state-of-the-art robust 3D object pose estimator, whose estimate is further refined using a fixed-lag smoother. The resulting pose estimate is passed to a minimum-snap trajectory planner, tracked by an adaptive controller that fully compensates for the added mass of the grasped object. Finally, a finite-element-based controller determines optimal gripper configurations for grasping. Rigorous experiments confirm that our approach enables dynamic, aggressive, and versatile grasping. We demonstrate fully onboard vision-based grasps of a variety of objects, in both indoor and outdoor environments, and up to speeds of 2.0 m/s -- the fastest vision-based grasp reported in the literature. Finally, we take a major step in expanding the utility of our platform beyond stationary targets, by demonstrating motion-capture-based grasps of targets moving up to 0.3 m/s, with relative speeds up to 1.5 m/s.

Foundations of Spatial Perception for Robotics: Hierarchical Representations and Real-time Systems

May 11, 2023

Abstract:3D spatial perception is the problem of building and maintaining an actionable and persistent representation of the environment in real-time using sensor data and prior knowledge. Despite the fast-paced progress in robot perception, most existing methods either build purely geometric maps (as in traditional SLAM) or flat metric-semantic maps that do not scale to large environments or large dictionaries of semantic labels. The first part of this paper is concerned with representations: we show that scalable representations for spatial perception need to be hierarchical in nature. Hierarchical representations are efficient to store, and lead to layered graphs with small treewidth, which enable provably efficient inference. We then introduce an example of hierarchical representation for indoor environments, namely a 3D scene graph, and discuss its structure and properties. The second part of the paper focuses on algorithms to incrementally construct a 3D scene graph as the robot explores the environment. Our algorithms combine 3D geometry, topology (to cluster the places into rooms), and geometric deep learning (e.g., to classify the type of rooms the robot is moving across). The third part of the paper focuses on algorithms to maintain and correct 3D scene graphs during long-term operation. We propose hierarchical descriptors for loop closure detection and describe how to correct a scene graph in response to loop closures, by solving a 3D scene graph optimization problem. We conclude the paper by combining the proposed perception algorithms into Hydra, a real-time spatial perception system that builds a 3D scene graph from visual-inertial data in real-time. We showcase Hydra's performance in photo-realistic simulations and real data collected by a Clearpath Jackal robot and a Unitree A1 robot. We release an open-source implementation of Hydra at https://github.com/MIT-SPARK/Hydra.

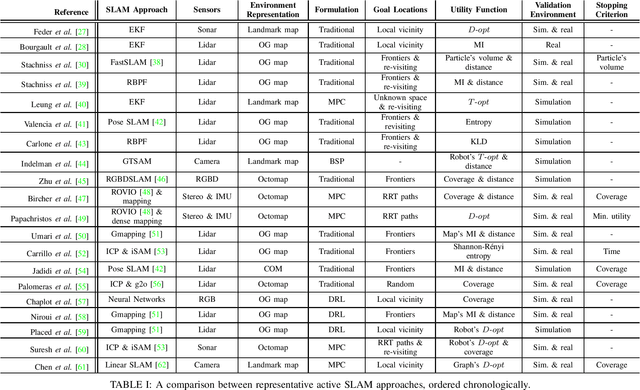

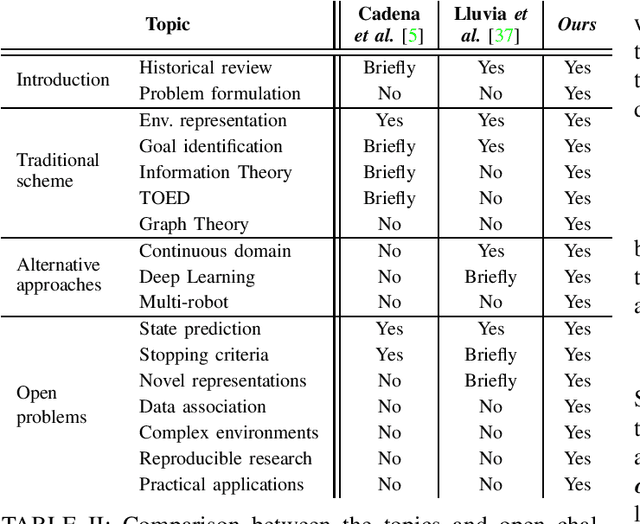

A Survey on Active Simultaneous Localization and Mapping: State of the Art and New Frontiers

Jul 01, 2022

Abstract:Active Simultaneous Localization and Mapping (SLAM) is the problem of planning and controlling the motion of a robot to build the most accurate and complete model of the surrounding environment. Since the first foundational work in active perception appeared, more than three decades ago, this field has received increasing attention across different scientific communities. This has brought about many different approaches and formulations, and makes a review of the current trends necessary and extremely valuable for both new and experienced researchers. In this work, we survey the state of the art in active SLAM and take an in-depth look at the open challenges that still require attention to meet the needs of modern applications. After providing a historical perspective, we present a unified problem formulation and review the classical solution scheme, which decouples the problem into three stages that identify, select, and execute potential navigation actions. We then analyze alternative approaches, including belief-space planning and modern techniques based on deep reinforcement learning, and review related work on multi-robot coordination. The manuscript concludes with a discussion of new research directions, addressing reproducible research, active spatial perception, and practical applications, among other topics.

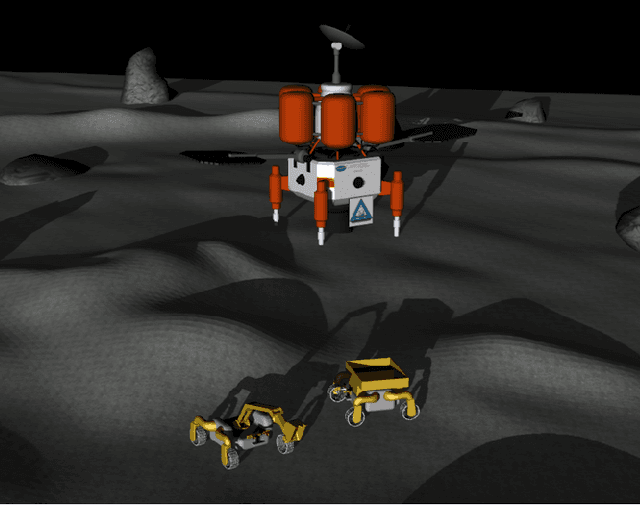

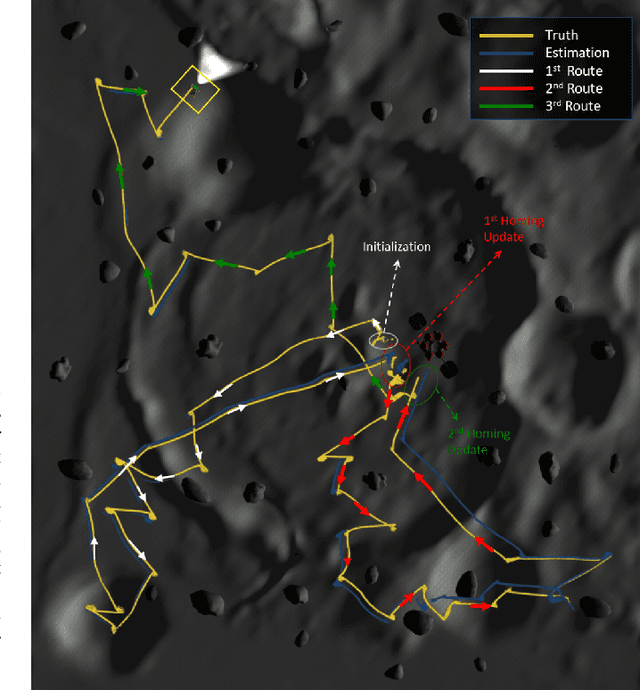

NASA Space Robotics Challenge 2 Qualification Round: An Approach to Autonomous Lunar Rover Operations

Sep 20, 2021

Abstract:Plans for establishing a long-term human presence on the Moon will require substantial increases in robot autonomy and multi-robot coordination to support establishing a lunar outpost. To achieve these objectives, algorithm design choices for the software developments need to be tested and validated for expected scenarios such as autonomous in-situ resource utilization (ISRU), localization in challenging environments, and multi-robot coordination. However, real-world experiments are extremely challenging and limited for extraterrestrial environments. Also, realistic simulation demonstrations in these environments are still rare, yet they are needed for initial algorithm testing. To help address some of these needs, the NASA Centennial Challenges program established the Space Robotics Challenge Phase 2 (SRC2), which consists of virtual robotic systems in a realistic lunar simulation environment, where a group of mobile robots were tasked with reporting volatile locations within a global map, excavating and transporting these resources, and detecting and localizing a target of interest. The main goal of this article is to share our team's experiences on the design trade-offs to perform autonomous robotic operations in a virtual lunar environment and to share strategies to complete the mission requirements posed by the NASA SRC2 competition during the qualification round. Of the 114 teams that registered for participation in the NASA SRC2, team Mountaineers finished as one of only six teams to receive the top qualification round prize.

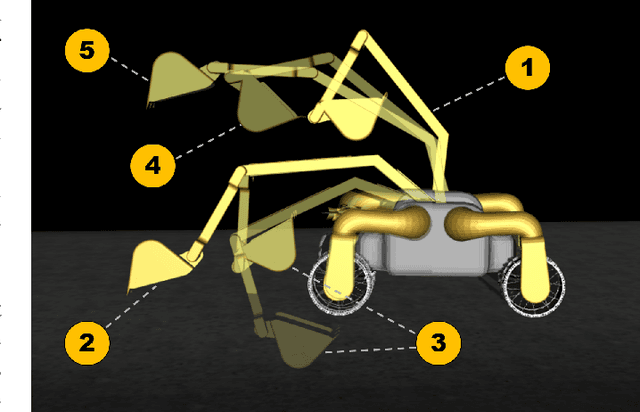

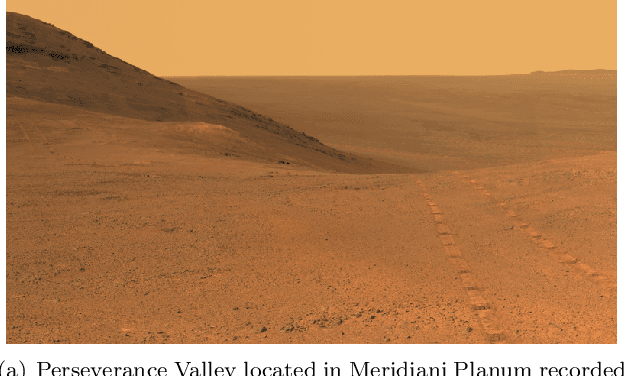

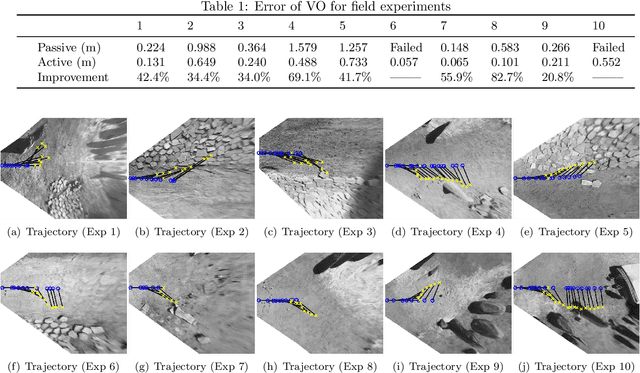

Perception-aware Autonomous Mast Motion Planning for Planetary Exploration Rovers

Dec 14, 2019

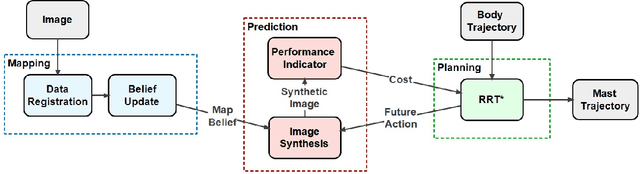

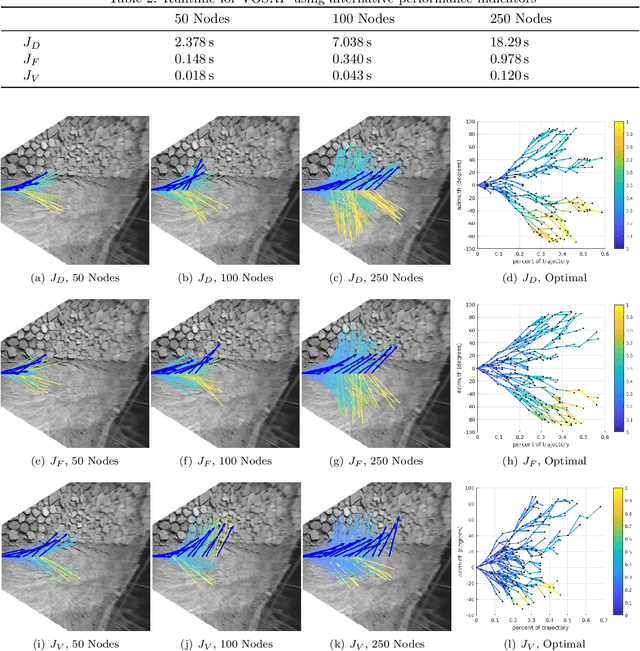

Abstract:Highly accurate real-time localization is of fundamental importance for the safety and efficiency of planetary rovers exploring the surface of Mars. Mars rover operations rely on vision-based systems to avoid hazards as well as plan safe routes. However, vision-based systems operate on the assumption that sufficient visual texture is visible in the scene. This poses a challenge for vision-based navigation on Mars where regions lacking visual texture are prevalent. To overcome this, we make use of the ability of the rover to actively steer the visual sensor to improve fault tolerance and maximize the perception performance. This paper answers the question of where and when to look by presenting a method for predicting the sensor trajectory that maximizes the localization performance of the rover. This is accomplished by an online assessment of possible trajectories using synthetic, future camera views created from previous observations of the scene. The proposed trajectories are quantified and chosen based on the expected localization performance. In this work, we validate the proposed method in field experiments at the Jet Propulsion Laboratory (JPL) Mars Yard. Furthermore, multiple performance metrics are identified and evaluated for reducing the overall runtime of the algorithm. We show how actively steering the perception system increases the localization accuracy compared to traditional fixed-sensor configurations.

Flower Interaction Subsystem for a Precision Pollination Robot

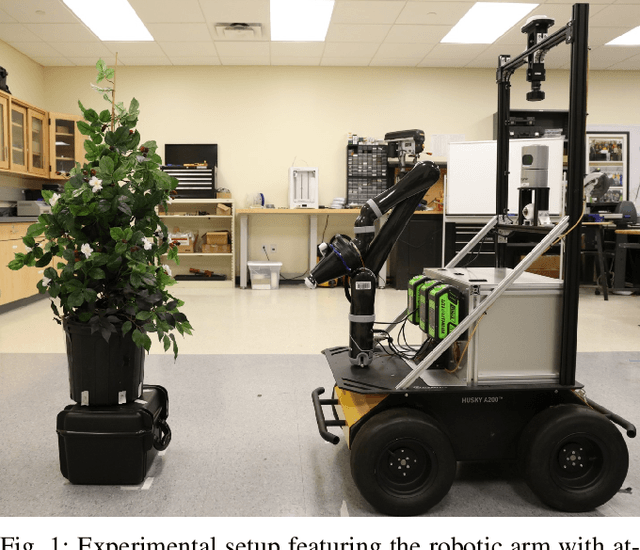

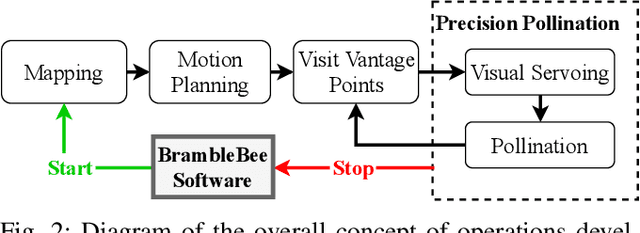

Jun 21, 2019

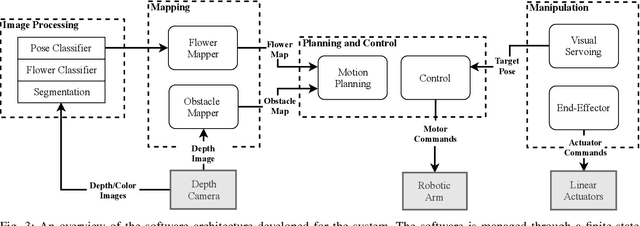

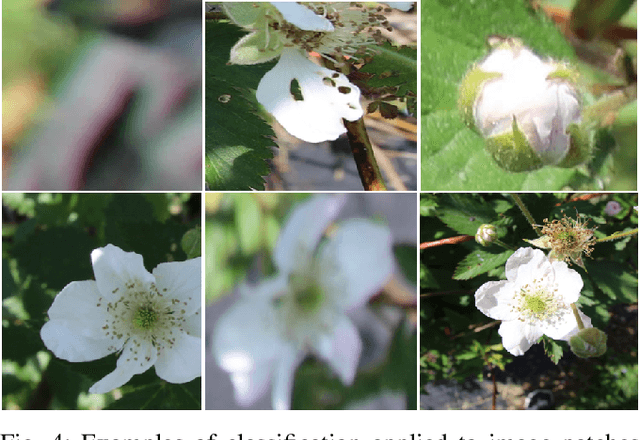

Abstract:Robotic pollinators can not only aid farmers by providing more cost-effective and stable methods for pollinating plants but also benefit crop production in environments not suitable for bees, such as greenhouses, growth chambers, and outer space. Robotic pollination requires a high degree of precision and autonomy, but few systems have addressed both of these aspects in practice. In this paper, a fully autonomous robot is presented, capable of precise pollination of individual small flowers. Experimental results show that the proposed system is able to achieve a 93.1% detection accuracy and a 76.9% 'pollination' success rate tested with high-fidelity artificial flowers.

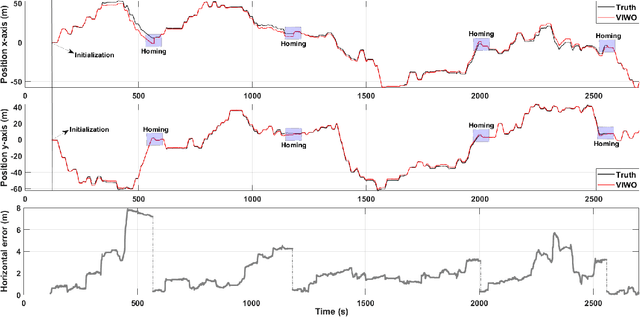

Improved Planetary Rover Inertial Navigation and Wheel Odometry Performance through Periodic Use of Zero-Type Constraints

Jun 20, 2019

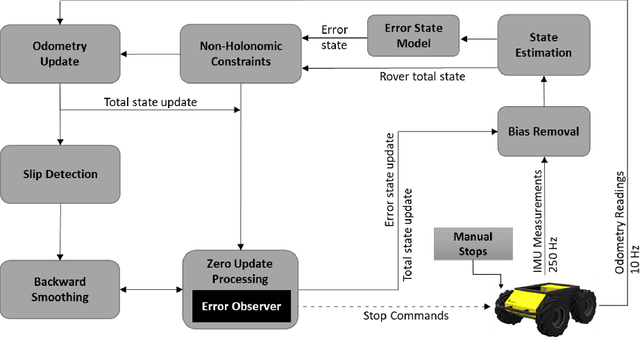

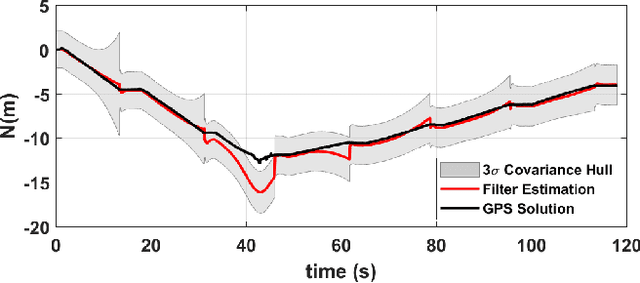

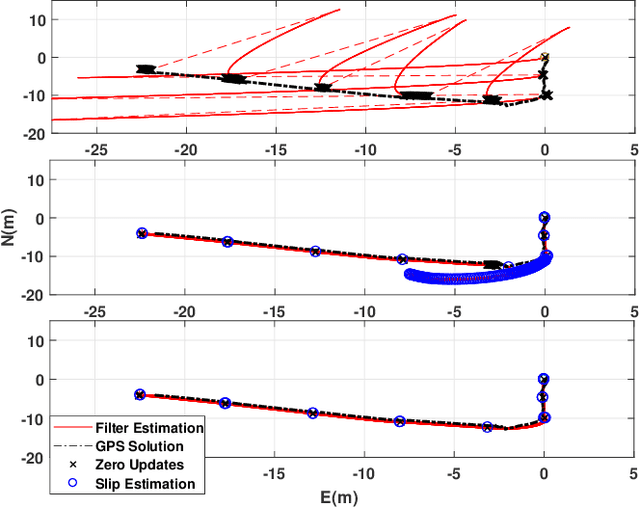

Abstract:We present an approach to enhance wheeled planetary rover dead-reckoning localization performance by leveraging the use of zero-type constraint equations in the navigation filter. Without external aiding, inertial navigation solutions inherently exhibit cubic error growth. Furthermore, for planetary rovers that are traversing diverse types of terrain, wheel odometry is often unreliable for use in localization, due to wheel slippage. For current Mars rovers, computer vision-based approaches are generally used whenever there is a high possibility of positioning error; however, these strategies require additional computational power and energy resources, and significantly slow down the rover traverse speed. To this end, we propose a navigation approach that compensates for the high likelihood of odometry errors by providing a reliable navigation solution that leverages non-holonomic vehicle constraints as well as state-aware pseudo-measurement updates (e.g., zero velocity and zero angular rate) during periodic stops. With this approach, computationally expensive visual-based corrections could be performed less often. Experimental tests that compare against GPS-based localization are used to demonstrate the accuracy of the proposed approach. The source code, post-processing scripts, and example datasets associated with the paper are published in a public repository.
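A zero-velocity pseudo-measurement of the kind mentioned in this abstract can be sketched as a standard Kalman update. This is a minimal illustration, not the paper's filter: it assumes a two-element state `[position, velocity]`, and the function name `zero_velocity_update` and the noise value `r` are hypothetical. When the rover is known to be stopped, the filter is fed a "measurement" that the velocity is zero.

```python
def zero_velocity_update(x, P, r=1e-4):
    """Kalman update for a [position, velocity] state with pseudo-measurement v = 0.

    x is a list of two floats, P is a 2x2 covariance as nested lists,
    and r is the (assumed) variance of the pseudo-measurement.
    """
    # Measurement model H = [0, 1]; the measured value is z = 0.
    innovation = 0.0 - x[1]
    s = P[1][1] + r                      # innovation variance H P H^T + R
    k = [P[0][1] / s, P[1][1] / s]       # Kalman gain P H^T / S
    x_new = [x[0] + k[0] * innovation, x[1] + k[1] * innovation]
    # Covariance update P_new = (I - K H) P, written out for the 2x2 case.
    P_new = [
        [P[0][0] - k[0] * P[1][0], P[0][1] - k[0] * P[1][1]],
        [P[1][0] - k[1] * P[1][0], P[1][1] - k[1] * P[1][1]],
    ]
    return x_new, P_new

# Example: a drifting velocity estimate is pulled toward zero during a stop.
x, P = zero_velocity_update([10.0, 0.3], [[4.0, 0.5], [0.5, 0.25]])
```

Because position and velocity errors are correlated through the off-diagonal terms of `P`, the update also reduces the position uncertainty, which is why periodic stops can help a dead-reckoning solution.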
