Scott Harper

NeBula: Quest for Robotic Autonomy in Challenging Environments; TEAM CoSTAR at the DARPA Subterranean Challenge

Mar 28, 2021

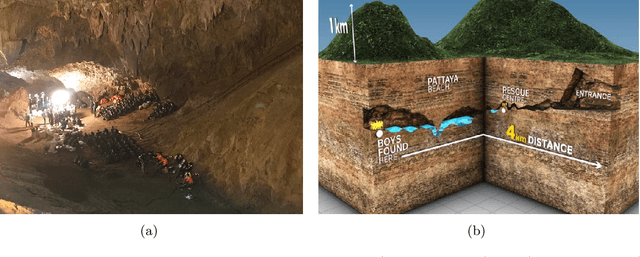

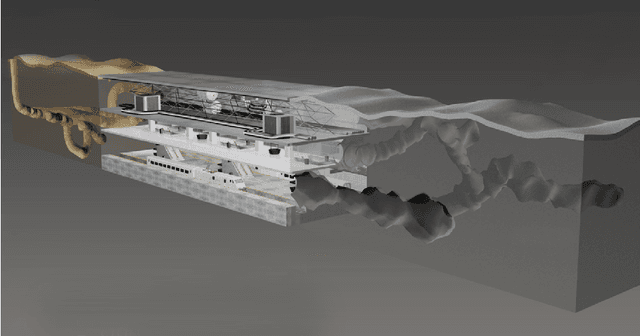

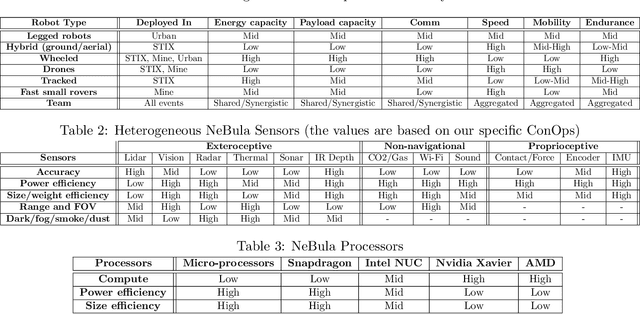

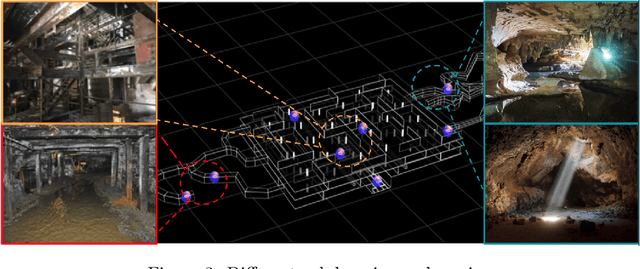

Abstract: This paper presents and discusses the algorithms, hardware, and software architecture developed by TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), competing in the DARPA Subterranean Challenge. Specifically, it presents the techniques utilized within the Tunnel (2019) and Urban (2020) competitions, where CoSTAR achieved 2nd and 1st place, respectively. We also discuss CoSTAR's demonstrations in Martian-analog surface and subsurface (lava tubes) exploration. The paper introduces our autonomy solution, referred to as NeBula (Networked Belief-aware Perceptual Autonomy). NeBula is an uncertainty-aware framework that aims at enabling resilient and modular autonomy solutions by performing reasoning and decision making in the belief space (the space of probability distributions over the robot and world states). We discuss various components of the NeBula framework, including: (i) geometric and semantic environment mapping; (ii) a multi-modal positioning system; (iii) traversability analysis and local planning; (iv) global motion planning and exploration behavior; (v) risk-aware mission planning; (vi) networking and decentralized reasoning; and (vii) learning-enabled adaptation. We discuss the performance of NeBula on several robot types (e.g., wheeled, legged, flying) in various environments. We also discuss the specific results and lessons learned from fielding this solution in the challenging courses of the DARPA Subterranean Challenge competition.

Improved Planetary Rover Inertial Navigation and Wheel Odometry Performance through Periodic Use of Zero-Type Constraints

Jun 20, 2019

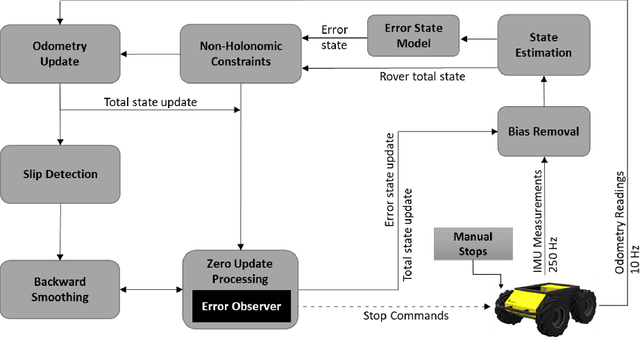

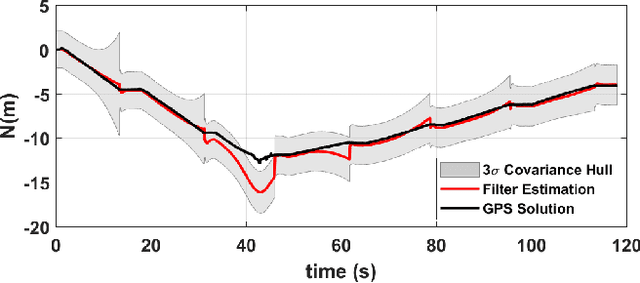

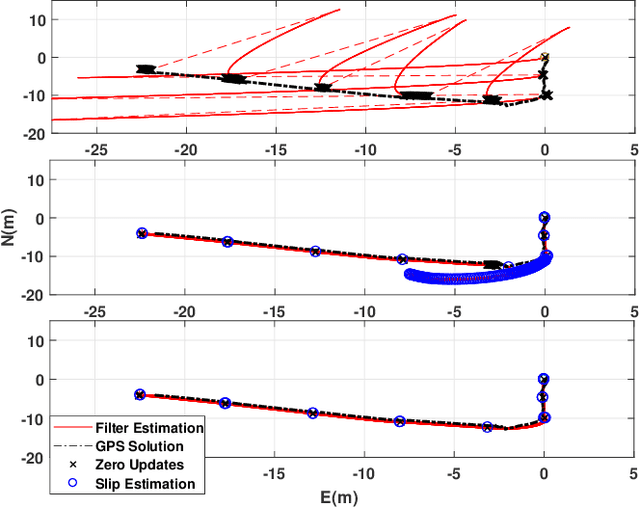

Abstract: We present an approach to enhance wheeled planetary rover dead-reckoning localization performance by leveraging zero-type constraint equations in the navigation filter. Without external aiding, inertial navigation solutions inherently exhibit cubic error growth. Furthermore, for planetary rovers that traverse diverse types of terrain, wheel odometry is often unreliable for localization due to wheel slippage. For current Mars rovers, computer vision-based approaches are generally used whenever there is a high possibility of positioning error; however, these strategies require additional computational power and energy resources, and they significantly slow down the rover traverse speed. To this end, we propose a navigation approach that compensates for the high likelihood of odometry errors by providing a reliable navigation solution that leverages non-holonomic vehicle constraints as well as state-aware pseudo-measurement updates (e.g., zero velocity and zero angular rate) during periodic stops. With this approach, computationally expensive vision-based corrections can be performed less often. Experimental tests that compare against GPS-based localization are used to demonstrate the accuracy of the proposed approach. The source code, post-processing scripts, and example datasets associated with the paper are published in a public repository.
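To illustrate the idea of a zero-velocity pseudo-measurement, the following is a minimal sketch, not the filter used in the paper. It assumes a toy one-dimensional state of position and velocity, a stationary rover whose accelerometer has a constant bias, and a standard linear Kalman update. All function names, noise values, and the bias value are hypothetical and chosen only for illustration.

```python
import numpy as np

def predict(x, P, accel, dt, q):
    """Propagate a [position, velocity] state using a measured acceleration."""
    F = np.array([[1.0, dt], [0.0, 1.0]])
    B = np.array([0.5 * dt**2, dt])
    # Process noise for a white-noise acceleration model (assumed).
    Q = q * np.array([[dt**4 / 4, dt**3 / 2], [dt**3 / 2, dt**2]])
    return F @ x + B * accel, F @ P @ F.T + Q

def zero_velocity_update(x, P, r=1e-4):
    """Kalman update with the pseudo-measurement 'velocity = 0'."""
    H = np.array([[0.0, 1.0]])      # observe velocity only
    S = H @ P @ H.T + r             # innovation covariance, shape (1, 1)
    K = P @ H.T / S                 # Kalman gain, shape (2, 1)
    innovation = 0.0 - (H @ x)      # pseudo-measurement minus prediction
    x_new = x + K @ innovation
    P_new = (np.eye(2) - K @ H) @ P
    return x_new, P_new

def demo():
    """Dead-reckon a stationary rover with a biased accelerometer, then stop."""
    x, P = np.zeros(2), np.zeros((2, 2))
    bias, dt, q = 0.05, 0.1, 0.01   # hypothetical values
    for _ in range(100):            # 10 s of uncorrected propagation
        x, P = predict(x, P, bias, dt, q)
    x_corr, P_corr = zero_velocity_update(x, P)
    return x, P, x_corr, P_corr

if __name__ == "__main__":
    x, P, x_corr, P_corr = demo()
    print("before update:", x, "after update:", x_corr)
```

In this sketch the update pulls the drifted velocity estimate back toward zero, and because the filter tracks the correlation between position and velocity errors, part of the accumulated position drift is corrected as well. A zero-angular-rate update would follow the same pattern with a measurement matrix that selects the angular-rate (or gyro-bias) states of a fuller navigation filter.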

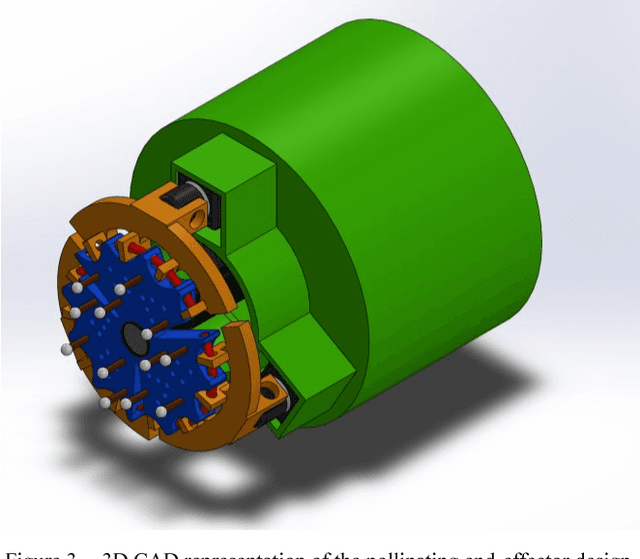

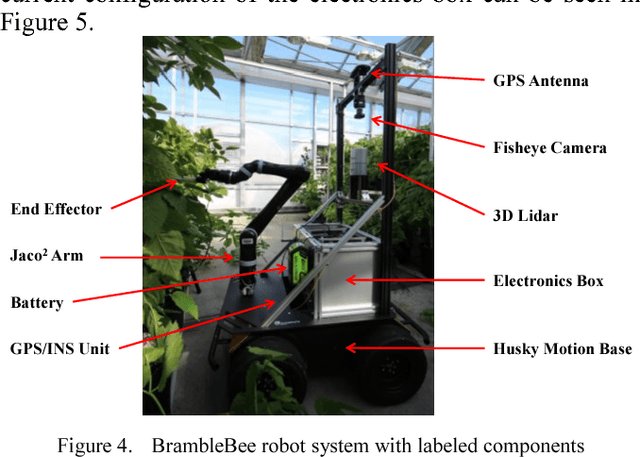

Design of an Autonomous Precision Pollination Robot

Aug 29, 2018

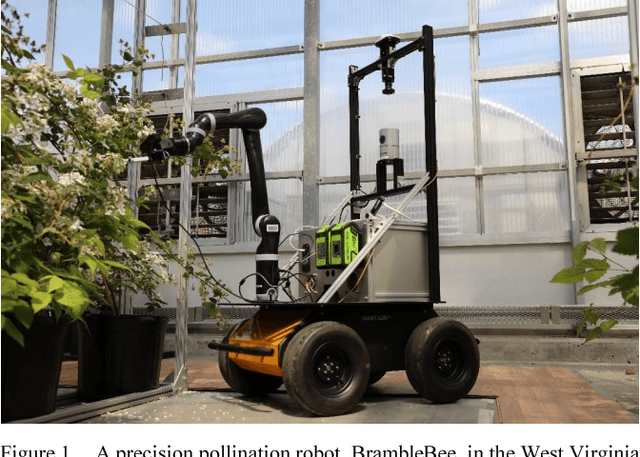

Abstract: Precision robotic pollination systems can not only fill the gap left by declining natural pollinators, but can also surpass them in efficiency and uniformity, helping to feed the fast-growing human population on Earth. This paper presents the design and ongoing development of an autonomous robot named "BrambleBee", which aims at pollinating bramble plants in a greenhouse environment. Partially inspired by the ecology and behavior of bees, BrambleBee employs state-of-the-art localization and mapping, visual perception, path planning, motion control, and manipulation techniques to create an efficient and robust autonomous pollination system.
