Mason B. Peterson

Distribution Estimation for Global Data Association via Approximate Bayesian Inference

Sep 19, 2025

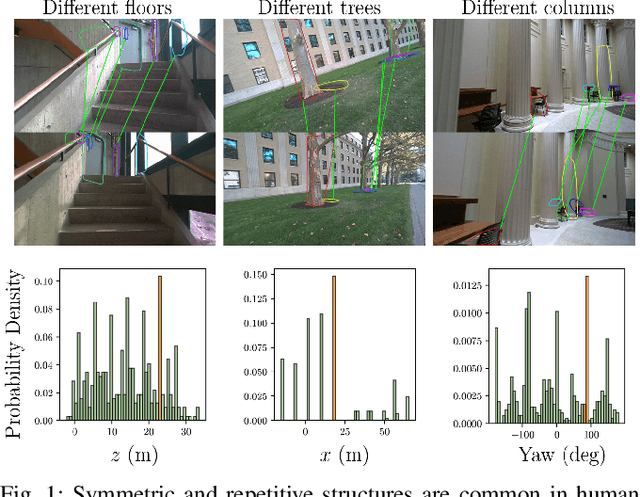

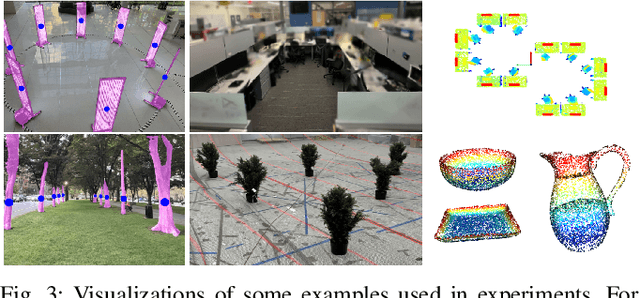

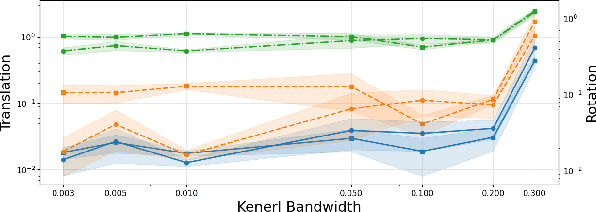

Abstract: Global data association is an essential prerequisite for robot operation in environments seen at different times or by different robots. Repetitive or symmetric data creates significant challenges for existing methods, which typically rely on maximum likelihood estimation or maximum consensus to produce a single set of associations. However, in ambiguous scenarios, the distribution of solutions to global data association problems is often highly multimodal, and such single-solution approaches frequently fail. In this work, we introduce a data association framework that leverages approximate Bayesian inference to capture multiple solution modes to the data association problem, thereby avoiding premature commitment to a single solution under ambiguity. Our approach represents hypothetical solutions as particles that evolve according to a deterministic or randomized update rule to cover the modes of the underlying solution distribution. Furthermore, we show that our method can incorporate optimization constraints imposed by the data association formulation and directly benefit from GPU-parallelized optimization. Extensive simulated and real-world experiments with highly ambiguous data show that our method correctly estimates the distribution over transformations when registering point clouds or object maps.

Language-Grounded Hierarchical Planning and Execution with Multi-Robot 3D Scene Graphs

Jun 09, 2025

Abstract: In this paper, we introduce a multi-robot system that integrates mapping, localization, and task and motion planning (TAMP) enabled by 3D scene graphs to execute complex instructions expressed in natural language. Our system builds a shared 3D scene graph incorporating an open-set object-based map, which is leveraged for multi-robot 3D scene graph fusion. This representation supports real-time, view-invariant relocalization (via the object-based map) and planning (via the 3D scene graph), allowing a team of robots to reason about their surroundings and execute complex tasks. Additionally, we introduce a planning approach that translates operator intent into Planning Domain Definition Language (PDDL) goals using a Large Language Model (LLM) by leveraging context from the shared 3D scene graph and robot capabilities. We provide an experimental assessment of the performance of our system on real-world tasks in large-scale, outdoor environments.

ROMAN: Open-Set Object Map Alignment for Robust View-Invariant Global Localization

Oct 10, 2024

Abstract: Global localization is a fundamental capability required for long-term and drift-free robot navigation. However, current methods fail to relocalize when faced with significantly different viewpoints. We present ROMAN (Robust Object Map Alignment Anywhere), a robust global localization method capable of localizing in challenging and diverse environments based on creating and aligning maps of open-set and view-invariant objects. To address localization difficulties caused by feature-sparse or perceptually aliased environments, ROMAN formulates and solves a registration problem between object submaps using a unified graph-theoretic global data association approach that simultaneously accounts for object shape and semantic similarities and a prior on gravity direction. Through a set of challenging large-scale multi-robot or multi-session SLAM experiments in indoor, urban, and unstructured/forested environments, we demonstrate that ROMAN achieves a maximum recall 36% higher than other object-based map alignment methods and an absolute trajectory error that is 37% lower than using visual features for loop closures. Our project page can be found at https://acl.mit.edu/ROMAN/.

MOTLEE: Collaborative Multi-Object Tracking Using Temporal Consistency for Neighboring Robot Frame Alignment

May 08, 2024

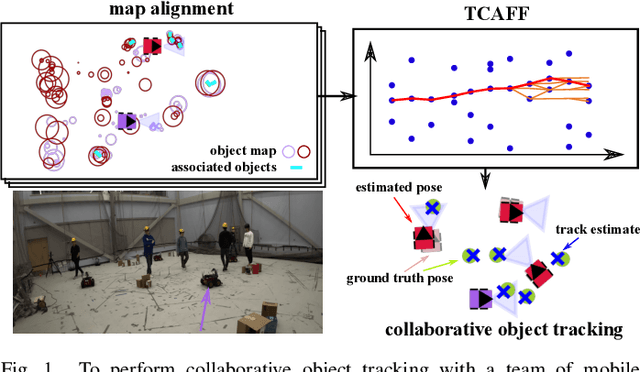

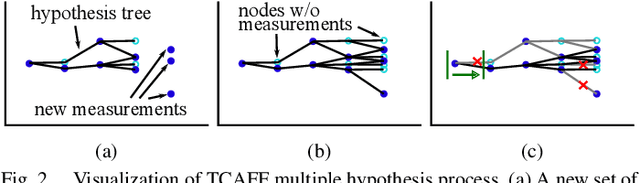

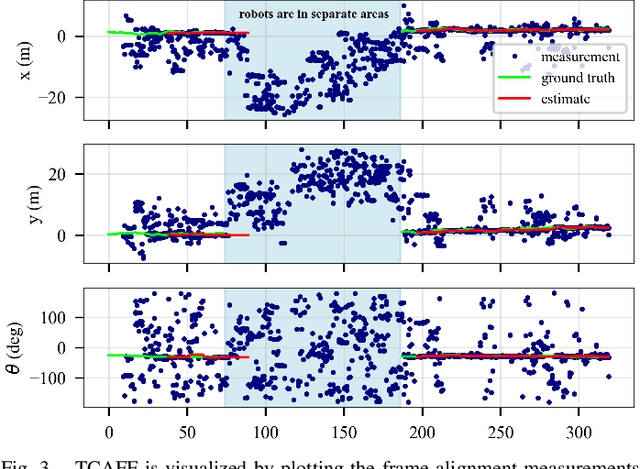

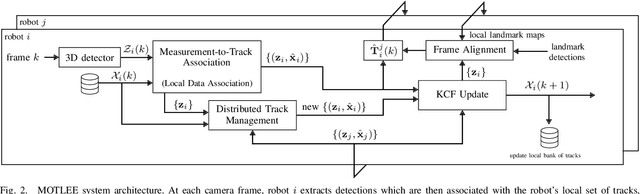

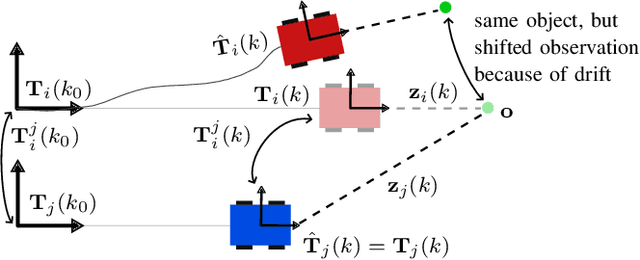

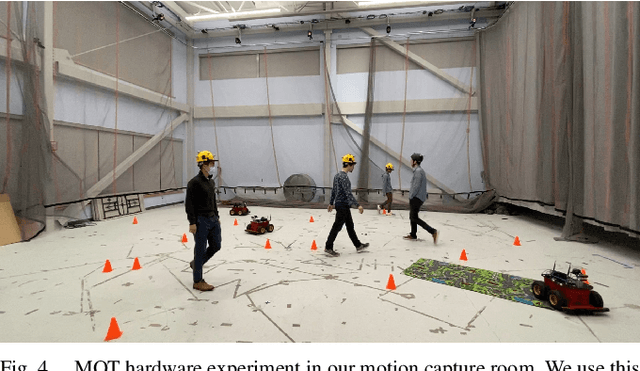

Abstract: Knowing the locations of nearby moving objects is important for a mobile robot to operate safely in a dynamic environment. Dynamic object tracking performance can be improved if robots share observations of tracked objects with nearby team members in real time. To share observations, a robot must make up-to-date estimates of the transformation from its coordinate frame to the frame of each neighbor, which can be challenging because of odometry drift. We present Multiple Object Tracking with Localization Error Elimination (MOTLEE), a complete system for a multi-robot team to accurately estimate frame transformations and collaboratively track dynamic objects. To accomplish this, robots use open-set image-segmentation methods to build object maps of their environment and then use our Temporally Consistent Alignment of Frames Filter (TCAFF) to align maps and estimate coordinate frame transformations without any initial knowledge of neighboring robot poses. We show that our method for aligning frames enables a team of four robots to collaboratively track six pedestrians with accuracy similar to that of a system with ground truth localization in a challenging hardware demonstration. The code and hardware dataset are available at https://github.com/mit-acl/motlee.
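As a rough illustration of what "estimating a coordinate frame transformation" from matched object positions can involve, the sketch below uses the standard SVD-based least-squares alignment (often called the Kabsch or Arun method). This is not TCAFF and is not taken from the MOTLEE code; the function name `estimate_rigid_transform` and the assumption that object correspondences are already known are both hypothetical simplifications.

```python
import numpy as np

def estimate_rigid_transform(src, dst):
    """Least-squares rotation R and translation t such that dst ~ R @ src + t.

    src, dst: (N, d) arrays of matched object positions (d = 2 or 3).
    Assumes correspondences are already known, which the abstract's
    setting does not; this only illustrates the final alignment step.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    D = np.diag([1.0] * (src.shape[1] - 1) + [d])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    return R, t
```

Given noise-free matches, the recovered `R` and `t` reproduce the true transform up to numerical precision; with drifting odometry the estimate would need to be refreshed over time, which is the problem the abstract describes.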

PUMA: Fully Decentralized Uncertainty-aware Multiagent Trajectory Planner with Real-time Image Segmentation-based Frame Alignment

Nov 07, 2023

Abstract: Fully decentralized, multiagent trajectory planners enable complex tasks like search and rescue or package delivery by ensuring safe navigation in unknown environments. However, deconflicting trajectories with other agents and ensuring collision-free paths in a fully decentralized setting is complicated by dynamic elements and localization uncertainty. To this end, this paper presents (1) an uncertainty-aware multiagent trajectory planner and (2) an image segmentation-based frame alignment pipeline. The uncertainty-aware planner propagates uncertainty associated with the future motion of detected obstacles, and by incorporating this propagated uncertainty into optimization constraints, the planner effectively navigates around obstacles. Unlike conventional methods that emphasize explicit obstacle tracking, our approach integrates implicit tracking. Sharing trajectories between agents can cause potential collisions due to frame misalignment. Addressing this, we introduce a novel frame alignment pipeline that rectifies inter-agent frame misalignment. This method leverages a zero-shot image segmentation model for detecting objects in the environment and a data association framework based on geometric consistency for map alignment. Our approach accurately aligns frames with only 0.18 m and 2.7 deg of mean frame alignment error in our most challenging simulation scenario. In addition, we conducted hardware experiments and successfully achieved 0.29 m and 2.59 deg of frame alignment error. Together with the alignment framework, our planner ensures safe navigation in unknown environments and collision avoidance in decentralized settings.
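The idea of data association "based on geometric consistency" can be sketched as follows, under the assumption that consistency means pairwise distances between objects are preserved across the two maps (a rigid transform does not change distances). The function name `consistency_matrix`, the tolerance `eps`, and the toy data are hypothetical and are not drawn from the PUMA implementation.

```python
import numpy as np

def consistency_matrix(points_a, points_b, associations, eps=0.2):
    """Pairwise geometric consistency of candidate associations.

    associations: list of (i, j) pairs proposing that object i in map A
    is object j in map B. Entry (p, q) of the result is True when the
    distance between the two A-objects matches the distance between the
    two B-objects to within eps (assumed tolerance, in map units).
    """
    a = np.asarray(points_a, dtype=float)[[i for i, _ in associations]]
    b = np.asarray(points_b, dtype=float)[[j for _, j in associations]]
    dist_a = np.linalg.norm(a[:, None, :] - a[None, :, :], axis=-1)
    dist_b = np.linalg.norm(b[:, None, :] - b[None, :, :], axis=-1)
    return np.abs(dist_a - dist_b) < eps

# Toy example: map B is map A rotated by 90 degrees and shifted by (5, 5).
map_a = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]
map_b = [(5.0, 5.0), (5.0, 6.0), (3.0, 5.0)]
candidates = [(0, 0), (1, 1), (2, 2), (2, 1)]  # the last pair is wrong
M = consistency_matrix(map_a, map_b, candidates)
```

In this toy case the three correct associations are mutually consistent while the wrong one agrees with none of them, so selecting the largest mutually consistent group would discard it. How that group is selected is a separate design choice not shown here.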

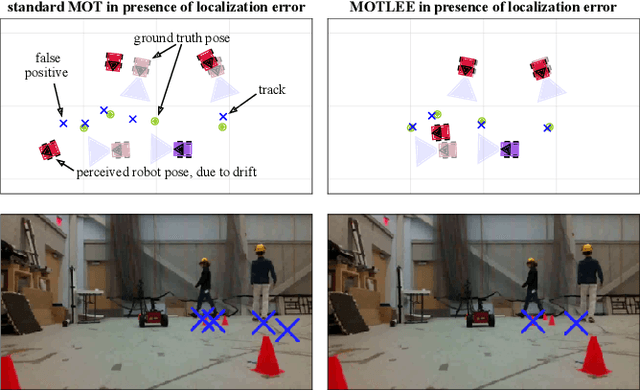

MOTLEE: Distributed Mobile Multi-Object Tracking with Localization Error Elimination

Apr 24, 2023

Abstract: We present MOTLEE, a distributed mobile multi-object tracking algorithm that enables a team of robots to collaboratively track moving objects in the presence of localization error. Existing approaches to distributed tracking assume either a static sensor network or that perfect localization is available. Instead, we develop algorithms based on the Kalman-Consensus filter for distributed tracking that are uncertainty-aware and properly leverage localization uncertainty. Our method maintains an accurate understanding of dynamic objects in an environment by realigning robot frames and incorporating uncertainty of frame misalignment into our object tracking formulation. We evaluate our method in hardware on a team of three mobile ground robots tracking four people. Compared to previous works that do not account for localization error, we show that MOTLEE is resilient to localization uncertainties.
