Christopher Bradley

Language-Grounded Hierarchical Planning and Execution with Multi-Robot 3D Scene Graphs

Jun 09, 2025

Abstract: In this paper, we introduce a multi-robot system that integrates mapping, localization, and task and motion planning (TAMP) enabled by 3D scene graphs to execute complex instructions expressed in natural language. Our system builds a shared 3D scene graph incorporating an open-set object-based map, which is leveraged for multi-robot 3D scene graph fusion. This representation supports real-time, view-invariant relocalization (via the object-based map) and planning (via the 3D scene graph), allowing a team of robots to reason about their surroundings and execute complex tasks. Additionally, we introduce a planning approach that translates operator intent into Planning Domain Definition Language (PDDL) goals using a Large Language Model (LLM) by leveraging context from the shared 3D scene graph and robot capabilities. We provide an experimental assessment of the performance of our system on real-world tasks in large-scale, outdoor environments.

An Intrinsically Explainable Approach to Detecting Vertebral Compression Fractures in CT Scans via Neurosymbolic Modeling

Dec 23, 2024

Abstract: Vertebral compression fractures (VCFs) are a common and potentially serious consequence of osteoporosis. Yet, they often remain undiagnosed. Opportunistic screening, which involves automated analysis of medical imaging data acquired primarily for other purposes, is a cost-effective method to identify undiagnosed VCFs. In high-stakes scenarios like opportunistic medical diagnosis, model interpretability is a key factor for the adoption of AI recommendations. Rule-based methods are inherently explainable and closely align with clinical guidelines, but they are not immediately applicable to high-dimensional data such as CT scans. To address this gap, we introduce a neurosymbolic approach for VCF detection in CT volumes. The proposed model combines deep learning (DL) for vertebral segmentation with a shape-based algorithm (SBA) that analyzes vertebral height distributions in salient anatomical regions. This allows for the definition of a rule set over the height distributions to detect VCFs. Evaluation on the VerSe19 dataset shows that our method achieves an accuracy of 96% and a sensitivity of 91% in VCF detection. In comparison, a black box model, DenseNet, achieved an accuracy of 95% and a sensitivity of 91% on the same dataset. Our results demonstrate that our intrinsically explainable approach can match or surpass the performance of black box deep neural networks while providing additional insights into why a prediction was made. This transparency can enhance clinicians' trust, thus supporting more informed decision-making in VCF diagnosis and treatment planning.
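To make the idea of a rule set over vertebral heights concrete, here is a minimal sketch in Python. It is not the method from the paper: the `VertebraHeights` type, the `height_loss` and `is_fractured` helpers, and the 20% threshold are all hypothetical choices made for illustration, and the heights are assumed to have already been measured from a segmentation.

```python
from dataclasses import dataclass


@dataclass
class VertebraHeights:
    """Hypothetical container: heights (in mm) of one vertebral body."""
    anterior: float
    middle: float
    posterior: float


def height_loss(h: VertebraHeights) -> float:
    """Fractional loss of the shortest height relative to the tallest."""
    heights = (h.anterior, h.middle, h.posterior)
    return 1.0 - min(heights) / max(heights)


def is_fractured(h: VertebraHeights, threshold: float = 0.20) -> bool:
    """Flag a vertebra when its height loss reaches the threshold.

    The default threshold is an illustrative assumption, not a value
    taken from the paper.
    """
    return height_loss(h) >= threshold


if __name__ == "__main__":
    suspect = VertebraHeights(anterior=20.0, middle=19.0, posterior=25.0)
    normal = VertebraHeights(anterior=24.0, middle=24.0, posterior=25.0)
    print(is_fractured(suspect), is_fractured(normal))
```

A rule of this form is explainable by construction: the prediction can be traced back to the measured heights and the threshold that was crossed.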

Task and Motion Planning in Hierarchical 3D Scene Graphs

Mar 12, 2024

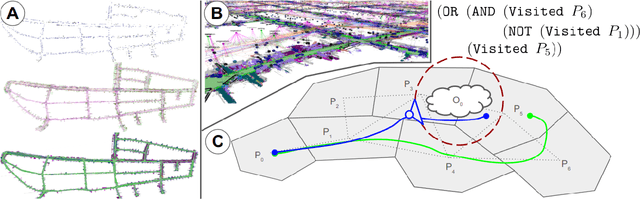

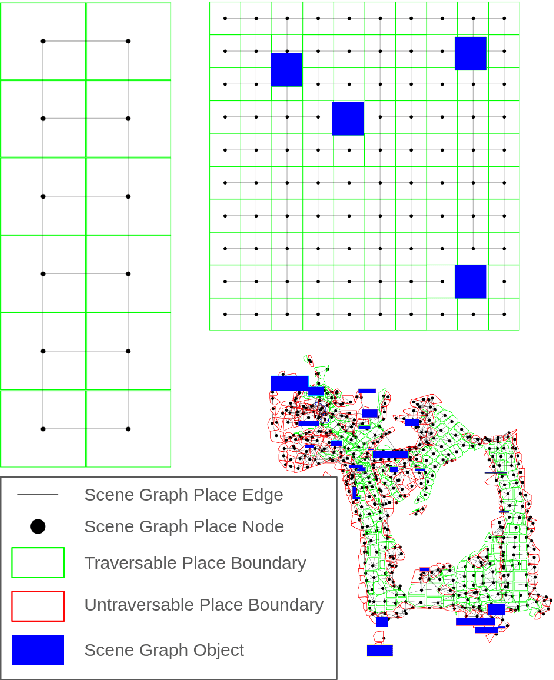

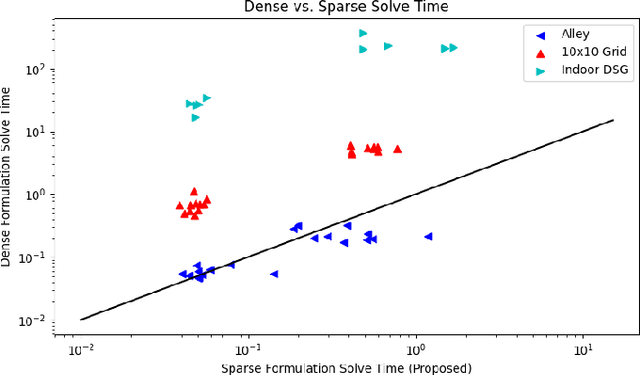

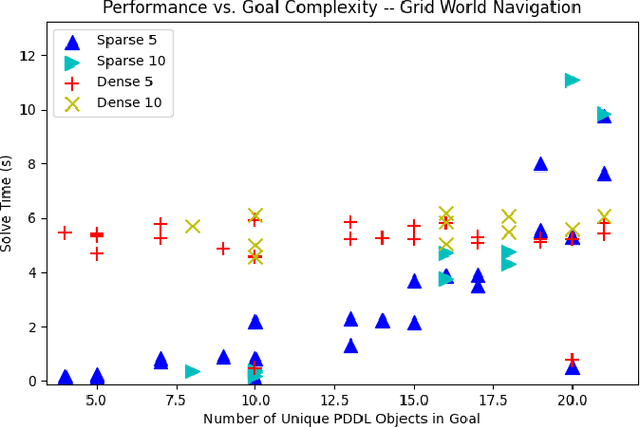

Abstract: Recent work in the construction of 3D scene graphs has enabled mobile robots to build large-scale hybrid metric-semantic hierarchical representations of the world. These detailed models contain information that is useful for planning; however, how to derive a planning domain from a 3D scene graph that enables efficient computation of executable plans remains an open question. In this work, we present a novel approach for defining and solving Task and Motion Planning problems in large-scale environments using hierarchical 3D scene graphs. We identify a method for building sparse problem domains that enable scaling to large scenes, and propose a technique for incrementally adding objects to that domain during planning time to avoid wasting computation on irrelevant elements of the scene graph. We test our approach in two hand-crafted domains as well as two scene graphs built from perception, including one constructed from the KITTI dataset. A video supplement is available at https://youtu.be/63xuCCaN0I4.

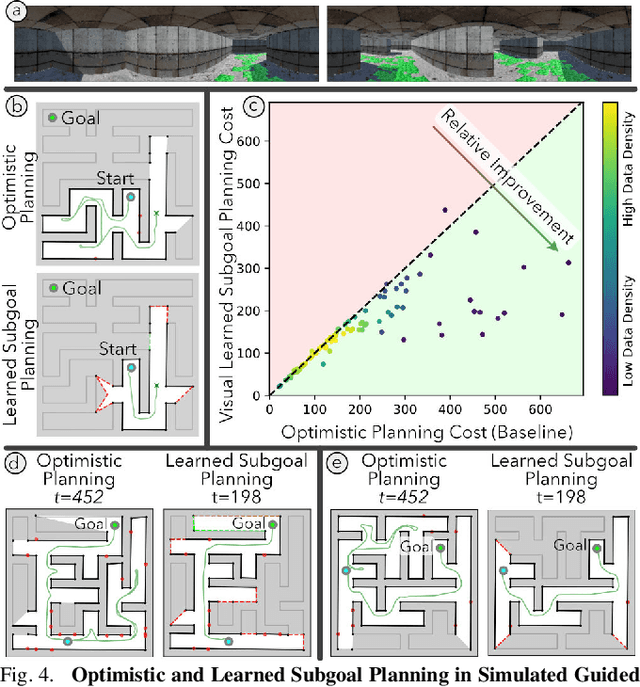

Learning and Planning for Temporally Extended Tasks in Unknown Environments

Apr 28, 2021

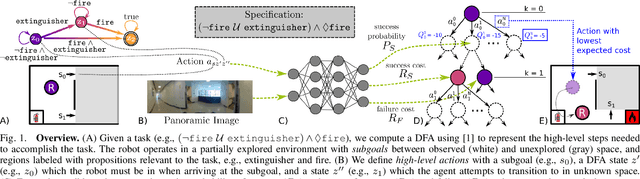

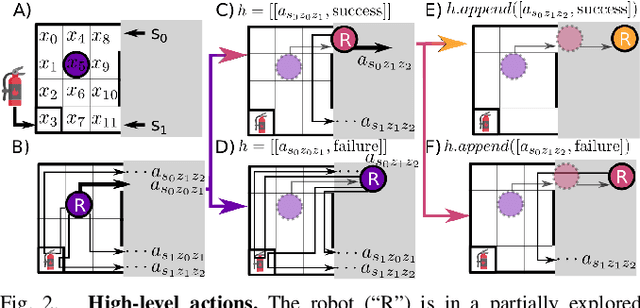

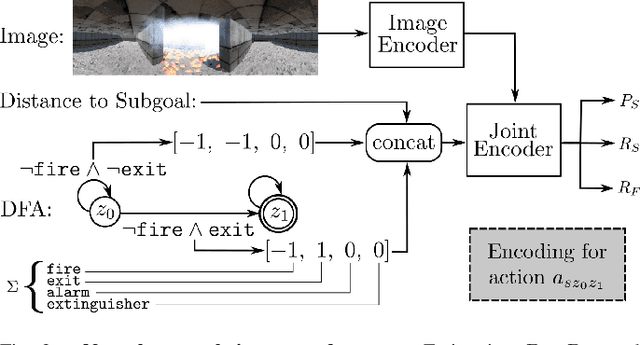

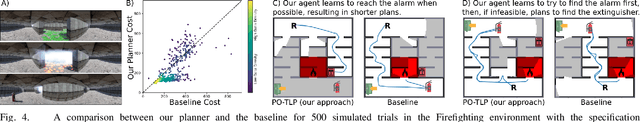

Abstract: We propose a novel planning technique for satisfying tasks specified in temporal logic in partially revealed environments. We define high-level actions derived from the environment and the given task itself, and estimate how each action contributes to progress towards completing the task. As the map is revealed, we estimate the cost and probability of success of each action from images and an encoding of that action using a trained neural network. These estimates guide search for the minimum-expected-cost plan within our model. Our learned model is structured to generalize across environments and task specifications without requiring retraining. We demonstrate an improvement in total cost in both simulated and real-world experiments compared to a heuristic-driven baseline.
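As a rough illustration of how per-action estimates of cost and success probability can guide a choice, the sketch below picks the action with the lowest one-step expected cost. This is a deliberate simplification and not the paper's planner, which searches over plans: the `ActionEstimate` type, its field names, and the numbers are hypothetical, and in the paper the estimates come from a trained neural network rather than being hard-coded.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ActionEstimate:
    """Hypothetical per-action estimates (hand-written here for illustration)."""
    name: str
    p_success: float     # estimated probability that the action succeeds
    cost_success: float  # estimated cost incurred if it succeeds
    cost_failure: float  # estimated cost incurred if it fails


def expected_cost(a: ActionEstimate) -> float:
    """One-step expected cost: weight each outcome by its probability."""
    return a.p_success * a.cost_success + (1.0 - a.p_success) * a.cost_failure


def best_action(actions: Sequence[ActionEstimate]) -> ActionEstimate:
    """Return the action with the minimum expected cost."""
    return min(actions, key=expected_cost)


if __name__ == "__main__":
    candidates = [
        ActionEstimate("explore_left", p_success=0.9, cost_success=10.0, cost_failure=50.0),
        ActionEstimate("explore_right", p_success=0.5, cost_success=4.0, cost_failure=30.0),
    ]
    print(best_action(candidates).name)
```

Here the cheaper-looking action on success is not chosen, because its lower success probability makes its expected cost higher.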

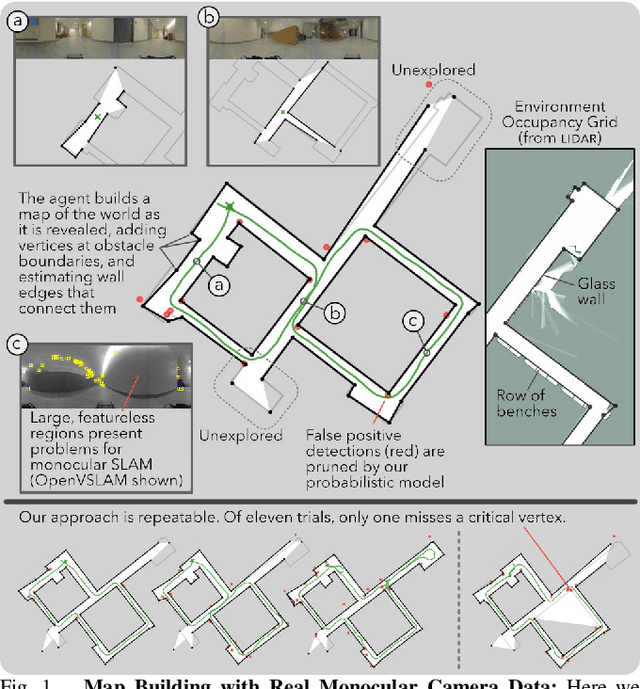

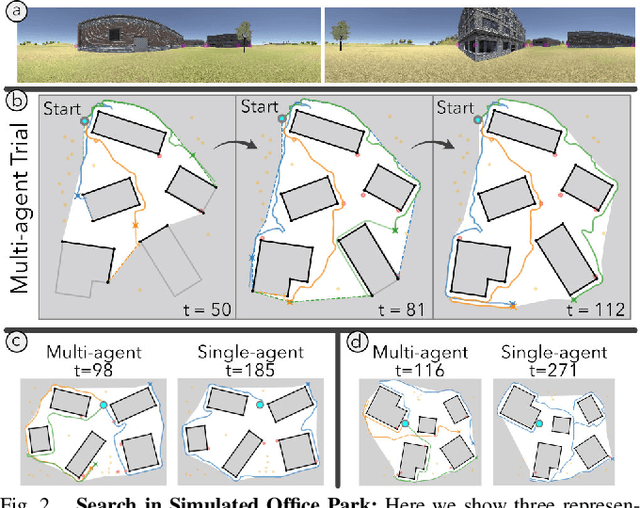

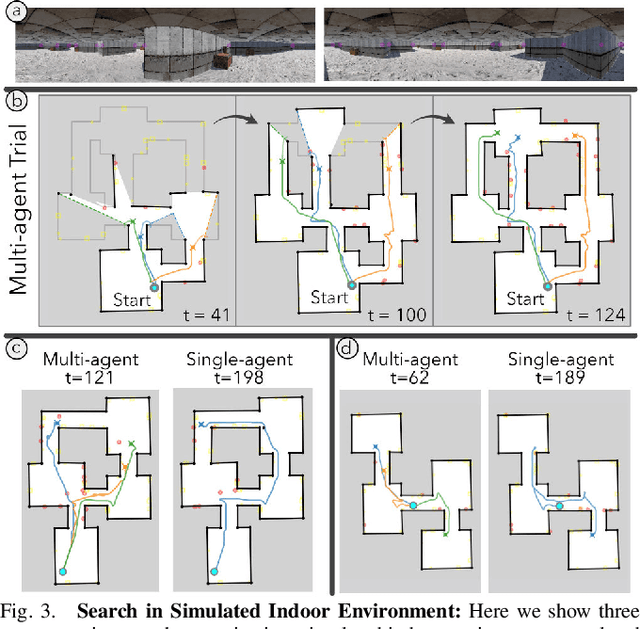

Enabling Topological Planning with Monocular Vision

Mar 31, 2020

Abstract: Topological strategies for navigation meaningfully reduce the space of possible actions available to a robot, allowing use of heuristic priors or learning to enable computationally efficient, intelligent planning. The challenges in estimating structure with monocular SLAM in low texture or highly cluttered environments have precluded its use for topological planning in the past. We propose a robust sparse map representation that can be built with monocular vision and overcomes these shortcomings. Using a learned sensor, we estimate high-level structure of an environment from streaming images by detecting sparse vertices (e.g., boundaries of walls) and reasoning about the structure between them. We also estimate the known free space in our map, a necessary feature for planning through previously unknown environments. We show that our mapping technique can be used on real data and is sufficient for planning and exploration in simulated multi-agent search and learned subgoal planning applications.
