Learning and Planning for Temporally Extended Tasks in Unknown Environments

Paper and Code

Apr 28, 2021

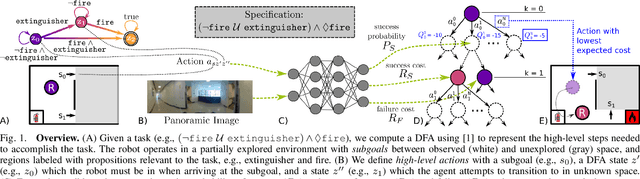

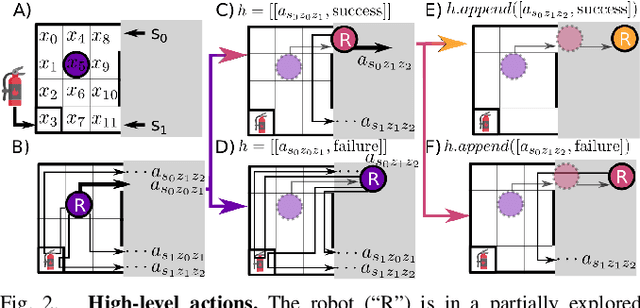

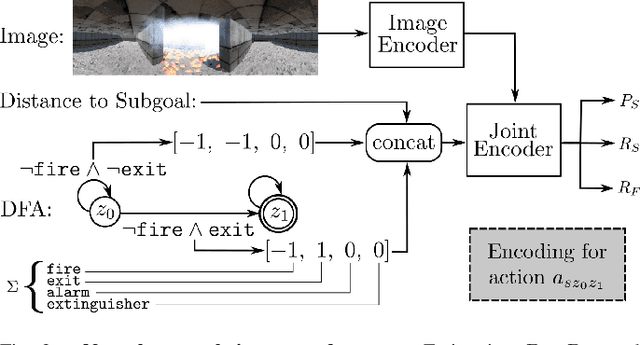

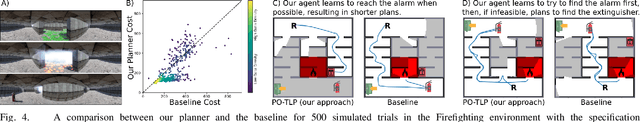

We propose a novel planning technique for satisfying tasks specified in temporal logic in partially revealed environments. We define high-level actions derived from the environment and the given task itself, and estimate how each action contributes to progress towards completing the task. As the map is revealed, we estimate the cost and probability of success of each action from images and an encoding of that action using a trained neural network. These estimates guide search for the minimum-expected-cost plan within our model. Our learned model is structured to generalize across environments and task specifications without requiring retraining. We demonstrate an improvement in total cost in both simulated and real-world experiments compared to a heuristic-driven baseline.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge