Adam Pacheck

Physically-Feasible Repair of Reactive, Linear Temporal Logic-based, High-Level Tasks

Jul 06, 2022

Abstract: A typical approach to creating complex robot behaviors is to compose atomic controllers, or skills, such that the resulting behavior satisfies a high-level task; however, when a task cannot be accomplished with a given set of skills, it is difficult to know how to modify the skills to make the task possible. We present a method for combining symbolic repair with physical feasibility-checking and implementation to automatically modify existing skills such that the robot can execute a previously infeasible task. We encode robot skills in Linear Temporal Logic (LTL) formulas that capture both safety constraints and goals for reactive tasks. Furthermore, our encoding captures the full skill execution, as opposed to prior work in which only the states of the world before and after a skill is executed are considered. Our repair algorithm suggests symbolic modifications, then attempts to physically implement the suggestions by modifying the original skills subject to LTL constraints derived from the symbolic repair. If the suggested skills are not physically possible, we automatically provide additional constraints for the symbolic repair. We demonstrate our approach with a Baxter robot and a Clearpath Jackal.

Automatic Encoding and Repair of Reactive High-Level Tasks with Learned Abstract Representations

Apr 18, 2022

Abstract: We present a framework that, given a set of skills a robot can perform, abstracts sensor data into symbols that we use to automatically encode the robot's capabilities in Linear Temporal Logic. We specify reactive high-level tasks based on these capabilities, for which a strategy is automatically synthesized and executed on the robot, if the task is feasible. If a task is not feasible given the robot's capabilities, we present two methods, one enumeration-based and one synthesis-based, for automatically suggesting additional skills for the robot or modifications to existing skills that would make the task feasible. We demonstrate our framework on a Baxter robot manipulating blocks on a table, a Baxter robot manipulating plates on a table, and a Kinova arm manipulating vials, with multiple sensor modalities, including raw images.

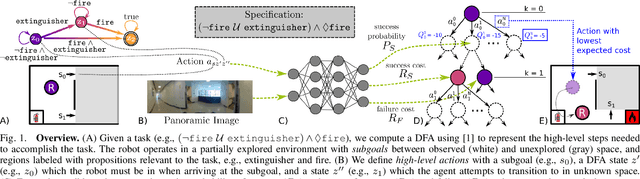

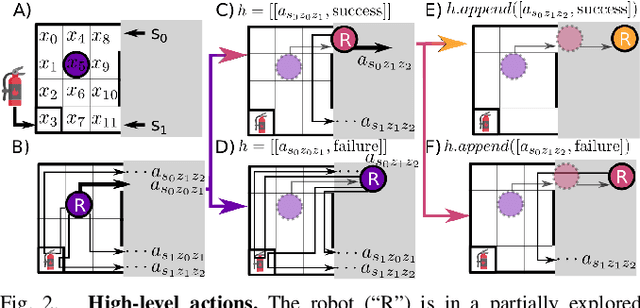

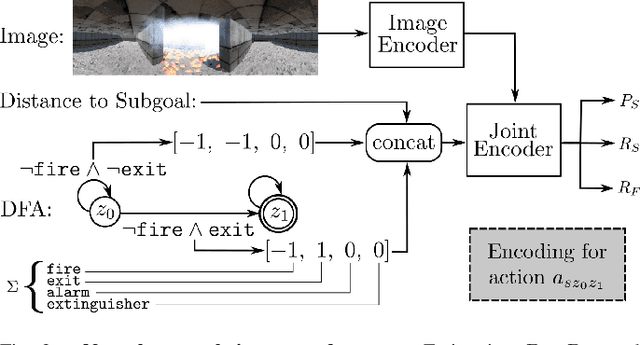

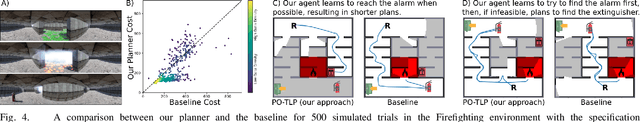

Learning and Planning for Temporally Extended Tasks in Unknown Environments

Apr 28, 2021

Abstract: We propose a novel planning technique for satisfying tasks specified in temporal logic in partially revealed environments. We define high-level actions derived from the environment and the given task itself, and estimate how each action contributes to progress towards completing the task. As the map is revealed, we estimate the cost and probability of success of each action from images and an encoding of that action using a trained neural network. These estimates guide the search for the minimum-expected-cost plan within our model. Our learned model is structured to generalize across environments and task specifications without requiring retraining. We demonstrate an improvement in total cost in both simulated and real-world experiments compared to a heuristic-driven baseline.