Benjamin D. Killeen

Investigating Robot Control Policy Learning for Autonomous X-ray-guided Spine Procedures

Nov 05, 2025

Abstract: Imitation learning-based robot control policies are enjoying renewed interest in video-based robotics. However, it remains unclear whether this approach applies to X-ray-guided procedures, such as spine instrumentation, because interpretation of multi-view X-rays is complex. We examine opportunities and challenges for imitation policy learning in bi-plane-guided cannula insertion. We develop an in silico sandbox for scalable, automated simulation of X-ray-guided spine procedures with a high degree of realism. We curate a dataset of correct trajectories and corresponding bi-planar X-ray sequences that emulate the stepwise alignment of providers. We then train imitation learning policies for planning and open-loop control that iteratively align a cannula solely based on visual information. This precisely controlled setup offers insights into limitations and capabilities of this method. Our policy succeeded on the first attempt in 68.5% of cases, maintaining safe intra-pedicular trajectories across diverse vertebral levels. The policy generalized to complex anatomy, including fractures, and remained robust to varied initializations. Rollouts on real bi-planar X-rays further suggest that the model can produce plausible trajectories, despite training exclusively in simulation. While these preliminary results are promising, we also identify limitations, especially in entry point precision. Full closed-loop control will require additional considerations around how to provide sufficiently frequent feedback. With more robust priors and domain knowledge, such models may provide a foundation for future efforts toward lightweight and CT-free robotic intra-operative spinal navigation.

Did you just see that? Arbitrary view synthesis for egocentric replay of operating room workflows from ambient sensors

Oct 06, 2025

Abstract: Observing surgical practice has historically relied on fixed vantage points or recollections, leaving the egocentric visual perspectives that guide clinical decisions undocumented. Fixed-camera video can capture surgical workflows at room scale, but cannot reconstruct what each team member actually saw. Thus, these videos provide only limited insights into how decisions that affect surgical safety, training, and workflow optimization are made. Here we introduce EgoSurg, the first framework to reconstruct dynamic, egocentric replays for any operating room (OR) staff member directly from wall-mounted fixed-camera video, and thus without intervention in the clinical workflow. EgoSurg couples geometry-driven neural rendering with diffusion-based view enhancement, enabling high-visual-fidelity synthesis of arbitrary and egocentric viewpoints at any moment. In evaluation across multi-site surgical cases and controlled studies, EgoSurg reconstructs person-specific visual fields and arbitrary viewpoints with high visual quality and fidelity. By transforming existing OR camera infrastructure into a navigable dynamic 3D record, EgoSurg establishes a new foundation for immersive surgical data science, enabling surgical practice to be visualized, experienced, and analyzed from every angle.

Benchmark of Segmentation Techniques for Pelvic Fracture in CT and X-ray: Summary of the PENGWIN 2024 Challenge

Apr 03, 2025

Abstract: The segmentation of pelvic fracture fragments in CT and X-ray images is crucial for trauma diagnosis, surgical planning, and intraoperative guidance. However, accurately and efficiently delineating the bone fragments remains a significant challenge due to complex anatomy and imaging limitations. The PENGWIN challenge, organized as a MICCAI 2024 satellite event, aimed to advance automated fracture segmentation by benchmarking state-of-the-art algorithms on these complex tasks. A diverse dataset of 150 CT scans was collected from multiple clinical centers, and a large set of simulated X-ray images was generated using the DeepDRR method. Final submissions from 16 teams worldwide were evaluated under a rigorous multi-metric testing scheme. The top-performing CT algorithm achieved an average fragment-wise intersection over union (IoU) of 0.930, demonstrating satisfactory accuracy. However, in the X-ray task, the best algorithm attained an IoU of 0.774, highlighting the greater challenges posed by overlapping anatomical structures. Beyond the quantitative evaluation, the challenge revealed methodological diversity in algorithm design. Variations in instance representation, such as primary-secondary classification versus boundary-core separation, led to differing segmentation strategies. Despite promising results, the challenge also exposed inherent uncertainties in fragment definition, particularly in cases of incomplete fractures. These findings suggest that interactive segmentation approaches, integrating human decision-making with task-relevant information, may be essential for improving model reliability and clinical applicability.
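For readers unfamiliar with the metric, the following is a minimal sketch of a fragment-wise IoU computed on integer label maps. It is an illustration under stated assumptions, not the challenge's evaluation code: the function names, the nested-list label-map representation, and the assumption that predicted and reference fragments already share the same integer labels are all hypothetical.

```python
from typing import List

# Assumed representation: a 2D grid of integer fragment labels, 0 = background.
LabelMap = List[List[int]]


def fragment_iou(pred: LabelMap, truth: LabelMap, label: int) -> float:
    """IoU of a single fragment label between two label maps of equal shape."""
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            in_pred = p == label
            in_truth = t == label
            if in_pred and in_truth:
                intersection += 1
            if in_pred or in_truth:
                union += 1
    # Convention chosen here (an assumption): a label absent from both maps scores 1.0.
    return intersection / union if union else 1.0


def mean_fragment_iou(pred: LabelMap, truth: LabelMap) -> float:
    """Average IoU over every non-background fragment present in the reference."""
    labels = sorted({t for row in truth for t in row if t != 0})
    if not labels:
        return 1.0
    return sum(fragment_iou(pred, truth, lab) for lab in labels) / len(labels)


# Toy example with two fragments (labels 1 and 2).
truth = [[1, 1, 0],
         [2, 2, 0]]
pred = [[1, 0, 0],
        [2, 2, 2]]
print(fragment_iou(pred, truth, 1))   # fragment 1: 1 shared pixel out of 2
print(mean_fragment_iou(pred, truth))
```

Averaging per fragment rather than per pixel keeps small fragments from being swamped by large ones, which is one reason a fragment-wise score can differ noticeably from a whole-image IoU.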

An Intrinsically Explainable Approach to Detecting Vertebral Compression Fractures in CT Scans via Neurosymbolic Modeling

Dec 23, 2024

Abstract: Vertebral compression fractures (VCFs) are a common and potentially serious consequence of osteoporosis. Yet, they often remain undiagnosed. Opportunistic screening, which involves automated analysis of medical imaging data acquired primarily for other purposes, is a cost-effective method to identify undiagnosed VCFs. In high-stakes scenarios like opportunistic medical diagnosis, model interpretability is a key factor for the adoption of AI recommendations. Rule-based methods are inherently explainable and closely align with clinical guidelines, but they are not immediately applicable to high-dimensional data such as CT scans. To address this gap, we introduce a neurosymbolic approach for VCF detection in CT volumes. The proposed model combines deep learning (DL) for vertebral segmentation with a shape-based algorithm (SBA) that analyzes vertebral height distributions in salient anatomical regions. This allows for the definition of a rule set over the height distributions to detect VCFs. Evaluation on the VerSe19 dataset shows that our method achieves an accuracy of 96% and a sensitivity of 91% in VCF detection. In comparison, a black box model, DenseNet, achieved an accuracy of 95% and a sensitivity of 91% on the same dataset. Our results demonstrate that our intrinsically explainable approach can match or surpass the performance of black box deep neural networks while providing additional insights into why a prediction was made. This transparency can enhance clinicians' trust, thus supporting more informed decision-making in VCF diagnosis and treatment planning.

Intelligent Control of Robotic X-ray Devices using a Language-promptable Digital Twin

Dec 11, 2024

Abstract: Natural language offers a convenient, flexible interface for controlling robotic C-arm X-ray systems, making advanced functionality and controls accessible. However, enabling language interfaces requires specialized AI models that interpret X-ray images to create a semantic representation for reasoning. The fixed outputs of such AI models limit the functionality of language controls. Incorporating flexible, language-aligned AI models prompted through language enables more versatile interfaces for diverse tasks and procedures. Using a language-aligned foundation model for X-ray image segmentation, our system continually updates a patient digital twin based on sparse reconstructions of desired anatomical structures. This supports autonomous capabilities such as visualization, patient-specific viewfinding, and automatic collimation from novel viewpoints, enabling commands such as 'Focus in on the lower lumbar vertebrae.' In a cadaver study, users visualized, localized, and collimated structures across the torso using verbal commands, achieving 84% end-to-end success. Post hoc analysis of randomly oriented images showed our patient digital twin could localize 35 commonly requested structures to within 51.68 mm, enabling localization and isolation from arbitrary orientations. Our results demonstrate how intelligent robotic X-ray systems can incorporate physicians' expressed intent directly. While existing foundation models for intra-operative X-ray analysis exhibit failure modes, as they improve, they can facilitate highly flexible, intelligent robotic C-arms.

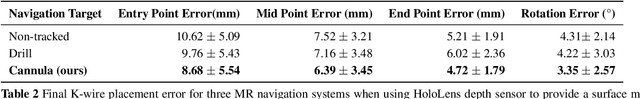

StraightTrack: Towards Mixed Reality Navigation System for Percutaneous K-wire Insertion

Oct 02, 2024

Abstract: In percutaneous pelvic trauma surgery, accurate placement of Kirschner wires (K-wires) is crucial to ensure effective fracture fixation and avoid complications due to breaching the cortical bone along an unsuitable trajectory. Surgical navigation via mixed reality (MR) can help achieve precise wire placement in a low-profile form factor. Current approaches in this domain are as yet unsuitable for real-world deployment because they fall short of guaranteeing accurate visual feedback due to uncontrolled bending of the wire. To ensure accurate feedback, we introduce StraightTrack, an MR navigation system designed for percutaneous wire placement in complex anatomy. StraightTrack features a marker body equipped with a rigid access cannula that mitigates wire bending due to interactions with soft tissue and a covered bony surface. Integrated with an Optical See-Through Head-Mounted Display (OST HMD) capable of tracking the cannula body, StraightTrack offers real-time 3D visualization and guidance without external trackers, which are prone to losing line-of-sight. In phantom experiments with two experienced orthopedic surgeons, StraightTrack improves wire placement accuracy, achieving the ideal trajectory within $5.26 \pm 2.29$ mm and $2.88 \pm 1.49$ degrees, compared to over 12.08 mm and 4.07 degrees for comparable methods. As MR navigation systems continue to mature, StraightTrack realizes their potential for internal fracture fixation and other percutaneous orthopedic procedures.

FluoroSAM: A Language-aligned Foundation Model for X-ray Image Segmentation

Mar 12, 2024

Abstract: Automated X-ray image segmentation would accelerate research and development in diagnostic and interventional precision medicine. Prior efforts have contributed task-specific models capable of solving specific image analysis problems, but the utility of these models is restricted to their particular task domain, and expanding to broader use requires additional data, labels, and retraining efforts. Recently, foundation models (FMs) -- machine learning models trained on large amounts of highly variable data, thus enabling broad applicability -- have emerged as promising tools for automated image analysis. Existing FMs for medical image analysis focus on scenarios and modalities where objects are clearly defined by visually apparent boundaries, such as surgical tool segmentation in endoscopy. X-ray imaging, by contrast, does not generally offer such clearly delineated boundaries or structure priors. During X-ray image formation, complex 3D structures are projected in transmission onto the imaging plane, resulting in overlapping features of varying opacity and shape. To pave the way toward an FM for comprehensive and automated analysis of arbitrary medical X-ray images, we develop FluoroSAM, a language-aligned variant of the Segment-Anything Model, trained from scratch on 1.6M synthetic X-ray images. FluoroSAM is trained on data including masks for 128 organ types and 464 non-anatomical objects, such as tools and implants. In real X-ray images of cadaveric specimens, FluoroSAM is able to segment bony anatomical structures based on text-only prompting with 0.51 DICE, and with 0.79 DICE when point-based refinement is added, outperforming competing SAM variants for all structures. FluoroSAM is also capable of zero-shot generalization to segmenting classes beyond the training set thanks to its language alignment, which we demonstrate for full lung segmentation on real chest X-rays.

Pelphix: Surgical Phase Recognition from X-ray Images in Percutaneous Pelvic Fixation

Apr 18, 2023

Abstract: Surgical phase recognition (SPR) is a crucial element in the digital transformation of the modern operating theater. While SPR based on video sources is well-established, incorporation of interventional X-ray sequences has not yet been explored. This paper presents Pelphix, a first approach to SPR for X-ray-guided percutaneous pelvic fracture fixation, which models the procedure at four levels of granularity -- corridor, activity, view, and frame value -- simulating the pelvic fracture fixation workflow as a Markov process to provide fully annotated training data. Using added supervision from detection of bony corridors, tools, and anatomy, we learn image representations that are fed into a transformer model to regress surgical phases at the four granularity levels. Our approach demonstrates the feasibility of X-ray-based SPR, achieving an average accuracy of 93.8% on simulated sequences and 67.57% in cadaver across all granularity levels, with up to 88% accuracy for the target corridor in real data. This work constitutes the first step toward SPR for the X-ray domain, establishing an approach to categorizing phases in X-ray-guided surgery, simulating realistic image sequences to enable machine learning model development, and demonstrating that this approach is feasible for the analysis of real procedures. As X-ray-based SPR continues to mature, it will benefit procedures in orthopedic surgery, angiography, and interventional radiology by equipping intelligent surgical systems with situational awareness in the operating room.
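To make the idea of simulating a workflow as a Markov process concrete, here is a small sketch that samples a labeled activity sequence from a transition table. Everything in it is a hypothetical illustration: the state names, the transition probabilities, and the function `sample_sequence` are assumptions chosen for readability and are not taken from Pelphix.

```python
import random
from typing import Dict, List

# Hypothetical activity states and transition probabilities (illustration only).
TRANSITIONS: Dict[str, Dict[str, float]] = {
    "position_wire": {"position_wire": 0.3, "insert_wire": 0.7},
    "insert_wire": {"position_wire": 0.4, "insert_screw": 0.6},
    "insert_screw": {"done": 1.0},
}


def sample_sequence(start: str = "position_wire",
                    terminal: str = "done",
                    seed: int = 0,
                    max_steps: int = 1000) -> List[str]:
    """Sample one sequence of states; each next state depends only on the current one."""
    rng = random.Random(seed)  # seeded so a given sequence can be regenerated
    state = start
    sequence = [state]
    while state != terminal and len(sequence) < max_steps:
        options = TRANSITIONS[state]
        state = rng.choices(list(options), weights=list(options.values()))[0]
        sequence.append(state)
    return sequence


# Every sampled step carries its own label, so annotations come for free.
print(sample_sequence(seed=0))
```

Because the simulator knows which state produced each step, every generated frame can be annotated automatically, which is the property that makes this kind of simulation attractive for producing training data.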

SyntheX: Scaling Up Learning-based X-ray Image Analysis Through In Silico Experiments

Jun 13, 2022

Abstract: Artificial intelligence (AI) now enables automated interpretation of medical images for clinical use. However, AI's potential use for interventional images (versus those involved in triage or diagnosis), such as for guidance during surgery, remains largely untapped. This is because surgical AI systems are currently trained using post hoc analysis of data collected during live surgeries, which has fundamental and practical limitations, including ethical considerations, expense, scalability, data integrity, and a lack of ground truth. Here, we demonstrate that creating realistic simulated images from human models is a viable alternative and complement to large-scale in situ data collection. We show that training AI image analysis models on realistically synthesized data, combined with contemporary domain generalization or adaptation techniques, results in models that perform comparably on real data to models trained on a precisely matched real data training set. Because synthetic generation of training data from human-based models scales easily, we find that our model transfer paradigm for X-ray image analysis, which we refer to as SyntheX, can even outperform real data-trained models due to the effectiveness of training on a larger dataset. We demonstrate the potential of SyntheX on three clinical tasks: hip image analysis, surgical robotic tool detection, and COVID-19 lung lesion segmentation. SyntheX provides an opportunity to drastically accelerate the conception, design, and evaluation of intelligent systems for X-ray-based medicine. In addition, simulated image environments provide the opportunity to test novel instrumentation, design complementary surgical approaches, and envision novel techniques that improve outcomes, save time, or mitigate human error, freed from the ethical and practical considerations of live human data collection.
