Jouko Kinnari

VISTA: Monocular Segmentation-Based Mapping for Appearance and View-Invariant Global Localization

Jul 15, 2025

Abstract:Global localization is critical for autonomous navigation, particularly in scenarios where an agent must localize within a map generated in a different session or by another agent, as agents often have no prior knowledge about the correlation between reference frames. However, this task remains challenging in unstructured environments due to appearance changes induced by viewpoint variation, seasonal changes, spatial aliasing, and occlusions -- known failure modes for traditional place recognition methods. To address these challenges, we propose VISTA (View-Invariant Segmentation-Based Tracking for Frame Alignment), a novel open-set, monocular global localization framework that combines: 1) a front-end, object-based, segmentation and tracking pipeline, followed by 2) a submap correspondence search, which exploits geometric consistencies between environment maps to align vehicle reference frames. VISTA enables consistent localization across diverse camera viewpoints and seasonal changes, without requiring any domain-specific training or finetuning. We evaluate VISTA on seasonal and oblique-angle aerial datasets, achieving up to a 69% improvement in recall over baseline methods. Furthermore, we maintain a compact object-based map that is only 0.6% the size of the most memory-conservative baseline, making our approach capable of real-time implementation on resource-constrained platforms.

SOS-SLAM: Segmentation for Open-Set SLAM in Unstructured Environments

Jan 09, 2024

Abstract:We present a novel framework for open-set Simultaneous Localization and Mapping (SLAM) in unstructured environments that uses segmentation to create a map of objects and geometric relationships between objects for localization. Our system consists of 1) a front-end mapping pipeline using a zero-shot segmentation model to extract object masks from images and track them across frames to generate an object-based map and 2) a frame alignment pipeline that uses the geometric consistency of objects to efficiently localize within maps taken in a variety of conditions. This approach is shown to be more robust to changes in lighting and appearance than traditional feature-based SLAM systems or global descriptor methods. This is established by evaluating SOS-SLAM on the Batvik seasonal dataset which includes drone flights collected over a coastal plot of southern Finland during different seasons and lighting conditions. Across flights during varying environmental conditions, our approach achieves higher recall than benchmark methods with precision of 1.0. SOS-SLAM localizes within a reference map up to 14x faster than other feature based approaches and has a map size less than 0.4% the size of the most compact other maps. When considering localization performance from varying viewpoints, our approach outperforms all benchmarks from the same viewpoint and most benchmarks from different viewpoints. SOS-SLAM is a promising new approach for SLAM in unstructured environments that is robust to changes in lighting and appearance and is more computationally efficient than other approaches. We release our code and datasets: https://acl.mit.edu/SOS-SLAM/.
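As a rough illustration of what "geometric consistency of objects" can mean in practice, the sketch below checks whether pairs of putative object correspondences preserve inter-object distance across two maps. This is a generic pairwise-distance test, not the SOS-SLAM implementation; the function name `consistent_pairs`, the 2D centroid representation, and the tolerance `tol` are all assumptions made for the example.

```python
import math
from itertools import combinations

def consistent_pairs(map_a, map_b, matches, tol=0.5):
    """Return pairs of putative matches whose inter-object distances agree.

    map_a, map_b: lists of (x, y) object centroids, each in its own frame.
    matches: list of (i, j) putative correspondences (i in map_a, j in map_b).
    tol: hypothetical distance tolerance, in the maps' units.
    """
    consistent = []
    for (i1, j1), (i2, j2) in combinations(matches, 2):
        # Distances are invariant to rotation and translation between frames,
        # so two correct matches must give (nearly) equal distances.
        d_a = math.dist(map_a[i1], map_a[i2])
        d_b = math.dist(map_b[j1], map_b[j2])
        if abs(d_a - d_b) <= tol:
            consistent.append(((i1, j1), (i2, j2)))
    return consistent
```

Because the test only compares distances, it needs no prior knowledge of the transform between the two reference frames; a full pipeline would still have to select a mutually consistent set of matches and estimate the alignment from it.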

PUMA: Fully Decentralized Uncertainty-aware Multiagent Trajectory Planner with Real-time Image Segmentation-based Frame Alignment

Nov 07, 2023

Abstract:Fully decentralized, multiagent trajectory planners enable complex tasks like search and rescue or package delivery by ensuring safe navigation in unknown environments. However, deconflicting trajectories with other agents and ensuring collision-free paths in a fully decentralized setting is complicated by dynamic elements and localization uncertainty. To this end, this paper presents (1) an uncertainty-aware multiagent trajectory planner and (2) an image segmentation-based frame alignment pipeline. The uncertainty-aware planner propagates uncertainty associated with the future motion of detected obstacles, and by incorporating this propagated uncertainty into optimization constraints, the planner effectively navigates around obstacles. Unlike conventional methods that emphasize explicit obstacle tracking, our approach integrates implicit tracking. Sharing trajectories between agents can cause potential collisions due to frame misalignment. Addressing this, we introduce a novel frame alignment pipeline that rectifies inter-agent frame misalignment. This method leverages a zero-shot image segmentation model for detecting objects in the environment and a data association framework based on geometric consistency for map alignment. Our approach accurately aligns frames with only 0.18 m and 2.7 deg of mean frame alignment error in our most challenging simulation scenario. In addition, we conducted hardware experiments and successfully achieved 0.29 m and 2.59 deg of frame alignment error. Together with the alignment framework, our planner ensures safe navigation in unknown environments and collision avoidance in decentralized settings.

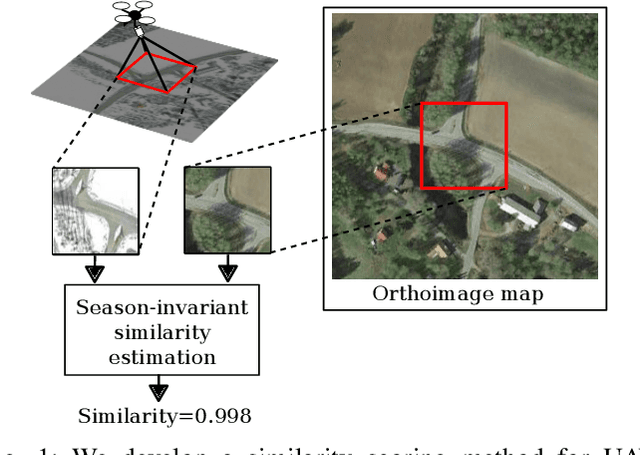

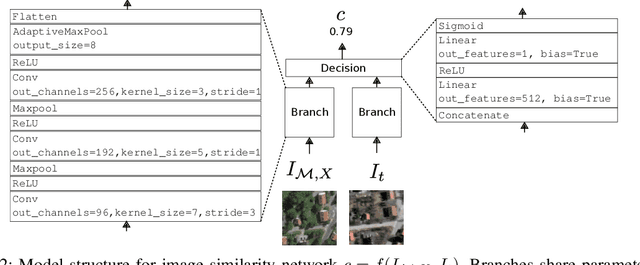

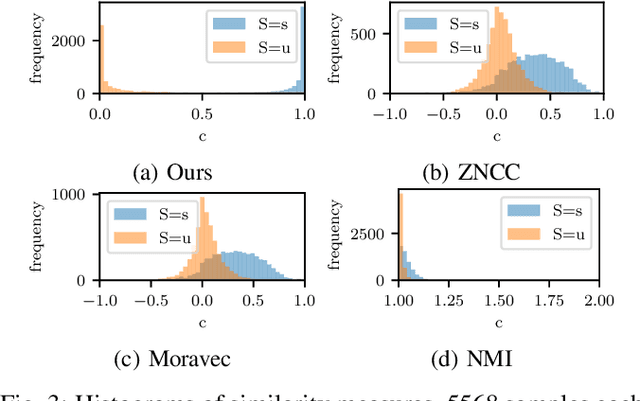

LSVL: Large-scale season-invariant visual localization for UAVs

Dec 07, 2022

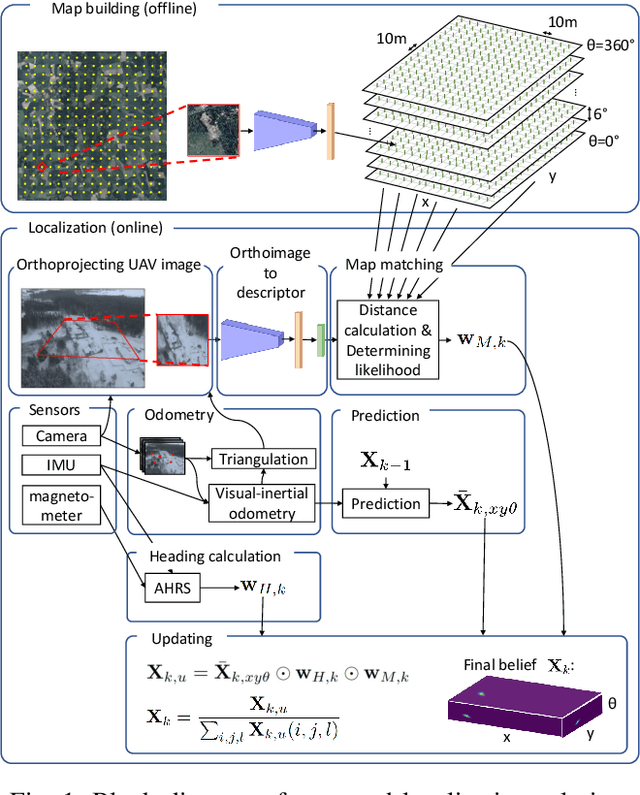

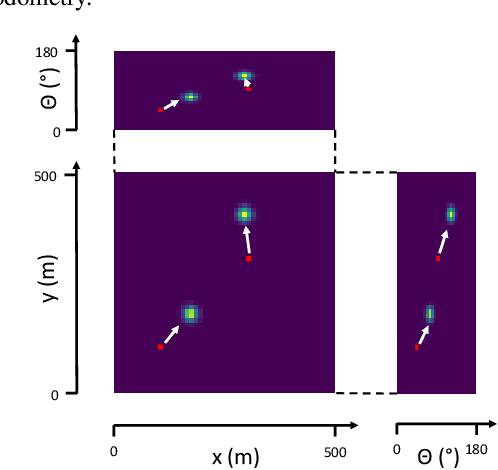

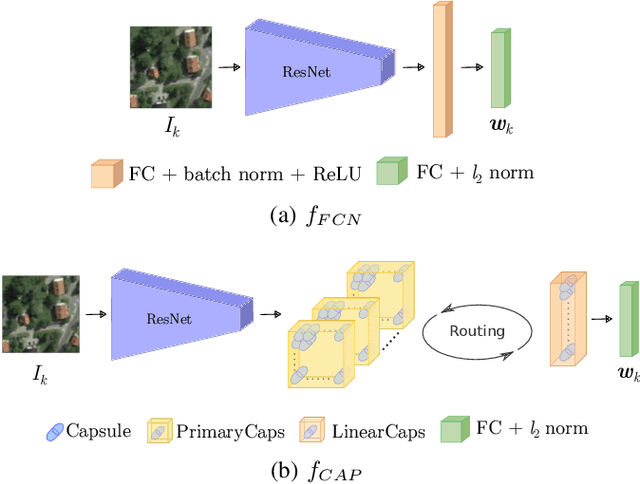

Abstract:Localization of autonomous unmanned aerial vehicles (UAVs) relies heavily on Global Navigation Satellite Systems (GNSS), which are susceptible to interference. Especially in security applications, robust localization algorithms independent of GNSS are needed to provide dependable operations of autonomous UAVs also in interfered conditions. Typical non-GNSS visual localization approaches rely on known starting pose, work only on a small-sized map, or require known flight paths before a mission starts. We consider the problem of localization with no information on initial pose or planned flight path. We propose a solution for global visual localization on a map at scale up to 100 km², based on matching orthoprojected UAV images to satellite imagery using learned season-invariant descriptors. We show that the method is able to determine heading, latitude and longitude of the UAV at 12.6-18.7 m lateral translation error in as few as 23.2-44.4 updates from an uninformed initialization, also in situations of significant seasonal appearance difference (winter-summer) between the UAV image and the map. We evaluate the characteristics of multiple neural network architectures for generating the descriptors, and likelihood estimation methods that are able to provide fast convergence and low localization error. We also evaluate the operation of the algorithm using real UAV data and evaluate running time on a real-time embedded platform. We believe this is the first work that is able to recover the pose of a UAV at this scale and rate of convergence, while allowing significant seasonal difference between camera observations and map.

Season-invariant GNSS-denied visual localization for UAVs

Oct 05, 2021

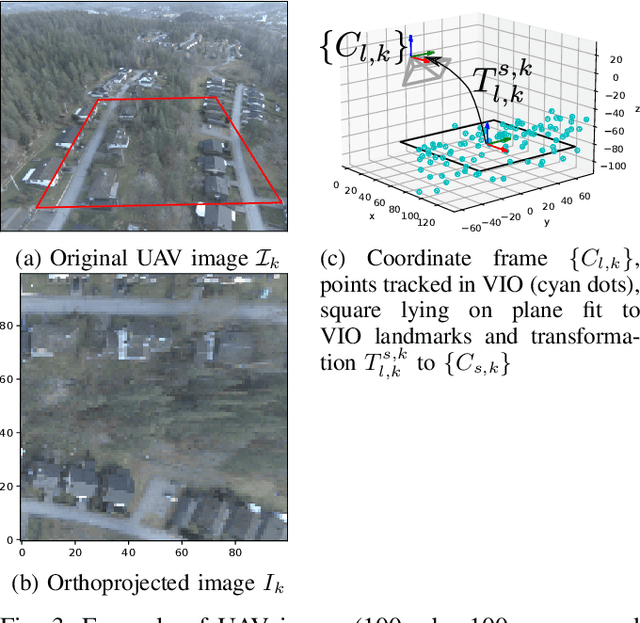

Abstract:Knowing the position and orientation of a UAV without GNSS is a critical functionality in autonomous operations of UAVs. Vision-based localization on a known map can be an effective solution, but it is burdened by two main problems: places have different appearance depending on weather and season, and the perspective discrepancy between the UAV camera image and the map makes matching hard. In this work, we propose a localization solution relying on matching of UAV camera images to georeferenced orthophotos with a trained CNN model that is invariant to significant seasonal appearance difference (winter-summer) between the camera image and map. We compare the convergence speed and localization accuracy of our solution to three other commonly used methods. The results show major improvements with respect to reference methods, especially under high seasonal variation. We finally demonstrate the ability of the method to successfully localize a real UAV, showing that the proposed method is robust to perspective changes.

GNSS-denied geolocalization of UAVs by visual matching of onboard camera images with orthophotos

Mar 26, 2021

Abstract:Localization of low-cost unmanned aerial vehicles (UAVs) often relies on Global Navigation Satellite Systems (GNSS). GNSS are susceptible to both natural disruptions to radio signal and intentional jamming and spoofing by an adversary. A typical way to provide georeferenced localization without GNSS for small UAVs is to have a downward-facing camera and match camera images to a map. The downward-facing camera adds cost, size, and weight to the UAV platform and the orientation limits its usability for other purposes. In this work, we propose a Monte-Carlo localization method for georeferenced localization of a UAV requiring no infrastructure using only inertial measurements, a camera facing an arbitrary direction, and an orthoimage map. We perform orthorectification of the UAV image, relying on a local planarity assumption of the environment, relaxing the requirement of downward-pointing camera. We propose a measure of goodness for the matching score of an orthorectified UAV image and a map. We demonstrate that the system is able to globally localize a UAV with modest requirements for initialization and map resolution.
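For readers unfamiliar with Monte-Carlo localization, the following is a minimal sketch of one predict-weight-resample cycle of a basic particle filter over 2D position. It is a textbook-style illustration under stated assumptions, not the method of the paper: `mcl_step`, the Gaussian motion noise, and the `match_score` callable (standing in for whatever image-to-map matching measure is used) are all hypothetical.

```python
import random

def mcl_step(particles, motion, match_score, rng, motion_noise=1.0):
    """One predict-weight-resample cycle of a basic particle filter.

    particles: list of (x, y) position hypotheses.
    motion: (dx, dy) displacement estimate, e.g. integrated inertial data.
    match_score: hypothetical callable (x, y) -> non-negative score for how
        well the current observation matches the map at that position.
    rng: a random.Random instance, passed in for reproducibility.
    """
    # Predict: move every particle by the motion estimate plus Gaussian noise.
    moved = [(x + motion[0] + rng.gauss(0.0, motion_noise),
              y + motion[1] + rng.gauss(0.0, motion_noise))
             for x, y in particles]
    # Weight: score each hypothesis against the map.
    weights = [match_score(x, y) for x, y in moved]
    if sum(weights) <= 0.0:
        return moved  # uninformative update; keep the predicted particles
    # Resample: draw particles in proportion to their weights.
    return rng.choices(moved, weights=weights, k=len(moved))
```

Starting from particles spread uniformly over the map and calling `mcl_step` repeatedly concentrates the particle set around positions whose scores stay consistently high, which is how such a filter can converge without a known starting pose.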
