Vadim Indelman

Online Risk-Averse Planning in POMDPs Using Iterated CVaR Value Function

Jan 28, 2026

Abstract: We study risk-sensitive planning under partial observability using the dynamic risk measure Iterated Conditional Value-at-Risk (ICVaR). A policy evaluation algorithm for ICVaR is developed with finite-time performance guarantees that do not depend on the cardinality of the action space. Building on this foundation, three widely used online planning algorithms, namely Sparse Sampling, Particle Filter Trees with Double Progressive Widening (PFT-DPW), and Partially Observable Monte Carlo Planning with Observation Widening (POMCPOW), are extended to optimize the ICVaR value function rather than the expectation of the return. Our formulations introduce a risk parameter $α$, where $α = 1$ recovers standard expectation-based planning and $α < 1$ induces increasing risk aversion. For ICVaR Sparse Sampling, we establish finite-time performance guarantees under the risk-sensitive objective, which further enable a novel exploration strategy tailored to ICVaR. Experiments on benchmark POMDP domains demonstrate that the proposed ICVaR planners achieve lower tail risk compared to their risk-neutral counterparts.
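To make the role of the risk parameter concrete, here is a minimal sketch of an empirical lower-tail CVaR and a nested (iterated) version over a small sampled tree. It is an illustration only, not the paper's algorithm: the function names `empirical_cvar` and `iterated_cvar`, the `(reward, children)` tree encoding, the equal weighting of children, and the lower-tail convention on returns are all assumptions made for this example.

```python
import math


def empirical_cvar(samples, alpha):
    """Mean of the worst (lowest) alpha-fraction of the samples.

    Assumption: samples are returns, so "worst" means smallest.
    With alpha = 1 this is the plain sample mean.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    ordered = sorted(samples)
    # Keep at least one sample so the tail is never empty.
    k = max(1, math.ceil(alpha * len(ordered)))
    tail = ordered[:k]
    return sum(tail) / len(tail)


def iterated_cvar(node, alpha, gamma=1.0):
    """Nested CVaR over a sampled tree (hypothetical encoding).

    node is a pair (reward, children); children is a list of nodes.
    CVaR is applied at every level to the children's values, instead
    of once to the total return.
    """
    reward, children = node
    if not children:
        return reward
    child_values = [iterated_cvar(child, alpha, gamma) for child in children]
    return reward + gamma * empirical_cvar(child_values, alpha)


# A one-step tree: immediate reward 0, four equally likely outcomes.
tree = (0.0, [(10.0, []), (0.0, []), (-10.0, []), (4.0, [])])

risk_neutral = iterated_cvar(tree, alpha=1.0)  # average of all outcomes
risk_averse = iterated_cvar(tree, alpha=0.5)   # average of the worst half
```

In this toy tree the risk-neutral value averages all four outcomes, while $α = 0.5$ averages only the two worst ones, so the risk-averse value is lower.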

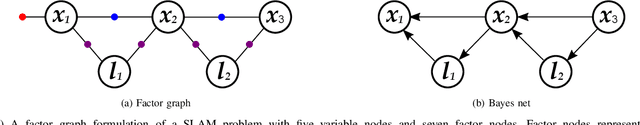

Towards Optimal Performance and Action Consistency Guarantees in Dec-POMDPs with Inconsistent Beliefs and Limited Communication

Dec 23, 2025

Abstract: Multi-agent decision-making under uncertainty is fundamental for effective and safe autonomous operation. In many real-world scenarios, each agent maintains its own belief over the environment and must plan actions accordingly. However, most existing approaches assume that all agents have identical beliefs at planning time, implying these beliefs are conditioned on the same data. Such an assumption is often impractical due to limited communication. In reality, agents frequently operate with inconsistent beliefs, which can lead to poor coordination and suboptimal, potentially unsafe, performance. In this paper, we address this critical challenge by introducing a novel decentralized framework for optimal joint action selection that explicitly accounts for belief inconsistencies. Our approach provides probabilistic guarantees for both action consistency and performance with respect to an open-loop multi-agent POMDP (which assumes all data is always communicated), and selectively triggers communication only when needed. Furthermore, we address the related question of whether, given a chosen joint action, the agents should share data to improve expected performance in inference. Simulation results show our approach outperforms state-of-the-art algorithms.

Value Gradients with Action Adaptive Search Trees in Continuous (PO)MDPs

Mar 15, 2025

Abstract: Solving Partially Observable Markov Decision Processes (POMDPs) in continuous state, action and observation spaces is key for autonomous planning in many real-world mobility and robotics applications. Current approaches are mostly sample based, and cannot hope to reach near-optimal solutions in reasonable time. We propose two complementary theoretical contributions. First, we formulate a novel Multiple Importance Sampling (MIS) tree for value estimation, which allows value information to be shared between sibling action branches. The novel MIS tree supports action updates during search time, such as gradient-based updates. Second, we propose a novel methodology to compute value gradients with online sampling based on transition likelihoods. It is applicable to MDPs, and we extend it to POMDPs via particle beliefs with the application of the propagated belief trick. The gradient estimator is computed in practice using the MIS tree with efficient Monte Carlo sampling. These two parts are combined into a new planning algorithm, Action Gradient Monte Carlo Tree Search (AGMCTS). In a simulated environment, we demonstrate its applicability and its advantages over continuous online POMDP solvers that rely solely on sampling, and we discuss further implications.

Anytime Incremental $ρ$POMDP Planning in Continuous Spaces

Feb 04, 2025

Abstract: Partially Observable Markov Decision Processes (POMDPs) provide a robust framework for decision-making under uncertainty in applications such as autonomous driving and robotic exploration. Their extension, $\rho$POMDPs, introduces belief-dependent rewards, enabling explicit reasoning about uncertainty. Existing online $\rho$POMDP solvers for continuous spaces rely on fixed belief representations, limiting adaptability and refinement, both of which are critical for tasks such as information-gathering. We present $\rho$POMCPOW, an anytime solver that dynamically refines belief representations, with formal guarantees of improvement over time. To mitigate the high computational cost of updating belief-dependent rewards, we propose a novel incremental computation approach. We demonstrate its effectiveness for common entropy estimators, reducing computational cost by orders of magnitude. Experimental results show that $\rho$POMCPOW outperforms state-of-the-art solvers in both efficiency and solution quality.

Online Hybrid-Belief POMDP with Coupled Semantic-Geometric Models and Semantic Safety Awareness

Jan 20, 2025

Abstract: Robots operating in complex and unknown environments frequently require geometric-semantic representations of the environment to safely perform their tasks. While inferring the environment, they must account for many possible scenarios when planning future actions. Since objects' class types are discrete and the robot's self-pose and the objects' poses are continuous, the environment can be represented by a hybrid discrete-continuous belief which is updated according to models and incoming data. Prior probabilities and observation models representing the environment can be learned from data using deep learning algorithms. Such models often couple environmental semantic and geometric properties. As a result, semantic variables are interconnected, causing semantic state space dimensionality to increase exponentially. In this paper, we consider planning under uncertainty using partially observable Markov decision processes (POMDPs) with hybrid semantic-geometric beliefs. The models and priors consider the coupling between semantic and geometric variables. Within the POMDP, we introduce the concept of semantically aware safety. Obtaining representative samples of the theoretical hybrid belief, required for estimating the value function, is very challenging. As a key contribution, we develop a novel form of the hybrid belief and leverage it to draw representative samples. We show that under certain conditions, the value function and probability of safety can be calculated efficiently with an explicit expectation over all possible semantic mappings. Our simulations show that our estimates of the objective function and probability of safety achieve similar levels of accuracy compared to estimators that run exhaustively on the entire semantic state-space using samples from the theoretical hybrid belief. Nevertheless, the complexity of our estimators is polynomial rather than exponential.

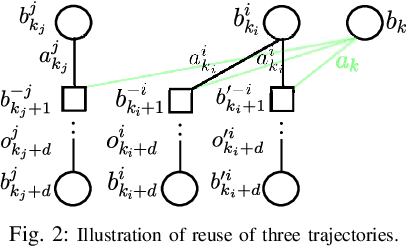

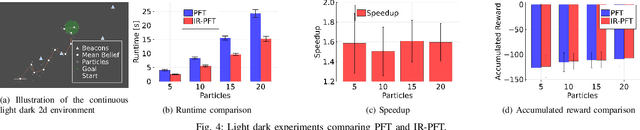

Previous Knowledge Utilization In Online Anytime Belief Space Planning

Dec 17, 2024

Abstract: Online planning under uncertainty remains a critical challenge in robotics and autonomous systems. While tree search techniques are commonly employed to construct partial future trajectories within computational constraints, most existing methods for continuous spaces discard information from previous planning sessions. This study presents a novel, computationally efficient approach that leverages historical planning data in current decision-making processes. We provide theoretical foundations for our information reuse strategy and introduce an algorithm based on Monte Carlo Tree Search (MCTS) that implements this approach. Experimental results demonstrate that our method significantly reduces computation time while maintaining high performance levels. Our findings suggest that integrating historical planning information can substantially improve the efficiency of online decision-making in uncertain environments, paving the way for more responsive and adaptive autonomous systems.

Anytime Probabilistically Constrained Provably Convergent Online Belief Space Planning

Nov 11, 2024

Abstract: Taking into account future risk is essential for an autonomously operating robot to find online not only the best but also a safe action to execute. In this paper, we build upon the recently introduced formulation of probabilistic belief-dependent constraints. We present an anytime approach employing the Monte Carlo Tree Search (MCTS) method in continuous domains. Unlike previous approaches, our method assures safety anytime with respect to the currently expanded search tree without relying on the convergence of the search. We prove convergence in probability with an exponential rate of a version of our algorithms and study the proposed techniques via extensive simulations. Even with a tiny number of tree queries, the best action found by our approach is much safer than the baseline. Moreover, our approach consistently finds an action that is better than the baseline in terms of the objective. This is because we revise the values and statistics maintained in the search tree and remove from them the contribution of the pruned actions.

Simplified POMDP Planning with an Alternative Observation Space and Formal Performance Guarantees

Oct 10, 2024

Abstract: Online planning under uncertainty in partially observable domains is an essential capability in robotics and AI. The partially observable Markov decision process (POMDP) is a mathematically principled framework for addressing decision-making problems in this challenging setting. However, finding an optimal solution for POMDPs is computationally expensive and is feasible only for small problems. In this work, we contribute a novel method to simplify POMDPs by switching to an alternative, more compact, observation space and a simplified model to speed up planning with formal performance guarantees. We introduce the notion of belief tree topology, which encodes the levels and branches in the tree that use the original and alternative observation space and models. Each belief tree topology comes with its own policy space and planning performance. Our key contribution is to derive bounds between the optimal Q-function of the original POMDP and the simplified tree defined by a given topology with a corresponding simplified policy space. These bounds are then used as an adaptation mechanism between different tree topologies until the optimal action of the original POMDP can be determined. Further, we consider a specific instantiation of our framework, where the alternative observation space and model correspond to a setting where the state is fully observable. We evaluate our approach in simulation, considering exact and approximate POMDP solvers and demonstrating a significant speedup while preserving solution quality. We believe this work opens new exciting avenues for online POMDP planning with formal performance guarantees.

Simplification of Risk Averse POMDPs with Performance Guarantees

Jun 05, 2024

Abstract: Risk averse decision making under uncertainty in partially observable domains is a fundamental problem in AI and essential for reliable autonomous agents. In our case, the problem is modeled using partially observable Markov decision processes (POMDPs), where the value function is the conditional value at risk (CVaR) of the return. Calculating an optimal solution for POMDPs is computationally intractable in general. In this work we develop a simplification framework to speed up the evaluation of the value function, while providing performance guarantees. We consider as simplification a computationally cheaper belief-MDP transition model, that can correspond, e.g., to cheaper observation or transition models. Our contributions include general bounds for CVaR that allow bounding the CVaR of a random variable X, using a random variable Y, by assuming bounds between their cumulative distributions. We then derive bounds for the CVaR value function in a POMDP setting, and show how to bound the value function using the computationally cheaper belief-MDP transition model and without accessing the computationally expensive model in real-time. Then, we provide theoretical performance guarantees for the estimated bounds. Our results apply to a general simplification of a belief-MDP transition model and support simplification of both the observation and state transition models simultaneously.

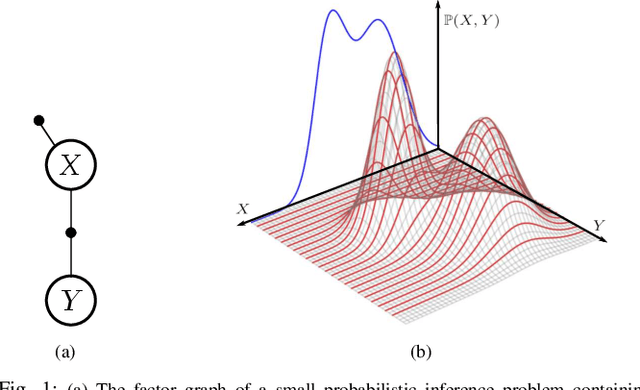

A Slices Perspective for Incremental Nonparametric Inference in High Dimensional State Spaces

May 26, 2024

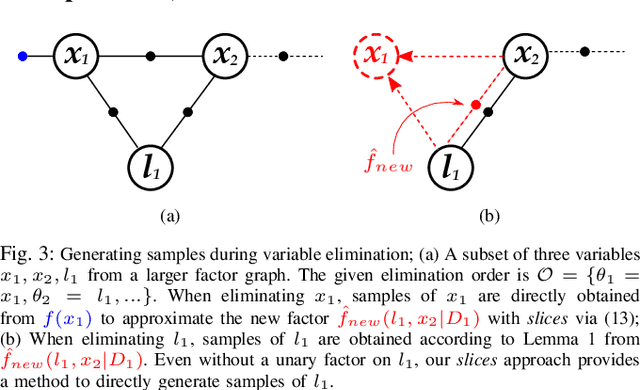

Abstract: We introduce an innovative method for incremental nonparametric probabilistic inference in high-dimensional state spaces. Our approach leverages slices from high-dimensional surfaces to efficiently approximate posterior distributions of any shape. Unlike many existing graph-based methods, our slices perspective eliminates the need for additional intermediate reconstructions, maintaining a more accurate representation of posterior distributions. Additionally, we propose a novel heuristic to balance between accuracy and efficiency, enabling real-time operation in nonparametric scenarios. In empirical evaluations on synthetic and real-world datasets, our slices approach consistently outperforms other state-of-the-art methods. It demonstrates superior accuracy and achieves a significant reduction in computational complexity, often by an order of magnitude.
