Michael Novitsky

Value Gradients with Action Adaptive Search Trees in Continuous (PO)MDPs

Mar 15, 2025

Abstract: Solving Partially Observable Markov Decision Processes (POMDPs) in continuous state, action, and observation spaces is key for autonomous planning in many real-world mobility and robotics applications. Current approaches are mostly sample-based and cannot hope to reach near-optimal solutions in reasonable time. We propose two complementary theoretical contributions. First, we formulate a novel Multiple Importance Sampling (MIS) tree for value estimation that allows value information to be shared between sibling action branches. The novel MIS tree supports action updates during search time, such as gradient-based updates. Second, we propose a novel methodology to compute value gradients with online sampling based on transition likelihoods. It is applicable to MDPs, and we extend it to POMDPs via particle beliefs with the application of the propagated belief trick. The gradient estimator is computed in practice using the MIS tree with efficient Monte Carlo sampling. These two parts are combined into a new planning algorithm, Action Gradient Monte Carlo Tree Search (AGMCTS). We demonstrate its applicability in a simulated environment, show its advantages over continuous online POMDP solvers that rely solely on sampling, and discuss further implications.

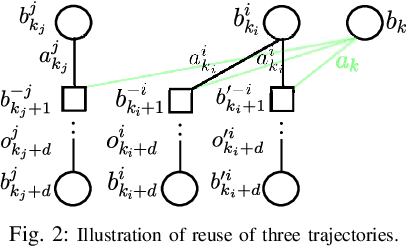

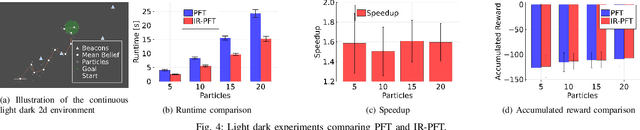

Previous Knowledge Utilization In Online Anytime Belief Space Planning

Dec 17, 2024

Abstract: Online planning under uncertainty remains a critical challenge in robotics and autonomous systems. While tree search techniques are commonly employed to construct partial future trajectories within computational constraints, most existing methods for continuous spaces discard information from previous planning sessions. This study presents a novel, computationally efficient approach that leverages historical planning data in current decision-making processes. We provide theoretical foundations for our information reuse strategy and introduce an algorithm based on Monte Carlo Tree Search (MCTS) that implements this approach. Experimental results demonstrate that our method significantly reduces computation time while maintaining high performance levels. Our findings suggest that integrating historical planning information can substantially improve the efficiency of online decision-making in uncertain environments, paving the way for more responsive and adaptive autonomous systems.
