Shounak Das

Department of Mechanical and Aerospace Engineering, West Virginia University, Morgantown, USA

BiPrompt: Bilateral Prompt Optimization for Visual and Textual Debiasing in Vision-Language Models

Jan 05, 2026

Abstract:Vision-language foundation models such as CLIP exhibit impressive zero-shot generalization yet remain vulnerable to spurious correlations across visual and textual modalities. Existing debiasing approaches often address a single modality, either visual or textual, leading to partial robustness and unstable adaptation under distribution shifts. We propose a bilateral prompt optimization framework (BiPrompt) that simultaneously mitigates non-causal feature reliance in both modalities during test-time adaptation. On the visual side, it employs structured attention-guided erasure to suppress background activations and enforce orthogonal prediction consistency between causal and spurious regions. On the textual side, it introduces balanced prompt normalization, a learnable re-centering mechanism that aligns class embeddings toward an isotropic semantic space. Together, these modules jointly minimize conditional mutual information between spurious cues and predictions, steering the model toward causal, domain-invariant reasoning without retraining or domain supervision. Extensive evaluations on real-world and synthetic bias benchmarks demonstrate consistent improvements in both average and worst-group accuracies over prior test-time debiasing methods, establishing a lightweight yet effective path toward trustworthy and causally grounded vision-language adaptation.

FEDTAIL: Federated Long-Tailed Domain Generalization with Sharpness-Guided Gradient Matching

Jun 10, 2025

Abstract:Domain Generalization (DG) seeks to train models that perform reliably on unseen target domains without access to target data during training. While recent progress in smoothing the loss landscape has improved generalization, existing methods often falter under long-tailed class distributions and conflicting optimization objectives. We introduce FedTAIL, a federated domain generalization framework that explicitly addresses these challenges through sharpness-guided, gradient-aligned optimization. Our method incorporates a gradient coherence regularizer to mitigate conflicts between classification and adversarial objectives, leading to more stable convergence. To combat class imbalance, we perform class-wise sharpness minimization and propose a curvature-aware dynamic weighting scheme that adaptively emphasizes underrepresented tail classes. Furthermore, we enhance conditional distribution alignment by integrating sharpness-aware perturbations into entropy regularization, improving robustness under domain shift. FedTAIL unifies optimization harmonization, class-aware regularization, and conditional alignment into a scalable, federated-compatible framework. Extensive evaluations across standard domain generalization benchmarks demonstrate that FedTAIL achieves state-of-the-art performance, particularly in the presence of domain shifts and label imbalance, validating its effectiveness in both centralized and federated settings. Code: https://github.com/sunnyinAI/FedTail
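The sharpness-guided optimization mentioned in the FedTAIL abstract builds on the general idea of sharpness-aware minimization. The sketch below shows only that generic building block on a toy quadratic loss; it is not FedTAIL's class-wise or federated variant, and the names `sam_step`, `grad_fn`, `lr`, and `rho` are assumptions made for illustration.

```python
import numpy as np

def sam_step(w, grad_fn, lr=0.1, rho=0.05):
    """One generic sharpness-aware update (illustrative, not FedTAIL itself)."""
    g = grad_fn(w)
    # Move to the approximate worst-case point inside a ball of radius rho.
    eps = rho * g / (np.linalg.norm(g) + 1e-12)
    # Descend using the gradient evaluated at the perturbed weights.
    g_sharp = grad_fn(w + eps)
    return w - lr * g_sharp

# Toy quadratic loss 0.5 * w^T A w (hypothetical stand-in for a training loss).
A = np.diag([1.0, 10.0])
loss = lambda w: 0.5 * w @ A @ w
grad = lambda w: A @ w

w0 = np.array([1.0, 1.0])
w = w0.copy()
for _ in range(50):
    w = sam_step(w, grad)
print(loss(w0), loss(w))
```

With a fixed `rho`, the iterate settles into a small neighbourhood of the minimum rather than converging exactly, because the perturbation never vanishes.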

Scalable Whole Slide Image Representation Using K-Mean Clustering and Fisher Vector Aggregation

Jan 21, 2025

Abstract:Whole slide images (WSIs) are high-resolution, gigapixel-sized images that pose significant computational challenges for traditional machine learning models due to their size and heterogeneity. In this paper, we present a scalable and efficient methodology for WSI classification by leveraging patch-based feature extraction, clustering, and Fisher vector encoding. Initially, WSIs are divided into fixed-size patches, and deep feature embeddings are extracted from each patch using a pre-trained convolutional neural network (CNN). These patch-level embeddings are subsequently clustered using K-means clustering, where each cluster aggregates semantically similar regions of the WSI. To effectively summarize each cluster, Fisher vector representations are computed by modeling the distribution of patch embeddings in each cluster as a parametric Gaussian mixture model (GMM). The Fisher vectors from each cluster are concatenated into a high-dimensional feature vector, creating a compact and informative representation of the entire WSI. This feature vector is then used by a classifier to predict the WSI's diagnostic label. Our method captures local and global tissue structures and yields robust performance for large-scale WSI classification, demonstrating superior accuracy and scalability compared to other approaches.
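The pipeline in this abstract (cluster patch embeddings, encode each cluster as a Fisher vector, concatenate) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the embeddings are random stand-ins for CNN features, the GMM parameters are hand-set rather than fitted, a single shared diagonal GMM is used for every cluster, and the helper names `kmeans`, `fisher_vector`, and `wsi_descriptor` are hypothetical.

```python
import numpy as np

def kmeans(x, k, iters=20, seed=0):
    """Plain Lloyd's algorithm; returns a cluster label per row of x."""
    rng = np.random.default_rng(seed)
    centers = x[rng.choice(len(x), size=k, replace=False)]
    for _ in range(iters):
        d = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = x[labels == j].mean(axis=0)
    return labels

def fisher_vector(x, weights, means, variances):
    """Fisher vector of x (N, D) under a diagonal GMM with K components:
    normalized gradients w.r.t. the means and standard deviations."""
    weights, means, variances = map(np.asarray, (weights, means, variances))
    n = x.shape[0]
    diff = (x[:, None, :] - means[None, :, :]) / np.sqrt(variances)[None, :, :]
    log_p = (np.log(weights)[None, :]
             - 0.5 * np.sum(diff ** 2, axis=2)
             - 0.5 * np.sum(np.log(2 * np.pi * variances), axis=1)[None, :])
    log_p -= log_p.max(axis=1, keepdims=True)      # numerical stability
    gamma = np.exp(log_p)
    gamma /= gamma.sum(axis=1, keepdims=True)      # responsibilities (N, K)
    g_mu = np.einsum('nk,nkd->kd', gamma, diff) / (n * np.sqrt(weights)[:, None])
    g_sigma = (np.einsum('nk,nkd->kd', gamma, diff ** 2 - 1)
               / (n * np.sqrt(2 * weights)[:, None]))
    return np.concatenate([g_mu.ravel(), g_sigma.ravel()])

def wsi_descriptor(embeddings, k, weights, means, variances):
    """Concatenate one Fisher vector per K-means cluster of patch embeddings."""
    labels = kmeans(embeddings, k)
    parts = []
    for j in range(k):
        members = embeddings[labels == j]
        if len(members) == 0:                      # empty cluster: zero block
            parts.append(np.zeros(2 * np.asarray(means).size))
        else:
            parts.append(fisher_vector(members, weights, means, variances))
    return np.concatenate(parts)

# Hypothetical data: 200 patch embeddings of dimension 8, 3 clusters, 2-component GMM.
rng = np.random.default_rng(1)
emb = rng.normal(size=(200, 8))
gmm_w = np.array([0.5, 0.5])
gmm_mu = np.stack([np.zeros(8), np.ones(8)])
gmm_var = np.ones((2, 8))
desc = wsi_descriptor(emb, 3, gmm_w, gmm_mu, gmm_var)
print(desc.shape)
```

The descriptor length is (number of clusters) × 2 × (GMM components) × (embedding dimension), so it is independent of how many patches the slide contains.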

Clustered Patch Embeddings for Permutation-Invariant Classification of Whole Slide Images

Nov 13, 2024

Abstract:Whole Slide Imaging (WSI) is a cornerstone of digital pathology, offering detailed insights critical for diagnosis and research. Yet, the gigapixel size of WSIs imposes significant computational challenges, limiting their practical utility. Our novel approach addresses these challenges by leveraging various encoders for intelligent data reduction and employing a different classification model to ensure robust, permutation-invariant representations of WSIs. A key innovation of our method is the ability to distill the complex information of an entire WSI into a single vector, effectively capturing the essential features needed for accurate analysis. This approach significantly enhances the computational efficiency of WSI analysis, enabling more accurate pathological assessments without the need for extensive computational resources. This breakthrough equips us with the capability to effectively address the challenges posed by large image resolutions in whole-slide imaging, paving the way for more scalable and effective utilization of WSIs in medical diagnostics and research, marking a significant advancement in the field.

Domain-Adaptive Learning: Unsupervised Adaptation for Histology Images with Improved Loss Function Combination

Sep 29, 2023

Abstract:This paper presents a novel approach for unsupervised domain adaptation (UDA) targeting H&E-stained histology images. Existing adversarial domain adaptation methods may not effectively align different domains of multimodal distributions associated with classification problems. The objective is to enhance domain alignment and reduce domain shifts between these domains by leveraging their unique characteristics. Our approach proposes a novel loss function along with carefully selected existing loss functions tailored to address the challenges specific to histology images. This loss combination not only makes the model accurate and robust but also speeds up training convergence. We specifically focus on leveraging histology-specific features, such as tissue structure and cell morphology, to enhance adaptation performance in the histology domain. The proposed method is extensively evaluated for accuracy, robustness, and generalization, surpassing state-of-the-art techniques for histology images. We conducted extensive experiments on the FHIST dataset, and the results show that our proposed method, Domain Adaptive Learning (DAL), significantly surpasses the ViT-based and CNN-based SoTA methods by 1.41% and 6.56%, respectively.

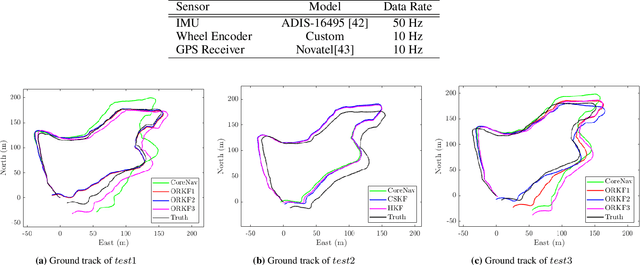

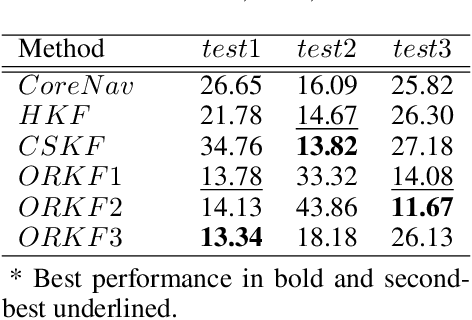

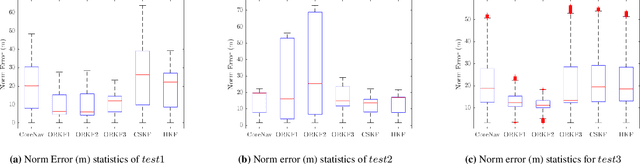

A Comparison of Robust Kalman Filters for Improving Wheel-Inertial Odometry in Planetary Rovers

Dec 15, 2021

Abstract:This paper compares the performance of adaptive and robust Kalman filter algorithms in improving wheel-inertial odometry on low-feature, rough terrain. Approaches include classical adaptive and robust methods as well as variational methods, which are evaluated experimentally on a wheeled rover in terrain similar to what would be encountered in planetary exploration. Variational filters show improved solution accuracy compared to the classical adaptive filters and are able to handle erroneous wheel odometry measurements and maintain good localization over longer distances without significant drift. We also show how varying the parameters affects localization performance.
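To make the idea of a robust Kalman filter concrete, the sketch below shows one common classical technique: Huber-style inflation of the measurement noise when the normalized innovation is large. It is a generic scalar illustration, not one of the specific filters compared in the paper; the random-walk model, the function name `robust_kf_1d`, and all parameter values are assumptions.

```python
def robust_kf_1d(measurements, q=0.01, r=0.25, huber_k=2.0, x0=0.0, p0=1.0):
    """Scalar Kalman filter with Huber-style measurement down-weighting."""
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict step for a random-walk state model.
        p = p + q
        # Innovation and its normalized magnitude.
        nu = z - x
        ratio = abs(nu) / (p + r) ** 0.5
        # Inflate the measurement variance when the innovation looks like an outlier.
        r_eff = r if ratio <= huber_k else r * ratio / huber_k
        # Standard update with the (possibly inflated) measurement variance.
        k = p / (p + r_eff)
        x = x + k * nu
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates

# Hypothetical data: a constant signal with a single gross outlier at index 10.
zs = [1.0] * 10 + [50.0] + [1.0] * 10
robust = robust_kf_1d(zs)
plain = robust_kf_1d(zs, huber_k=float("inf"))  # no down-weighting
print(robust[10], plain[10])
```

Setting `huber_k` to infinity recovers the ordinary Kalman filter, which makes the effect of the down-weighting easy to compare on the same data.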

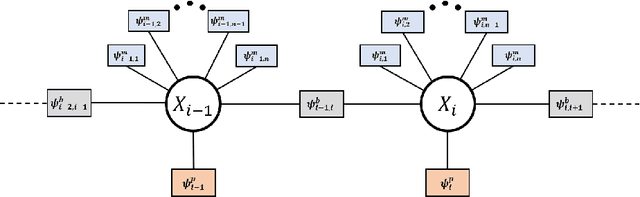

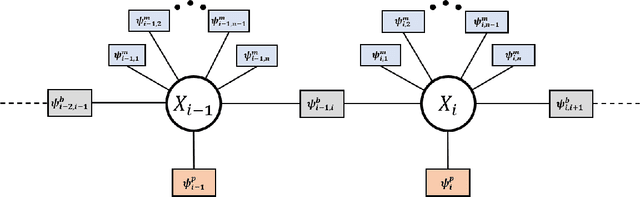

Review of Factor Graphs for Robust GNSS Applications

Dec 14, 2021

Abstract:Factor graphs have recently emerged as an alternative solution method for GNSS positioning. In this article, we review how factor graphs are implemented in GNSS, some of their advantages over Kalman filters, and their importance in making positioning solutions more robust to degraded measurements. We also discuss how factor graphs can be an important tool for the radio-navigation community.

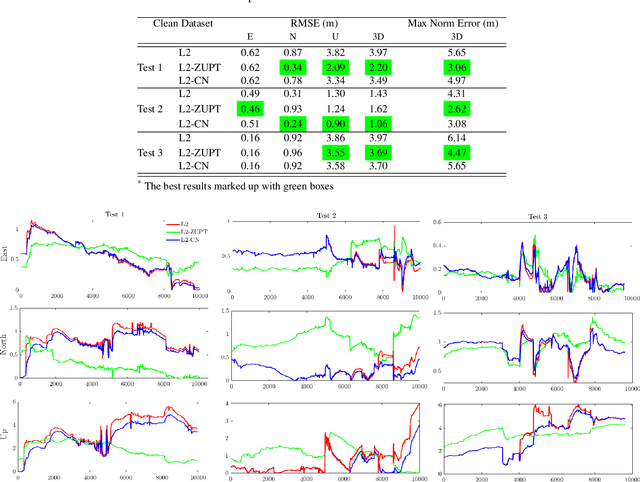

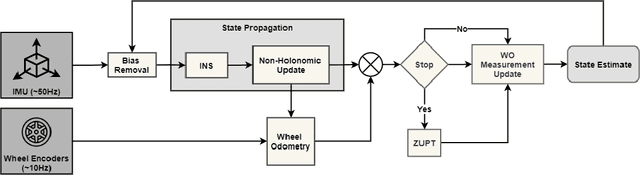

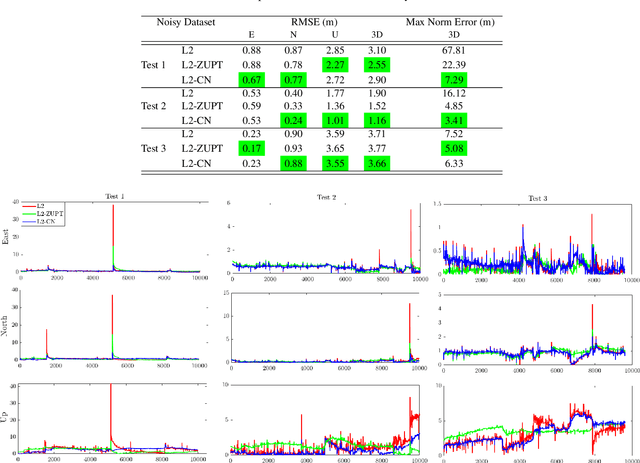

ZUPT Aided GNSS Factor Graph with Inertial Navigation Integration for Wheeled Robots

Dec 14, 2021

Abstract:In this work, we demonstrate the importance of zero velocity information for global navigation satellite system (GNSS) based navigation. The effectiveness of using zero velocity information with the zero velocity update (ZUPT) for inertial navigation applications has been shown in the literature. Here we leverage this information and add it as a position constraint in a GNSS factor graph. We also compare its performance to a GNSS/inertial navigation system (INS) coupled factor graph. We tested our ZUPT-aided factor graph method on three datasets and compared it with the GNSS-only factor graph.
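The idea of adding zero velocity information as a position constraint can be illustrated with a toy one-dimensional factor graph solved as weighted linear least squares. This is only a sketch under simplifying assumptions (1D positions, direct position measurements standing in for GNSS, hand-picked noise levels); the function `solve_zupt_graph` and its parameters are hypothetical and do not reproduce the paper's formulation.

```python
import numpy as np

def solve_zupt_graph(gnss, stationary, sigma_gnss=1.0, sigma_zupt=0.01):
    """Estimate 1D positions from noisy GNSS-like fixes plus ZUPT factors.

    A ZUPT factor ties consecutive positions together (x[i+1] - x[i] = 0)
    whenever the platform is flagged stationary at both epochs.
    """
    n = len(gnss)
    rows, rhs = [], []
    # Unary factors: each position should match its measurement.
    for i, z in enumerate(gnss):
        row = np.zeros(n)
        row[i] = 1.0 / sigma_gnss
        rows.append(row)
        rhs.append(z / sigma_gnss)
    # Binary ZUPT factors: no position change while stationary.
    for i in range(n - 1):
        if stationary[i] and stationary[i + 1]:
            row = np.zeros(n)
            row[i], row[i + 1] = -1.0 / sigma_zupt, 1.0 / sigma_zupt
            rows.append(row)
            rhs.append(0.0)
    x, *_ = np.linalg.lstsq(np.vstack(rows), np.array(rhs), rcond=None)
    return x

# Hypothetical stationary segment: the true position is constant, fixes are noisy.
fixes = [0.3, -0.2, 0.1, -0.4, 0.2]
est = solve_zupt_graph(fixes, stationary=[True] * 5)
print(est)
```

Because the ZUPT factors are much tighter than the measurement factors, the estimates during the stationary segment collapse toward a common value instead of following the noise in each fix.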

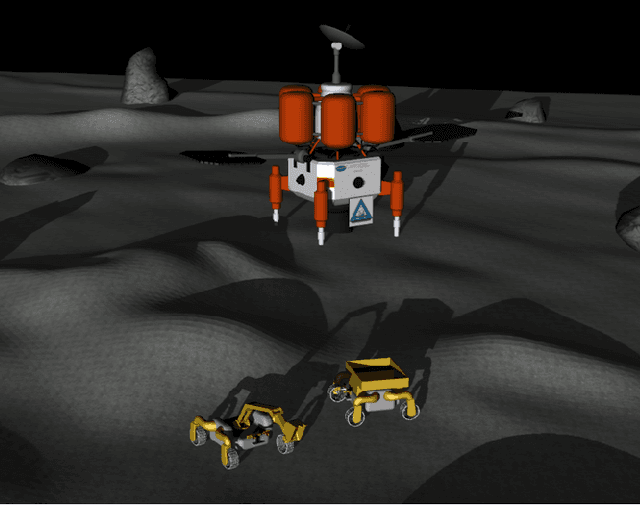

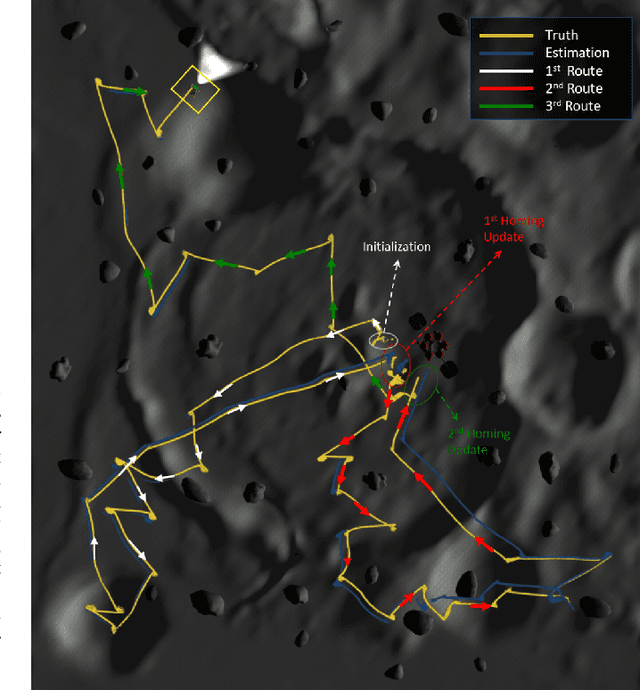

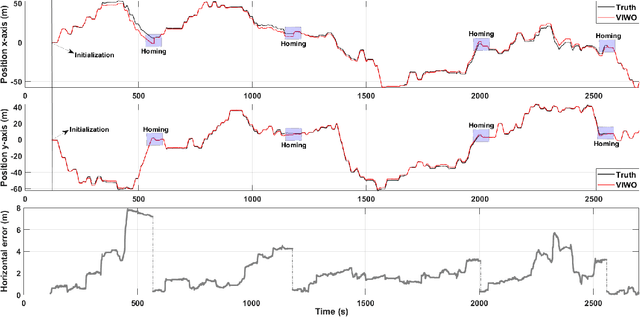

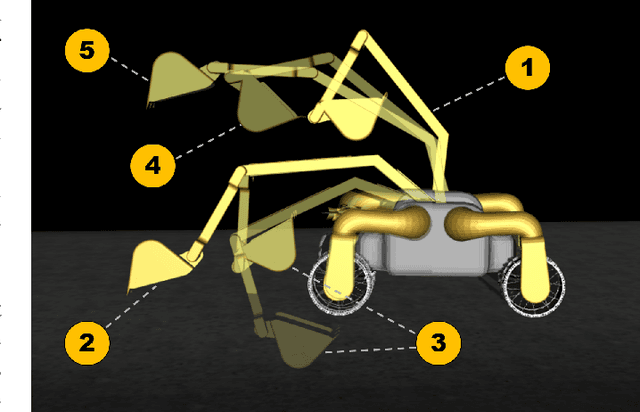

NASA Space Robotics Challenge 2 Qualification Round: An Approach to Autonomous Lunar Rover Operations

Sep 20, 2021

Abstract:Plans for establishing a long-term human presence on the Moon will require substantial increases in robot autonomy and multi-robot coordination to support establishing a lunar outpost. To achieve these objectives, algorithm design choices for software development need to be tested and validated for expected scenarios such as autonomous in-situ resource utilization (ISRU), localization in challenging environments, and multi-robot coordination. However, real-world experiments are extremely challenging and limited for extraterrestrial environments. Also, realistic simulation demonstrations in these environments are still rare, yet they are needed for initial algorithm testing. To help meet some of these needs, the NASA Centennial Challenges program established the Space Robotics Challenge Phase 2 (SRC2), which consists of virtual robotic systems in a realistic lunar simulation environment, where a group of mobile robots was tasked with reporting volatile locations within a global map, excavating and transporting these resources, and detecting and localizing a target of interest. The main goal of this article is to share our team's experiences with the design trade-offs involved in performing autonomous robotic operations in a virtual lunar environment and to share strategies for completing the mission requirements posed by the NASA SRC2 competition during the qualification round. Of the 114 teams that registered for participation in the NASA SRC2, team Mountaineers finished as one of only six teams to receive the top qualification round prize.
