Hehui Zheng

Soft Robotics Lab ETH Zurich, ETH AI Center

Learning Soft Robotic Dynamics with Active Exploration

Oct 31, 2025

Abstract:Soft robots offer unmatched adaptability and safety in unstructured environments, yet their compliant, high-dimensional, and nonlinear dynamics make modeling for control notoriously difficult. Existing data-driven approaches often fail to generalize, constrained by narrowly focused task demonstrations or inefficient random exploration. We introduce SoftAE, an uncertainty-aware active exploration framework that autonomously learns task-agnostic and generalizable dynamics models of soft robotic systems. SoftAE employs probabilistic ensemble models to estimate epistemic uncertainty and actively guides exploration toward underrepresented regions of the state-action space, achieving efficient coverage of diverse behaviors without task-specific supervision. We evaluate SoftAE on three simulated soft robotic platforms -- a continuum arm, an articulated fish in fluid, and a musculoskeletal leg with hybrid actuation -- and on a pneumatically actuated continuum soft arm in the real world. Compared with random exploration and task-specific model-based reinforcement learning, SoftAE produces more accurate dynamics models, enables superior zero-shot control on unseen tasks, and maintains robustness under sensing noise, actuation delays, and nonlinear material effects. These results demonstrate that uncertainty-driven active exploration can yield scalable, reusable dynamics models across diverse soft robotic morphologies, representing a step toward more autonomous, adaptable, and data-efficient control in compliant robots.
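The idea of steering exploration by ensemble disagreement can be illustrated with a toy sketch. This is not the SoftAE implementation: the 1-D linear "dynamics models", the variance-based disagreement score, and all names (`make_ensemble`, `disagreement`, `select_action`) are assumptions made purely for illustration.

```python
import random
import statistics

def make_ensemble(n_members, seed=0):
    """Hypothetical ensemble: each member is a 1-D linear model
    s' = a * s + b * u with slightly different coefficients,
    standing in for independently trained dynamics models."""
    rng = random.Random(seed)
    return [(1.0 + rng.gauss(0, 0.05), 0.5 + rng.gauss(0, 0.2))
            for _ in range(n_members)]

def predict(member, state, action):
    """Next-state prediction of a single ensemble member."""
    a, b = member
    return a * state + b * action

def disagreement(ensemble, state, action):
    """Variance of the members' predictions, used here as a
    simple proxy for epistemic uncertainty."""
    preds = [predict(m, state, action) for m in ensemble]
    return statistics.pvariance(preds)

def select_action(ensemble, state, candidates):
    """Pick the candidate action on which the ensemble disagrees most."""
    return max(candidates, key=lambda u: disagreement(ensemble, state, u))

ensemble = make_ensemble(n_members=5)
chosen = select_action(ensemble, state=0.0, candidates=[-0.5, 0.0, 1.0])
```

In this toy setting the members agree exactly when both state and action are zero, so the selection rule prefers the largest-magnitude action, where their predictions diverge most.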

PokeFlex: A Real-World Dataset of Deformable Objects for Robotics

Oct 10, 2024

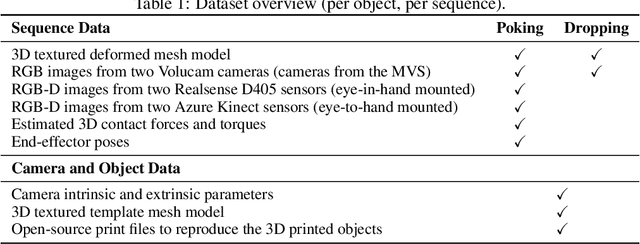

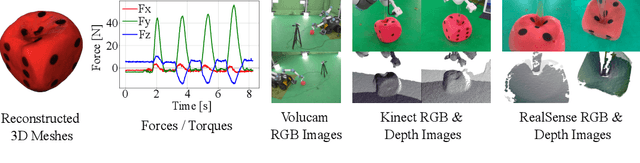

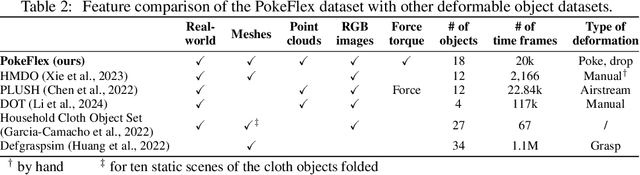

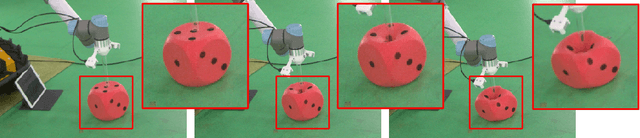

Abstract:Data-driven methods have shown great potential in solving challenging manipulation tasks, however, their application in the domain of deformable objects has been constrained, in part, by the lack of data. To address this, we propose PokeFlex, a dataset featuring real-world paired and annotated multimodal data that includes 3D textured meshes, point clouds, RGB images, and depth maps. Such data can be leveraged for several downstream tasks such as online 3D mesh reconstruction, and it can potentially enable underexplored applications such as the real-world deployment of traditional control methods based on mesh simulations. To deal with the challenges posed by real-world 3D mesh reconstruction, we leverage a professional volumetric capture system that allows complete 360° reconstruction. PokeFlex consists of 18 deformable objects with varying stiffness and shapes. Deformations are generated by dropping objects onto a flat surface or by poking the objects with a robot arm. Interaction forces and torques are also reported for the latter case. Using different data modalities, we demonstrated a use case for the PokeFlex dataset in online 3D mesh reconstruction. We refer the reader to our website ( https://pokeflex-dataset.github.io/ ) for demos and examples of our dataset.

PokeFlex: Towards a Real-World Dataset of Deformable Objects for Robotic Manipulation

Sep 25, 2024

Abstract:Advancing robotic manipulation of deformable objects can enable automation of repetitive tasks across multiple industries, from food processing to textiles and healthcare. Yet robots struggle with the high dimensionality of deformable objects and their complex dynamics. While data-driven methods have shown potential for solving manipulation tasks, their application in the domain of deformable objects has been constrained by the lack of data. To address this, we propose PokeFlex, a pilot dataset featuring real-world 3D mesh data of actively deformed objects, together with the corresponding forces and torques applied by a robotic arm, using a simple poking strategy. Deformations are captured with a professional volumetric capture system that allows for complete 360-degree reconstruction. The PokeFlex dataset consists of five deformable objects with varying stiffness and shapes. Additionally, we leverage the PokeFlex dataset to train a vision model for online 3D mesh reconstruction from a single image and a template mesh. We refer readers to the supplementary material and to our website ( https://pokeflex-dataset.github.io/ ) for demos and examples of our dataset.

Fast Point-cloud to Mesh Reconstruction for Deformable Object Tracking

Nov 05, 2023

Abstract:The world around us is full of soft objects that we as humans learn to perceive and deform with dexterous hand movements from a young age. In order for a robotic hand to be able to control soft objects, it needs to acquire online state feedback of the deforming object. While RGB-D cameras can collect occluded information at a rate of 30 Hz, the latter does not represent a continuously trackable object surface. Hence, in this work, we developed a method that can create deforming meshes of deforming point clouds at a speed of above 50 Hz for different categories of objects. The reconstruction of meshes from point clouds has been long studied in the field of computer graphics under 3D reconstruction and 4D reconstruction, however both lack the speed and generalizability needed for robotics applications. Our model is designed using a point cloud auto-encoder and a Real-NVP architecture. The latter is a continuous flow neural network with manifold-preservation properties. Our model takes a template mesh which is the mesh of an object in its canonical state and then deforms the template mesh to match a deformed point cloud of the object. Our method can perform mesh reconstruction and tracking at a rate of 58 Hz for deformations of six different YCB categories. An instance of a downstream application can be the control algorithm for a robotic hand that requires online feedback from the state of a manipulated object which would allow online grasp adaptation in a closed-loop manner. Furthermore, the tracking capacity that our method provides can help in the system identification of deforming objects in a marker-free approach. In future work, we will extend our method to more categories of objects and real-world deforming point clouds.
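Real-NVP models are built from affine coupling layers, which are invertible by construction. The sketch below applies one such layer to a single 3D point and then undoes it. It is only an illustration of the coupling mechanism, not the paper's model: the fixed `scale_and_shift` function is a hypothetical stand-in for the learned scale and translation networks, and the choice to keep the first coordinate unchanged is an assumption.

```python
import math

def scale_and_shift(x):
    """Hypothetical stand-in for the learned networks s(.) and t(.);
    in a trained model these would be neural networks."""
    s = math.tanh(0.5 * x)  # log-scale
    t = 0.1 * x             # translation
    return s, t

def coupling_forward(point):
    """Affine coupling: the first coordinate passes through unchanged and
    conditions an affine transform of the remaining two coordinates."""
    x, y, z = point
    s, t = scale_and_shift(x)
    return (x, y * math.exp(s) + t, z * math.exp(s) + t)

def coupling_inverse(point):
    """Exact inverse: since x is unchanged, s and t can be recomputed
    and the affine transform reversed."""
    x, y, z = point
    s, t = scale_and_shift(x)
    return (x, (y - t) * math.exp(-s), (z - t) * math.exp(-s))

template_vertex = (0.3, -1.2, 2.0)
deformed = coupling_forward(template_vertex)
recovered = coupling_inverse(deformed)
```

Because every layer has an exact inverse, stacking several of them (with different coordinates held fixed in each) still yields a one-to-one mapping between template and deformed points.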

Real Robot Challenge 2022: Learning Dexterous Manipulation from Offline Data in the Real World

Sep 04, 2023

Abstract:Experimentation on real robots is demanding in terms of time and costs. For this reason, a large part of the reinforcement learning (RL) community uses simulators to develop and benchmark algorithms. However, insights gained in simulation do not necessarily translate to real robots, in particular for tasks involving complex interactions with the environment. The Real Robot Challenge 2022 therefore served as a bridge between the RL and robotics communities by allowing participants to experiment remotely with a real robot - as easily as in simulation. In the last years, offline reinforcement learning has matured into a promising paradigm for learning from pre-collected datasets, alleviating the reliance on expensive online interactions. We therefore asked the participants to learn two dexterous manipulation tasks involving pushing, grasping, and in-hand orientation from provided real-robot datasets. An extensive software documentation and an initial stage based on a simulation of the real set-up made the competition particularly accessible. By giving each team plenty of access budget to evaluate their offline-learned policies on a cluster of seven identical real TriFinger platforms, we organized an exciting competition for machine learners and roboticists alike. In this work we state the rules of the competition, present the methods used by the winning teams and compare their results with a benchmark of state-of-the-art offline RL algorithms on the challenge datasets.

Embracing Safe Contacts with Contact-aware Planning and Control

Aug 08, 2023

Abstract:Unlike human beings that can employ the entire surface of their limbs as a means to establish contact with their environment, robots are typically programmed to interact with their environments via their end-effectors, in a collision-free fashion, to avoid damaging their environment. In a departure from such a traditional approach, this work presents a contact-aware controller for reference tracking that maintains interaction forces on the surface of the robot below a safety threshold in the presence of both rigid and soft contacts. Furthermore, we leveraged the proposed controller to extend the BiTRRT sample-based planning method to be contact-aware, using a simplified contact model. The effectiveness of our framework is demonstrated in hardware experiments using a Franka robot in a setup inspired by the Amazon stowing task. A demo video of our results can be seen here: https://youtu.be/2WeYytauhNg

ViSE: Vision-Based 3D Real-Time Shape Estimation of Continuously Deformable Robots

Nov 09, 2022

Abstract:The precise control of soft and continuum robots requires knowledge of their shape. The shape of these robots has, in contrast to classical rigid robots, infinite degrees of freedom. To partially reconstruct the shape, proprioceptive techniques use built-in sensors resulting in inaccurate results and increased fabrication complexity. Exteroceptive methods so far rely on placing reflective markers on all tracked components and triangulating their position using multiple motion-tracking cameras. Tracking systems are expensive and infeasible for deformable robots interacting with the environment due to marker occlusion and damage. Here, we present a regression approach for 3D shape estimation using a convolutional neural network. The proposed approach takes advantage of data-driven supervised learning and is capable of real-time marker-less shape estimation during inference. Two images of a robotic system are taken simultaneously at 25 Hz from two different perspectives, and are fed to the network, which returns for each pair the parameterized shape. The proposed approach outperforms marker-less state-of-the-art methods by a maximum of 4.4% in estimation accuracy while at the same time being more robust and requiring no prior knowledge of the shape. The approach can be easily implemented due to only requiring two color cameras without depth and not needing an explicit calibration of the extrinsic parameters. Evaluations on two types of soft robotic arms and a soft robotic fish demonstrate our method's accuracy and versatility on highly deformable systems in real-time. The robust performance of the approach against different scene modifications (camera alignment and brightness) suggests its generalizability to a wider range of experimental setups, which will benefit downstream tasks such as robotic grasping and manipulation.

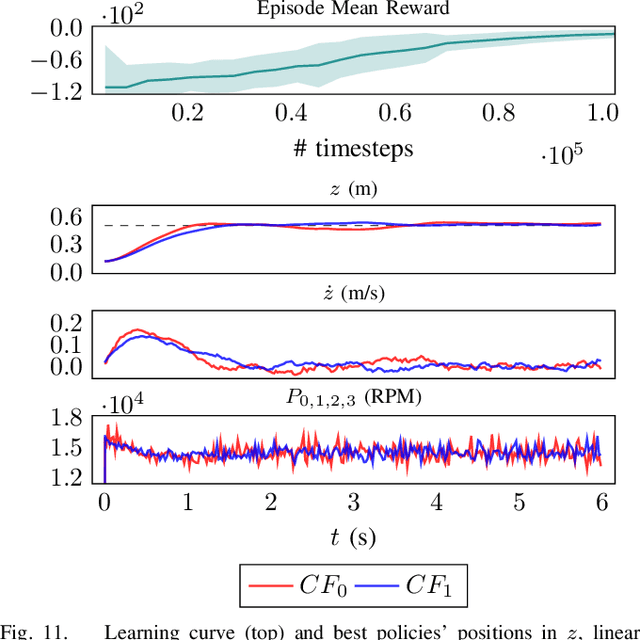

Learning to Fly -- a Gym Environment with PyBullet Physics for Reinforcement Learning of Multi-agent Quadcopter Control

Mar 04, 2021

Abstract:Robotic simulators are crucial for academic research and education as well as the development of safety-critical applications. Reinforcement learning environments -- simple simulations coupled with a problem specification in the form of a reward function -- are also important to standardize the development (and benchmarking) of learning algorithms. Yet, full-scale simulators typically lack portability and parallelizability. Vice versa, many reinforcement learning environments trade-off realism for high sample throughputs in toy-like problems. While public data sets have greatly benefited deep learning and computer vision, we still lack the software tools to simultaneously develop -- and fairly compare -- control theory and reinforcement learning approaches. In this paper, we propose an open-source OpenAI Gym-like environment for multiple quadcopters based on the Bullet physics engine. Its multi-agent and vision based reinforcement learning interfaces, as well as the support of realistic collisions and aerodynamic effects, make it, to the best of our knowledge, a first of its kind. We demonstrate its use through several examples, either for control (trajectory tracking with PID control, multi-robot flight with downwash, etc.) or reinforcement learning (single and multi-agent stabilization tasks), hoping to inspire future research that combines control theory and machine learning.

DSNAS: Direct Neural Architecture Search without Parameter Retraining

Feb 21, 2020

Abstract:If NAS methods are solutions, what is the problem? Most existing NAS methods require two-stage parameter optimization. However, performance of the same architecture in the two stages correlates poorly. In this work, we propose a new problem definition for NAS, task-specific end-to-end, based on this observation. We argue that given a computer vision task for which a NAS method is expected, this definition can reduce the vaguely-defined NAS evaluation to i) accuracy of this task and ii) the total computation consumed to finally obtain a model with satisfying accuracy. Seeing that most existing methods do not solve this problem directly, we propose DSNAS, an efficient differentiable NAS framework that simultaneously optimizes architecture and parameters with a low-biased Monte Carlo estimate. Child networks derived from DSNAS can be deployed directly without parameter retraining. Compared with two-stage methods, DSNAS successfully discovers networks with comparable accuracy (74.4%) on ImageNet in 420 GPU hours, reducing the total time by more than 34%.

An Adversarial Approach to Private Flocking in Mobile Robot Teams

Sep 23, 2019

Abstract:Privacy is an important facet of defence against adversaries. In this letter, we introduce the problem of private flocking. We consider a team of mobile robots flocking in the presence of an adversary, who is able to observe all robots' trajectories, and who is interested in identifying the leader. We present a method that generates private flocking controllers that hide the identity of the leader robot. Our approach towards privacy leverages a data-driven adversarial co-optimization scheme. We design a mechanism that optimizes flocking control parameters, such that leader inference is hindered. As the flocking performance improves, we successively train an adversarial discriminator that tries to infer the identity of the leader robot. To evaluate the performance of our co-optimization scheme, we investigate different classes of reference trajectories. Although it is reasonable to assume that there is an inherent trade-off between flocking performance and privacy, our results demonstrate that we are able to achieve high flocking performance and simultaneously reduce the risk of revealing the leader.
