Barnabas Gavin Cangan

Soft Robotics Lab, IRIS, D-MAVT, ETH Zurich, Switzerland

Beyond Anthropomorphism: Enhancing Grasping and Eliminating a Degree of Freedom by Fusing the Abduction of Digits Four and Five

Sep 16, 2025

Abstract:This paper presents the SABD hand, a 16-degree-of-freedom (DoF) robotic hand that departs from purely anthropomorphic designs to achieve an expanded grasp envelope, enable manipulation poses beyond human capability, and reduce the required number of actuators. This is achieved by combining the adduction/abduction (Add/Abd) joint of digits four and five into a single joint with a large range of motion. The combined joint increases the workspace of the digits by 400% and reduces the required DoFs while retaining dexterity. Experimental results demonstrate that the combined Add/Abd joint enables the hand to grasp objects with a side distance of up to 200 mm. Reinforcement learning-based investigations show that the design enables grasping policies that are effective not only for handling larger objects but also for achieving enhanced grasp stability. In teleoperated trials, the hand successfully performed 86% of attempted grasps on suitable YCB objects, including challenging non-anthropomorphic configurations. These findings validate the design's ability to enhance grasp stability, flexibility, and dexterous manipulation without added complexity, making it well-suited for a wide range of applications.

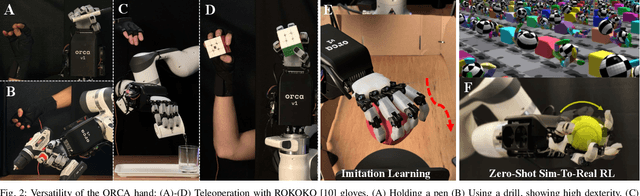

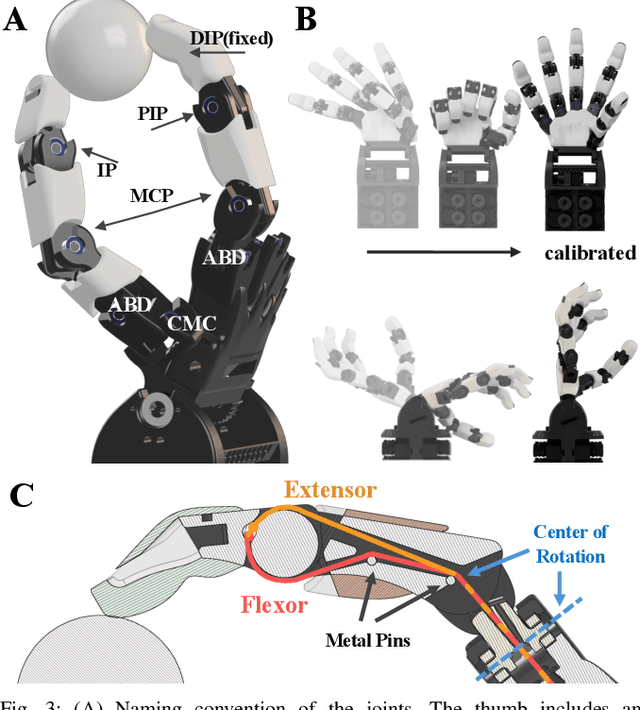

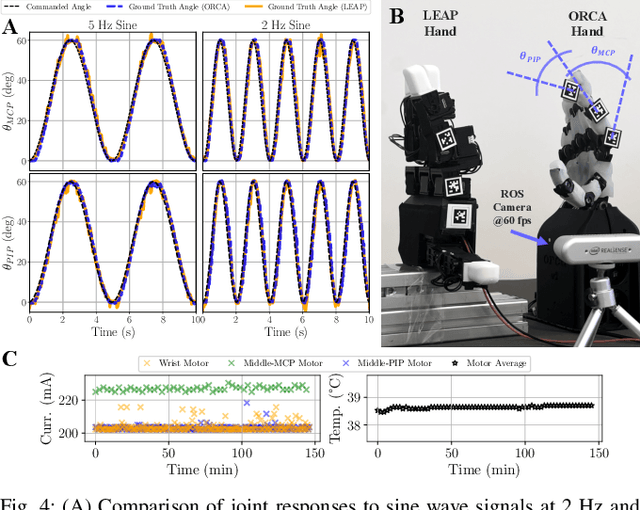

ORCA: An Open-Source, Reliable, Cost-Effective, Anthropomorphic Robotic Hand for Uninterrupted Dexterous Task Learning

Apr 05, 2025

Abstract:General-purpose robots should possess humanlike dexterity and agility to perform tasks with the same versatility as us. A human-like form factor further enables the use of vast datasets of human-hand interactions. However, the primary bottleneck in dexterous manipulation lies not only in software but arguably even more in hardware. Robotic hands that approach human capabilities are often prohibitively expensive, bulky, or require enterprise-level maintenance, limiting their accessibility for broader research and practical applications. What if the research community could get started with reliable dexterous hands within a day? We present the open-source ORCA hand, a reliable and anthropomorphic 17-DoF tendon-driven robotic hand with integrated tactile sensors, fully assembled in less than eight hours and built for a material cost below 2,000 CHF. We showcase ORCA's key design features such as popping joints, auto-calibration, and tensioning systems that significantly reduce complexity while increasing reliability, accuracy, and robustness. We benchmark the ORCA hand across a variety of tasks, ranging from teleoperation and imitation learning to zero-shot sim-to-real reinforcement learning. Furthermore, we demonstrate its durability, withstanding more than 10,000 continuous operation cycles - equivalent to approximately 20 hours - without hardware failure, the only constraint being the duration of the experiment itself. All design files, source code, and documentation will be available at https://www.orcahand.com/.

Model-Based Capacitive Touch Sensing in Soft Robotics: Achieving Robust Tactile Interactions for Artistic Applications

Mar 04, 2025

Abstract:In this paper, we present a touch technology to achieve tactile interactivity for human-robot interaction (HRI) in soft robotics. By combining a capacitive touch sensor with an online solid mechanics simulation provided by the SOFA framework, contact detection is achieved for arbitrary shapes. Furthermore, the implementation of the capacitive touch technology presented here is selectively sensitive to human touch (conductive objects), while it is largely unaffected by the deformations created by the pneumatic actuation of our soft robot. Multi-touch interactions are also possible. We evaluated our approach with an organic soft robotics sculpture that was created by a visual artist. In particular, we show that the touch localization capabilities are robust under the deformation of the device. We discuss the potential this approach has for the arts and entertainment as well as other domains.

* 8 pages, 17 figures. Accepted at IEEE Robotics and Automation Letters (RA-L) 2025

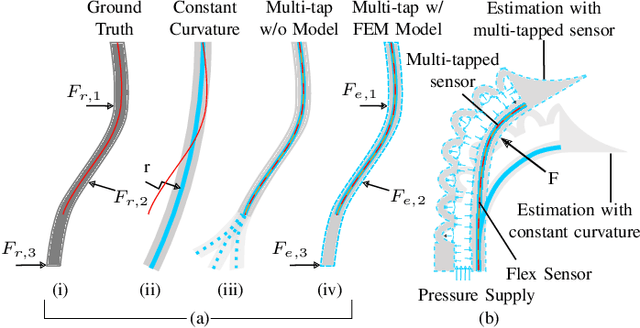

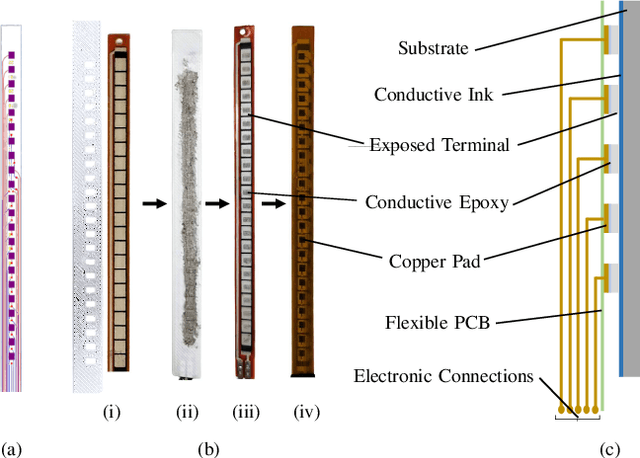

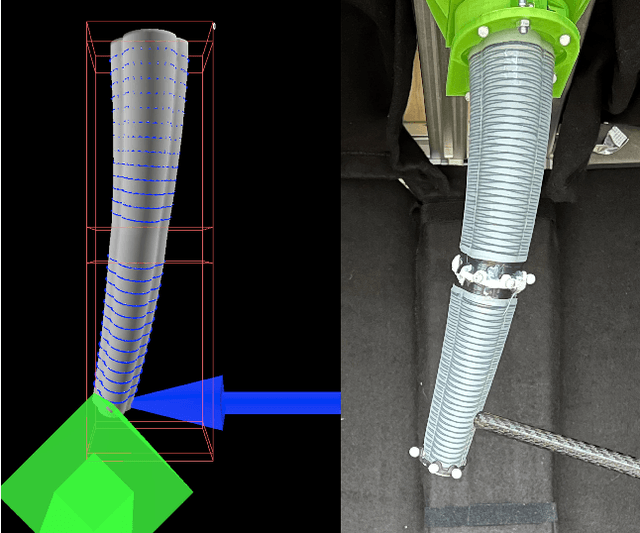

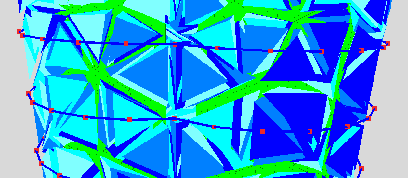

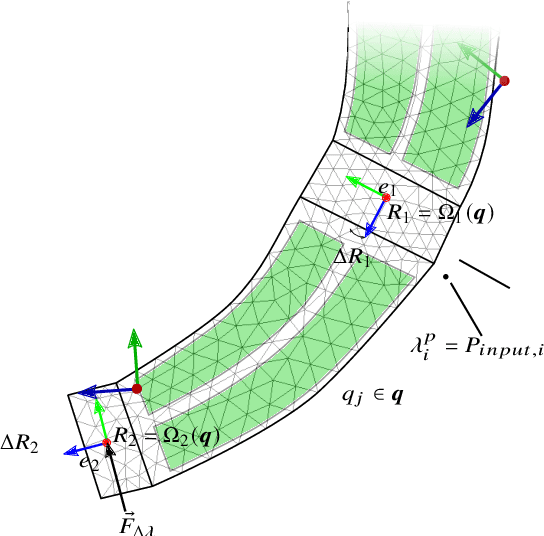

Multi-tap Resistive Sensing and FEM Modeling enables Shape and Force Estimation in Soft Robots

Nov 24, 2023

Abstract:We address the challenge of reliable and accurate proprioception in soft robots, specifically those with tight packaging constraints and relying only on internally embedded sensors. While various sensing approaches with single sensors have been tried, often with a constant curvature assumption, we look into sensing local deformations at multiple locations of the sensor. In our approach, we multi-tap an off-the-shelf resistive sensor by creating multiple electrical connections onto the resistive layer of the sensor and we insert the sensor into a soft body. This modification allows us to measure changes in resistance at multiple segments throughout the length of the sensor, providing improved resolution of local deformations in the soft body. These measurements inform a model based on a finite element method (FEM) that estimates the shape of the soft body and the magnitude of an external force acting at a known arbitrary location. Our model-based approach estimates soft body deformation with approximately 3% average relative error while taking into account internal fluidic actuation. Our estimate of external force disturbance has an 11% relative error within a range of 0 to 5 N. The combined sensing and modeling approach can be integrated, for instance, into soft manipulation platforms to enable features such as identifying the shape and material properties of an object being grasped. Such manipulators can benefit from the inherent softness and compliance while being fully proprioceptive, relying only on embedded sensing and not on external systems such as motion capture. Such proprioception is essential for the deployment of soft robots in real-world scenarios.

Real Robot Challenge 2022: Learning Dexterous Manipulation from Offline Data in the Real World

Sep 04, 2023

Abstract:Experimentation on real robots is demanding in terms of time and costs. For this reason, a large part of the reinforcement learning (RL) community uses simulators to develop and benchmark algorithms. However, insights gained in simulation do not necessarily translate to real robots, in particular for tasks involving complex interactions with the environment. The Real Robot Challenge 2022 therefore served as a bridge between the RL and robotics communities by allowing participants to experiment remotely with a real robot - as easily as in simulation. In recent years, offline reinforcement learning has matured into a promising paradigm for learning from pre-collected datasets, alleviating the reliance on expensive online interactions. We therefore asked the participants to learn two dexterous manipulation tasks involving pushing, grasping, and in-hand orientation from provided real-robot datasets. Extensive software documentation and an initial stage based on a simulation of the real set-up made the competition particularly accessible. By giving each team plenty of access budget to evaluate their offline-learned policies on a cluster of seven identical real TriFinger platforms, we organized an exciting competition for machine learners and roboticists alike. In this work we state the rules of the competition, present the methods used by the winning teams and compare their results with a benchmark of state-of-the-art offline RL algorithms on the challenge datasets.

Getting the Ball Rolling: Learning a Dexterous Policy for a Biomimetic Tendon-Driven Hand with Rolling Contact Joints

Aug 04, 2023

Abstract:Biomimetic, dexterous robotic hands have the potential to replicate many of the tasks that a human can do, and to achieve status as a general manipulation platform. Recent advances in reinforcement learning (RL) frameworks have achieved remarkable performance in quadrupedal locomotion and dexterous manipulation tasks. Combined with GPU-based highly parallelized simulations capable of simulating thousands of robots in parallel, RL-based controllers have become more scalable and approachable. However, in order to bring RL-trained policies to the real world, we require training frameworks that output policies that can work with physical actuators and sensors as well as a hardware platform that can be manufactured with accessible materials yet is robust enough to run interactive policies. This work introduces the biomimetic tendon-driven Faive Hand and its system architecture, which uses tendon-driven rolling contact joints to achieve a 3D printable, robust high-DoF hand design. We model each element of the hand and integrate it into a GPU simulation environment to train a policy with RL, and achieve zero-shot transfer of a dexterous in-hand sphere rotation skill to the physical robot hand.

Autonomous Vision-based Rapid Aerial Grasping

Nov 23, 2022

Abstract:In a future with autonomous robots, visual and spatial perception is of utmost importance for robotic systems. Particularly for aerial robotics, there are many applications where utilizing visual perception is necessary for any real-world scenarios. Robotic aerial grasping using drones promises fast pick-and-place solutions with a large increase in mobility over other robotic solutions. Utilizing Mask R-CNN scene segmentation (detectron2), we propose a vision-based system for autonomous rapid aerial grasping which does not rely on markers for object localization and does not require the size of the object to be previously known. With spatial information from a depth camera, we generate a point cloud of the detected objects and perform geometry-based grasp planning to determine grasping points on the objects. In real-world experiments, we show that our system can localize objects with a mean error of 3 cm compared to a motion capture ground truth for distances from the object ranging from 0.5 m to 2.5 m. Similar grasping efficacy is maintained compared to a system using motion capture for object localization in experiments. With our results, we show the first use of geometry-based grasping techniques with a flying platform and aim to increase the autonomy of existing aerial manipulation platforms, bringing them further towards real-world applications in warehouses and similar environments.
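The point-cloud step mentioned in this abstract can be illustrated with a standard pinhole back-projection of the depth pixels that fall inside a segmentation mask. This is a minimal sketch and not the authors' implementation: the function name `mask_to_point_cloud`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the toy depth image are all assumptions made for illustration.

```python
import numpy as np

def mask_to_point_cloud(depth_m, mask, fx, fy, cx, cy):
    """Back-project masked depth pixels to 3D camera-frame points (pinhole model).

    depth_m: (H, W) depth image in metres; mask: (H, W) boolean object mask.
    fx, fy, cx, cy: assumed camera intrinsics in pixels.
    """
    # Keep only pixels inside the mask that have a valid (positive) depth.
    v, u = np.nonzero(mask & (depth_m > 0))  # v = row index, u = column index
    z = depth_m[v, u]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=1)

# Toy example: a flat surface 2 m away, with a 2x2 object mask in the centre.
depth = np.full((4, 4), 2.0)
mask = np.zeros((4, 4), dtype=bool)
mask[1:3, 1:3] = True
points = mask_to_point_cloud(depth, mask, fx=100.0, fy=100.0, cx=1.5, cy=1.5)
centroid = points.mean(axis=0)  # a simple stand-in for an object position estimate
```

In a real pipeline the mask would come from the segmentation network and the intrinsics from the camera calibration; the centroid here is only a placeholder for the geometry-based grasp planning the abstract describes.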

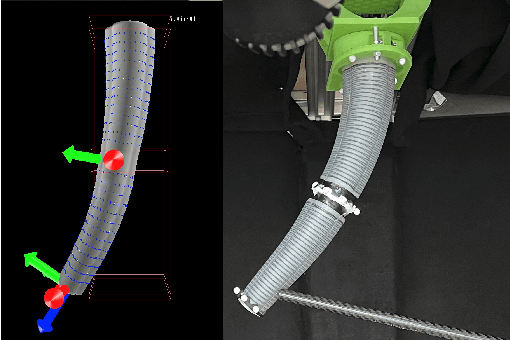

ViSE: Vision-Based 3D Real-Time Shape Estimation of Continuously Deformable Robots

Nov 09, 2022

Abstract:The precise control of soft and continuum robots requires knowledge of their shape. The shape of these robots has, in contrast to classical rigid robots, infinite degrees of freedom. To partially reconstruct the shape, proprioceptive techniques use built-in sensors resulting in inaccurate results and increased fabrication complexity. Exteroceptive methods so far rely on placing reflective markers on all tracked components and triangulating their position using multiple motion-tracking cameras. Tracking systems are expensive and infeasible for deformable robots interacting with the environment due to marker occlusion and damage. Here, we present a regression approach for 3D shape estimation using a convolutional neural network. The proposed approach takes advantage of data-driven supervised learning and is capable of real-time marker-less shape estimation during inference. Two images of a robotic system are taken simultaneously at 25 Hz from two different perspectives, and are fed to the network, which returns for each pair the parameterized shape. The proposed approach outperforms marker-less state-of-the-art methods by a maximum of 4.4% in estimation accuracy while at the same time being more robust and requiring no prior knowledge of the shape. The approach can be easily implemented due to only requiring two color cameras without depth and not needing an explicit calibration of the extrinsic parameters. Evaluations on two types of soft robotic arms and a soft robotic fish demonstrate our method's accuracy and versatility on highly deformable systems in real-time. The robust performance of the approach against different scene modifications (camera alignment and brightness) suggests its generalizability to a wider range of experimental setups, which will benefit downstream tasks such as robotic grasping and manipulation.

Model-Based Disturbance Estimation for a Fiber-Reinforced Soft Manipulator using Orientation Sensing

Jun 23, 2022

Abstract:For soft robots to work effectively in human-centered environments, they need to be able to estimate their state and external interactions based on (proprioceptive) sensors. Estimating disturbances allows a soft robot to perform desirable force control. Even in the case of rigid manipulators, force estimation at the end-effector is seen as a non-trivial problem. And indeed, other current approaches to address this challenge have shortcomings that prevent their general application. They are often based on simplified soft dynamic models, such as the ones relying on a piece-wise constant curvature (PCC) approximation or matched rigid-body models that do not represent enough details of the problem. Thus, the applications needed for complex human-robot interaction cannot be built. Finite element methods (FEM) allow for predictions of soft robot dynamics in a more generic fashion. Here, using the soft robot modeling capabilities of the framework SOFA, we build a detailed FEM model of a multi-segment soft continuum robotic arm composed of compliant deformable materials and fiber-reinforced pressurized actuation chambers with a model for sensors that provide orientation output. This model is used to establish a state observer for the manipulator. Model parameters were calibrated to match imperfections of the manual fabrication process using physical experiments. We then solve a quadratic programming inverse dynamics problem to compute the components of external force that explain the pose error. Our experiments show an average force estimation error of around 1.2%. As the methods proposed are generic, these results are encouraging for the task of building soft robots exhibiting complex, reactive, sensor-based behavior that can be deployed in human-centered environments.
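The idea of finding the external force that explains a pose error can be sketched as a small unconstrained quadratic program. This is an illustrative simplification and not the paper's FEM-based formulation: it assumes a hypothetical linearized compliance matrix `C` that maps an external force to a pose deviation, and the names `estimate_external_force` and `reg` are introduced here for illustration only.

```python
import numpy as np

def estimate_external_force(compliance, pose_error, reg=1e-6):
    """Solve min_f ||C f - e||^2 + reg * ||f||^2 via its normal equations.

    compliance: (m, n) assumed linear map from force (n) to pose deviation (m).
    pose_error: (m,) difference between measured and model-predicted pose.
    reg: small Tikhonov term that keeps the QP Hessian well conditioned.
    """
    C = np.asarray(compliance, dtype=float)
    e = np.asarray(pose_error, dtype=float)
    hessian = C.T @ C + reg * np.eye(C.shape[1])  # QP Hessian (up to a factor of 2)
    gradient = C.T @ e                            # QP linear term (up to sign and factor)
    return np.linalg.solve(hessian, gradient)

# Toy check: synthesize a pose error from a known force and recover the force.
C = np.array([[0.02, 0.0, 0.0],
              [0.0, 0.02, 0.0],
              [0.0, 0.0, 0.01]])
f_true = np.array([1.0, -0.5, 2.0])
e = C @ f_true
f_hat = estimate_external_force(C, e, reg=1e-12)
```

In a model-based setting the compliance map would be obtained from the calibrated simulation at the current state, and bound or equality constraints would require a proper QP solver rather than a direct linear solve.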

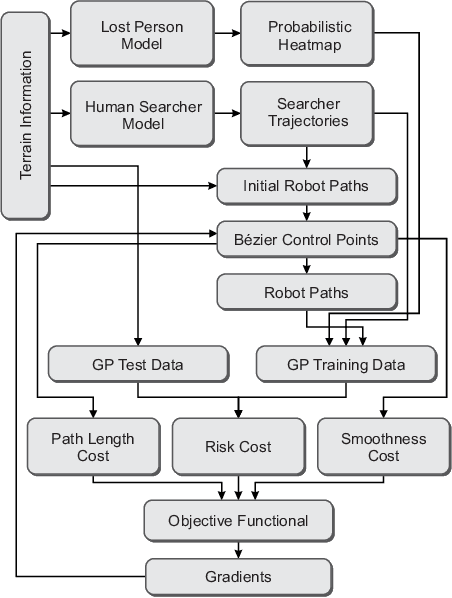

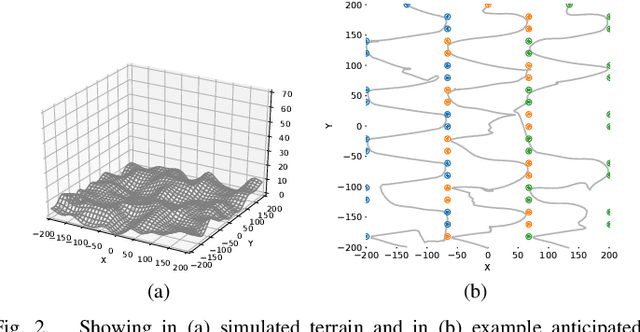

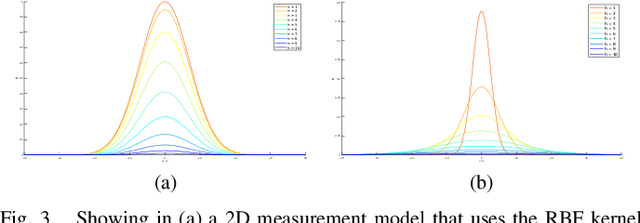

Anticipatory Human-Robot Path Planning for Search and Rescue

Sep 08, 2020

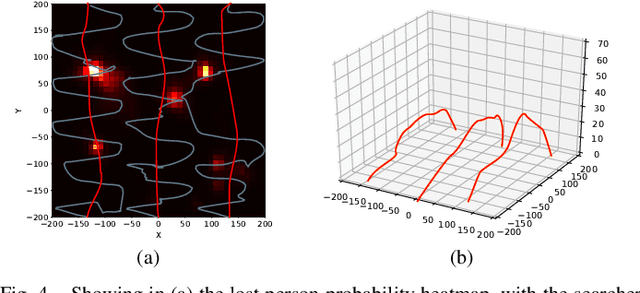

Abstract:In this work, our goal is to extend the existing search and rescue paradigm by allowing teams of autonomous unmanned aerial vehicles (UAVs) to collaborate effectively with human searchers on the ground. We derive a framework that includes a simulated lost person behavior model, as well as a human searcher behavior model that is informed by data collected from past search tasks. These models are used together to create a probabilistic heatmap of the lost person's position and anticipated searcher trajectories. We then use Gaussian processes with a Gibbs' kernel to accurately model a limited field-of-view (FOV) sensor, e.g., thermal cameras, from which we derive a risk metric that drives UAV path optimization. Our framework finally computes a set of search paths for a team of UAVs to autonomously complement human searchers' efforts.
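For readers unfamiliar with the Gibbs kernel mentioned in this abstract, the following minimal sketch evaluates its standard one-dimensional form, in which the length scale varies with the input. It is not the authors' implementation: the function names and the example length-scale function are hypothetical, and how the paper ties the length scale to the sensor's field of view is not stated here.

```python
import numpy as np

def gibbs_kernel(xa, xb, lengthscale_fn):
    """1D Gibbs kernel with an input-dependent length scale l(x).

    k(x, x') = sqrt(2 l(x) l(x') / (l(x)^2 + l(x')^2))
               * exp(-(x - x')^2 / (l(x)^2 + l(x')^2))
    """
    la = lengthscale_fn(xa)[:, None]   # column of l(x)
    lb = lengthscale_fn(xb)[None, :]   # row of l(x')
    sq_sum = la**2 + lb**2
    prefactor = np.sqrt(2.0 * la * lb / sq_sum)
    sq_dist = (xa[:, None] - xb[None, :])**2
    return prefactor * np.exp(-sq_dist / sq_sum)

def example_lengthscale(x):
    # Hypothetical choice: short length scale near x = 0, longer farther away.
    return 0.5 + 0.5 * np.abs(x)

x = np.linspace(-2.0, 2.0, 5)
K = gibbs_kernel(x, x, example_lengthscale)  # covariance matrix over the 5 inputs
```

When the length scale is constant, the prefactor becomes 1 and the expression reduces to the usual squared-exponential kernel, which is why the Gibbs kernel is a natural choice when smoothness should vary across the input space.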
