Erik Bauer

Latent Action Diffusion for Cross-Embodiment Manipulation

Jun 17, 2025

Abstract:End-to-end learning approaches offer great potential for robotic manipulation, but their impact is constrained by data scarcity and heterogeneity across different embodiments. In particular, diverse action spaces across different end-effectors create barriers for cross-embodiment learning and skill transfer. We address this challenge through diffusion policies learned in a latent action space that unifies diverse end-effector actions. We first show that we can learn a semantically aligned latent action space for anthropomorphic robotic hands, a human hand, and a parallel jaw gripper using encoders trained with a contrastive loss. Second, we show that by using our proposed latent action space for co-training on manipulation data from different end-effectors, we can utilize a single policy for multi-robot control and obtain up to 13% improved manipulation success rates, indicating successful skill transfer despite a significant embodiment gap. Our approach using latent cross-embodiment policies presents a new method to unify different action spaces across embodiments, enabling efficient multi-robot control and data sharing across robot setups. This unified representation significantly reduces the need for extensive data collection for each new robot morphology, accelerates generalization across embodiments, and ultimately facilitates more scalable and efficient robotic learning.
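As a rough illustration of how a contrastive loss can align latents from different end-effectors, here is a minimal sketch. The abstract does not say which contrastive objective is used; an InfoNCE-style loss with cosine similarity is assumed here, and the names `info_nce`, `z_a`, `z_b`, and `temperature` are hypothetical. Row `i` of each list is taken to be the latent of the same pose as encoded for two different embodiments.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(z_a, z_b, temperature=0.1):
    """Mean InfoNCE loss over paired latents (assumed objective, not the paper's).

    z_a[i] and z_b[i] form a positive pair; every z_b[j] with j != i
    acts as a negative for z_a[i].
    """
    n = len(z_a)
    total = 0.0
    for i in range(n):
        logits = [cosine(z_a[i], z_b[j]) / temperature for j in range(n)]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
        # Cross-entropy with the matching index as the target.
        total += log_sum - logits[i]
    return total / n


# Toy latents: two poses encoded for two hypothetical end-effectors.
hand = [[1.0, 0.0], [0.0, 1.0]]
gripper_aligned = [[1.0, 0.0], [0.0, 1.0]]
gripper_swapped = [[0.0, 1.0], [1.0, 0.0]]

loss_aligned = info_nce(hand, gripper_aligned)
loss_swapped = info_nce(hand, gripper_swapped)
```

In this toy case the loss is close to zero when matching poses share a latent direction and large when they are swapped, which is the pressure that pulls corresponding actions from different end-effectors together.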

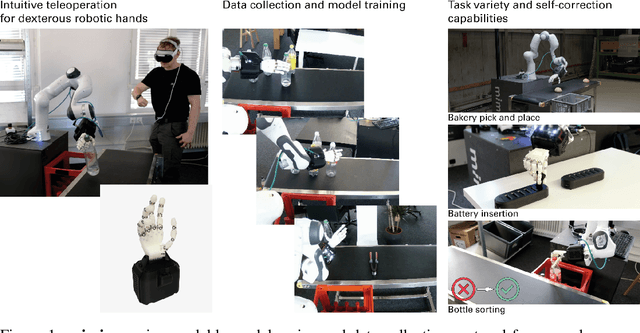

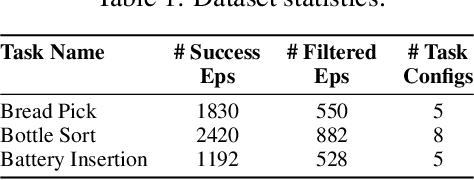

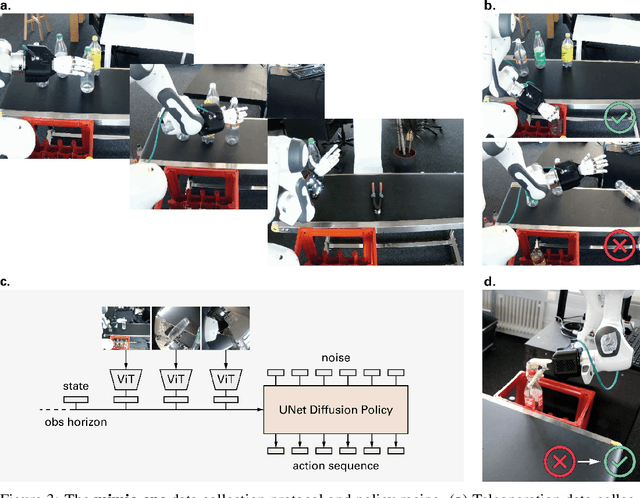

mimic-one: a Scalable Model Recipe for General Purpose Robot Dexterity

Jun 13, 2025

Abstract:We present a diffusion-based model recipe for real-world control of a highly dexterous humanoid robotic hand, designed for sample-efficient learning and smooth fine-motor action inference. Our system features a newly designed 16-DoF tendon-driven hand, equipped with wide angle wrist cameras and mounted on a Franka Emika Panda arm. We develop a versatile teleoperation pipeline and data collection protocol using both glove-based and VR interfaces, enabling high-quality data collection across diverse tasks such as pick and place, item sorting and assembly insertion. Leveraging high-frequency generative control, we train end-to-end policies from raw sensory inputs, enabling smooth, self-correcting motions in complex manipulation scenarios. Real-world evaluations demonstrate up to 93.3% out of distribution success rates, with up to a +33.3% performance boost due to emergent self-correcting behaviors, while also revealing scaling trends in policy performance. Our results advance the state-of-the-art in dexterous robotic manipulation through a fully integrated, practical approach to hardware, learning, and real-world deployment.

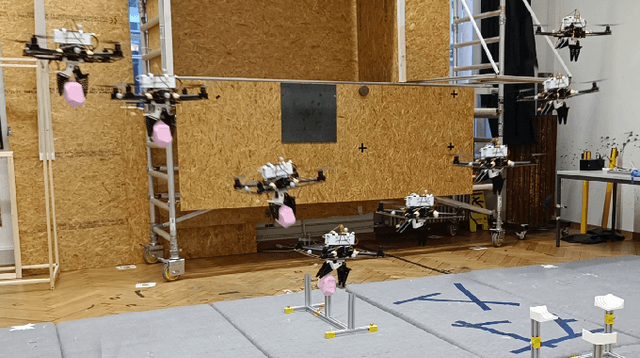

An Open-Source Soft Robotic Platform for Autonomous Aerial Manipulation in the Wild

Sep 11, 2024

Abstract:Aerial manipulation combines the versatility and speed of flying platforms with the functional capabilities of mobile manipulation, which presents significant challenges due to the need for precise localization and control. Traditionally, researchers have relied on offboard perception systems, which are limited to expensive and impractical specially equipped indoor environments. In this work, we introduce a novel platform for autonomous aerial manipulation that exclusively utilizes onboard perception systems. Our platform can perform aerial manipulation in various indoor and outdoor environments without depending on external perception systems. Our experimental results demonstrate the platform's ability to autonomously grasp various objects in diverse settings. This advancement significantly improves the scalability and practicality of aerial manipulation applications by eliminating the need for costly tracking solutions. To accelerate future research, we open source our ROS 2 software stack and custom hardware design, making our contributions accessible to the broader research community.

Autonomous Vision-based Rapid Aerial Grasping

Nov 23, 2022

Abstract:In a future with autonomous robots, visual and spatial perception is of utmost importance for robotic systems. Particularly for aerial robotics, there are many applications where utilizing visual perception is necessary for any real-world scenarios. Robotic aerial grasping using drones promises fast pick-and-place solutions with a large increase in mobility over other robotic solutions. Utilizing Mask R-CNN scene segmentation (detectron2), we propose a vision-based system for autonomous rapid aerial grasping which does not rely on markers for object localization and does not require the size of the object to be previously known. With spatial information from a depth camera, we generate a point cloud of the detected objects and perform geometry-based grasp planning to determine grasping points on the objects. In real-world experiments, we show that our system can localize objects with a mean error of 3 cm compared to a motion capture ground truth for distances from the object ranging from 0.5 m to 2.5 m. Similar grasping efficacy is maintained compared to a system using motion capture for object localization in experiments. With our results, we show the first use of geometry-based grasping techniques with a flying platform and aim to increase the autonomy of existing aerial manipulation platforms, bringing them further towards real-world applications in warehouses and similar environments.
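The step from segmentation mask plus depth image to an object point cloud can be sketched as follows. This is only an illustration under a standard pinhole camera model; the intrinsics `fx`, `fy`, `cx`, `cy`, the function names, and the use of a centroid as a crude object position are assumptions, not details taken from the paper.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Map pixel (u, v) with metric depth to a 3D point in the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def masked_point_cloud(depth, mask, fx, fy, cx, cy):
    """Back-project every masked pixel that has a valid (positive) depth."""
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if mask[v][u] and d > 0:
                points.append(backproject(u, v, d, fx, fy, cx, cy))
    return points


def centroid(points):
    """Mean of the points, used here as a simple object position estimate."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


# Toy 2x2 depth image (metres); a zero marks a missing depth reading.
depth = [[1.0, 1.0], [1.0, 0.0]]
mask = [[True, True], [True, True]]
cloud = masked_point_cloud(depth, mask, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
center = centroid(cloud)
```

A geometry-based grasp planner would then operate on `cloud`; the centroid is only the simplest quantity one could derive from it.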

RAPTOR: Rapid Aerial Pickup and Transport of Objects by Robots

Mar 06, 2022

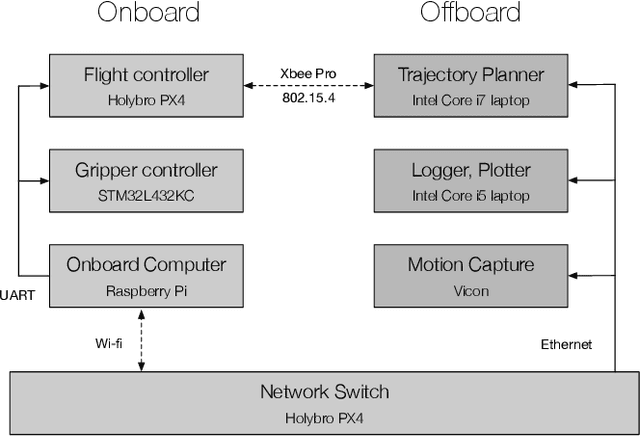

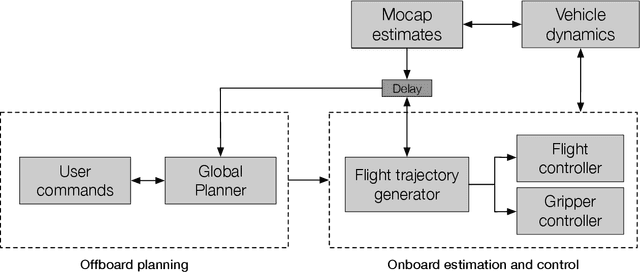

Abstract:Rapid aerial grasping promises vast applications that utilize the dynamic picking up and placing of objects by robots. Rigid grippers traditionally used in aerial manipulators require very high precision and specific object geometries for successful grasping. We propose RAPTOR, a quadcopter platform combined with a custom Fin Ray gripper to enable a more flexible grasping of objects with different geometries, leveraging the properties of soft materials to increase the contact surface between the gripper and the objects. To reduce the communication latency, we present a novel FastDDS-based middleware solution as an alternative to ROS (Robot Operating System). We show that RAPTOR achieves an average of 83% grasping efficacy in a real-world setting for four different object geometries while moving at an average velocity of 1 m/s during grasping, which is approximately five times faster than the state-of-the-art while supporting up to four times the payload. Our results further solidify the potential of quadcopters in warehouses and other automated pick-and-place applications over longer distances where speed and robustness become essential.
