Björn Hein

Model-Based Capacitive Touch Sensing in Soft Robotics: Achieving Robust Tactile Interactions for Artistic Applications

Mar 04, 2025

Abstract:In this paper, we present a touch technology that enables tactile interactivity for human-robot interaction (HRI) in soft robotics. Combining a capacitive touch sensor with an online solid mechanics simulation provided by the SOFA framework enables contact detection for arbitrary shapes. Furthermore, the capacitive touch technology presented here is selectively sensitive to human touch (conductive objects), while remaining largely unaffected by the deformations created by the pneumatic actuation of our soft robot. Multi-touch interactions are also possible. We evaluated our approach with an organic soft robotics sculpture created by a visual artist. In particular, we show that the touch localization capabilities are robust under deformation of the device. We discuss the potential of this approach for the arts and entertainment as well as other domains.

* 8 pages, 17 figures. Accepted at IEEE Robotics and Automation Letters (RA-L) 2025

ETA-IK: Execution-Time-Aware Inverse Kinematics for Dual-Arm Systems

Nov 21, 2024

Abstract:This paper presents ETA-IK, a novel Execution-Time-Aware Inverse Kinematics method tailored for dual-arm robotic systems. The primary goal is to optimize motion execution time by leveraging the redundancy of both arms, specifically in tasks where only the relative pose of the robots is constrained, such as dual-arm scanning of unknown objects. Unlike traditional inverse kinematics methods that use surrogate metrics such as joint configuration distance, our method incorporates direct motion execution time and implicit collisions into the optimization process, thereby finding target joint configurations that allow subsequent trajectory generation to produce more efficient, collision-free motion. A neural-network-based execution time approximator is employed to predict time-efficient joint configurations while accounting for potential collisions. Through experimental evaluation on a system composed of a UR5 and a KUKA iiwa robot, we demonstrate significant reductions in execution time. The proposed method outperforms conventional approaches, showing improved motion efficiency without sacrificing positioning accuracy. These results highlight the potential of ETA-IK to improve the performance of dual-arm systems in applications where efficiency and safety are paramount.

Planning with Learned Subgoals Selected by Temporal Information

Oct 26, 2024

Abstract:Path planning in a changing environment is a challenging task in robotics, as moving objects impose time-dependent constraints. Recent planning methods primarily focus on the spatial aspects and lack the capability to directly incorporate time constraints. In this paper, we propose a method that leverages a generative model to decompose a complex planning problem into smaller, manageable ones by incrementally generating subgoals given the current planning context. We then take the temporal information into account and use learned time estimators based on different statistical distributions to examine and select the generated subgoal candidates. Experiments show that planning from the current robot state to the selected subgoal can satisfy the given time-dependent constraints while remaining goal-oriented.

HIRO: Heuristics Informed Robot Online Path Planning Using Pre-computed Deterministic Roadmaps

Oct 26, 2024

Abstract:With the goal of efficiently computing collision-free robot motion trajectories in dynamically changing environments, we present results of a novel method for Heuristics Informed Robot Online Path Planning (HIRO). Dividing robot environments into static and dynamic elements, we use the static part for initializing a deterministic roadmap, which provides a lower bound of the final path cost as informed heuristics for fast path-finding. These heuristics guide a search tree to explore the roadmap during runtime. The search tree examines the edges using fuzzy collision checking against the dynamic environment. Finally, the heuristics tree exploits knowledge fed back from the fuzzy collision checking module and updates the lower bound for the path cost. As we demonstrate in real-world experiments, the closed loop formed by these three components significantly accelerates the planning procedure. An additional backtracking step ensures the feasibility of the resulting paths. Experiments in simulation and the real world show that HIRO can find collision-free paths considerably faster than baseline methods with and without prior knowledge of the environment.
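The role of the static roadmap as a lower bound can be illustrated with a small sketch. It is not the HIRO implementation: the graph, the function names `cost_to_go` and `plan`, and the binary `blocked` edge set (a stand-in for the fuzzy collision checking described above) are all assumptions made for illustration, and the feedback step that updates the lower bound is omitted.

```python
import heapq

INF = float("inf")

def cost_to_go(graph, goal):
    """Dijkstra from the goal on the static roadmap.

    The result is a lower bound on the true path cost, because dynamic
    obstacles can only remove edges, never shorten paths.
    """
    dist = {goal: 0.0}
    queue = [(0.0, goal)]
    while queue:
        d, node = heapq.heappop(queue)
        if d > dist.get(node, INF):
            continue  # stale queue entry
        for nxt, w in graph[node].items():
            nd = d + w
            if nd < dist.get(nxt, INF):
                dist[nxt] = nd
                heapq.heappush(queue, (nd, nxt))
    return dist

def plan(graph, start, goal, blocked):
    """A*-style search guided by the static lower bound.

    `blocked` is a set of frozenset({u, v}) edges that currently collide
    with the dynamic environment (hypothetical, binary simplification).
    """
    h = cost_to_go(graph, goal)
    queue = [(h.get(start, INF), 0.0, start, [start])]
    closed = set()
    while queue:
        _, g, node, path = heapq.heappop(queue)
        if node == goal:
            return path, g
        if node in closed:
            continue
        closed.add(node)
        for nxt, w in graph[node].items():
            if nxt in closed or nxt not in h:
                continue
            if frozenset((node, nxt)) in blocked:
                continue  # edge rejected by the collision check
            heapq.heappush(queue, (g + w + h[nxt], g + w, nxt, path + [nxt]))
    return None, INF

# Undirected toy roadmap: A-B-D is short, A-C-D is the detour.
roadmap = {
    "A": {"B": 1, "C": 2},
    "B": {"A": 1, "D": 1},
    "C": {"A": 2, "D": 2},
    "D": {"B": 1, "C": 2},
}
free_path, free_cost = plan(roadmap, "A", "D", set())
detour_path, detour_cost = plan(roadmap, "A", "D", {frozenset(("B", "D"))})
```

With no blocked edges the search follows the static optimum; once the edge between B and D is blocked, the same heuristic still guides the search toward the cheapest remaining route.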

6-DoF Grasp Pose Evaluation and Optimization via Transfer Learning from NeRFs

Jan 15, 2024

Abstract:We address the problem of robotic grasping of known and unknown objects using implicit behavior cloning. We train a grasp evaluation model from a small number of demonstrations that outputs higher values for grasp candidates that are more likely to succeed in grasping. This evaluation model serves as an objective function that we maximize to identify successful grasps. Key to our approach is the utilization of learned implicit representations of visual and geometric features derived from a pre-trained NeRF. Though trained exclusively in a simulated environment with simplified objects and 4-DoF top-down grasps, our evaluation model and optimization procedure generalize to 6-DoF grasps and novel objects, both in simulation and in real-world settings, without the need for additional data. Supplementary material is available at: https://gergely-soti.github.io/grasp

Gradient based Grasp Pose Optimization on a NeRF that Approximates Grasp Success

Sep 14, 2023

Abstract:Current robotic grasping methods often rely on estimating the pose of the target object, explicitly predicting grasp poses, or implicitly estimating grasp success probabilities. In this work, we propose a novel approach that directly maps gripper poses to their corresponding grasp success values, without considering objectness. Specifically, we leverage a Neural Radiance Field (NeRF) architecture to learn a scene representation and use it to train a grasp success estimator that maps each pose in the robot's task space to a grasp success value. We employ this learned estimator to tune its inputs, i.e., grasp poses, by gradient-based optimization to obtain successful grasp poses. Unlike other NeRF-based methods, which either enhance existing grasp pose estimation approaches by relying on NeRF's rendering capabilities or directly estimate grasp poses in a discretized space using NeRF's scene representation capabilities, our approach sidesteps both the need for rendering and the limitation of discretization. We demonstrate the effectiveness of our approach on four simulated 3-DoF (degrees of freedom) robotic grasping tasks and show that it can generalize to novel objects. Our best model achieves an average translation error of 3 mm from valid grasp poses. This work opens the door for future research to apply our approach to higher-DoF grasps and real-world scenarios.
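The idea of tuning the estimator's inputs by gradient-based optimization can be sketched on a toy problem. The sketch below is an assumption-laden illustration, not the paper's model: a hand-written Gaussian bump `success` stands in for the learned NeRF-based estimator, and `TARGET`, `SIGMA`, the learning rate, and the step count are hypothetical values chosen only so that the example runs.

```python
import math

# Hypothetical stand-in for the learned estimator: a smooth bump that
# peaks at TARGET. In the paper this role is played by a trained network.
TARGET = (0.30, -0.10, 0.05)  # assumed best grasp position, in metres
SIGMA = 0.2                   # assumed width of the success bump

def success(pose):
    """Toy grasp success value in (0, 1] for a 3-DoF pose."""
    sq_dist = sum((p - t) ** 2 for p, t in zip(pose, TARGET))
    return math.exp(-sq_dist / (2 * SIGMA ** 2))

def success_grad(pose):
    """Analytic gradient of `success` with respect to the pose."""
    s = success(pose)
    return [s * (t - p) / SIGMA ** 2 for p, t in zip(pose, TARGET)]

def optimize_pose(pose, lr=0.01, steps=500):
    """Gradient ascent on the input pose; the estimator itself is fixed."""
    pose = list(pose)
    for _ in range(steps):
        grad = success_grad(pose)
        pose = [p + lr * g for p, g in zip(pose, grad)]
    return pose

initial = (0.0, 0.0, 0.0)
optimized = optimize_pose(initial)
```

The key point is that the parameters of the estimator stay fixed and only the pose is updated. With a learned network, the analytic gradient would typically be replaced by automatic differentiation.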

Human Emergency Detection during Autonomous Hospital Transports

Jul 17, 2023

Abstract:Human transports in hospitals are labor-intensive and primarily performed in beds to save time. This transfer method does not promote the mobility or autonomy of the patient. To relieve caregivers of this time-consuming task, a mobile robot is developed to autonomously transport humans around the hospital. It provides different transfer modes, including walking and sitting in a wheelchair. This paper focuses on detecting emergencies and ensuring the well-being of the patient during the transport. For this purpose, the patient is tracked and monitored with a camera system. OpenPose is used for human pose estimation and a trained classifier for emergency detection. We collected and published a dataset of 18,000 images in lab and hospital environments. It differs from related work because we have a moving robot with different transfer modes in a highly dynamic environment with multiple people in the scene, using only RGB-D data. To improve the critical recall metric, we apply threshold moving and a time delay. We compare different models with an AutoML approach. This paper shows that emergencies while walking are best detected by an SVM with a recall of 95.8% on single frames. In the case of sitting transport, the best model achieves a recall of 62.2%. The contribution is to establish a baseline on this new dataset and to provide a proof of concept for human emergency detection in this use case.
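Threshold moving, mentioned above as a way to improve recall, can be sketched as follows. This is a generic illustration under stated assumptions, not the authors' pipeline: the labels and scores are made-up toy values, and `recall` and `move_threshold` are hypothetical helper names. The time delay mentioned in the abstract is not modelled.

```python
def recall(labels, scores, threshold):
    """Fraction of true emergencies (label 1) scored at or above threshold."""
    positives = sum(labels)
    if positives == 0:
        return 0.0
    hits = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    return hits / positives

def move_threshold(labels, scores, target_recall):
    """Return the highest decision threshold that reaches the target recall.

    Candidate thresholds are the observed scores, tried from high to low,
    so the first one that meets the target keeps false alarms as low as
    this simple search allows.
    """
    for t in sorted(set(scores), reverse=True):
        if recall(labels, scores, t) >= target_recall:
            return t
    return min(scores)

# Toy validation data: 1 = emergency, 0 = normal (values are made up).
labels = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.45, 0.3, 0.6, 0.4, 0.2, 0.1]

default_recall = recall(labels, scores, 0.5)
moved = move_threshold(labels, scores, target_recall=0.75)
moved_recall = recall(labels, scores, moved)
```

Lowering the threshold trades additional false alarms for fewer missed emergencies, which is usually the preferred trade-off when a missed detection is the costlier error.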

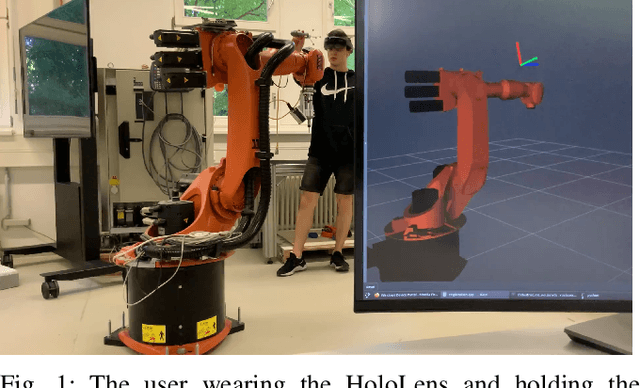

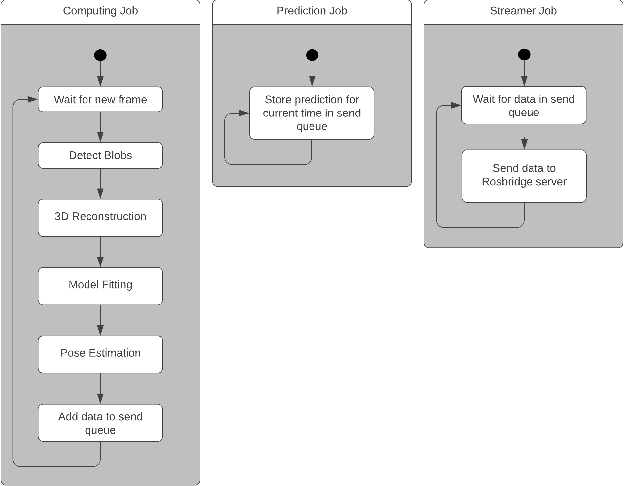

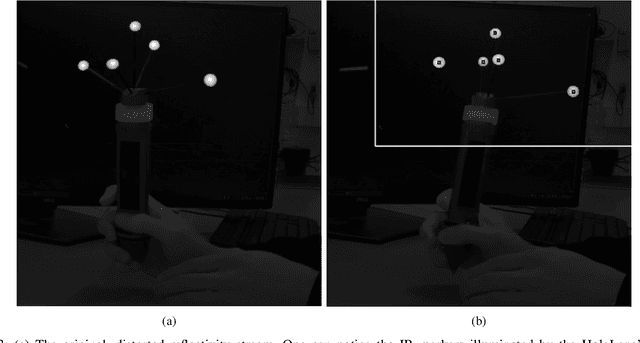

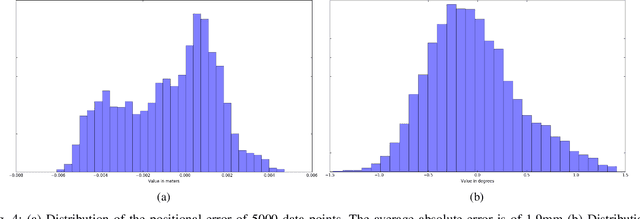

Inside-out Infrared Marker Tracking via Head Mounted Displays for Smart Robot Programming

Mar 28, 2023

Abstract:Intuitive robot programming through the use of tracked smart input devices relies on fixed, external tracking systems, most often employing infra-red markers. Such an approach is frequently combined with projector-based augmented reality for better visualisation and interfacing. The combined system, although it provides an intuitive programming platform with short cycle times even for inexperienced users, is immobile, expensive, and requires extensive calibration. When faced with a changing environment and a large number of robots, it becomes sorely impractical. Here we present our work on infra-red marker tracking using the Microsoft HoloLens head-mounted display. The HoloLens can map the environment, register the robot on-line, and track smart devices equipped with infra-red markers in the robot coordinate system. We envision that our work will provide the basis for transferring many of the paradigms developed over the years for systems requiring a projector and a tracked input device into a highly portable system that does not require any calibration or special set-up. We test the quality of the marker tracking in an industrial robot cell and compare our tracking with a ground truth obtained via an ART-3 tracking system.

Train What You Know -- Precise Pick-and-Place with Transporter Networks

Feb 17, 2023

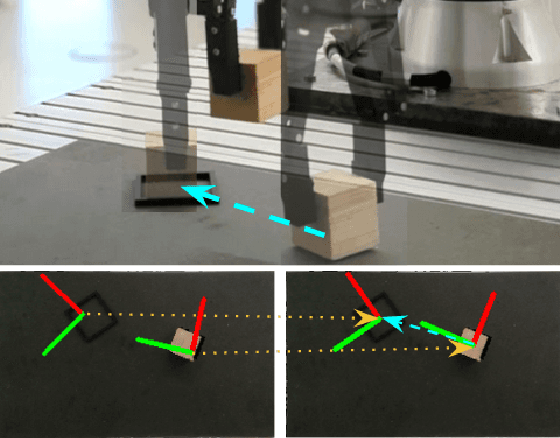

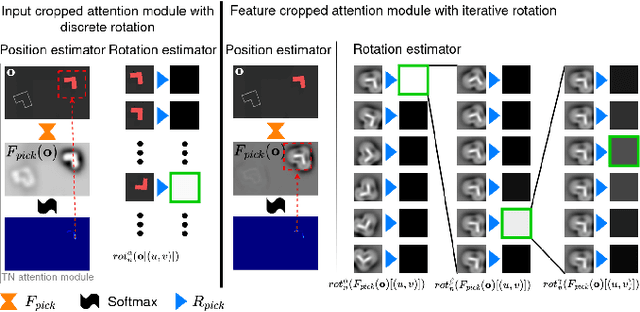

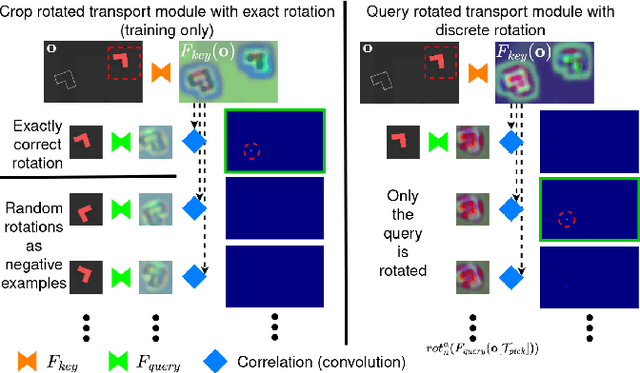

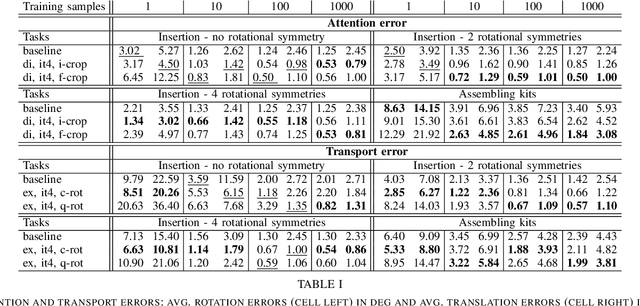

Abstract:Precise pick-and-place is essential in robotic applications. To this end, we define a novel exact training method and an iterative inference method that improve pick-and-place precision with Transporter Networks. We conduct a large-scale experiment on 8 simulated tasks. A systematic analysis shows that the proposed modifications have a significant positive effect on model performance. Considering picking and placing independently, our methods achieve up to 60% lower rotation and translation errors than baselines. For the whole pick-and-place process, we observe 50% lower rotation errors for most tasks, with slight improvements in translation errors. Furthermore, we propose architectural changes that retain model performance while reducing computational cost and time. We validate our methods with an interactive teaching procedure on real hardware. Supplementary material will be made available at: https://gergely-soti.github.io/p

Updating Industrial Robots for Emerging Technologies

Apr 07, 2022

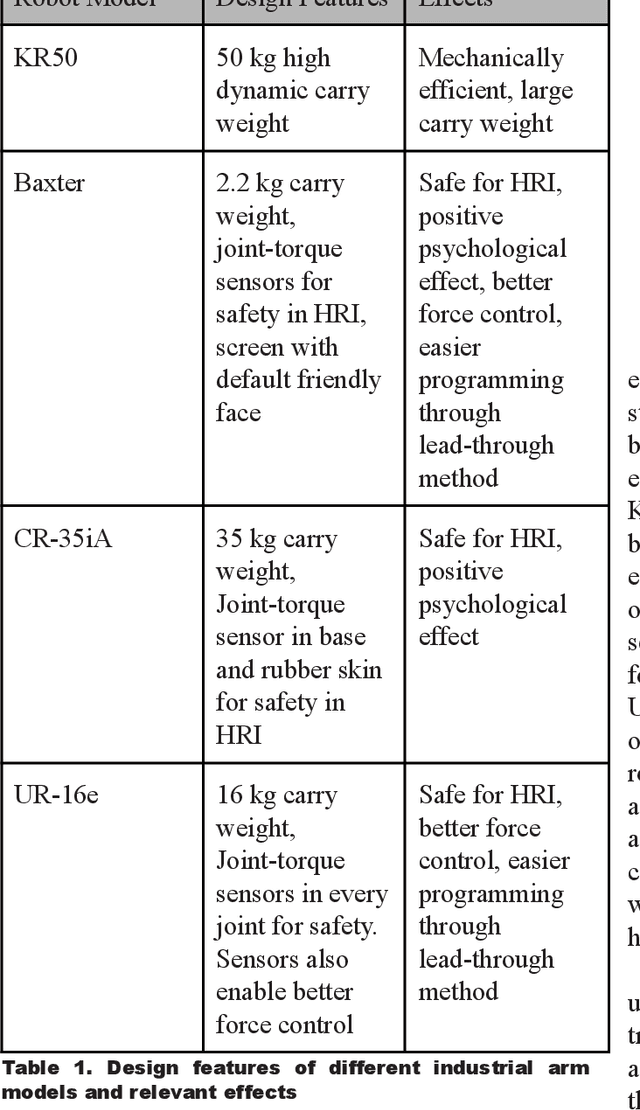

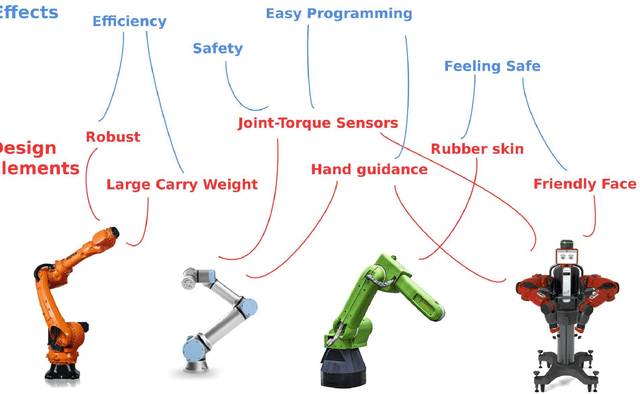

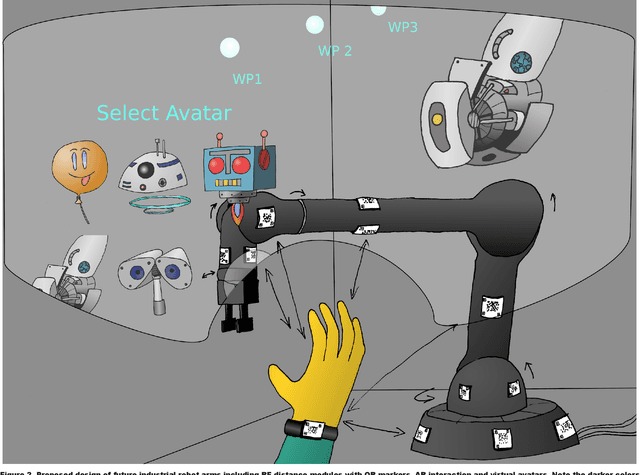

Abstract:Industrial arms need to evolve beyond their standard shape to embrace new and emerging technologies. In this paper, we first analyze four popular but different modern industrial robot arms. By identifying their common trends, we try to extrapolate and extend these trends into the future. Here, particular focus will be on interaction based on augmented reality (AR) through head-mounted displays (HMD), but also through smartphones. Long-term human-robot interaction and personalization of said interaction will also be considered. The use of AR in human-robot interaction has proven to enhance communication and information exchange. A basic addition to industrial arm design would be the integration of QR markers on the robot, both for accessing information and for adding tracking capabilities to more easily display AR overlays. In a recent example of information access, Mercedes-Benz added QR markers on their cars to help rescue workers estimate the best places to cut and evacuate people after car crashes. One also has to deal with safety in an environment that will increasingly be about collaboration. The QR markers can therefore be combined with RF-based ranging modules, developed in the EU project SafeLog, that can be used both for safety and for tracking human positions during close-proximity interactions with the industrial arms. The industrial arms of the future should also be intuitive to program and interact with. This would be achieved through AR and head-mounted displays as well as the already mentioned RF-based person tracking. Finally, a more personalized interaction between robots and humans can be achieved through life-long learning AI and disembodied, personalized agents. We propose a design that exists not only in the physical world, but also partly in the digital world of mixed reality.
