David Puljiz

Inside-out Infrared Marker Tracking via Head Mounted Displays for Smart Robot Programming

Mar 28, 2023

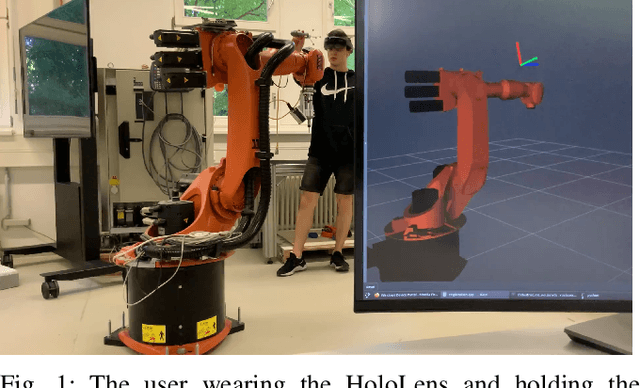

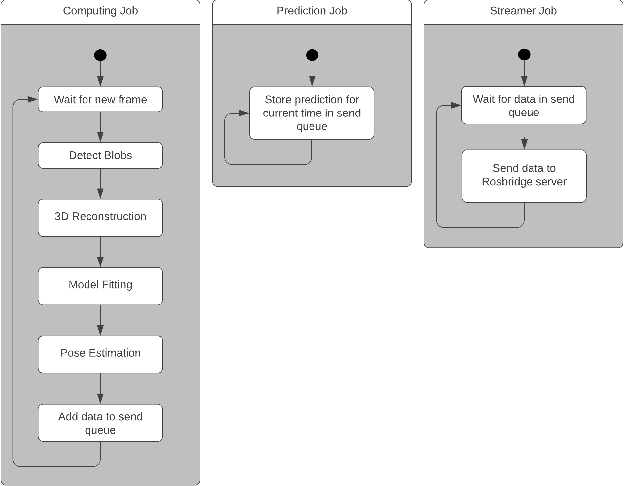

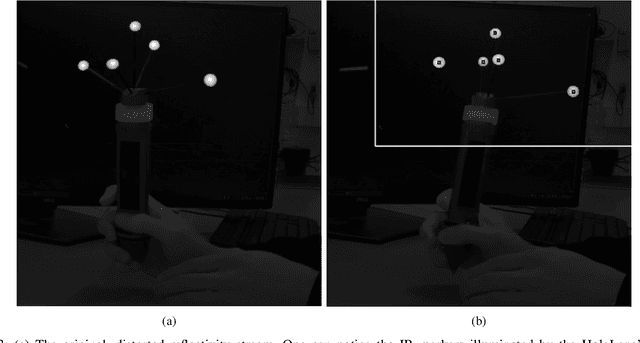

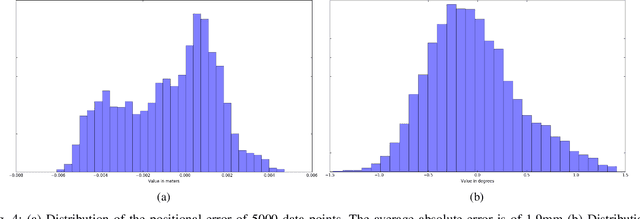

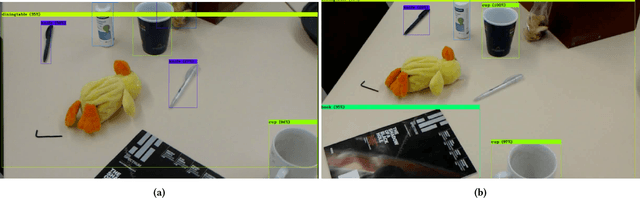

Abstract: Intuitive robot programming through the use of tracked smart input devices relies on fixed, external tracking systems, most often employing infra-red markers. Such an approach is frequently combined with projector-based augmented reality for better visualisation and interface. The combined system, although providing an intuitive programming platform with short cycle times even for inexperienced users, is immobile, expensive and requires extensive calibration. When faced with a changing environment and a large number of robots, it becomes highly impractical. Here we present our work on infra-red marker tracking using the Microsoft HoloLens head-mounted display. The HoloLens can map the environment, register the robot on-line, and track smart devices equipped with infra-red markers in the robot coordinate system. We envision our work providing the basis for transferring many of the paradigms developed over the years for systems requiring a projector and a tracked input device into a highly portable system that does not require any calibration or special set-up. We test the quality of the marker tracking in an industrial robot cell and compare our tracking with a ground truth obtained via an ART-3 tracking system.
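The comparison against a ground-truth tracker described in this abstract can be illustrated with a simple error metric. The following is only a minimal sketch, assuming that tracked and reference positions are already paired in time and expressed in the same (robot) coordinate system; the function name `rmse` and the sample positions are made up for illustration and are not taken from the paper.

```python
import math

def rmse(tracked, ground_truth):
    """Root-mean-square Euclidean distance between paired 3D positions."""
    if len(tracked) != len(ground_truth) or not tracked:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [
        sum((a - b) ** 2 for a, b in zip(p, q))
        for p, q in zip(tracked, ground_truth)
    ]
    return math.sqrt(sum(squared) / len(squared))

# Made-up positions in metres, both expressed in the robot frame.
tracked = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
reference = [(0.0, 0.0, 0.003), (1.0, 0.004, 0.0)]
error = rmse(tracked, reference)
print(f"RMSE: {error * 1000:.2f} mm")
```

Which metric the authors actually report is not stated in the abstract; RMSE is used here only as one common choice.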

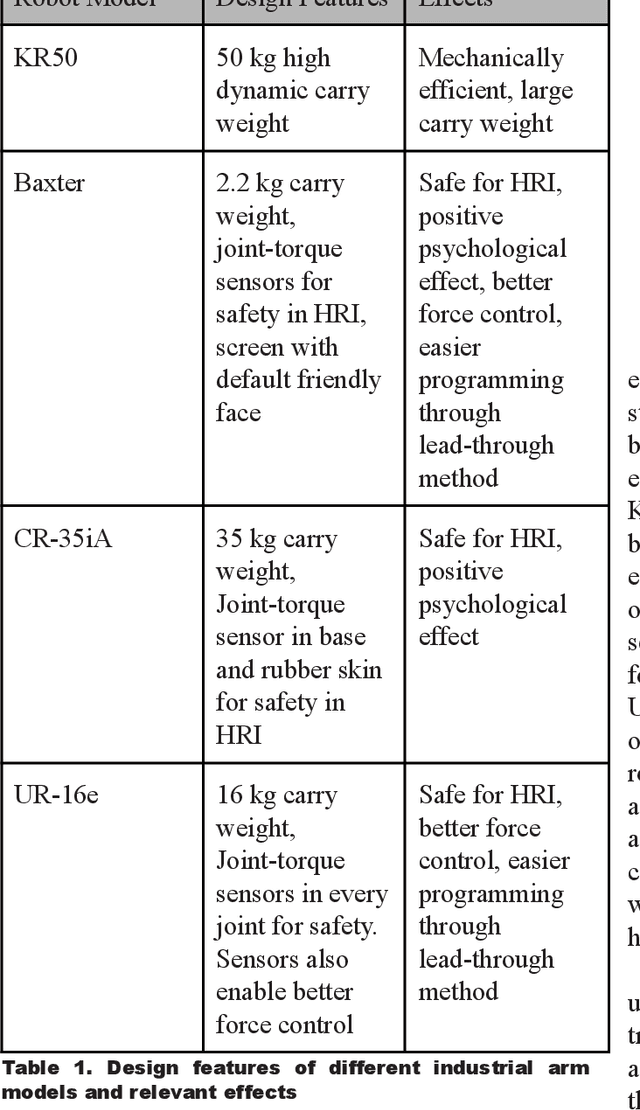

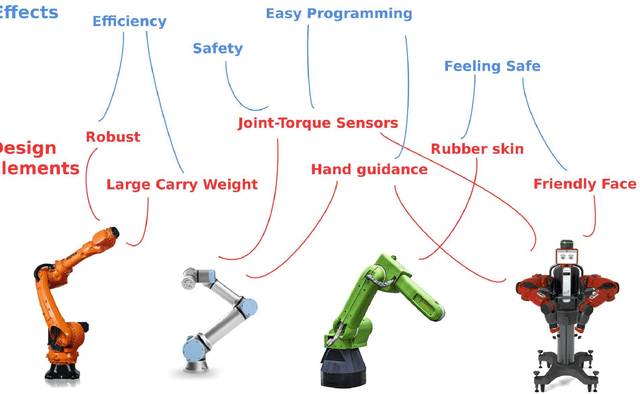

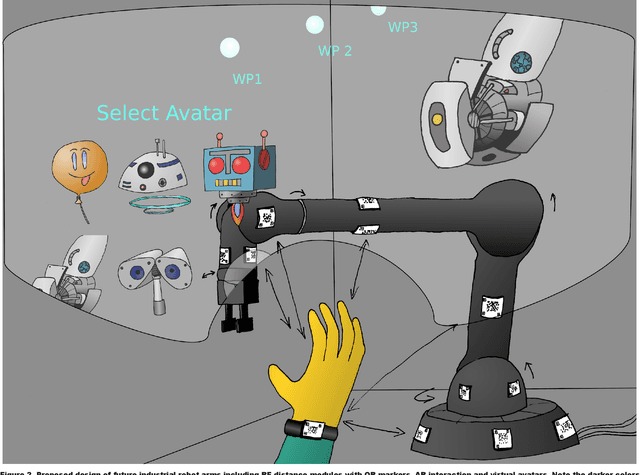

Updating Industrial Robots for Emerging Technologies

Apr 07, 2022

Abstract: Industrial arms need to evolve beyond their standard shape to embrace new and emerging technologies. In this paper, we first analyse four popular but different modern industrial robot arms. By identifying the common trends, we try to extrapolate and extend them into the future. Particular focus is placed on interaction based on augmented reality (AR) through head-mounted displays (HMD), but also through smartphones. Long-term human-robot interaction and the personalization of that interaction are also considered. The use of AR in human-robot interaction has proven to enhance communication and information exchange. A basic addition to industrial arm design would be the integration of QR markers on the robot, both for accessing information and for adding tracking capabilities to more easily display AR overlays. In a recent example of information access, Mercedes-Benz added QR markers to their cars to help rescue workers estimate the best places to cut and evacuate people after car crashes. One also has to deal with safety in an environment that will be increasingly collaborative. The QR markers can therefore be combined with RF-based ranging modules, developed in the EU project SafeLog, that can be used both for safety and for tracking human positions during close-proximity interaction with the industrial arms. The industrial arms of the future should also be intuitive to program and interact with. This would be achieved through AR and head-mounted displays as well as the already mentioned RF-based person tracking. Finally, a more personalized interaction between robots and humans can be achieved through life-long learning AI and disembodied, personalized agents. We propose a design that exists not only in the physical world, but also partly in the digital world of mixed reality.

HAIR: Head-mounted AR Intention Recognition

Feb 22, 2021

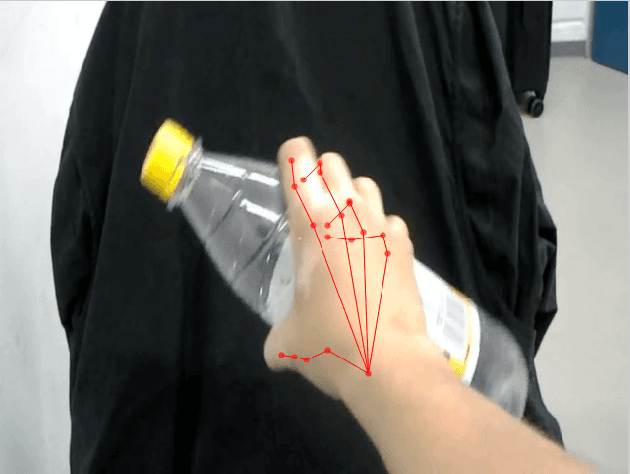

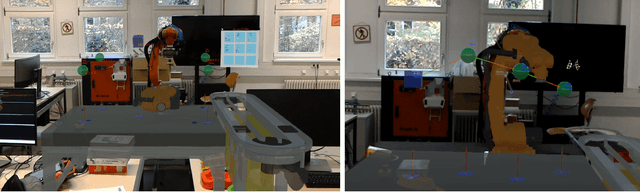

Abstract: Human teams exhibit both implicit and explicit intention sharing. To further the development of human-robot collaboration, intention recognition is crucial on both sides. Present approaches rely on a vast sensor suite on and around the robot to achieve intention recognition. This restricts intuitive human-robot collaboration to such bulky systems, which are inadequate for large-scale, real-world scenarios due to their complexity and cost. In this paper we propose an intention recognition system that is based purely on a portable head-mounted display. In addition, robot intention visualisation is supported. We present experiments to show the quality of our human goal estimation component and some basic interactions with an industrial robot. HAIR should raise the quality of interaction between robots and humans, instead of such interactions raising the hair on the necks of the human coworkers.

Adapting the Human: Leveraging Wearable Technology in HRI

Dec 10, 2020

Abstract: Adhering to current HRI paradigms, all of the sensors, visualisation and legibility of actions and motions are borne by the robot or its working cell. This necessarily makes robots more complex or confines them into specialised, structured environments. We propose leveraging the state of the art of wearable technologies, such as augmented reality head-mounted displays, smart watches, sensor tags and radio-frequency ranging, to "adapt" the human and reduce the requirements and complexity of robots.

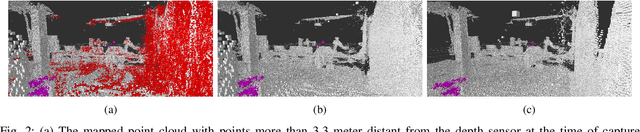

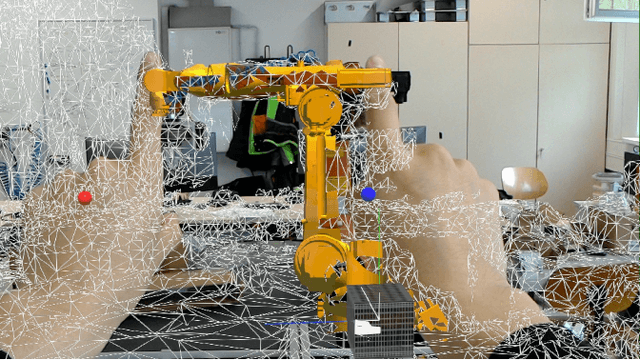

What the HoloLens Maps Is Your Workspace: Fast Mapping and Set-up of Robot Cells via Head Mounted Displays and Augmented Reality

May 26, 2020

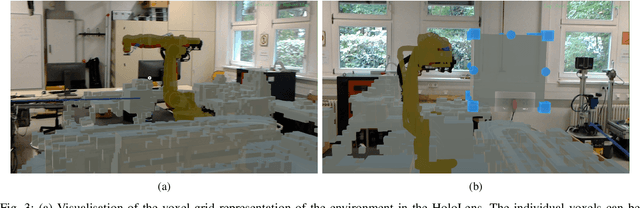

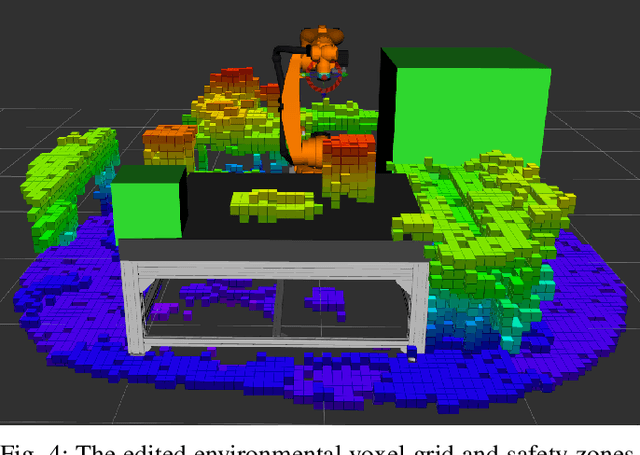

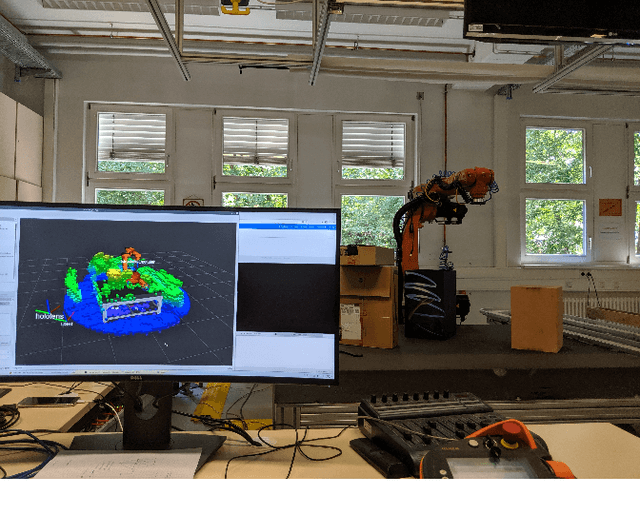

Abstract: Classical methods of modelling and mapping robot work cells are time-consuming, expensive and require expert knowledge. We present a novel approach to mapping and cell setup using modern Head Mounted Displays (HMDs) that possess self-localisation and mapping capabilities. We leverage these capabilities to create a point cloud of the environment and build an OctoMap - a voxel occupancy grid representation of the robot's workspace for path planning. Through the use of Augmented Reality (AR) interactions, the user can edit the created OctoMap and add security zones. We perform comprehensive tests of the HoloLens' depth sensing capabilities and the quality of the resultant point cloud. A high-end laser scanner is used to provide the ground truth for the evaluation of the point cloud quality. The numbers of false-positive and false-negative voxels in the OctoMap are also evaluated.
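To make the voxel evaluation mentioned in this abstract concrete, the sketch below discretises two point clouds into voxel index sets and counts false-positive and false-negative voxels. It is an illustration under simplifying assumptions: a flat set of occupied voxels stands in for the OctoMap (which is an octree with probabilistic occupancy), and the names `voxelize` and `voxel_errors`, the resolution and the sample points are all hypothetical.

```python
import math

def voxelize(points, resolution):
    """Map 3D points to the set of integer voxel indices they fall into."""
    return {
        tuple(math.floor(c / resolution) for c in p)
        for p in points
    }

def voxel_errors(measured, reference):
    """Return (false_positives, false_negatives) between two voxel sets."""
    return len(measured - reference), len(reference - measured)

resolution = 0.05  # voxel edge length in metres (assumed value)
# Made-up points: one cloud from the HMD, one from the reference scanner.
hmd_points = [(0.01, 0.01, 0.01), (0.02, 0.03, 0.04), (0.26, 0.0, 0.0)]
scanner_points = [(0.01, 0.02, 0.03), (0.11, 0.0, 0.0)]

hmd_voxels = voxelize(hmd_points, resolution)
scanner_voxels = voxelize(scanner_points, resolution)
fp, fn = voxel_errors(hmd_voxels, scanner_voxels)
print(f"false positives: {fp}, false negatives: {fn}")
```

A false positive here is a voxel occupied in the HMD map but free in the reference; a false negative is the reverse. The paper may define or threshold these differently.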

Concepts for End-to-end Augmented Reality based Human-Robot Interaction Systems

Oct 10, 2019

Abstract: The field of Augmented Reality (AR) based Human-Robot Interaction (HRI) has progressed significantly since its inception more than two decades ago. With more advanced devices, particularly head-mounted displays (HMD), freely available programming environments and better connectivity, the possible application space has expanded significantly. Here we present concepts and systems currently being developed at our lab to enable a truly end-to-end application of AR in HRI, from setting up the working environment of the robot, through programming, to interaction with the programmed robot. Relevant papers by other authors are also reviewed. We demonstrate the use of such technologies with systems not inherently designed to be collaborative, namely industrial manipulators. By trying to make such industrial systems easily installable, collaborative and interactive, the vision of universal robot co-workers can be pushed one step closer to reality. The main goal of the paper is to provide a short overview of the capabilities of HMD-based HRI to researchers unfamiliar with the concepts. For researchers already using such techniques, the hope is to introduce some new ideas and to broaden the field of research.

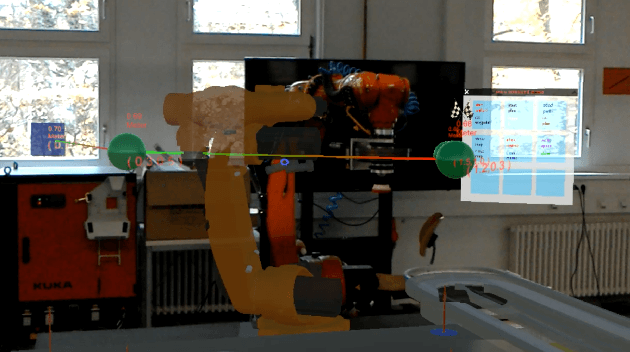

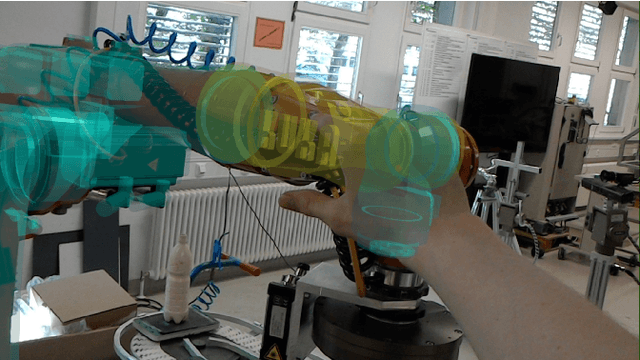

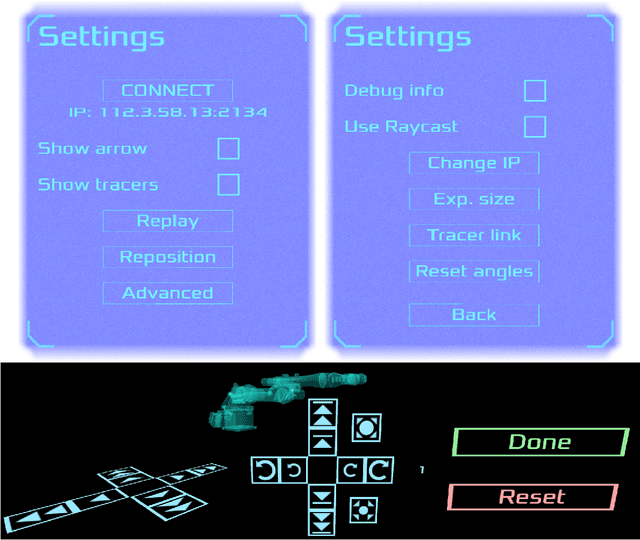

General Hand Guidance Framework using Microsoft HoloLens

Aug 13, 2019

Abstract: Hand guidance emerged from the safety requirements for collaborative robots, namely the possession of joint-torque sensors. Since then it has proven to be a powerful tool for easy trajectory programming, allowing lay users to reprogram robots intuitively. Going further, a robot can learn tasks from user demonstrations through kinesthetic teaching, enabling robots to generalise tasks and further reducing the need for reprogramming. However, hand guidance is still mostly restricted to collaborative robots. Here we propose a method that does not require any sensors on the robot or in the robot cell, by using a Microsoft HoloLens augmented reality head-mounted display. We reference the robot using a registration algorithm to match the robot model to the spatial mesh. The in-built hand tracking and localisation capabilities are then used to calculate the position of the hands relative to the robot. By decomposing the hand movements into orthogonal rotations and propagating them down through the kinematic chain, we achieve a generalised hand guidance without the need to build a dynamic model of the robot itself. We tested our approach on a commonly used industrial manipulator, the KUKA KR-5.

Referencing between a Head-Mounted Device and Robotic Manipulators

Apr 04, 2019

Abstract: Having a precise and robust transformation between the robot coordinate system and the AR-device coordinate system is paramount during human-robot interaction (HRI) based on augmented reality using head-mounted displays (HMD), both for intuitive information display and for the tracking of human motions. Most current solutions in this area rely either on the tracking of visual markers, e.g. QR codes, or on manual referencing, both of which provide unsatisfying results. Meanwhile, a plethora of object detection and referencing methods exist in the wider robotic and machine vision communities. The precision of the referencing is likewise almost never measured. Here we address this issue by first presenting an overview of currently used referencing methods between robots and HMDs. This is followed by a brief overview of object detection and referencing methods used in the field of robotics. Based on these methods we suggest three classes of referencing algorithms we intend to pursue: semi-automatic, one-shot; automatic, one-shot; and automatic, continuous. We describe the general workflows of these three classes as well as our proposed algorithms in each of them. Finally, we present the first experimental results of a semi-automatic referencing algorithm, tested on an industrial KUKA KR-5 manipulator.
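The transformation between the HMD and robot coordinate systems discussed in this abstract is, in its simplest form, a rigid transform that can be written as a 4x4 homogeneous matrix. The sketch below only shows how such a matrix, once a referencing algorithm has estimated it, would be applied to a point; the matrix values and the names `transform_point` and `T_robot_hmd` are assumptions for illustration, not results from the paper.

```python
def transform_point(T, p):
    """Apply a 4x4 homogeneous transform T (nested row lists) to a 3D point."""
    x, y, z = p
    return tuple(
        T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3]
        for i in range(3)
    )

# Hypothetical robot-from-HMD transform:
# a 90 degree rotation about z followed by a translation of (1, 2, 0) metres.
T_robot_hmd = [
    [0.0, -1.0, 0.0, 1.0],
    [1.0,  0.0, 0.0, 2.0],
    [0.0,  0.0, 1.0, 0.0],
    [0.0,  0.0, 0.0, 1.0],
]

# A point observed in the HMD frame, re-expressed in the robot frame.
p_robot = transform_point(T_robot_hmd, (1.0, 0.0, 0.5))
print(p_robot)
```

Any error in the estimated matrix propagates directly into every displayed overlay and tracked position, which is why the abstract stresses measuring referencing precision.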

Sensorless Hand Guidance using Microsoft Hololens

Jan 15, 2019

Abstract: Hand guidance of robots has proven to be a useful tool both for programming trajectories and in kinesthetic teaching. However, hand guidance is usually restricted to robots possessing joint-torque sensors (JTS). Here we propose to extend hand guidance to robots lacking those sensors through the use of an Augmented Reality (AR) device, namely Microsoft's HoloLens. Augmented reality devices have been envisioned as a helpful addition both to ease robot programming and to increase the situational awareness of humans working in close proximity to robots. We reference the robot by using a registration algorithm to match a robot model to the spatial mesh. The in-built hand tracking capabilities are then used to calculate the position of the hands relative to the robot. By decomposing the hand movements into orthogonal rotations we achieve a completely sensorless hand guidance without any need to build a dynamic model of the robot itself. We performed the first tests of our approach on a commonly used industrial manipulator, the KUKA KR-5.

Human Intention Estimation based on Hidden Markov Model Motion Validation for Safe Flexible Robotized Warehouses

Nov 20, 2018

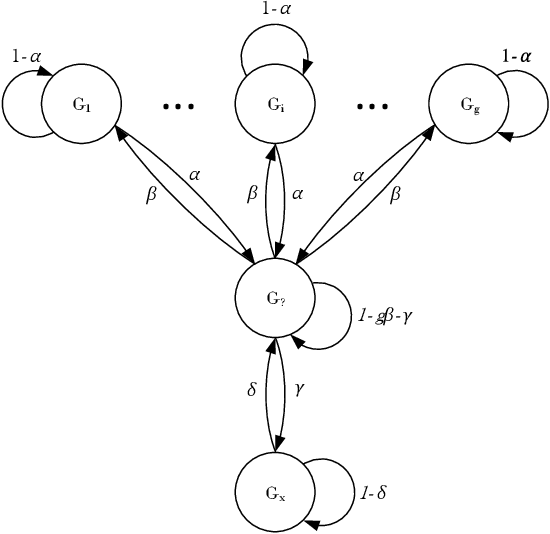

Abstract: With the substantial growth of logistics businesses, the need for larger warehouses and their automation arises; using robots as assistants to human workers is thus becoming a priority. In order to operate efficiently and safely, robot assistants or the supervising system should recognize human intentions in real time. Theory of mind (ToM) is an intuitive human conception of other humans' mental state, i.e., beliefs and desires, and how they cause behavior. In this paper we propose a ToM-based human intention estimation algorithm for flexible robotized warehouses. We observe the motion of the human, i.e., the worker, and validate it with respect to the goal locations using generalized Voronoi diagram based path planning. These observations are then processed by the proposed hidden Markov model framework, which estimates worker intentions in an online manner and is capable of handling changing environments. To test the proposed intention estimation we ran experiments in a real-world laboratory warehouse with a worker wearing Microsoft HoloLens augmented reality glasses. Furthermore, in order to demonstrate the scalability of the approach to larger warehouses, we propose to use virtual reality digital warehouse twins to realistically simulate worker behavior. We conducted intention estimation experiments in the larger warehouse digital twin with up to 24 running robots. We demonstrate that the proposed framework estimates warehouse worker intentions precisely, and we conclude by discussing the experimental results.
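Online estimation with a hidden Markov model, as mentioned in this abstract, is commonly done with a recursive forward (filtering) update. The following is a generic textbook sketch rather than the authors' model: the hidden states are assumed to be two candidate goal locations, and the transition matrix, the observation likelihoods and the name `forward_step` are hypothetical.

```python
def forward_step(belief, transition, likelihood):
    """One HMM filtering step: predict, weight by observation, normalise."""
    n = len(belief)
    # Prediction: propagate the belief through the transition matrix.
    predicted = [
        sum(belief[i] * transition[i][j] for i in range(n))
        for j in range(n)
    ]
    # Correction: weight each state by how well it explains the observation.
    unnormalised = [predicted[j] * likelihood[j] for j in range(n)]
    total = sum(unnormalised)
    if total == 0:
        raise ValueError("observation has zero likelihood under every state")
    return [u / total for u in unnormalised]

# Two hypothetical goal locations, initially equally likely.
belief = [0.5, 0.5]
# Assumed transition matrix: intentions tend to persist between steps.
transition = [[0.9, 0.1], [0.1, 0.9]]
# Assumed likelihoods: the observed motion fits goal 0 better than goal 1.
likelihood = [0.8, 0.2]

for _ in range(3):
    belief = forward_step(belief, transition, likelihood)
print(belief)
```

In the setting of the abstract, the likelihoods would come from validating the observed worker motion against planned paths to each goal; here they are fixed constants purely to show how the belief sharpens over repeated consistent observations.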
