Franziska Krebs

Intuitive Programming, Adaptive Task Planning, and Dynamic Role Allocation in Human-Robot Collaboration

Nov 11, 2025

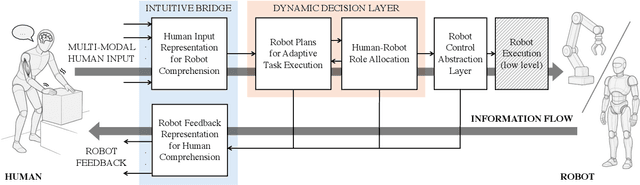

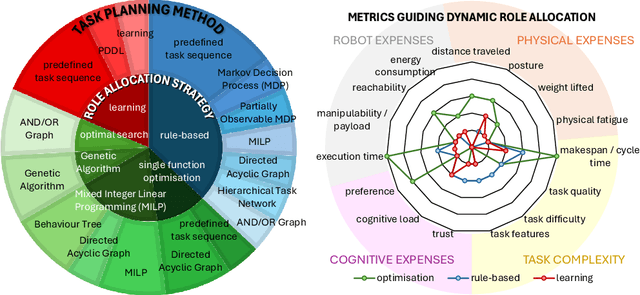

Abstract: Remarkable capabilities have been achieved by robotics and AI, mastering complex tasks and environments. Yet, humans often remain passive observers, fascinated but uncertain how to engage. Robots, in turn, cannot reach their full potential in human-populated environments without effectively modeling human states and intentions and adapting their behavior. To achieve a synergistic human-robot collaboration (HRC), a continuous information flow should be established: humans must intuitively communicate instructions, share expertise, and express needs. In parallel, robots must clearly convey their internal state and forthcoming actions to keep users informed, comfortable, and in control. This review identifies and connects key components enabling intuitive information exchange and skill transfer between humans and robots. We examine the full interaction pipeline: from the human-to-robot communication bridge translating multimodal inputs into robot-understandable representations, through adaptive planning and role allocation, to the control layer and feedback mechanisms to close the loop. Finally, we highlight trends and promising directions toward more adaptive, accessible HRC.

* Published in the Annual Review of Control, Robotics, and Autonomous Systems, Volume 9; copyright 2026 the author(s), CC BY 4.0, https://www.annualreviews.org

Incremental Learning of Full-Pose Via-Point Movement Primitives on Riemannian Manifolds

Dec 13, 2023

Abstract: Movement primitives (MPs) are compact representations of robot skills that can be learned from demonstrations and combined into complex behaviors. However, merely equipping robots with a fixed set of innate MPs is insufficient to deploy them in dynamic and unpredictable environments. Instead, the full potential of MPs remains to be attained via adaptable, large-scale MP libraries. In this paper, we propose a set of seven fundamental operations to incrementally learn, improve, and re-organize MP libraries. To showcase their applicability, we provide explicit formulations of the spatial operations for libraries composed of Via-Point Movement Primitives (VMPs). By building on Riemannian manifold theory, our approach enables the incremental learning of all parameters of position and orientation VMPs within a library. Moreover, our approach stores a fixed number of parameters, thus complying with the essential principles of incremental learning. We evaluate our approach to incrementally learn a VMP library from motion capture data provided sequentially.

What the HoloLens Maps Is Your Workspace: Fast Mapping and Set-up of Robot Cells via Head Mounted Displays and Augmented Reality

May 26, 2020

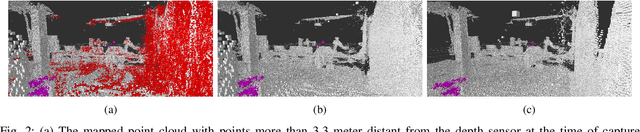

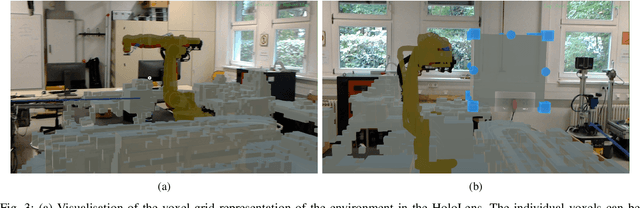

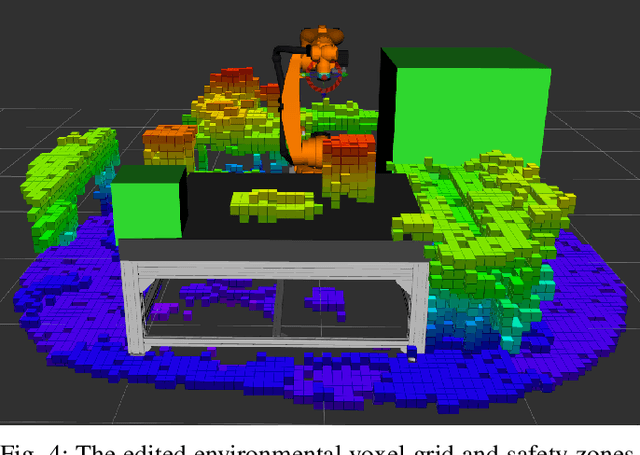

Abstract: Classical methods of modelling and mapping robot work cells are time-consuming, expensive, and involve expert knowledge. We present a novel approach to mapping and cell setup using modern Head Mounted Displays (HMDs) that possess self-localisation and mapping capabilities. We leveraged these capabilities to create a point cloud of the environment and build an OctoMap, a voxel occupancy grid representation of the robot's workspace, for path planning. Through the use of Augmented Reality (AR) interactions, the user can edit the created OctoMap and add security zones. We perform comprehensive tests of the HoloLens' depth sensing capabilities and the quality of the resultant point cloud. A high-end laser scanner is used to provide the ground truth for the evaluation of the point cloud quality. The numbers of false-positive and false-negative voxels in the OctoMap are also tested.
