Christian Wurll

dGrasp: NeRF-Informed Implicit Grasp Policies with Supervised Optimization Slopes

Jun 14, 2024

Abstract: We present dGrasp, an implicit grasp policy with an enhanced optimization landscape. This landscape is defined by a NeRF-informed grasp value function. The neural network representing this function is trained on grasp demonstrations. During training, we use an auxiliary loss to guide not only the weight updates of this network but also how the slope of the optimization landscape changes. This loss is computed on the demonstrated grasp trajectory and the gradients of the landscape. With second-order optimization, we incorporate valuable information from the trajectory and also facilitate the optimization process of the implicit policy. Experiments demonstrate that employing this auxiliary loss improves the policies' performance in simulation as well as their zero-shot transfer to the real world.

6-DoF Grasp Pose Evaluation and Optimization via Transfer Learning from NeRFs

Jan 15, 2024

Abstract: We address the problem of robotic grasping of known and unknown objects using implicit behavior cloning. From a small number of demonstrations, we train a grasp evaluation model that outputs higher values for grasp candidates that are more likely to succeed. This evaluation model serves as an objective function that we maximize to identify successful grasps. Key to our approach is the use of learned implicit representations of visual and geometric features derived from a pre-trained NeRF. Though trained exclusively in a simulated environment with simplified objects and 4-DoF top-down grasps, our evaluation model and optimization procedure generalize to 6-DoF grasps and novel objects, both in simulation and in real-world settings, without the need for additional data. Supplementary material is available at: https://gergely-soti.github.io/grasp

Gradient based Grasp Pose Optimization on a NeRF that Approximates Grasp Success

Sep 14, 2023

Abstract: Current robotic grasping methods often rely on estimating the pose of the target object, explicitly predicting grasp poses, or implicitly estimating grasp success probabilities. In this work, we propose a novel approach that directly maps gripper poses to their corresponding grasp success values, without considering objectness. Specifically, we leverage a Neural Radiance Field (NeRF) architecture to learn a scene representation and use it to train a grasp success estimator that maps each pose in the robot's task space to a grasp success value. We employ this learned estimator to tune its inputs, i.e., grasp poses, by gradient-based optimization to obtain successful grasp poses. Other NeRF-based methods either enhance existing grasp pose estimation approaches by relying on NeRF's rendering capabilities or directly estimate grasp poses in a discretized space using NeRF's scene representation capabilities. In contrast, our approach sidesteps both the need for rendering and the limitation of discretization. We demonstrate the effectiveness of our approach on four simulated 3-DoF (degrees of freedom) robotic grasping tasks and show that it can generalize to novel objects. Our best model achieves an average translation error of 3 mm from valid grasp poses. This work opens the door for future research to apply our approach to higher-DoF grasps and real-world scenarios.
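The idea of tuning grasp poses by gradient-based optimization of a success estimator can be illustrated with a minimal sketch. Everything below is an assumption for illustration only: `grasp_score` is a hypothetical toy function (a Gaussian bump around a made-up target pose) standing in for the learned NeRF-based estimator, the pose is treated as a plain 3-vector, and the gradient is approximated by finite differences rather than backpropagation through a network.

```python
import math

def grasp_score(pose, target=(0.30, -0.10, 0.50)):
    """Hypothetical stand-in for a learned grasp success estimator.

    Returns a value in (0, 1] that peaks at the made-up `target` pose.
    """
    sq_dist = sum((p - t) ** 2 for p, t in zip(pose, target))
    return math.exp(-sq_dist)

def numerical_gradient(f, pose, eps=1e-5):
    """Central finite-difference gradient of f with respect to the pose."""
    grad = []
    for i in range(len(pose)):
        plus = list(pose)
        minus = list(pose)
        plus[i] += eps
        minus[i] -= eps
        grad.append((f(plus) - f(minus)) / (2 * eps))
    return grad

def optimize_pose(f, pose, step_size=0.5, steps=200):
    """Gradient ascent on the estimator's input (the pose), not on its weights."""
    pose = list(pose)
    for _ in range(steps):
        grad = numerical_gradient(f, pose)
        pose = [p + step_size * g for p, g in zip(pose, grad)]
    return pose

start = [0.0, 0.0, 0.0]
best = optimize_pose(grasp_score, start)
```

In this toy setting the optimized pose moves from `start` toward the peak of the score function; with a learned estimator the landscape is generally non-convex, so the result would depend on the initial pose.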

Human Emergency Detection during Autonomous Hospital Transports

Jul 17, 2023

Abstract: Human transports in hospitals are labor-intensive and primarily performed in beds to save time. This transfer method does not promote the mobility or autonomy of the patient. To relieve caregivers of this time-consuming task, a mobile robot is developed to autonomously transport humans around the hospital. It provides different transfer modes, including walking and sitting in a wheelchair. This paper focuses on detecting emergencies and ensuring the well-being of the patient during transport. For this purpose, the patient is tracked and monitored with a camera system. OpenPose is used for human pose estimation and a trained classifier for emergency detection. We collected and published a dataset of 18,000 images in lab and hospital environments. It differs from related work in that the robot is moving, offers different transfer modes, and operates in a highly dynamic environment with multiple people in the scene, using only RGB-D data. To improve the critical recall metric, we apply threshold moving and a time delay. We compare different models with an AutoML approach. This paper shows that emergencies while walking are best detected by an SVM, with a recall of 95.8% on single frames. In the case of sitting transport, the best model achieves a recall of 62.2%. The contribution is to establish a baseline on this new dataset and to provide a proof of concept for human emergency detection in this use case.
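Threshold moving, as mentioned in the abstract above, can be sketched in a few lines. The scores and labels below are made-up illustrative values, not data from the paper, and the helper names `recall` and `precision` are hypothetical. The sketch only shows the general effect: lowering the decision threshold of a classifier raises recall, typically at the cost of precision.

```python
def recall(scores, labels, threshold):
    """Fraction of true emergencies (label 1) scored at or above the threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    return tp / (tp + fn) if (tp + fn) else 0.0

def precision(scores, labels, threshold):
    """Fraction of frames flagged as emergencies that are true emergencies."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return tp / (tp + fp) if (tp + fp) else 0.0

# Made-up classifier scores for six frames; label 1 marks an emergency.
scores = [0.9, 0.6, 0.4, 0.35, 0.2, 0.1]
labels = [1, 1, 1, 0, 1, 0]

default_recall = recall(scores, labels, 0.5)        # default threshold
moved_recall = recall(scores, labels, 0.3)          # lowered threshold
default_precision = precision(scores, labels, 0.5)
moved_precision = precision(scores, labels, 0.3)
```

On these toy values, moving the threshold from 0.5 to 0.3 catches one additional emergency frame and admits one false alarm.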

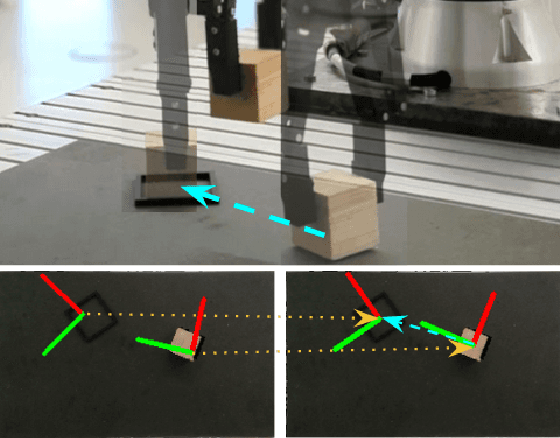

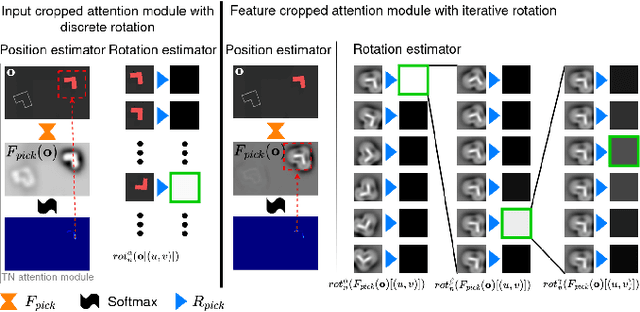

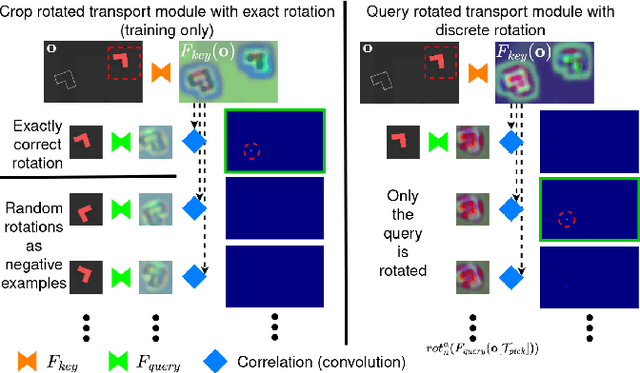

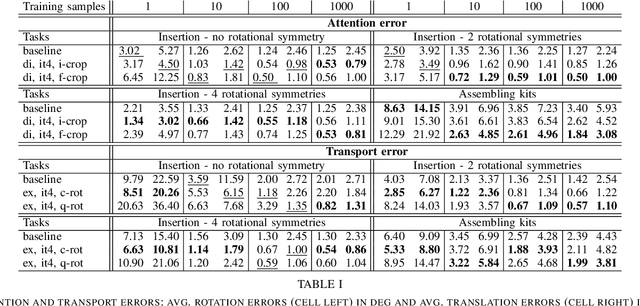

Train What You Know -- Precise Pick-and-Place with Transporter Networks

Feb 17, 2023

Abstract: Precise pick-and-place is essential in robotic applications. To this end, we define a novel exact training method and an iterative inference method that improve pick-and-place precision with Transporter Networks. We conduct a large-scale experiment on 8 simulated tasks. A systematic analysis shows that the proposed modifications have a significant positive effect on model performance. Considering picking and placing independently, our methods achieve up to 60% lower rotation and translation errors than baselines. For the whole pick-and-place process, we observe 50% lower rotation errors for most tasks, with slight improvements in translation errors. Furthermore, we propose architectural changes that retain model performance while reducing computational cost and time. We validate our methods with an interactive teaching procedure on real hardware. Supplementary material will be made available at: https://gergely-soti.github.io/p
