Ge Song

AutoFPDesigner: Automated Flight Procedure Design Based on Multi-Agent Large Language Model

Oct 19, 2024

Abstract:Current flight procedure design methods rely heavily on a human-led design process, which not only has a low level of automation but also suffers from complex algorithm modelling and poor generalization. To address these challenges, this paper proposes an agent-driven flight procedure design method based on a large language model, named AutoFPDesigner, which utilizes multi-agent collaboration to complete procedure design. The method enables end-to-end automated design of performance-based navigation (PBN) procedures. In this process, the user inputs the design requirements in natural language, and AutoFPDesigner models the flight procedure design by loading the design specifications and utilizing tool libraries to complete the design. AutoFPDesigner allows users to oversee and seamlessly participate in the design process. Experimental results show that AutoFPDesigner ensures nearly 100% safety in the designed flight procedures and achieves a 75% task completion rate, with good adaptability across different design tasks. AutoFPDesigner introduces a new paradigm for flight procedure design and represents a key step towards the automation of this process.

Keywords: Flight Procedure Design; Large Language Model; Performance-Based Navigation (PBN); Multi Agent

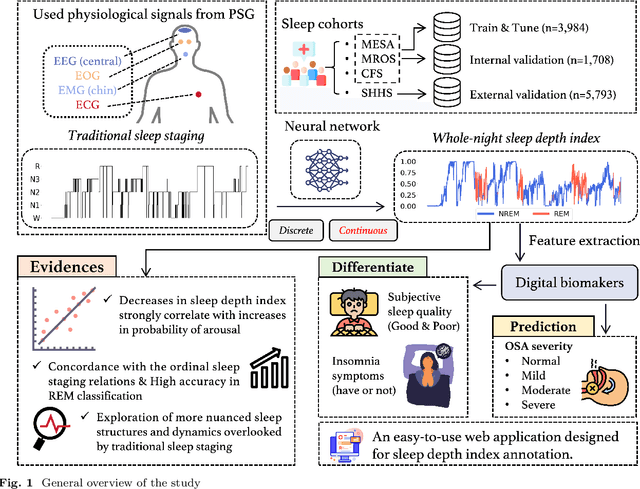

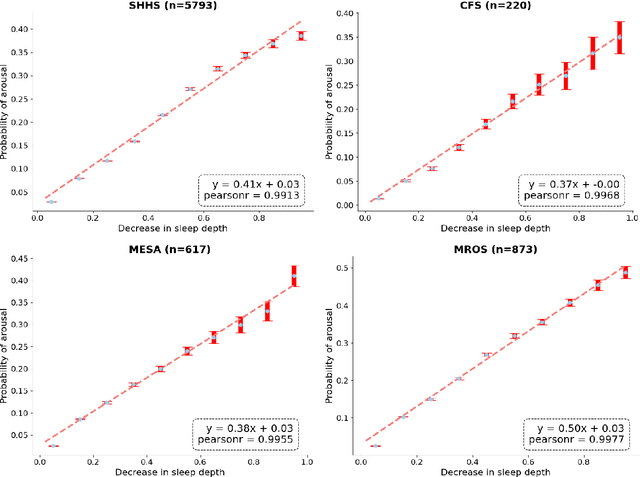

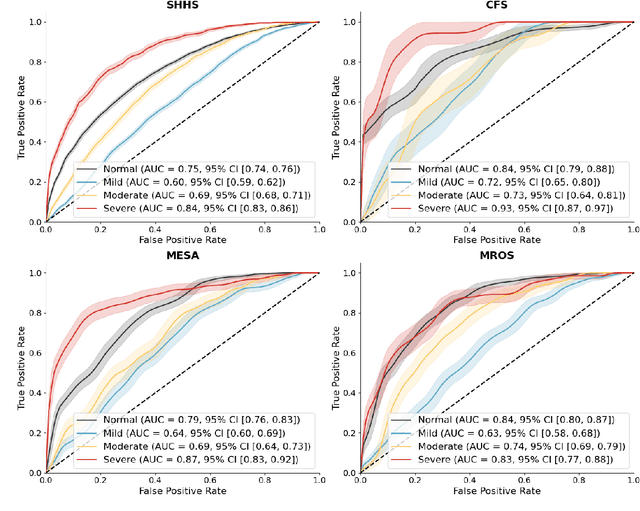

Annotation of Sleep Depth Index with Scalable Deep Learning Yields Novel Digital Biomarkers for Sleep Health

Jul 05, 2024

Abstract:Traditional sleep staging categorizes sleep and wakefulness into five coarse-grained classes, overlooking subtle variations within each stage. It provides limited information about the probability of arousal and may hinder the diagnosis of sleep disorders, such as insomnia. To address this issue, we propose a deep-learning method for automatic and scalable annotation of sleep depth index using existing sleep staging labels. Our approach is validated using polysomnography from over ten thousand recordings across four large-scale cohorts. The results show a strong correlation between the decrease in sleep depth index and the increase in arousal likelihood. Several case studies indicate that the sleep depth index captures more nuanced sleep structures than conventional sleep staging. Sleep biomarkers extracted from the whole-night sleep depth index exhibit statistically significant differences with medium-to-large effect sizes across groups of varied subjective sleep quality and insomnia symptoms. These sleep biomarkers also promise utility in predicting the severity of obstructive sleep apnea, particularly in severe cases. Our study underscores the utility of the proposed method for continuous sleep depth annotation, which could reveal more detailed structures and dynamics within whole-night sleep and yield novel digital biomarkers beneficial for sleep health.

EMPL: A novel Efficient Meta Prompt Learning Framework for Few-shot Unsupervised Domain Adaptation

Jul 04, 2024

Abstract:Few-shot unsupervised domain adaptation (FS-UDA) utilizes few-shot labeled source domain data to realize effective classification in an unlabeled target domain. However, current FS-UDA methods still suffer from two issues: 1) the data from different domains cannot be effectively aligned by few-shot labeled data due to the large domain gaps, 2) it is unstable and time-consuming to generalize to new FS-UDA tasks. To address these issues, we put forward a novel Efficient Meta Prompt Learning Framework for FS-UDA. Within this framework, we use a pre-trained CLIP model as the feature learning base model. First, we design domain-shared prompt learning vectors composed of virtual tokens, which mainly learn the meta knowledge from a large number of meta tasks to mitigate domain gaps. Second, we design a task-shared prompt learning network to adaptively learn specific prompt vectors for each task, which aims to realize fast adaptation and task generalization. Third, we learn a task-specific cross-domain alignment projection and a task-specific classifier with closed-form solutions for each meta task, which can efficiently adapt the model to new tasks in one step. The whole learning process is formulated as a bilevel optimization problem, and a good initialization of model parameters is learned through meta-learning. Extensive experiments demonstrate the promising performance of our framework on benchmark datasets. Our method achieves improvements of at least 15.4% on 5-way 1-shot and 8.7% on 5-way 5-shot tasks compared with the state-of-the-art methods. The performance of our method is also more stable across all the test tasks than that of the other methods.
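
The abstract does not say which closed-form solution the task-specific classifier uses. One common choice in meta-learning, assumed here purely for illustration, is ridge regression. The symbols $X$ (support features), $Y$ (one-hot labels), $\lambda$ (regularization weight), and $W$ (classifier weights) are not taken from the paper:

$$
W^{*} = \arg\min_{W} \lVert XW - Y \rVert_F^2 + \lambda \lVert W \rVert_F^2 = \left(X^{\top}X + \lambda I\right)^{-1} X^{\top} Y
$$

Under this assumption, adapting to a new task takes a single linear solve instead of iterative gradient steps, which would be consistent with the one-step adaptation the abstract describes.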

Cross-Modal Attention Alignment Network with Auxiliary Text Description for zero-shot sketch-based image retrieval

Jul 01, 2024

Abstract:In this paper, we study the problem of zero-shot sketch-based image retrieval (ZS-SBIR). Prior methods tackle the problem in a two-modality setting with only category labels or even no textual information involved. However, the growing prevalence of Large-scale pre-trained Language Models (LLMs), which have demonstrated great knowledge learned from web-scale data, gives us an opportunity to gather collective textual information. Our key innovation lies in the usage of text data as auxiliary information for images, thus leveraging the inherent zero-shot generalization ability that language offers. To this end, we propose an approach called Cross-Modal Attention Alignment Network with Auxiliary Text Description for zero-shot sketch-based image retrieval. The network consists of three components: (i) a Description Generation Module that generates textual descriptions for each training category by prompting an LLM with several interrogative sentences, (ii) a Feature Extraction Module that includes two ViTs for sketch and image data and a transformer for extracting sentence tokens for each training category, and (iii) a Cross-modal Alignment Module that exchanges the token features of both text-sketch and text-image pairs using a cross-attention mechanism and aligns the tokens locally and globally. Extensive experiments on three benchmark datasets show superior performance over the state-of-the-art ZS-SBIR methods.

Cross-target Stance Detection by Exploiting Target Analytical Perspectives

Jan 04, 2024

Abstract:Cross-target stance detection (CTSD) is an important task, which infers the attitude of the destination target by utilizing annotated data derived from the source target. One important approach in CTSD is to extract domain-invariant features to bridge the knowledge gap between multiple targets. However, the analysis of informal, short text structures and implicit expressions complicates the extraction of domain-invariant knowledge. In this paper, we propose a Multi-Perspective Prompt-Tuning (MPPT) model for CTSD that uses the analysis perspective as a bridge to transfer knowledge. First, we develop a two-stage instruct-based chain-of-thought method (TsCoT) to elicit target analysis perspectives and provide natural language explanations (NLEs) from multiple viewpoints by formulating instructions based on a large language model (LLM). Second, we propose a multi-perspective prompt-tuning framework (MultiPLN) to fuse the NLEs into the stance predictor. Extensive experimental results demonstrate the superiority of MPPT over the state-of-the-art baseline methods.

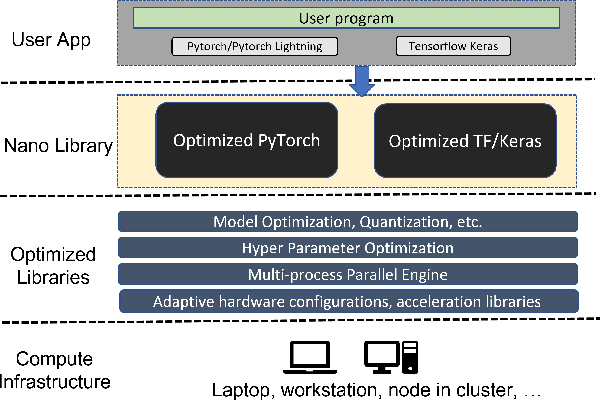

BigDL 2.0: Seamless Scaling of AI Pipelines from Laptops to Distributed Cluster

Apr 03, 2022

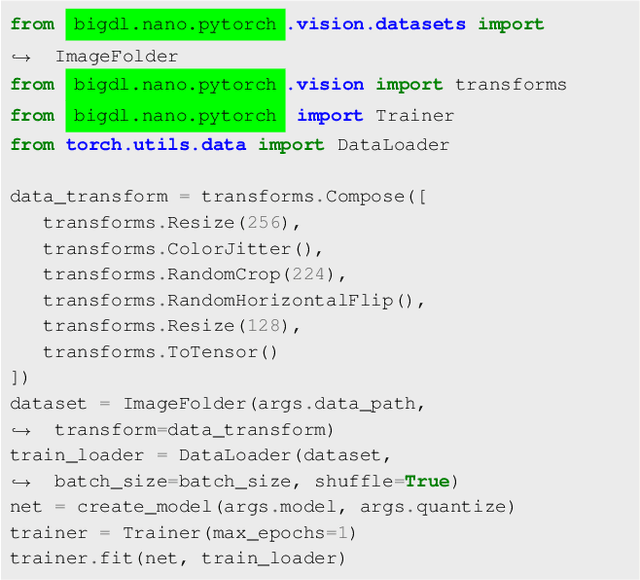

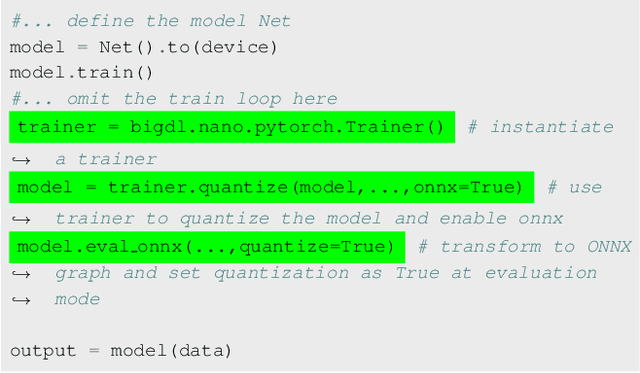

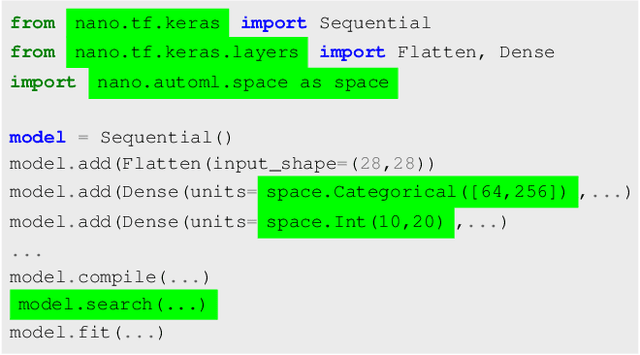

Abstract:Most AI projects start with a Python notebook running on a single laptop; however, one usually needs to go through a mountain of pains to scale it to handle larger datasets (for both experimentation and production deployment). These usually entail many manual and error-prone steps for the data scientists to fully take advantage of the available hardware resources (e.g., SIMD instructions, multi-processing, quantization, memory allocation optimization, data partitioning, distributed computing, etc.). To address this challenge, we have open sourced BigDL 2.0 at https://github.com/intel-analytics/BigDL/ under the Apache 2.0 license (combining the original BigDL and Analytics Zoo projects); using BigDL 2.0, users can simply build conventional Python notebooks on their laptops (with possible AutoML support), which can then be transparently accelerated on a single node (with up to 9.6x speedup in our experiments), and seamlessly scaled out to a large cluster (across several hundred servers in real-world use cases). BigDL 2.0 has already been adopted by many real-world users (such as Mastercard, Burger King, Inspur, etc.) in production.

A Multi-Agent Reinforcement Learning Framework for Off-Policy Evaluation in Two-sided Markets

Feb 21, 2022

Abstract:Two-sided markets such as ride-sharing companies often involve a group of subjects who are making sequential decisions across time and/or location. With the rapid development of smartphones and the internet of things, they have substantially transformed the transportation landscape of human beings. In this paper we consider large-scale fleet management in ride-sharing companies that involve multiple units in different areas receiving sequences of products (or treatments) over time. Major technical challenges, such as policy evaluation, arise in those studies because (i) spatial and temporal proximities induce interference between locations and times; and (ii) the large number of locations results in the curse of dimensionality. To address both challenges simultaneously, we introduce a multi-agent reinforcement learning (MARL) framework for carrying out policy evaluation in these studies. We propose novel estimators for mean outcomes under different products that are consistent despite the high dimensionality of the state-action space. The proposed estimator performs favorably in simulation experiments. We further illustrate our method using a real dataset obtained from a two-sided marketplace company to evaluate the effects of applying different subsidizing policies. A Python implementation of the proposed method is available at https://github.com/RunzheStat/CausalMARL.

DTDN: Dual-task De-raining Network

Aug 21, 2020

Abstract:Removing rain streaks from rainy images is necessary for many tasks in computer vision, such as object detection and recognition. It needs to address two mutually exclusive objectives: removing rain streaks and preserving realistic details. Balancing them is critical for de-raining methods. We propose an end-to-end network, called dual-task de-raining network (DTDN), consisting of two sub-networks, a generative adversarial network (GAN) and a convolutional neural network (CNN), to remove rain streaks by coordinating the two mutually exclusive objectives self-adaptively. DTDN-GAN is mainly used to remove structural rain streaks, and DTDN-CNN is designed to recover details in original images. We also design a training algorithm to train these two sub-networks of DTDN alternately, which share the same weights but use different training sets. We further enrich two existing datasets to approximate the distribution of real rain streaks. Experimental results show that our method outperforms several recent state-of-the-art methods on both benchmark testing datasets and real rainy images.

Deep Robust Multilevel Semantic Cross-Modal Hashing

Feb 07, 2020

Abstract:Hashing-based cross-modal retrieval has recently made significant progress. However, straightforwardly embedding data from different modalities into a joint Hamming space will inevitably produce false codes due to the intrinsic modality discrepancy and noise. We present a novel Robust Multilevel Semantic Hashing (RMSH) method for more accurate cross-modal retrieval. It seeks to preserve fine-grained similarity among data with rich semantics, while explicitly requiring distances between dissimilar points to be larger than a specific value for strong robustness. For this, we give an effective bound of this value based on information coding-theoretic analysis, and the above goals are embodied in a margin-adaptive triplet loss. Furthermore, we introduce pseudo-codes via fusing multiple hash codes to explore seldom-seen semantics, alleviating the sparsity problem of similarity information. Experiments on three benchmarks show the validity of the derived bounds, and our method achieves state-of-the-art performance.
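
As a rough illustration of a triplet loss over hash codes, the sketch below uses a plain hinge on Hamming distances. It is not the RMSH loss: the function names are hypothetical, and a fixed `margin` argument stands in for the adaptive bound derived in the paper, which the abstract does not state.

```python
def hamming_distance(code_a, code_b):
    """Count the positions at which two equal-length hash codes differ."""
    if len(code_a) != len(code_b):
        raise ValueError("hash codes must have the same length")
    return sum(1 for a, b in zip(code_a, code_b) if a != b)


def triplet_loss(anchor, positive, negative, margin):
    """Hinge-style triplet loss on Hamming distances.

    The loss is zero once the negative is at least `margin` bits farther
    from the anchor than the positive is. `margin` is an illustrative
    constant, not the coding-theoretic bound from the paper.
    """
    d_pos = hamming_distance(anchor, positive)
    d_neg = hamming_distance(anchor, negative)
    return max(0, d_pos - d_neg + margin)


if __name__ == "__main__":
    anchor = [1, 1, 1, 1]
    positive = [1, 1, 1, -1]    # 1 bit away from the anchor
    negative = [-1, -1, -1, 1]  # 3 bits away from the anchor
    print(triplet_loss(anchor, positive, negative, margin=2))
    print(triplet_loss(anchor, positive, negative, margin=4))
```

A larger margin forces dissimilar codes farther apart, which is the robustness requirement the abstract describes. A trainable version would operate on relaxed, real-valued codes rather than discrete bits.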

Less Memory, Faster Speed: Refining Self-Attention Module for Image Reconstruction

May 20, 2019

Abstract:Self-attention (SA) mechanisms can effectively capture global dependencies in deep neural networks, and have been applied successfully to natural language processing and image processing. However, SA modules for image reconstruction have high time and space complexity, which restricts their application to higher-resolution images. In this paper, we refine the SA module in self-attention generative adversarial networks (SAGAN) by adapting a non-local operation, revising the connectivity among the units in the SA module and re-implementing its computational pattern, such that its time and space complexity is reduced from $\text{O}(n^2)$ to $\text{O}(n)$ while it remains equivalent to the original SA module. Further, we explore the principles behind the module and discover that our module is a special kind of channel attention mechanism. Experimental results on two benchmark datasets for image reconstruction verify that, under the same computational environment, the two models achieve comparable effectiveness for image reconstruction, but the proposed one runs faster and takes up less memory space.
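
One way such an equivalence can arise is through matrix associativity. The sketch below assumes a softmax-free, dot-product form of attention, which the abstract does not confirm, and uses hypothetical function names. With $n$ positions and feature width $d$, computing $(QK^{\top})V$ builds an $n \times n$ matrix, while $Q(K^{\top}V)$ builds only a $d \times d$ matrix and gives the same result.

```python
def transpose(m):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    b_cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]


def attention_quadratic(q, k, v):
    """Compute (Q K^T) V; the intermediate matrix is n x n."""
    return matmul(matmul(q, transpose(k)), v)


def attention_linear(q, k, v):
    """Compute Q (K^T V); the intermediate matrix is d x d."""
    return matmul(q, matmul(transpose(k), v))


if __name__ == "__main__":
    # n = 3 positions, d = 2 features; integer entries keep the check exact.
    q = [[1, 2], [3, 4], [5, 6]]
    k = [[1, 0], [0, 1], [1, 1]]
    v = [[1, 2], [3, 4], [5, 6]]
    print(attention_quadratic(q, k, v) == attention_linear(q, k, v))
```

For fixed $d$, the second ordering costs $\text{O}(n d^2)$ time and never stores an $n \times n$ map, so it is linear in $n$. This reordering is only valid without a row-wise softmax between the two products.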
