Shengsheng Huang

BigDL 2.0: Seamless Scaling of AI Pipelines from Laptops to Distributed Cluster

Apr 03, 2022

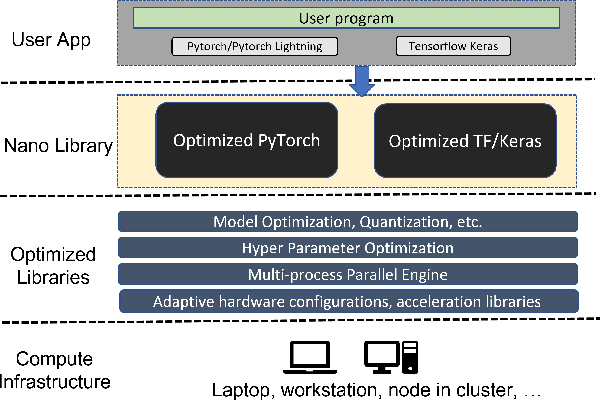

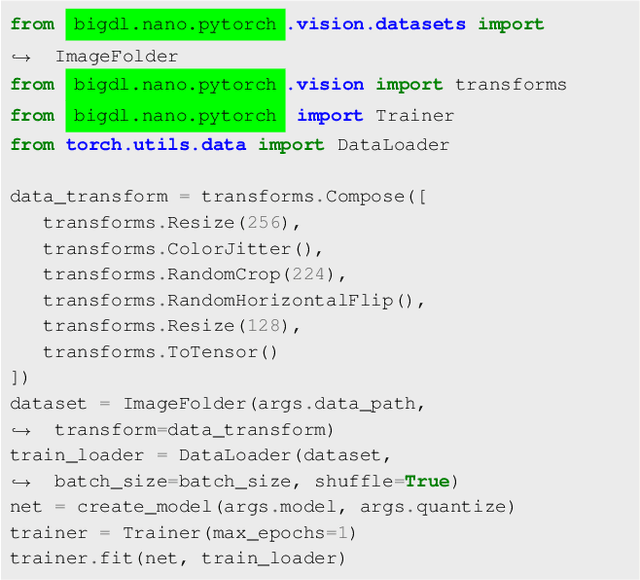

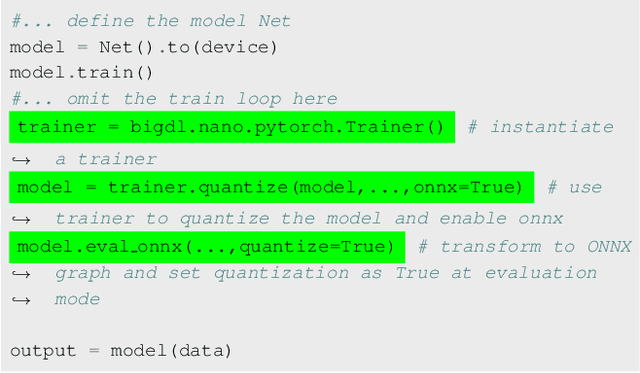

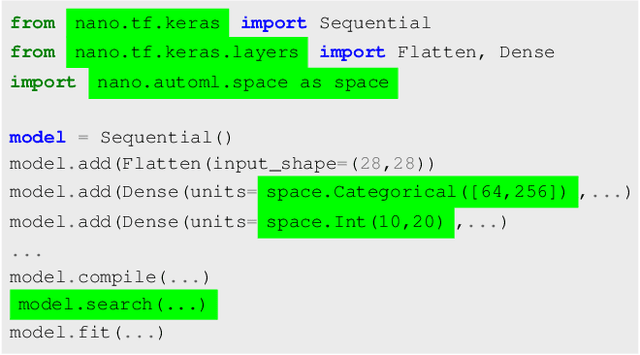

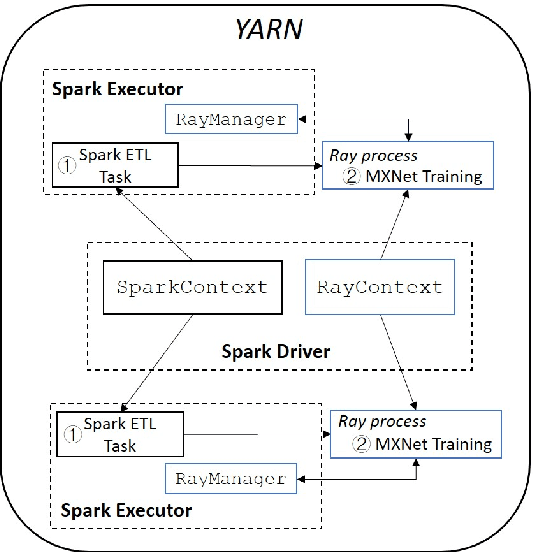

Abstract: Most AI projects start with a Python notebook running on a single laptop; however, scaling it to handle larger datasets (for both experimentation and production deployment) is usually painful. Doing so typically entails many manual and error-prone steps before data scientists can take full advantage of the available hardware resources (e.g., SIMD instructions, multi-processing, quantization, memory allocation optimization, data partitioning, distributed computing, etc.). To address this challenge, we have open sourced BigDL 2.0 at https://github.com/intel-analytics/BigDL/ under the Apache 2.0 license (combining the original BigDL and Analytics Zoo projects); using BigDL 2.0, users can simply build conventional Python notebooks on their laptops (with possible AutoML support), which can then be transparently accelerated on a single node (with up to 9.6x speedup in our experiments), and seamlessly scaled out to a large cluster (across several hundred servers in real-world use cases). BigDL 2.0 has already been adopted in production by many real-world users (such as Mastercard, Burger King, Inspur, etc.).

Context-Aware Drive-thru Recommendation Service at Fast Food Restaurants

Oct 13, 2020

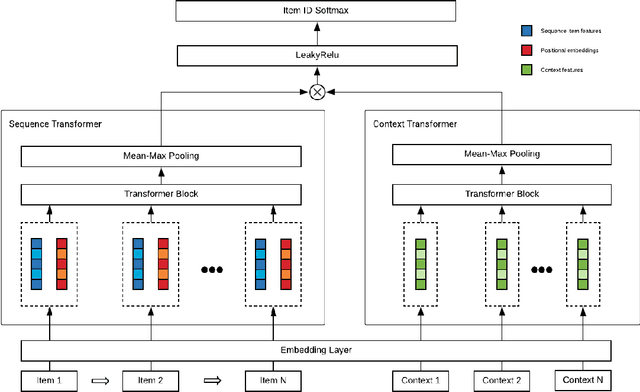

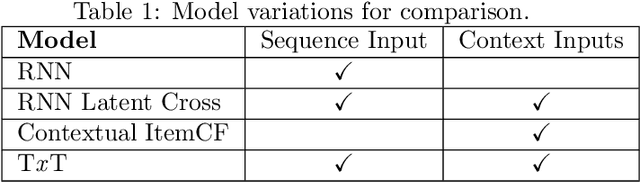

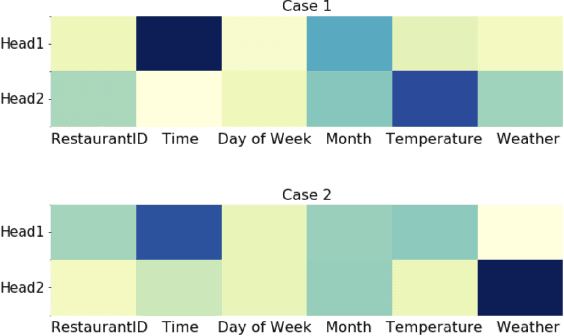

Abstract: Drive-thru is a popular sales channel in the fast food industry where consumers can make food purchases without leaving their cars. Drive-thru recommendation systems allow restaurants to display food recommendations on the digital menu board as guests are making their orders. Popular recommendation models in eCommerce scenarios rely on user attributes (such as user profiles or purchase history) to generate recommendations, but such information is hard to obtain in the drive-thru use case. Thus, in this paper, we propose a new recommendation model, Transformer Cross Transformer (TxT), which exploits guest order behavior and contextual features (such as location, time, and weather) using Transformer encoders for drive-thru recommendations. Empirical results show that our TxT model achieves superior results in Burger King's drive-thru production environment compared with existing recommendation solutions. In addition, we implement a unified system to run end-to-end big data analytics and deep learning workloads on the same cluster. We find that in practice, maintaining a single big data cluster for the entire pipeline is more efficient and cost-saving. Our recommendation system is not only beneficial for drive-thru scenarios but can also be generalized to other customer interaction channels.

BigDL: A Distributed Deep Learning Framework for Big Data

Jun 25, 2018

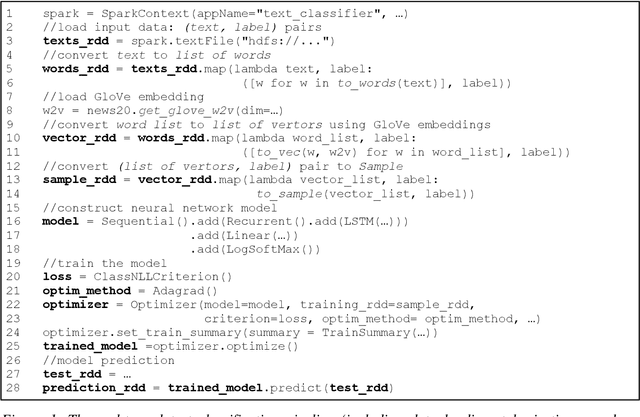

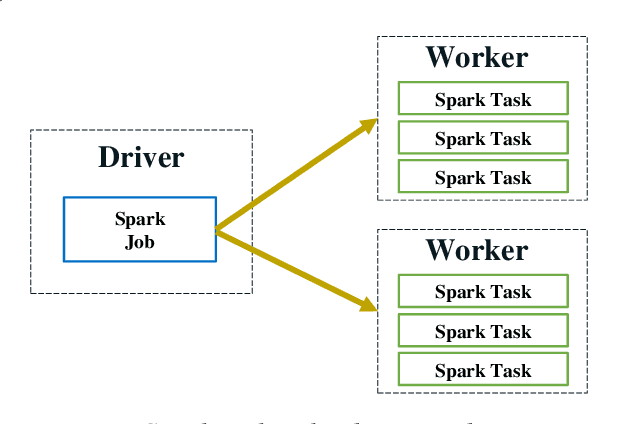

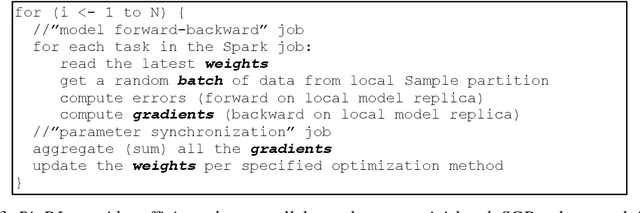

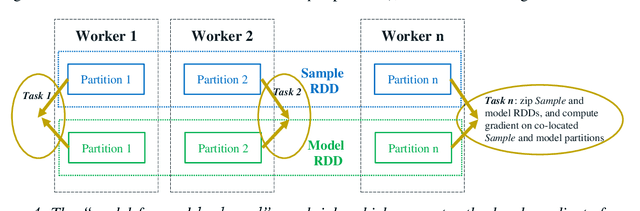

Abstract: In this paper, we present BigDL, a distributed deep learning framework for Big Data platforms and workflows. It is implemented on top of Apache Spark, and allows users to write their deep learning applications as standard Spark programs (running directly on large-scale big data clusters in a distributed fashion). It provides an expressive, "data-analytics integrated" deep learning programming model, so that users can easily build end-to-end analytics + AI pipelines under a unified programming paradigm. By implementing an AllReduce-like operation using existing primitives in Spark (e.g., shuffle, broadcast, and in-memory data persistence), it also provides a highly efficient "parameter server" style architecture, so as to achieve highly scalable, data-parallel distributed training. Since its initial open source release, BigDL users have built many analytics and deep learning applications (e.g., object detection, sequence-to-sequence generation, visual similarity, neural recommendations, fraud detection, etc.) on Spark.
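As a rough illustration of what an AllReduce-like, "parameter server" style synchronization round could look like, the following pure-Python sketch simulates the idea without Spark. It assumes one particular arrangement that the abstract does not spell out: the parameter vector is cut into one slice per worker, a shuffle-like step lets each task gather its slice of every worker's gradient, and a broadcast-like step makes the updated slices visible to everyone. The function name `sliced_allreduce`, its parameters, and the plain averaged SGD update are hypothetical choices for this sketch, not BigDL's API.

```python
from typing import List


def sliced_allreduce(
    worker_grads: List[List[float]],
    weights: List[float],
    lr: float,
) -> List[float]:
    """Simulate one synchronization round for len(worker_grads) workers.

    Hypothetical helper for illustration only; not part of BigDL.
    """
    n_workers = len(worker_grads)
    n_params = len(weights)

    # Slice k covers the index range [bounds[k], bounds[k + 1]).
    bounds = [k * n_params // n_workers for k in range(n_workers + 1)]

    updated_slices = []
    for k in range(n_workers):
        lo, hi = bounds[k], bounds[k + 1]

        # "Shuffle" step: task k reads slice k of every worker's gradient
        # and averages it element-wise.
        avg = [
            sum(grad[i] for grad in worker_grads) / n_workers
            for i in range(lo, hi)
        ]

        # Task k owns slice k of the weights and applies a plain SGD update.
        updated_slices.append(
            [weights[i] - lr * g for i, g in zip(range(lo, hi), avg)]
        )

    # "Broadcast" step: every worker reads back all updated slices,
    # which together form the full weight vector for the next iteration.
    return [w for piece in updated_slices for w in piece]


if __name__ == "__main__":
    grads = [[1.0, 2.0, 3.0, 4.0], [3.0, 2.0, 1.0, 0.0]]
    print(sliced_allreduce(grads, [0.0, 0.0, 0.0, 0.0], lr=0.5))
```

Because each task aggregates only its own slice, no single node has to receive every worker's full gradient, which is the property that makes this style of synchronization attractive for data-parallel training.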
