Dian Jin

Efficient Equivariant High-Order Crystal Tensor Prediction via Cartesian Local-Environment Many-Body Coupling

Feb 04, 2026

Abstract:End-to-end prediction of high-order crystal tensor properties from atomic structures remains challenging: while spherical-harmonic equivariant models are expressive, their Clebsch-Gordan tensor products incur substantial compute and memory costs for higher-order targets. We propose the Cartesian Environment Interaction Tensor Network (CEITNet), an approach that constructs a multi-channel Cartesian local environment tensor for each atom and performs flexible many-body mixing via a learnable channel-space interaction. By performing learning in channel space and using Cartesian tensor bases to assemble equivariant outputs, CEITNet enables efficient construction of high-order tensors. Across benchmark datasets for order-2 dielectric, order-3 piezoelectric, and order-4 elastic tensor prediction, CEITNet surpasses prior high-order prediction methods on key accuracy criteria while offering high computational efficiency.

Magnitude-Modulated Equivariant Adapter for Parameter-Efficient Fine-Tuning of Equivariant Graph Neural Networks

Nov 10, 2025

Abstract:Pretrained equivariant graph neural networks based on spherical harmonics offer efficient and accurate alternatives to computationally expensive ab-initio methods, yet adapting them to new tasks and chemical environments still requires fine-tuning. Conventional parameter-efficient fine-tuning (PEFT) techniques, such as Adapters and LoRA, typically break symmetry, making them incompatible with those equivariant architectures. ELoRA, recently proposed, is the first equivariant PEFT method. It achieves improved parameter efficiency and performance on many benchmarks. However, the relatively high degrees of freedom it retains within each tensor order can still perturb pretrained feature distributions and ultimately degrade performance. To address this, we present Magnitude-Modulated Equivariant Adapter (MMEA), a novel equivariant fine-tuning method which employs lightweight scalar gating to modulate feature magnitudes on a per-order and per-multiplicity basis. We demonstrate that MMEA preserves strict equivariance and, across multiple benchmarks, consistently improves energy and force predictions to state-of-the-art levels while training fewer parameters than competing approaches. These results suggest that, in many practical scenarios, modulating channel magnitudes is sufficient to adapt equivariant models to new chemical environments without breaking symmetry, pointing toward a new paradigm for equivariant PEFT design.
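The claim that scalar gating keeps equivariance intact can be checked on a toy case. The sketch below is not the MMEA implementation: it assumes order-1 features stored as plain 3-vectors (one per channel), uses fixed hypothetical gate values in place of learned ones, and tests only a rotation about the z-axis. It shows that multiplying each channel by a single scalar commutes with the rotation, whereas scaling one Cartesian component does not.

```python
import math

def rotate_z(v, angle):
    """Rotate a 3-vector about the z-axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = v
    return (c * x - s * y, s * x + c * y, z)

def gate(channels, gates):
    """Scale every channel (a 3-vector) by its own scalar gate."""
    return [tuple(g * comp for comp in v) for g, v in zip(gates, channels)]

def scale_x_only(channels, factor):
    """Non-equivariant contrast: rescale only the x component."""
    return [(factor * v[0], v[1], v[2]) for v in channels]

def max_abs_gap(a, b):
    """Largest component-wise difference between two channel lists."""
    return max(abs(p - q) for u, v in zip(a, b) for p, q in zip(u, v))

# Hypothetical order-1 features (two channels) and hypothetical gate values.
channels = [(1.0, 0.0, 0.0), (0.3, -0.7, 0.2)]
gates = [0.5, 1.8]
angle = math.pi / 2

# Scalar gating: gate-then-rotate matches rotate-then-gate.
max_gap = max_abs_gap(
    [rotate_z(v, angle) for v in gate(channels, gates)],
    gate([rotate_z(v, angle) for v in channels], gates),
)

# Component-wise scaling: the two orders of operation disagree.
broken_gap = max_abs_gap(
    [rotate_z(v, angle) for v in scale_x_only(channels, 2.0)],
    scale_x_only([rotate_z(v, angle) for v in channels], 2.0),
)
```

Here `max_gap` stays at floating-point noise while `broken_gap` is of order one, which is the toy-scale version of why a per-channel magnitude gate is compatible with an equivariant backbone.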

Zero-Effort Image-to-Music Generation: An Interpretable RAG-based VLM Approach

Sep 26, 2025

Abstract:Recently, Image-to-Music (I2M) generation has garnered significant attention, with potential applications in fields such as gaming, advertising, and multi-modal art creation. However, due to the ambiguous and subjective nature of I2M tasks, most end-to-end methods lack interpretability, leaving users puzzled about the generation results. Even methods based on emotion mapping face controversy, as emotion represents only a singular aspect of art. Additionally, most learning-based methods require substantial computational resources and large datasets for training, hindering accessibility for common users. To address these challenges, we propose the first Vision Language Model (VLM)-based I2M framework that offers high interpretability and low computational cost. Specifically, we utilize ABC notation to bridge the text and music modalities, enabling the VLM to generate music using natural language. We then apply multi-modal Retrieval-Augmented Generation (RAG) and self-refinement techniques to allow the VLM to produce high-quality music without external training. Furthermore, we leverage the generated motivations in text and the attention maps from the VLM to provide explanations for the generated results in both text and image modalities. To validate our method, we conduct both human studies and machine evaluations, where our method outperforms others in terms of music quality and music-image consistency, indicating promising results. Our code is available at https://github.com/RS2002/Image2Music.

One-shot Robust Federated Learning of Independent Component Analysis

May 26, 2025

Abstract:This paper investigates a general robust one-shot aggregation framework for the distributed and federated Independent Component Analysis (ICA) problem. We propose a geometric median-based aggregation algorithm that leverages $k$-means clustering to resolve the permutation ambiguity in local client estimations. Our method first performs $k$-means to partition client-provided estimators into clusters and then aggregates estimators within each cluster using the geometric median. This approach provably remains effective even in highly heterogeneous scenarios where at most half of the clients can observe only a minimal number of samples. The key theoretical contribution lies in the combined analysis of the geometric median's error bound, aided by sample quantiles, and the maximum misclustering rate of the aforementioned $k$-means solution. The effectiveness of the proposed approach is further supported by simulation studies conducted under various heterogeneous settings.
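The within-cluster aggregation step can be illustrated with a small sketch. This is not the paper's algorithm: it omits the $k$-means step, assumes the estimates already belong to one cluster, and uses the standard Weiszfeld iteration as one common way to compute a geometric median. The client estimates are made-up numbers, with one deliberately corrupted, to contrast the geometric median with the plain mean.

```python
import math

def geometric_median(points, iters=200, eps=1e-9):
    """Approximate the geometric median of equal-length vectors (Weiszfeld iteration)."""
    dim = len(points[0])
    # Start from the coordinate-wise mean.
    current = [sum(p[i] for p in points) / len(points) for i in range(dim)]
    for _ in range(iters):
        # Each point is weighted by the inverse of its distance to the current guess;
        # tiny distances are clamped so the weight stays finite.
        weights = [1.0 / max(math.dist(p, current), eps) for p in points]
        total = sum(weights)
        updated = [
            sum(w * p[i] for w, p in zip(weights, points)) / total
            for i in range(dim)
        ]
        if math.dist(updated, current) < eps:
            return updated
        current = updated
    return current

# Hypothetical client estimates of one component: four roughly agree, one is corrupted.
estimates = [[1.0, 0.0], [0.9, 0.1], [1.1, -0.1], [1.0, 0.05], [50.0, 50.0]]

mean = [sum(p[i] for p in estimates) / len(estimates) for i in range(2)]
median = geometric_median(estimates)

# Distance of each aggregate from the value the honest clients agree on.
mean_error = math.dist(mean, [1.0, 0.0])
median_error = math.dist(median, [1.0, 0.0])
```

In this toy setting the single corrupted client drags the mean far from the consensus, while the geometric median stays close to it, which is the robustness property the aggregation relies on.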

Community detection for Contexual-LSBM: Theoretical limitation on misclassfication ratio and effecient algorithm

Jan 19, 2025

Abstract:The integration of both network information and node attribute information has recently gained significant attention in the context of community recovery problems. In this work, we address the task of determining the optimal classification rate for the Label-SBM (LSBM) model with node attribute information. Specifically, we derive the optimal lower bound, which is characterized by the Chernoff-Hellinger divergence for a general LSBM network model with Gaussian node attributes. Additionally, we highlight the connection between the divergence $D(\boldsymbol{\alpha}, \mathbf{P}, \boldsymbol{\mu})$ in our model and those introduced in \cite{yun2016optimal} and \cite{lu2016statistical}. We also present a consistent algorithm based on a spectral method for the proposed aggregated latent factor model.

Just a Few Glances: Open-Set Visual Perception with Image Prompt Paradigm

Dec 14, 2024

Abstract:To break through the limitations of pre-training models on fixed categories, Open-Set Object Detection (OSOD) and Open-Set Segmentation (OSS) have attracted a surge of interest from researchers. Inspired by large language models, mainstream OSOD and OSS methods generally utilize text as a prompt, achieving remarkable performance. Following the SAM paradigm, some researchers use visual prompts, such as points, boxes, and masks that cover detection or segmentation targets. Although these two prompt paradigms exhibit excellent performance, they also reveal inherent limitations. On the one hand, it is difficult to accurately describe the characteristics of specialized categories using textual descriptions. On the other hand, existing visual prompt paradigms rely heavily on multi-round human interaction, which hinders their application to fully automated pipelines. To address the above issues, we propose a novel prompt paradigm in OSOD and OSS, namely the Image Prompt Paradigm. This new prompt paradigm enables detecting or segmenting specialized categories without multi-round human intervention. To achieve this goal, the proposed image prompt paradigm uses just a few image instances as prompts, and we propose a novel framework named MI Grounding for this new paradigm. In this framework, high-quality image prompts are automatically encoded, selected and fused, achieving single-stage and non-interactive inference. We conduct extensive experiments on public datasets, showing that MI Grounding achieves competitive performance on OSOD and OSS benchmarks compared to text prompt paradigm methods and visual prompt paradigm methods. Moreover, MI Grounding can greatly outperform existing methods on our constructed specialized ADR50K dataset.

SAG: Style-Aligned Article Generation via Model Collaboration

Oct 04, 2024

Abstract:Large language models (LLMs) have increased the demand for personalized and stylish content generation. However, closed-source models like GPT-4 present limitations in optimization opportunities, while the substantial training costs and inflexibility of open-source alternatives, such as Qwen-72B, pose considerable challenges. Conversely, small language models (SLMs) struggle with understanding complex instructions and transferring learned capabilities to new contexts, often exhibiting more pronounced limitations. In this paper, we present a novel collaborative training framework that leverages the strengths of both LLMs and SLMs for style article generation, surpassing the performance of either model alone. We freeze the LLMs to harness their robust instruction-following capabilities and subsequently apply supervised fine-tuning on the SLM using style-specific data. Additionally, we introduce a self-improvement method to enhance style consistency. Our new benchmark, NoteBench, thoroughly evaluates style-aligned generation. Extensive experiments show that our approach achieves state-of-the-art performance, with improvements of 0.78 in ROUGE-L and 0.55 in BLEU-4 scores compared to GPT-4, while maintaining a low hallucination rate with respect to factuality and faithfulness.

Optimal vintage factor analysis with deflation varimax

Oct 16, 2023

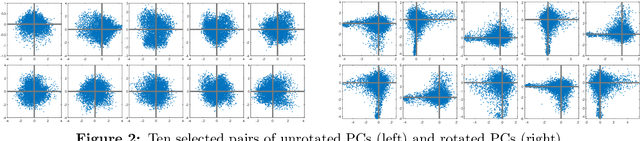

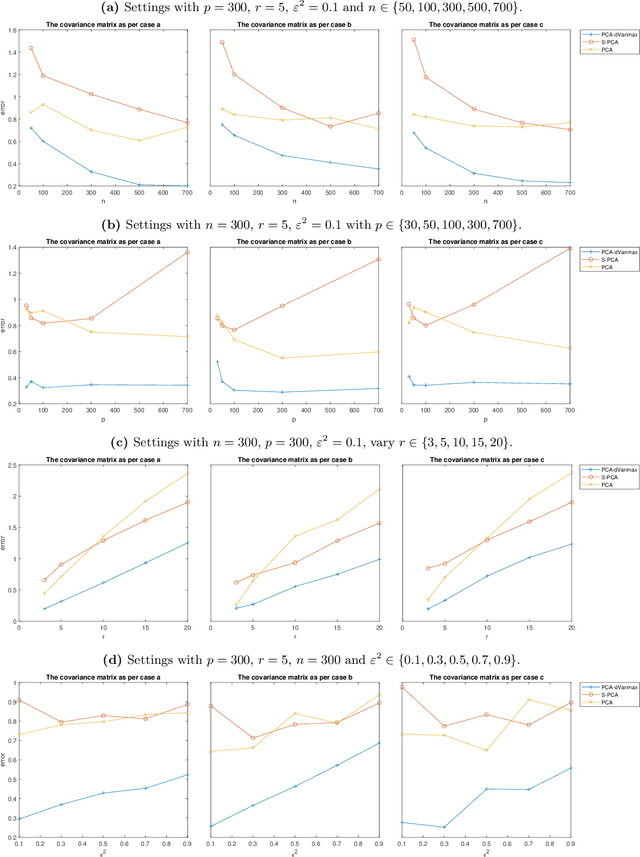

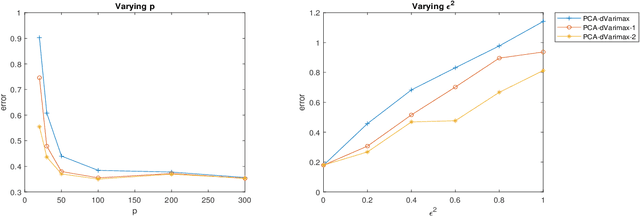

Abstract:Vintage factor analysis is one important type of factor analysis that aims to first find a low-dimensional representation of the original data, and then to seek a rotation such that the rotated low-dimensional representation is scientifically meaningful. Perhaps the most widely used vintage factor analysis is Principal Component Analysis (PCA) followed by the varimax rotation. Despite its popularity, little theoretical guarantee can be provided, mainly because the varimax rotation requires solving a non-convex optimization problem over the set of orthogonal matrices. In this paper, we propose a deflation varimax procedure that solves each row of an orthogonal matrix sequentially. In addition to its net computational gain and flexibility, we are able to fully establish theoretical guarantees for the proposed procedure in a broad context. Adopting this new varimax approach as the second step after PCA, we further analyze this two-step procedure under a general class of factor models. Our results show that it estimates the factor loading matrix at the optimal rate when the signal-to-noise ratio (SNR) is moderate or large. In the low SNR regime, we offer a possible improvement over using PCA and the deflation procedure when the additive noise under the factor model is structured. The modified procedure is shown to be optimal in all SNR regimes. Our theory is valid for finite samples and allows the number of latent factors to grow with the sample size as well as the ambient dimension to grow with, or even exceed, the sample size. Extensive simulation and real data analysis further corroborate our theoretical findings.

Bilevel Fast Scene Adaptation for Low-Light Image Enhancement

Jun 02, 2023

Abstract:Enhancing images in low-light scenes is a challenging but widely studied task in computer vision. Mainstream learning-based methods mainly acquire the enhancement model by learning the data distribution from specific scenes, causing poor adaptability (even failure) in real-world scenarios that have never been encountered before. The main obstacle lies in the difficulty of modeling the distribution discrepancy across different scenes. To remedy this, we first explore relationships between diverse low-light scenes based on statistical analysis, i.e., the network parameters of the encoder trained on different data distributions are close. We introduce the bilevel paradigm to model the above latent correspondence from the perspective of hyperparameter optimization. A bilevel learning framework is constructed to endow the encoder with scene-irrelevant generality across diverse scenes (i.e., freezing the encoder in the adaptation and testing phases). Further, we define a reinforced bilevel learning framework to provide a meta-initialization for the scene-specific decoder to further improve visual quality. Moreover, to improve practicability, we establish a Retinex-induced architecture with adaptive denoising and apply our learning framework to acquire its parameters using two training losses, in supervised and unsupervised forms. Extensive experimental evaluations on multiple datasets verify the adaptability and competitive performance of our method against existing state-of-the-art works. The code and datasets will be available at https://github.com/vis-opt-group/BL.

WebCPM: Interactive Web Search for Chinese Long-form Question Answering

May 23, 2023

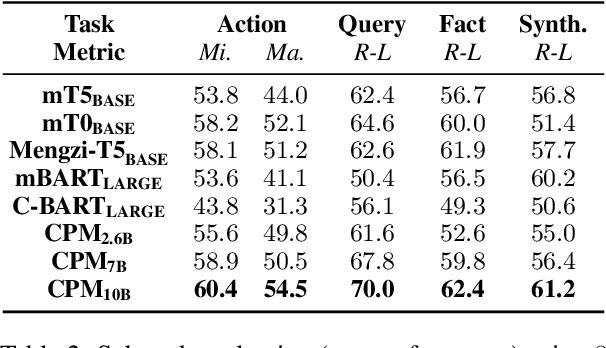

Abstract:Long-form question answering (LFQA) aims at answering complex, open-ended questions with detailed, paragraph-length responses. The de facto paradigm of LFQA necessitates two procedures: information retrieval, which searches for relevant supporting facts, and information synthesis, which integrates these facts into a coherent answer. In this paper, we introduce WebCPM, the first Chinese LFQA dataset. One unique feature of WebCPM is that its information retrieval is based on interactive web search, which engages with a search engine in real time. Following WebGPT, we develop a web search interface. We recruit annotators to search for relevant information using our interface and then answer questions. Meanwhile, the web search behaviors of our annotators are recorded. In total, we collect 5,500 high-quality question-answer pairs, together with 14,315 supporting facts and 121,330 web search actions. We fine-tune pre-trained language models to imitate human behaviors for web search and to generate answers based on the collected facts. Our LFQA pipeline, built on these fine-tuned models, generates answers that are no worse than human-written ones in 32.5% and 47.5% of the cases on our dataset and DuReader, respectively.
