David Flynn

A Survey on Semantic Communication for Vision: Categories, Frameworks, Enabling Techniques, and Applications

Jan 29, 2026

Abstract: Semantic communication (SemCom) emerges as a transformative paradigm for traffic-intensive visual data transmission, shifting focus from raw data to meaningful content transmission and relieving the increasing pressure on communication resources. However, achieving SemCom poses challenges in accurate semantic quantization for visual data, robust semantic extraction and reconstruction under diverse tasks and goals, transceiver coordination with effective knowledge utilization, and adaptation to unpredictable wireless communication environments. In this paper, we present a systematic review of SemCom for visual data transmission (SemCom-Vision), wherein an interdisciplinary analysis integrating computer vision (CV) and communication engineering is conducted to provide comprehensive guidelines for the machine learning (ML)-empowered SemCom-Vision design. Specifically, this survey first elucidates the basics and key concepts of SemCom. Then, we introduce a novel classification perspective to categorize existing SemCom-Vision approaches as semantic preservation communication (SPC), semantic expansion communication (SEC), and semantic refinement communication (SRC) based on communication goals interpreted through semantic quantization schemes. Moreover, this survey articulates the ML-based encoder-decoder models and training algorithms for each SemCom-Vision category, followed by knowledge structure and utilization strategies. Finally, we discuss potential SemCom-Vision applications.

Free Energy-Inspired Cognitive Risk Integration for AV Navigation in Pedestrian-Rich Environments

Jul 28, 2025

Abstract: Recent advances in autonomous vehicle (AV) behavior planning have shown impressive social interaction capabilities with other road users. However, achieving human-like prediction and decision-making in interactions with vulnerable road users remains a key challenge in complex multi-agent interactive environments. Existing research focuses primarily on crowd navigation for small mobile robots, which cannot be directly applied to AVs due to inherent differences in their decision-making strategies and dynamic boundaries. Moreover, pedestrians in these multi-agent simulations follow fixed behavior patterns that cannot dynamically respond to AV actions. To overcome these limitations, this paper proposes a novel framework for modeling interactions between the AV and multiple pedestrians. In this framework, a cognitive process modeling approach inspired by the Free Energy Principle is integrated into both the AV and pedestrian models to simulate more realistic interaction dynamics. Specifically, the proposed pedestrian Cognitive-Risk Social Force Model adjusts goal-directed and repulsive forces using a fused measure of cognitive uncertainty and physical risk to produce human-like trajectories. Meanwhile, the AV leverages this fused risk to construct a dynamic, risk-aware adjacency matrix for a Graph Convolutional Network within a Soft Actor-Critic architecture, allowing it to make more reasonable and informed decisions. Simulation results indicate that our proposed framework effectively improves safety, efficiency, and smoothness of AV navigation compared to the state-of-the-art method.

Preference-Driven Active 3D Scene Representation for Robotic Inspection in Nuclear Decommissioning

Apr 02, 2025

Abstract: Active 3D scene representation is pivotal in modern robotics applications, including remote inspection, manipulation, and telepresence. Traditional methods primarily optimize geometric fidelity or rendering accuracy, but often overlook operator-specific objectives, such as safety-critical coverage or task-driven viewpoints. This limitation leads to suboptimal viewpoint selection, particularly in constrained environments such as nuclear decommissioning. To bridge this gap, we introduce a novel framework that integrates expert operator preferences into the active 3D scene representation pipeline. Specifically, we employ Reinforcement Learning from Human Feedback (RLHF) to guide robotic path planning, reshaping the reward function based on expert input. To capture operator-specific priorities, we conduct interactive choice experiments that evaluate user preferences in 3D scene representation. We validate our framework using a UR3e robotic arm for reactor tile inspection in a nuclear decommissioning scenario. Compared to baseline methods, our approach enhances scene representation while optimizing trajectory efficiency. The RLHF-based policy consistently outperforms random selection, prioritizing task-critical details. By unifying explicit 3D geometric modeling with implicit human-in-the-loop optimization, this work establishes a foundation for adaptive, safety-critical robotic perception systems, paving the way for enhanced automation in nuclear decommissioning, remote maintenance, and other high-risk environments.

Evaluating Scenario-based Decision-making for Interactive Autonomous Driving Using Rational Criteria: A Survey

Jan 03, 2025

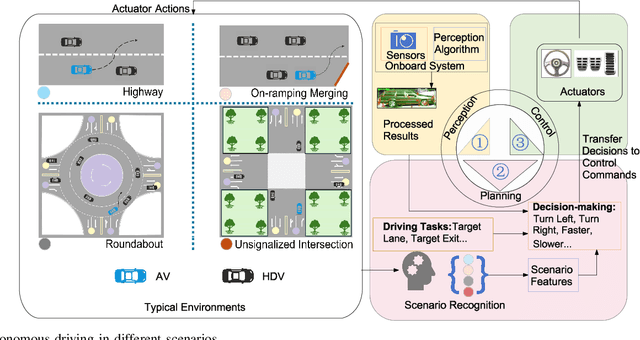

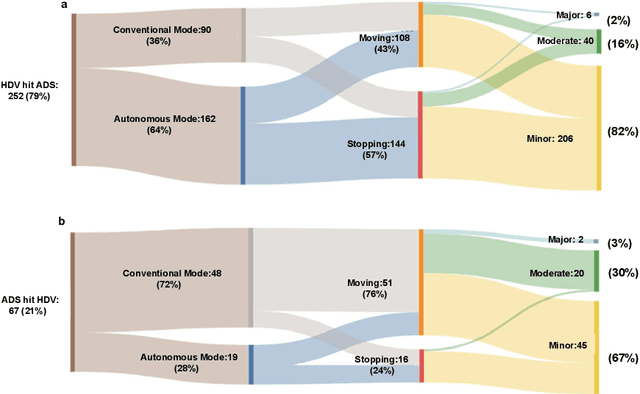

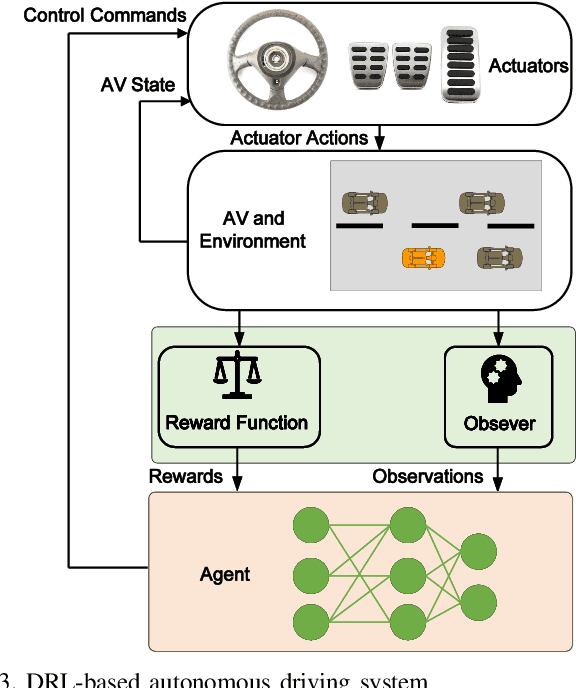

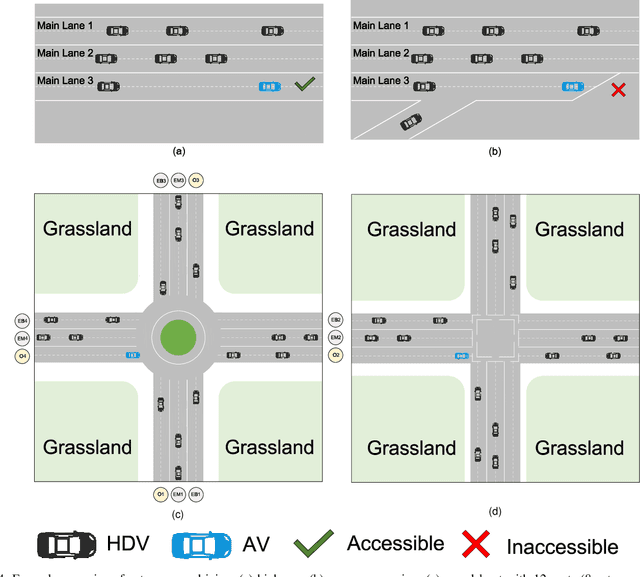

Abstract: Autonomous vehicles (AVs) can significantly promote advances in road transport mobility in terms of safety, reliability, and decarbonization. However, ensuring safety and efficiency in interactive driving within dynamic and diverse environments is still a primary barrier to large-scale AV adoption. In recent years, deep reinforcement learning (DRL) has emerged as an advanced AI-based approach, enabling AVs to learn decision-making strategies adaptively from data and interactions. DRL strategies are better suited than traditional rule-based methods for handling complex, dynamic, and unpredictable driving environments due to their adaptivity. However, varying driving scenarios present distinct challenges, such as avoiding obstacles on highways and reaching specific exits at intersections, requiring different scenario-specific decision-making algorithms. Many DRL algorithms have been proposed for interactive decision-making. However, a rational review of these DRL algorithms across various scenarios is lacking. Therefore, a comprehensive evaluation is essential to assess these algorithms from multiple perspectives, including those of vehicle users and vehicle manufacturers. This survey reviews the application of DRL algorithms in autonomous driving across typical scenarios, summarizing road features and recent advancements. The scenarios include highways, on-ramp merging, roundabouts, and unsignalized intersections. Furthermore, DRL-based algorithms are evaluated based on five rational criteria: driving safety, driving efficiency, training efficiency, unselfishness, and interpretability (DDTUI). Each criterion of DDTUI is specifically analyzed in relation to the reviewed algorithms. Finally, the challenges for future DRL-based decision-making algorithms are summarized.

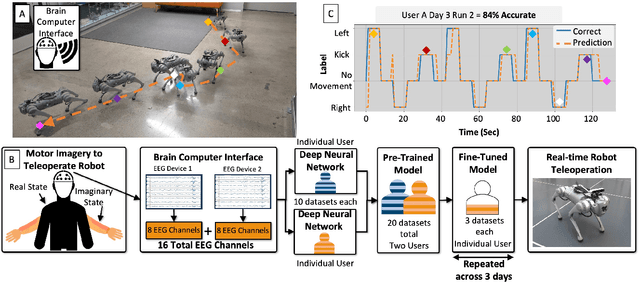

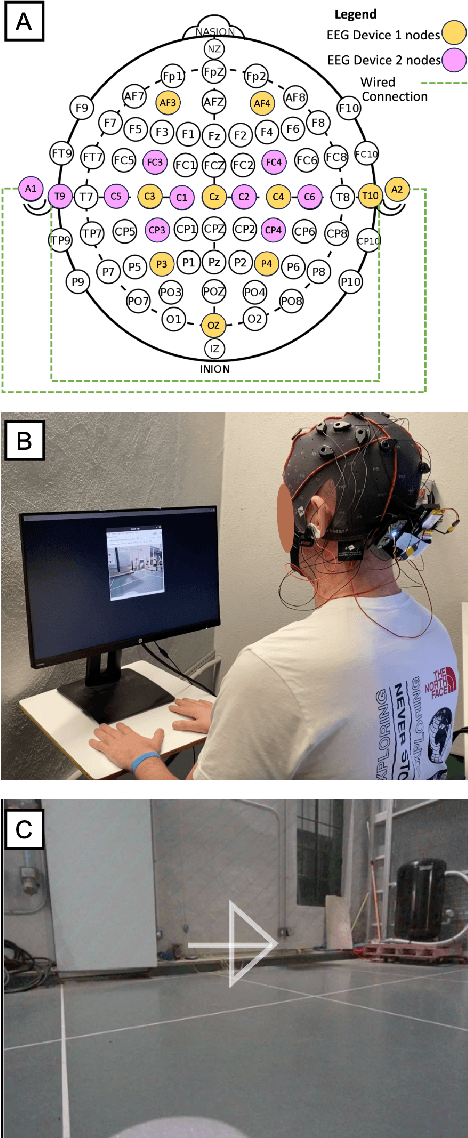

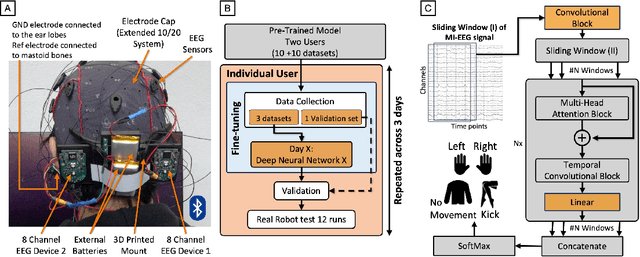

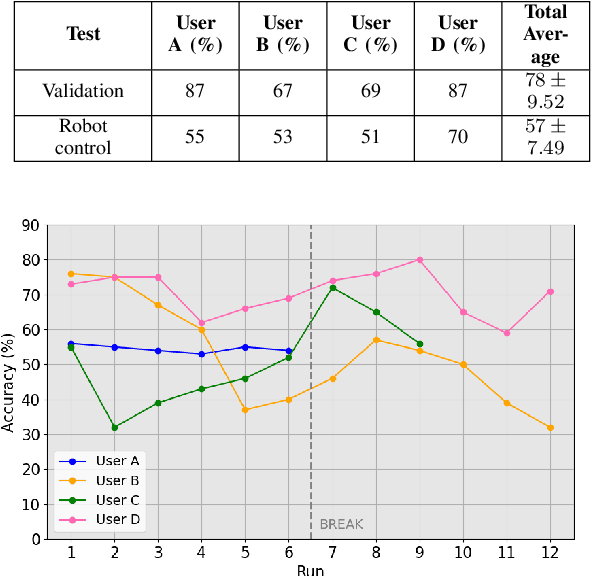

Motor Imagery Teleoperation of a Mobile Robot Using a Low-Cost Brain-Computer Interface for Multi-Day Validation

Dec 12, 2024

Abstract: Brain-computer interfaces (BCIs) have the potential to provide transformative control in prosthetics, assistive technologies (e.g., wheelchairs), robotics, and human-computer interfaces. While Motor Imagery (MI) offers an intuitive approach to BCI control, its practical implementation is often limited by the requirement for expensive devices, extensive training data, and complex algorithms, leading to user fatigue and reduced accessibility. In this paper, we demonstrate that effective MI-BCI control of a mobile robot in real-world settings can be achieved using a fine-tuned Deep Neural Network (DNN) with a sliding window, eliminating the need for complex feature extraction for real-time robot control. The fine-tuning process optimizes the convolutional and attention layers of the DNN to adapt to each user's daily MI data streams, reducing training data by 70% and minimizing user fatigue from extended data collection. Using a low-cost (~$3k), 16-channel, non-invasive, open-source electroencephalogram (EEG) device, four users teleoperated a quadruped robot over three days. The system achieved 78% accuracy on a single-day validation dataset and maintained a 75% validation accuracy over three days without extensive day-to-day retraining. For real-world robot command classification, we achieved an average of 62% accuracy. By providing empirical evidence that MI-BCI systems can maintain performance over multiple days with reduced DNN training data and a low-cost EEG device, our work enhances the practicality and accessibility of BCI technology. This advancement makes BCI applications more feasible for real-world scenarios, particularly in controlling robotic systems.

Trustworthy Text-to-Image Diffusion Models: A Timely and Focused Survey

Sep 26, 2024

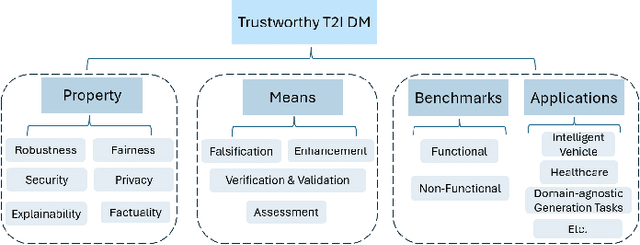

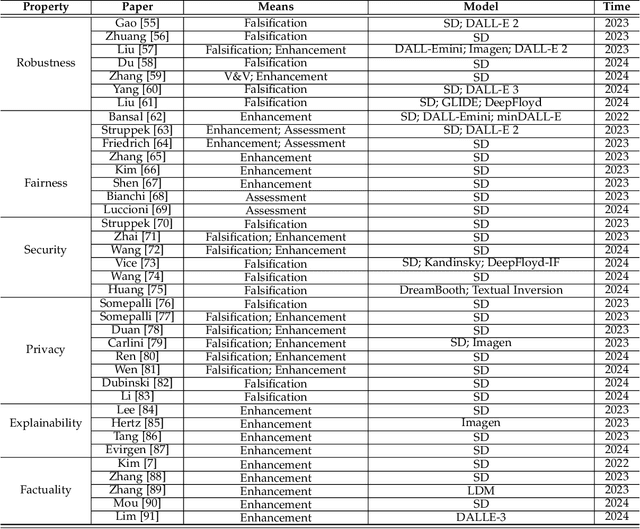

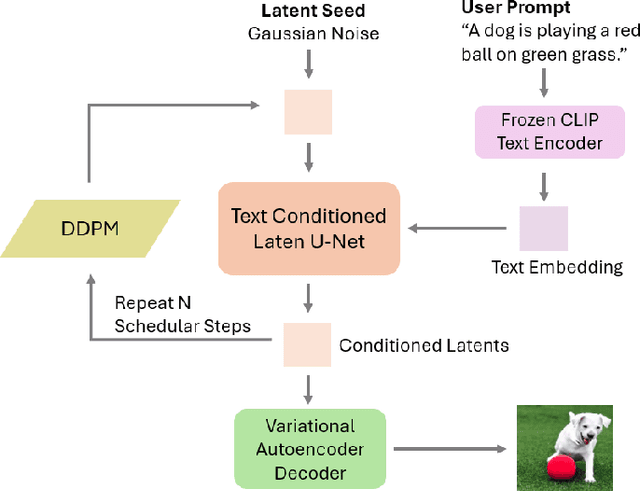

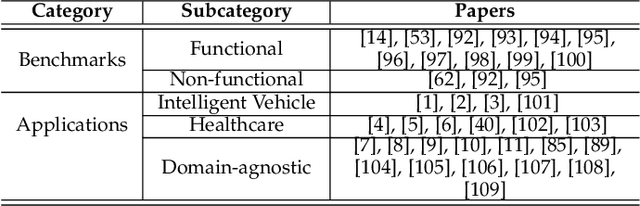

Abstract: Text-to-Image (T2I) Diffusion Models (DMs) have garnered widespread attention for their impressive advancements in image generation. However, their growing popularity has raised ethical and social concerns related to key non-functional properties of trustworthiness, such as robustness, fairness, security, privacy, factuality, and explainability, similar to those in traditional deep learning (DL) tasks. Conventional approaches for studying trustworthiness in DL tasks often fall short due to the unique characteristics of T2I DMs, e.g., the multi-modal nature. Given the challenge, recent efforts have been made to develop new methods for investigating trustworthiness in T2I DMs via various means, including falsification, enhancement, verification & validation, and assessment. However, there is a notable lack of in-depth analysis concerning those non-functional properties and means. In this survey, we provide a timely and focused review of the literature on trustworthy T2I DMs, covering a concise-structured taxonomy from the perspectives of property, means, benchmarks and applications. Our review begins with an introduction to essential preliminaries of T2I DMs, and then we summarise key definitions/metrics specific to T2I tasks and analyse the means proposed in recent literature based on these definitions/metrics. Additionally, we review benchmarks and domain applications of T2I DMs. Finally, we highlight the gaps in current research, discuss the limitations of existing methods, and propose future research directions to advance the development of trustworthy T2I DMs. Furthermore, we track the latest developments in this field and maintain our GitHub repository at: https://github.com/wellzline/Trustworthy_T2I_DMs

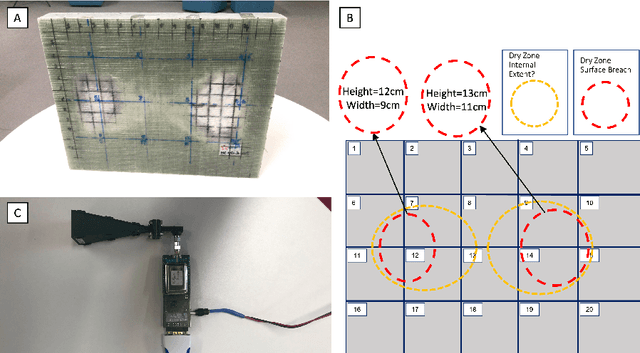

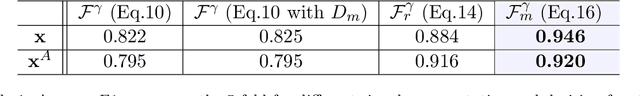

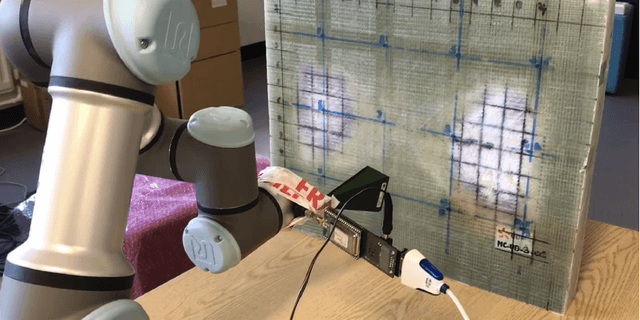

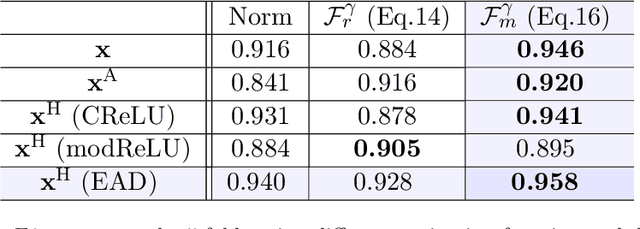

Non-contact Sensing for Anomaly Detection in Wind Turbine Blades: A focus-SVDD with Complex-Valued Auto-Encoder Approach

Jun 19, 2023

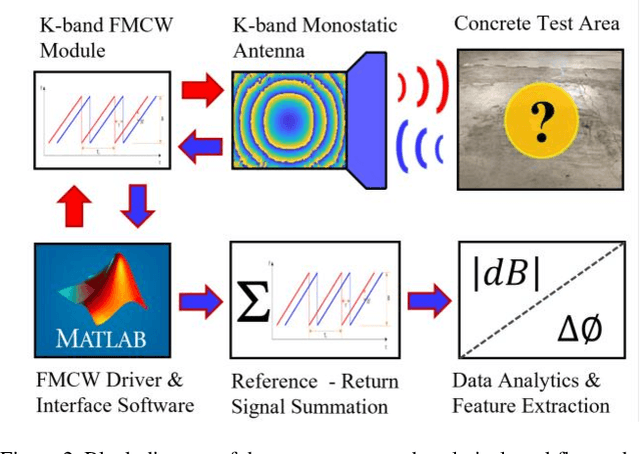

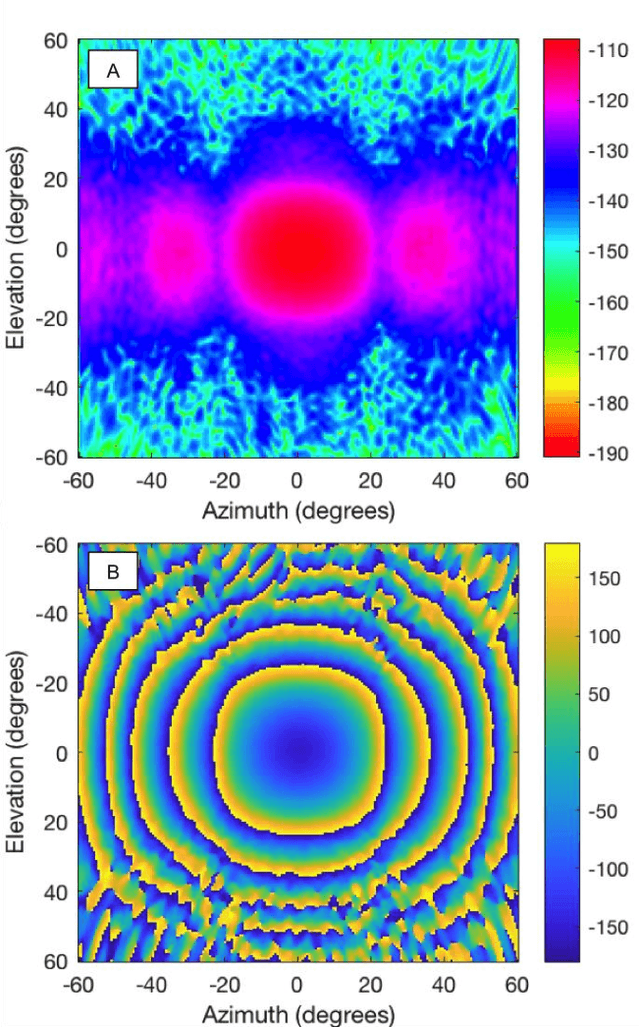

Abstract: The occurrence of manufacturing defects in wind turbine blade (WTB) production can result in significant increases in operation and maintenance costs and lead to severe and disastrous consequences. Therefore, inspection during the manufacturing process is crucial to ensure consistent fabrication of composite materials. Non-contact sensing techniques, such as Frequency Modulated Continuous Wave (FMCW) radar, are becoming increasingly popular as they offer a full view of these complex structures during curing. In this paper, we enhance the quality assurance of manufacturing utilizing FMCW radar as a non-destructive sensing modality. Additionally, a novel anomaly detection pipeline is developed that offers the following advantages: (1) We use the analytic representation of the Intermediate Frequency signal of the FMCW radar as a feature to disentangle material-specific and round-trip delay information from the received wave. (2) We propose a novel anomaly detection methodology called focus Support Vector Data Description (focus-SVDD). This methodology involves defining the limit boundaries of the dataset after removing healthy data features, thereby focusing on the attributes of anomalies. (3) The proposed method employs a complex-valued autoencoder to remove healthy features, and we introduce a new activation function called Exponential Amplitude Decay (EAD). EAD takes advantage of the Rayleigh distribution, which characterizes an instantaneous amplitude signal. The effectiveness of the proposed method is demonstrated through its application to collected data, where it shows superior performance compared to other state-of-the-art unsupervised anomaly detection methods. This method is expected to make a significant contribution not only to structural health monitoring but also to the field of deep complex-valued data processing and SVDD application.

Bayesian Learning for the Robust Verification of Autonomous Robots

Mar 15, 2023

Abstract: We develop a novel Bayesian learning framework that enables the runtime verification of autonomous robots performing critical missions in uncertain environments. Our framework exploits prior knowledge and observations of the verified robotic system to learn expected ranges of values for the occurrence rates of its events. We support both events observed regularly during system operation, and singular events such as catastrophic failures or the completion of difficult one-off tasks. Furthermore, we use the learnt event-rate ranges to assemble interval continuous-time Markov models, and we apply quantitative verification to these models to compute expected intervals of variation for key system properties. These intervals reflect the uncertainty intrinsic to many real-world systems, enabling the robust verification of their quantitative properties under parametric uncertainty. We apply the proposed framework to the case study of verification of an autonomous robotic mission for underwater infrastructure inspection and repair.

Millimeter-Wave Sensing for Avoidance of High-Risk Ground Conditions for Mobile Robots

Mar 30, 2022

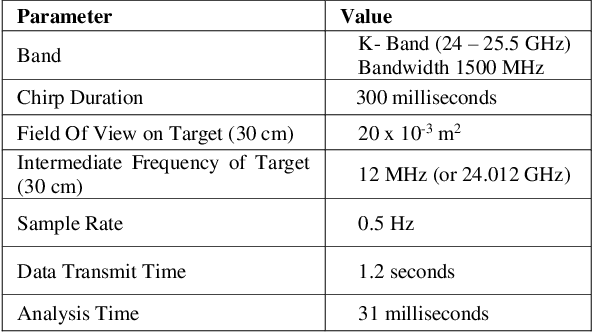

Abstract: Mobile robot autonomy has made significant advances in recent years, with navigation algorithms well developed and used commercially in certain well-defined environments, such as warehouses. The common link in usage scenarios is that the environments in which the robots are utilized have a high degree of certainty. Operating environments are often designed to be robot friendly; for example, augmented reality markers are strategically placed and the ground is typically smooth, level, and clear of debris. For robots to be useful in a wider range of environments, especially environments that are not sanitized for their use, robots must be able to handle uncertainty. This requires a robot to incorporate new sensors and sources of information, and to be able to use this information to make decisions regarding navigation and the overall mission. When using autonomous mobile robots in unstructured and poorly defined environments, such as a natural disaster site or in a rural environment, ground condition is of critical importance and is a common cause of failure. Examples include loss of traction due to high levels of ground water, hidden cavities, or material boundary failures. To evaluate a non-contact sensing method to mitigate these risks, Frequency Modulated Continuous Wave (FMCW) radar is integrated with an Unmanned Ground Vehicle (UGV), representing a novel application of FMCW to detect new measurands for Robotic Autonomous Systems (RAS) navigation, informing on terrain integrity and adding to the state-of-the-art in sensing for optimized autonomous path planning. In this paper, the FMCW is first evaluated in a desktop setting to determine its performance in anticipated ground conditions. The FMCW is then fixed to a UGV and the sensor system is tested and validated in a representative environment containing regions with significant levels of ground water saturation.

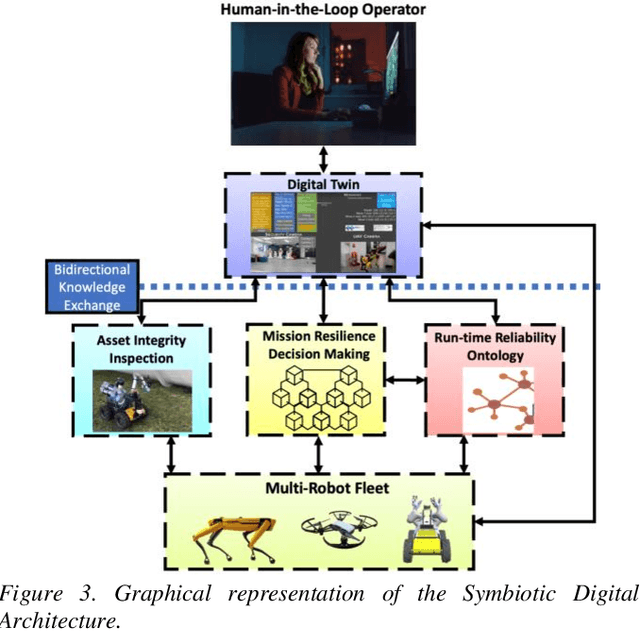

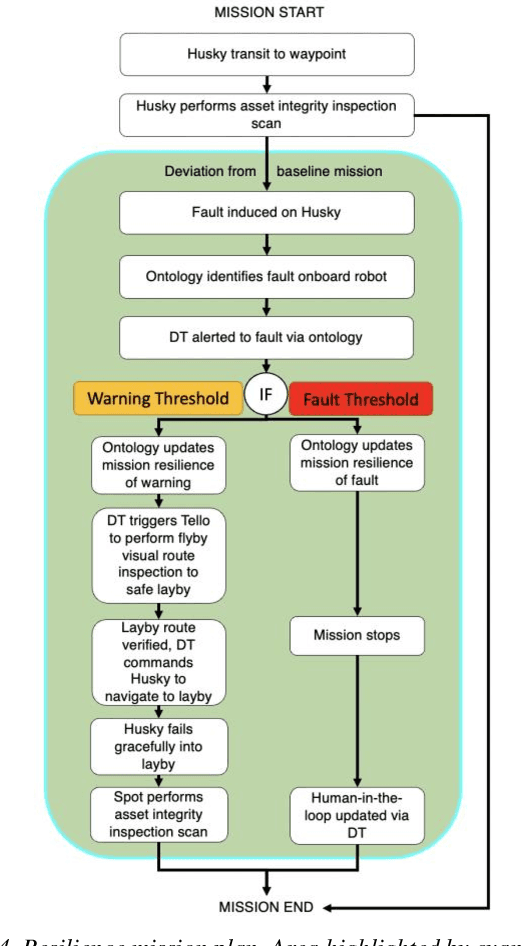

Addressing Non-Intervention Challenges via Resilient Robotics utilizing a Digital Twin

Mar 29, 2022

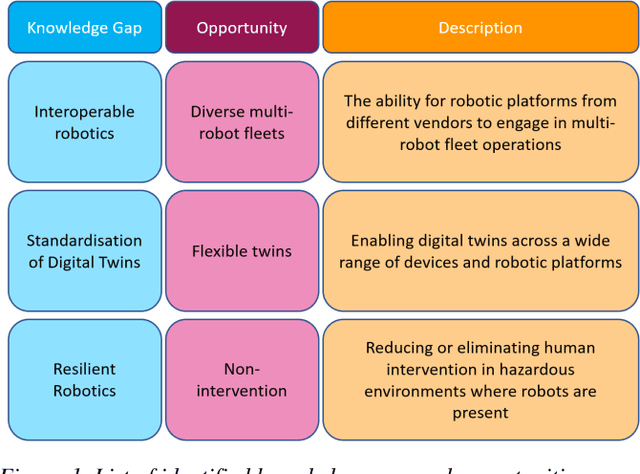

Abstract: Multi-robot systems face challenges in reducing human interventions as they are often deployed in dangerous environments. It is therefore necessary to include a methodology to assess robot failure rates to reduce the requirement for costly human intervention. To prevent this intervention, robots should be able to work together to ensure mission resilience. However, robotic platforms generally lack built-in interconnectivity with other platforms from different vendors. This work aims to tackle this issue by enabling the functionality through a bidirectional digital twin. The twin enables the human operator to transmit and receive information to and from the multi-robot fleet. This digital twin considers mission resilience, decision making and a run-time reliability ontology for failure detection to enable the resilience of a multi-robot fleet. This creates the cooperation, corroboration, and collaboration of diverse robots to leverage the capability of robots and support recovery of a failed robot.
