Daniel Mitchell

Motor Imagery Teleoperation of a Mobile Robot Using a Low-Cost Brain-Computer Interface for Multi-Day Validation

Dec 12, 2024

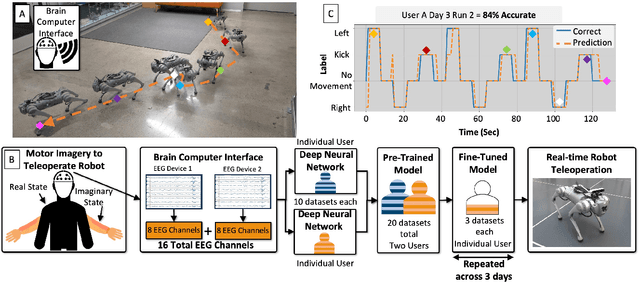

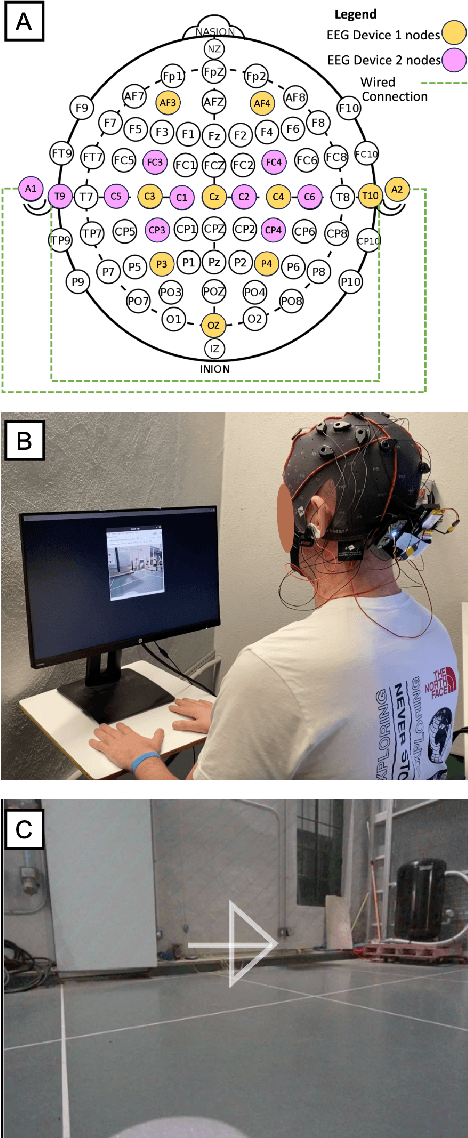

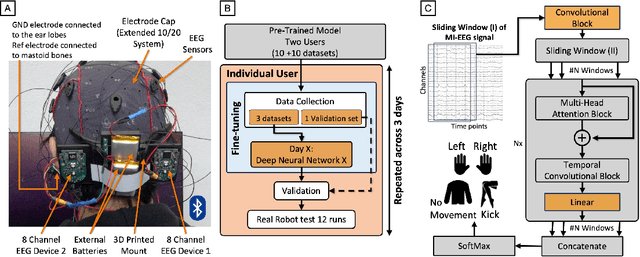

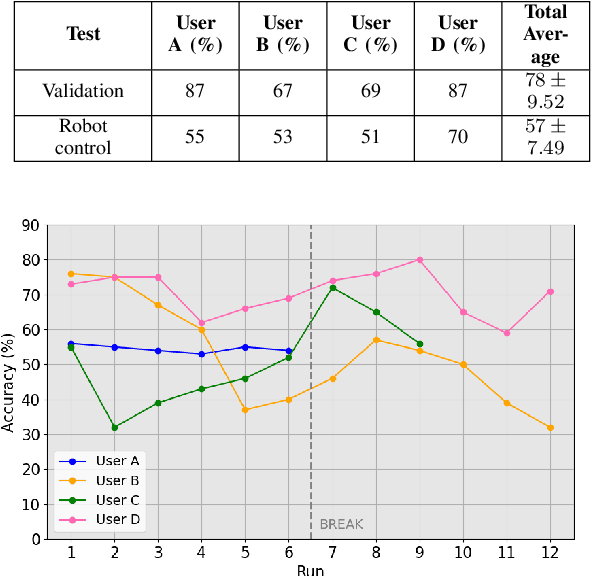

Abstract: Brain-computer interfaces (BCIs) have the potential to provide transformative control in prosthetics, assistive technologies (such as wheelchairs), robotics, and human-computer interfaces. While Motor Imagery (MI) offers an intuitive approach to BCI control, its practical implementation is often limited by the requirement for expensive devices, extensive training data, and complex algorithms, leading to user fatigue and reduced accessibility. In this paper, we demonstrate that effective MI-BCI control of a mobile robot in real-world settings can be achieved using a fine-tuned Deep Neural Network (DNN) with a sliding window, eliminating the need for complex feature extraction for real-time robot control. The fine-tuning process optimizes the convolutional and attention layers of the DNN to adapt to each user's daily MI data streams, reducing training data by 70% and minimizing user fatigue from extended data collection. Using a low-cost (~$3k), 16-channel, non-invasive, open-source electroencephalogram (EEG) device, four users teleoperated a quadruped robot over three days. The system achieved 78% accuracy on a single-day validation dataset and maintained a 75% validation accuracy over three days without extensive day-to-day retraining. For real-world robot command classification, we achieved an average of 62% accuracy. By providing empirical evidence that MI-BCI systems can maintain performance over multiple days with reduced DNN training data and a low-cost EEG device, our work enhances the practicality and accessibility of BCI technology. This advancement makes BCI applications more feasible for real-world scenarios, particularly in controlling robotic systems.
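The sliding-window idea mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not give the window length, step, or sampling rate, so the values below (250-sample windows, 125-sample step, a 500-sample synthetic stream) and the function name `sliding_windows` are assumptions chosen only for illustration; only the 16-channel count comes from the abstract.

```python
import numpy as np

def sliding_windows(eeg, window_len, step):
    """Yield (start_index, window) pairs over a (channels, samples) EEG array."""
    n_samples = eeg.shape[1]
    for start in range(0, n_samples - window_len + 1, step):
        # Each window keeps all channels and a fixed number of samples.
        yield start, eeg[:, start:start + window_len]

# Synthetic stand-in for a recorded stream: 16 channels, 500 samples (assumed).
rng = np.random.default_rng(0)
stream = rng.standard_normal((16, 500))

# Overlapping windows; each one would be passed to a classifier in turn.
windows = list(sliding_windows(stream, window_len=250, step=125))
starts = [start for start, _ in windows]
```

In a real-time setting each window would be handed to the classifier as soon as it is complete, so a shorter step gives more frequent command updates at the cost of more inference calls.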

Non-contact Sensing for Anomaly Detection in Wind Turbine Blades: A focus-SVDD with Complex-Valued Auto-Encoder Approach

Jun 19, 2023

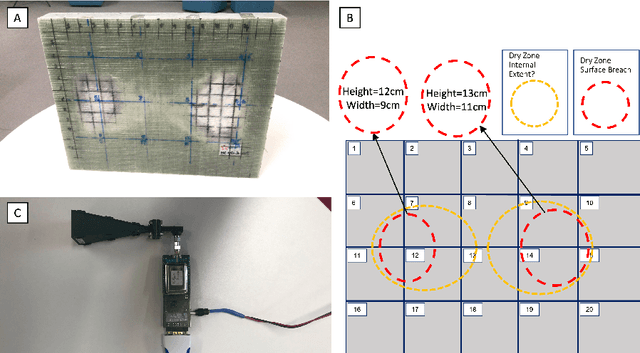

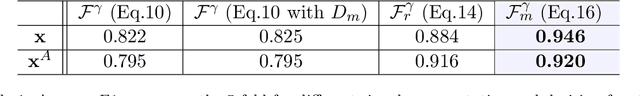

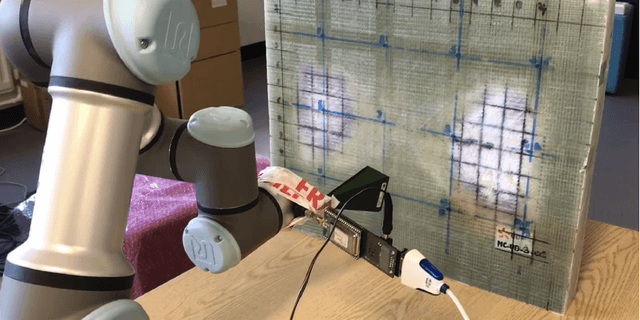

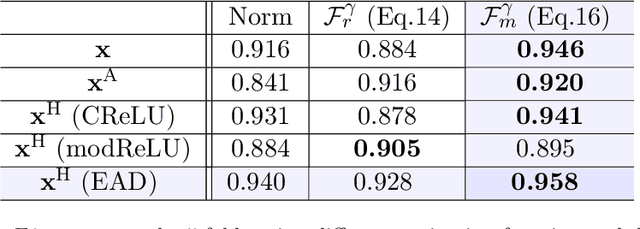

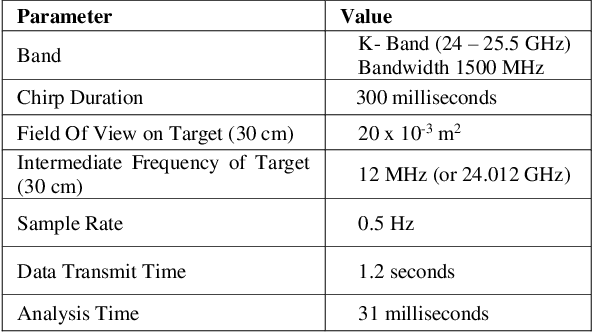

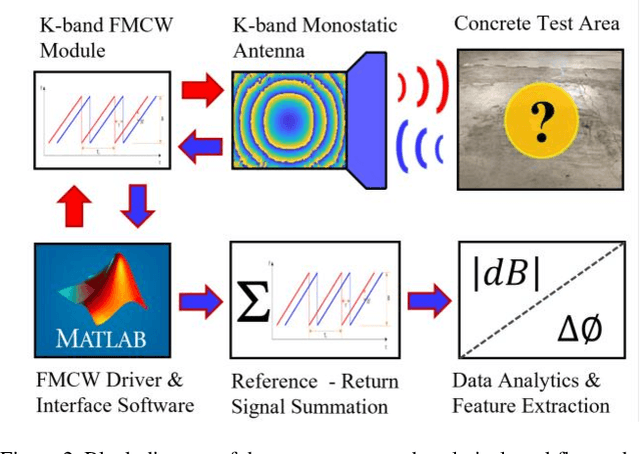

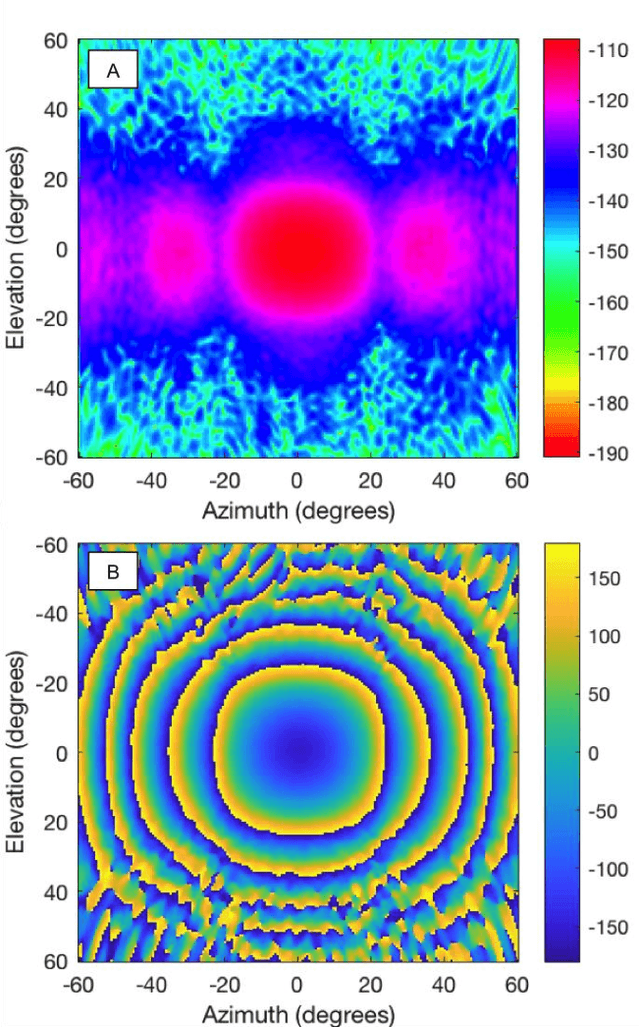

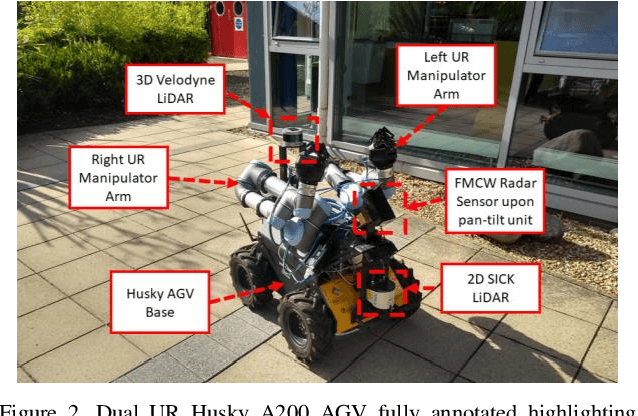

Abstract: The occurrence of manufacturing defects in wind turbine blade (WTB) production can result in significant increases in operation and maintenance costs and lead to severe and disastrous consequences. Therefore, inspection during the manufacturing process is crucial to ensure consistent fabrication of composite materials. Non-contact sensing techniques, such as Frequency Modulated Continuous Wave (FMCW) radar, are becoming increasingly popular as they offer a full view of these complex structures during curing. In this paper, we enhance the quality assurance of manufacturing using FMCW radar as a non-destructive sensing modality. Additionally, a novel anomaly detection pipeline is developed that offers the following advantages: (1) We use the analytic representation of the Intermediate Frequency signal of the FMCW radar as a feature to disentangle material-specific and round-trip delay information from the received wave. (2) We propose a novel anomaly detection methodology called focus Support Vector Data Description (focus-SVDD). This methodology involves defining the limit boundaries of the dataset after removing healthy data features, thereby focusing on the attributes of anomalies. (3) The proposed method employs a complex-valued autoencoder to remove healthy features, and we introduce a new activation function called Exponential Amplitude Decay (EAD). EAD takes advantage of the Rayleigh distribution, which characterizes an instantaneous amplitude signal. The effectiveness of the proposed method is demonstrated through its application to collected data, where it shows superior performance compared to other state-of-the-art unsupervised anomaly detection methods. This method is expected to make a significant contribution not only to structural health monitoring but also to the field of deep complex-valued data processing and SVDD application.
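As a rough illustration of the "analytic representation" named in point (1), the sketch below builds the analytic signal of a real-valued sequence with the standard FFT-based Hilbert construction and reads off its instantaneous amplitude. This is a generic textbook construction, not the authors' pipeline; the function name `analytic_signal` and the synthetic cosine standing in for an Intermediate Frequency signal are assumptions.

```python
import numpy as np

def analytic_signal(x):
    """Return the complex analytic signal of a real 1-D sequence via the FFT."""
    x = np.asarray(x, dtype=float)
    n = x.size
    spectrum = np.fft.fft(x)
    # Keep DC (and Nyquist when n is even), double positive bins, zero negative bins.
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(spectrum * weights)

# Synthetic stand-in for an IF signal: 8 full cycles of a unit-amplitude cosine.
samples = np.arange(256)
tone = np.cos(2 * np.pi * 8 * samples / 256)

z = analytic_signal(tone)
envelope = np.abs(z)    # instantaneous amplitude
phase = np.angle(z)     # instantaneous phase
```

The complex output keeps amplitude and phase as separate quantities, which is the kind of input a complex-valued network can operate on directly.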

Millimeter-Wave Sensing for Avoidance of High-Risk Ground Conditions for Mobile Robots

Mar 30, 2022

Abstract: Mobile robot autonomy has made significant advances in recent years, with navigation algorithms well developed and used commercially in certain well-defined environments, such as warehouses. The common link in usage scenarios is that the environments in which the robots are utilized have a high degree of certainty. Operating environments are often designed to be robot friendly; for example, augmented reality markers are strategically placed and the ground is typically smooth, level, and clear of debris. For robots to be useful in a wider range of environments, especially environments that are not sanitized for their use, robots must be able to handle uncertainty. This requires a robot to incorporate new sensors and sources of information, and to be able to use this information to make decisions regarding navigation and the overall mission. When using autonomous mobile robots in unstructured and poorly defined environments, such as a natural disaster site or a rural environment, ground condition is of critical importance and is a common cause of failure. Examples include loss of traction due to high levels of ground water, hidden cavities, or material boundary failures. To evaluate a non-contact sensing method to mitigate these risks, Frequency Modulated Continuous Wave (FMCW) radar is integrated with an Unmanned Ground Vehicle (UGV), representing a novel application of FMCW to detect new measurands for Robotic Autonomous Systems (RAS) navigation, informing on terrain integrity and adding to the state-of-the-art in sensing for optimized autonomous path planning. In this paper, the FMCW radar is first evaluated in a desktop setting to determine its performance in anticipated ground conditions. The FMCW radar is then fixed to a UGV and the sensor system is tested and validated in a representative environment containing regions with significant levels of ground water saturation.

Addressing Non-Intervention Challenges via Resilient Robotics utilizing a Digital Twin

Mar 29, 2022

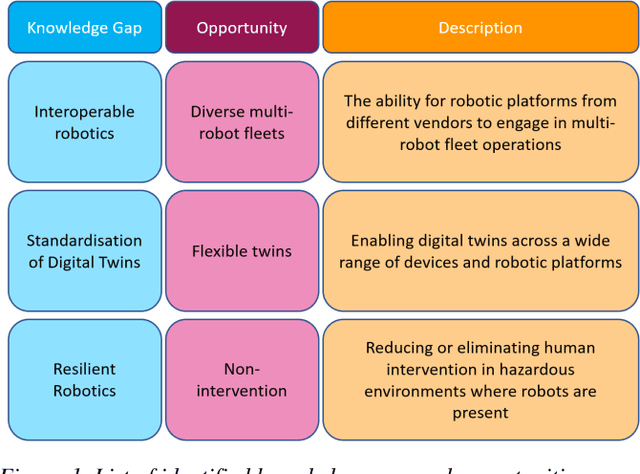

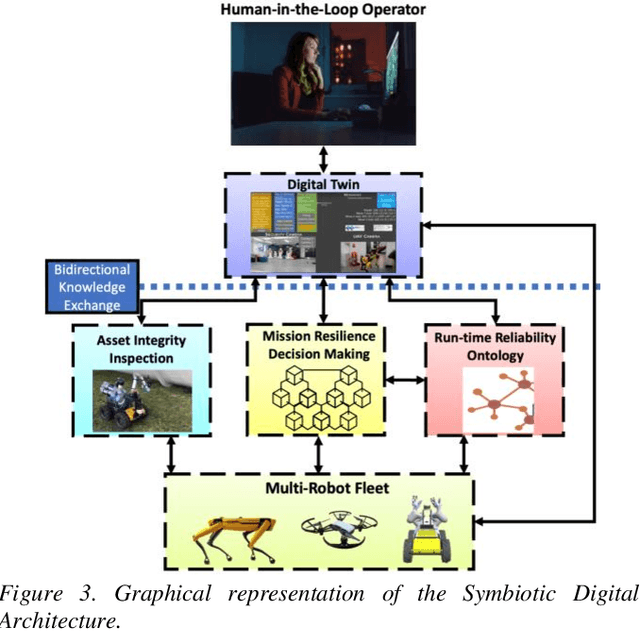

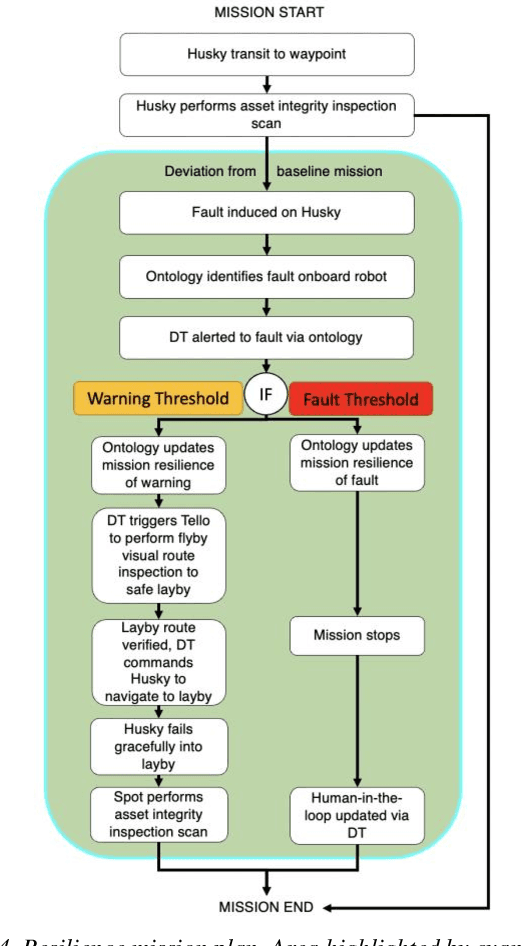

Abstract: Multi-robot systems face challenges in reducing human interventions as they are often deployed in dangerous environments. It is therefore necessary to include a methodology to assess robot failure rates to reduce the requirement for costly human intervention. A solution to this problem is for robots to work together to ensure mission resilience. However, robotic platforms generally lack built-in interconnectivity with other platforms from different vendors. This work aims to tackle this issue by enabling the functionality through a bidirectional digital twin. The twin enables the human operator to transmit and receive information to and from the multi-robot fleet. This digital twin considers mission resilience, decision making and a run-time reliability ontology for failure detection to enable the resilience of a multi-robot fleet. This enables cooperation, corroboration, and collaboration among diverse robots, leveraging their capabilities to support recovery of a failed robot.

Millimeter-wave Foresight Sensing for Safety and Resilience in Autonomous Operations

Mar 24, 2022

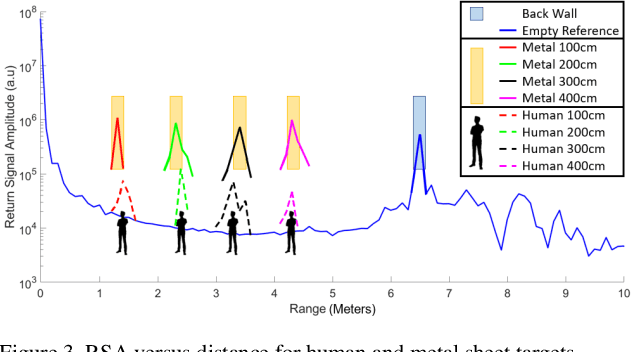

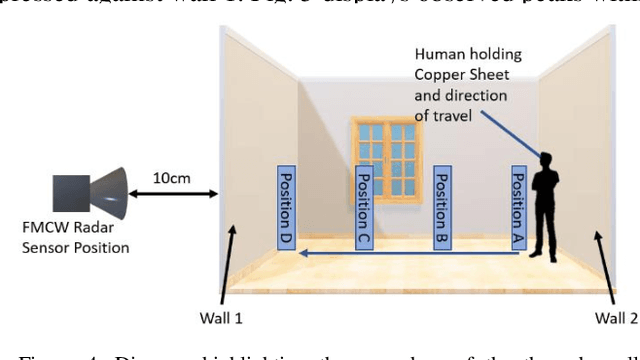

Abstract: Robotic platforms are highly programmable, scalable and versatile, and can complete several tasks including Inspection, Maintenance and Repair (IMR). Mobile robotics offer reduced restrictions in operating environments, resulting in greater flexibility: operation at height, in dangerous areas and in repetitive tasks. Cyber physical infrastructures have been identified by the UK Robotics Growth Partnership as a key enabler in how we utilize and interact with sensors and machines via the virtual and physical worlds. Cyber Physical Systems (CPS) allow robotics and artificial intelligence to adapt and repurpose at pace, allowing new challenges in CPS to be addressed. A challenge exists within robotics to secure an effective partnership in a wide range of areas which include shared workspaces and Beyond Visual Line of Sight (BVLOS). Robotic manipulation abilities have improved a robot's accessibility via the ability to open doorways; however, challenges exist in how a robot decides if it is safe to move into a new workspace. Current sensing methods are limited to line of sight and are unable to capture data beyond doorways or walls; therefore, a robot is unable to sense if it is safe to open a door. Another limitation is that robots are unable to detect if a human is within a shared workspace; if a human could be detected, extended safety precautions could be taken to ensure the safe autonomous operation of a robot. These challenges are represented as safety, trust and resilience, inhibiting the successful advancement of CPS. This paper evaluates the use of frequency modulated continuous wave radar sensing for human detection and through-wall detection to increase situational awareness. The results validate the use of the sensor to detect the difference between a person and infrastructure, and to increase situational awareness for navigation via foresight monitoring through walls.

Bio-inspired Multi-robot Autonomy

Mar 15, 2022

Abstract: Increasingly, high value industrial markets are driving trends for improved functionality and resilience from resident autonomous systems. This has led to an increase in multi-robot fleets that aim to leverage the complementary attributes of the diverse platforms. In this paper we introduce a novel bio-inspired Symbiotic System of Systems Approach (SSOSA) for designing the operational governance of a multi-robot (MR) fleet consisting of ground-based quadruped and wheeled platforms. SSOSA couples the MR-fleet to the resident infrastructure monitoring systems into one collaborative digital commons. The hyper visibility of the integrated distributed systems, achieved through a low-latency bidirectional communication network, supports collaboration, coordination and corroboration (3C) across the integrated systems. In our experiment, we demonstrate how an operator can activate a pre-determined autonomous mission and utilize SSOSA to overcome intrinsic and external risks to the autonomous missions. We demonstrate how resilience can be enhanced by local collaboration between SPOT and Husky, wherein we detect a replacement battery and utilize the manipulator arm of SPOT to support a Clearpath Husky A200 wheeled robotic platform. This allows for increased resilience of an autonomous mission, as robots can collaborate to maintain the battery state of the Husky robot. Overall, these initial results demonstrate the value of SSOSA in addressing a key operational barrier to scalable autonomy, namely resilience.

A Review: Challenges and Opportunities for Artificial Intelligence and Robotics in the Offshore Wind Sector

Dec 13, 2021

Abstract: A global trend in increasing wind turbine size and distances from shore is emerging within the rapidly growing offshore wind farm market. In 2019, the UK offshore wind sector produced its highest amount of electricity, a 19.6% increase on the year before. Currently, the UK is set to increase production further, targeting a 74.7% increase of installed turbine capacity as reflected in recent Crown Estate leasing rounds. With such tremendous growth, the sector is now looking to Robotics and Artificial Intelligence (RAI) to tackle lifecycle service barriers and support sustainable and profitable offshore wind energy production. Today, RAI applications are predominantly being used to support short term objectives in operation and maintenance. However, moving forward, RAI has the potential to play a critical role throughout the full lifecycle of offshore wind infrastructure, from surveying, planning, design, logistics, operational support and training to decommissioning. This paper presents one of the first systematic reviews of RAI for the offshore renewable energy sector. The state-of-the-art in RAI is analyzed with respect to offshore energy requirements, from both industry and academia, in terms of current and future requirements. Our review also includes a detailed evaluation of investment, regulation and skills development required to support the adoption of RAI. The key trends identified through a detailed analysis of patent and academic publication databases provide insights into barriers such as certification of autonomous platforms for safety compliance and reliability, the need for digital architectures for scalability in autonomous fleets, adaptive mission planning for resilient resident operations and optimization of human machine interaction for trusted partnerships between people and autonomous assistants.

Symbiotic System Design for Safe and Resilient Autonomous Robotics in Offshore Wind Farms

Jan 23, 2021

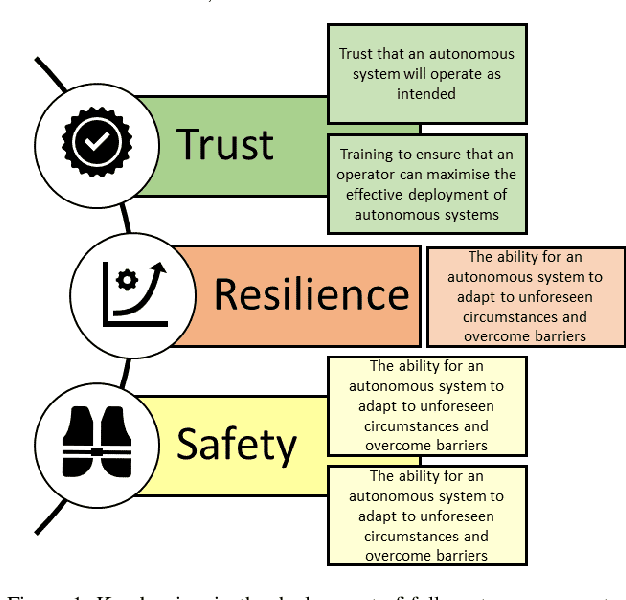

Abstract: To reduce Operation and Maintenance (O&M) costs on offshore wind farms, wherein 80% of the O&M cost relates to deploying personnel, the offshore wind sector looks to robotics and Artificial Intelligence (AI) for solutions. Barriers to Beyond Visual Line of Sight (BVLOS) robotics include operational safety compliance and resilience, inhibiting the commercialization of autonomous services offshore. To address safety and resilience challenges we propose a symbiotic system, reflecting the lifecycle learning and co-evolution with knowledge sharing for mutual gain of robotic platforms and remote human operators. Our methodology enables the run-time verification of safety, reliability and resilience during autonomous missions. We synchronize digital models of the robot, environment and infrastructure and integrate front-end analytics and bidirectional communication for autonomous adaptive mission planning and situation reporting to a remote operator. A reliability ontology for the deployed robot, based on our holistic hierarchical-relational model, supports computationally efficient platform data analysis. We analyze the mission status and diagnostics of critical sub-systems within the robot to provide automatic updates to our run-time reliability ontology, enabling faults to be translated into failure modes for decision making during the mission. We demonstrate an asset inspection mission within a confined space and employ millimeter-wave sensing to enhance situational awareness by detecting the presence of obscured personnel to mitigate risk. Our results demonstrate that a symbiotic system provides an enhanced resilience capability to BVLOS missions. A symbiotic system addresses the operational challenges and reprioritization of autonomous mission objectives. This advances the technology required to achieve fully trustworthy autonomous systems.
