Valentin Robu

Bayesian Learning for the Robust Verification of Autonomous Robots

Mar 15, 2023

Abstract: We develop a novel Bayesian learning framework that enables the runtime verification of autonomous robots performing critical missions in uncertain environments. Our framework exploits prior knowledge and observations of the verified robotic system to learn expected ranges of values for the occurrence rates of its events. We support both events observed regularly during system operation and singular events such as catastrophic failures or the completion of difficult one-off tasks. Furthermore, we use the learnt event-rate ranges to assemble interval continuous-time Markov models, and we apply quantitative verification to these models to compute expected intervals of variation for key system properties. These intervals reflect the uncertainty intrinsic to many real-world systems, enabling the robust verification of their quantitative properties under parametric uncertainty. We apply the proposed framework to the case study of verification of an autonomous robotic mission for underwater infrastructure inspection and repair.
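The two-stage idea above can be sketched in miniature. First, a range is learnt for each event rate. Second, a property of an interval model is computed from those ranges.

The sketch rests on several assumptions:

- It uses a standard conjugate Gamma-Poisson update for a regularly observed event rate.
- It takes a 90% central credible interval as the "expected range".
- It does not reproduce the paper's own priors, its treatment of singular events, or its interval continuous-time Markov chain (CTMC) verification.

The toy model is a single state racing two exponential transitions: mission failure (rate `lam_fail`) and task completion (rate `lam_done`). In that model, P(failure first) = `lam_fail / (lam_fail + lam_done)`. This expression increases with `lam_fail` and decreases with `lam_done`, so its interval bounds lie at opposite corners of the rate box. All function names and data values are hypothetical.

```python
import math


def gamma_cdf_int_shape(x: float, shape: int, rate: float) -> float:
    """CDF of Gamma(shape, rate) for integer shape (the Erlang distribution).

    Uses P(X <= x) = 1 - sum_{k < shape} Poisson(k; rate * x), with each
    Poisson term computed in log space to avoid underflow.
    """
    if x <= 0.0:
        return 0.0
    mu = rate * x
    tail = sum(math.exp(-mu + k * math.log(mu) - math.lgamma(k + 1)) for k in range(shape))
    return 1.0 - tail


def gamma_quantile_int_shape(q: float, shape: int, rate: float) -> float:
    """Invert the CDF above by bisection."""
    lo, hi = 0.0, 1.0
    while gamma_cdf_int_shape(hi, shape, rate) < q:
        hi *= 2.0  # grow the bracket until it contains the quantile
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if gamma_cdf_int_shape(mid, shape, rate) < q:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def learn_rate_interval(prior_shape: int, prior_rate: float, n_events: int,
                        exposure: float, mass: float = 0.9) -> tuple[float, float]:
    """Gamma prior + Poisson observations -> central credible interval for the rate."""
    shape = prior_shape + n_events  # conjugate update: add observed event count
    rate = prior_rate + exposure    # conjugate update: add observation time
    tail = (1.0 - mass) / 2.0
    return (gamma_quantile_int_shape(tail, shape, rate),
            gamma_quantile_int_shape(1.0 - tail, shape, rate))


def prob_fail_first(lam_fail: tuple[float, float],
                    lam_done: tuple[float, float]) -> tuple[float, float]:
    """Interval for P(failure before completion) in a two-transition race."""
    lower = lam_fail[0] / (lam_fail[0] + lam_done[1])  # fewest failures, fastest completion
    upper = lam_fail[1] / (lam_fail[1] + lam_done[0])  # most failures, slowest completion
    return lower, upper


# Hypothetical observations: 2 failures in 400 h, 20 task completions in 40 h.
fail_iv = learn_rate_interval(prior_shape=1, prior_rate=100.0, n_events=2, exposure=400.0)
done_iv = learn_rate_interval(prior_shape=1, prior_rate=1.0, n_events=20, exposure=40.0)
print("failure rate range (1/h):", fail_iv)
print("completion rate range (1/h):", done_iv)
print("P(fail before completion) range:", prob_fail_first(fail_iv, done_iv))
```

Because the toy property is monotone in each rate, evaluating two corners is enough. For larger models, a probabilistic model checker would do this work over the whole interval CTMC.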

Millimeter-wave Foresight Sensing for Safety and Resilience in Autonomous Operations

Mar 24, 2022

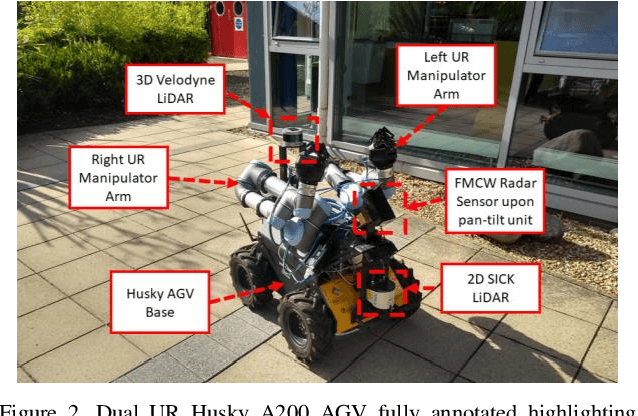

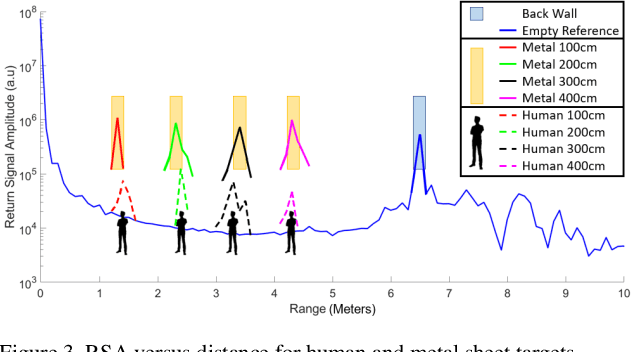

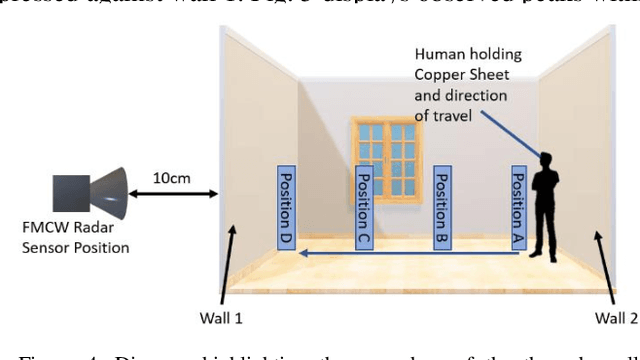

Abstract: Robotic platforms are highly programmable, scalable and versatile, enabling them to complete a range of tasks including Inspection, Maintenance and Repair (IMR). Mobile robots face fewer restrictions on their operating environments, giving greater flexibility for operation at height, in dangerous areas and on repetitive tasks. Cyber physical infrastructures have been identified by the UK Robotics Growth Partnership as a key enabler in how we utilize and interact with sensors and machines via the virtual and physical worlds. Cyber Physical Systems (CPS) allow robotics and artificial intelligence to adapt and be repurposed at pace, so that new challenges in CPS can be addressed. A challenge exists within robotics to secure an effective partnership in a wide range of areas, including shared workspaces and Beyond Visual Line of Sight (BVLOS) operations. Robotic manipulation abilities have improved a robot's accessibility through the ability to open doorways; however, challenges remain in how a robot decides whether it is safe to move into a new workspace. Current sensing methods are limited to line of sight and cannot capture data beyond doorways or walls; therefore, a robot is unable to sense whether it is safe to open a door. A further limitation is that robots are unable to detect whether a human is within a shared workspace. If a human could be detected, extended safety precautions could be taken to ensure the safe autonomous operation of a robot. These challenges, framed as safety, trust and resilience, inhibit the successful advancement of CPS. This paper evaluates the use of frequency modulated continuous wave (FMCW) radar sensing for human detection and through-wall detection to increase situational awareness. The results validate the use of the sensor to distinguish between a person and infrastructure, and demonstrate increased situational awareness for navigation via foresight monitoring through walls.
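The abstract does not give the radar's parameters, but the basic FMCW ranging relations are standard:

- A linear chirp of bandwidth `B` swept over duration `T_c` has slope `S = B / T_c`.
- A target at range `R` produces a beat frequency `f_b = 2 R S / c`.
- The range resolution is `c / (2 B)`.

The sketch below only evaluates these textbook relations. It says nothing about the paper's human-detection or through-wall processing. The 4 GHz bandwidth and 40 microsecond chirp are hypothetical values, not the sensor used in the paper.

```python
C = 299_792_458.0  # speed of light in vacuum (m/s)


def chirp_slope(bandwidth_hz: float, chirp_time_s: float) -> float:
    """Frequency slope S = B / T_c of a linear FMCW chirp (Hz/s)."""
    return bandwidth_hz / chirp_time_s


def beat_frequency(range_m: float, slope_hz_per_s: float) -> float:
    """Beat frequency of a static target: round-trip delay 2R/c times the slope."""
    return 2.0 * range_m * slope_hz_per_s / C


def range_from_beat(beat_hz: float, slope_hz_per_s: float) -> float:
    """Invert the relation above to recover the target range (m)."""
    return C * beat_hz / (2.0 * slope_hz_per_s)


def range_resolution(bandwidth_hz: float) -> float:
    """Smallest separable range difference, c / (2B), in metres."""
    return C / (2.0 * bandwidth_hz)


# Hypothetical chirp: a 4 GHz sweep in 40 microseconds, target 3 m away.
S = chirp_slope(4e9, 40e-6)
fb = beat_frequency(3.0, S)
print(f"beat frequency: {fb / 1e6:.3f} MHz")
print(f"recovered range: {range_from_beat(fb, S):.3f} m")
print(f"range resolution: {range_resolution(4e9) * 100:.2f} cm")
```

The resolution depends only on the swept bandwidth, which is why wide-band millimeter-wave chirps can separate closely spaced reflectors.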

A Review: Challenges and Opportunities for Artificial Intelligence and Robotics in the Offshore Wind Sector

Dec 13, 2021

Abstract: A global trend towards larger wind turbines sited further from shore is emerging within the rapidly growing offshore wind farm market. In 2019, the UK offshore wind sector produced its highest amount of electricity yet, a 19.6% increase on the year before. Currently, the UK is set to increase production further, targeting a 74.7% increase in installed turbine capacity, as reflected in recent Crown Estate leasing rounds. With such tremendous growth, the sector is now looking to Robotics and Artificial Intelligence (RAI) to tackle lifecycle service barriers, so as to support sustainable and profitable offshore wind energy production. Today, RAI applications are predominantly used to support short-term objectives in operation and maintenance. However, moving forward, RAI has the potential to play a critical role throughout the full lifecycle of offshore wind infrastructure, including surveying, planning, design, logistics, operational support, training and decommissioning. This paper presents one of the first systematic reviews of RAI for the offshore renewable energy sector. The state of the art in RAI, from both industry and academia, is analyzed with respect to current and future offshore energy requirements. Our review also includes a detailed evaluation of the investment, regulation and skills development required to support the adoption of RAI. The key trends identified through a detailed analysis of patent and academic publication databases provide insights into barriers such as certification of autonomous platforms for safety compliance and reliability, the need for digital architectures for scalability in autonomous fleets, adaptive mission planning for resilient resident operations, and optimization of human-machine interaction for trusted partnerships between people and autonomous assistants.

Machine learning pipeline for battery state of health estimation

Feb 01, 2021

Abstract: Lithium-ion batteries are ubiquitous in modern-day applications ranging from portable electronics to electric vehicles. Irrespective of the application, reliable real-time estimation of battery state of health (SOH) by on-board computers is crucial to the safe operation of the battery, ultimately safeguarding asset integrity. In this paper, we design and evaluate a machine learning pipeline for estimating battery capacity fade (a metric of battery health) on 179 cells cycled under various conditions. The pipeline estimates battery SOH with an associated confidence interval by using two parametric and two non-parametric algorithms. Using segments of charge voltage and current curves, the pipeline engineers 30 features, performs automatic feature selection and calibrates the algorithms. When deployed on cells operated under the fast-charging protocol, the best model achieves a root mean squared percent error of 0.45%. This work provides insights into the design of scalable data-driven models for battery SOH estimation, emphasising the value of confidence bounds around the prediction. The pipeline methodology combines experimental data with machine learning modelling and can be generalized to other critical components that require real-time estimation of SOH.
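For reference, the accuracy metric quoted above can be computed as shown below. This uses one common definition of root mean squared percent error: the square root of the mean squared relative error, scaled to a percentage. The abstract does not state the paper's exact normalisation, so treat this definition as an assumption. The capacity values are made-up numbers for illustration.

```python
import math
from typing import Sequence


def rmspe(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean squared percent error: 100 * sqrt(mean(((pred - actual) / actual) ** 2))."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    sq_rel = [((p - a) / a) ** 2 for a, p in zip(actual, predicted)]
    return 100.0 * math.sqrt(sum(sq_rel) / len(sq_rel))


# Hypothetical measured vs. estimated capacities (Ah) for three cells.
measured = [1.05, 1.00, 0.95]
estimated = [1.06, 0.99, 0.95]
print(f"RMSPE = {rmspe(measured, estimated):.3f} %")
```

Because each error is divided by the true capacity, the metric weights errors on already-degraded cells slightly more heavily than absolute RMSE would.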

Symbiotic System Design for Safe and Resilient Autonomous Robotics in Offshore Wind Farms

Jan 23, 2021

Abstract: To reduce Operation and Maintenance (O&M) costs on offshore wind farms, where 80% of the O&M cost relates to deploying personnel, the offshore wind sector looks to robotics and Artificial Intelligence (AI) for solutions. Barriers to Beyond Visual Line of Sight (BVLOS) robotics include operational safety compliance and resilience, which inhibit the commercialization of autonomous services offshore. To address safety and resilience challenges, we propose a symbiotic system, reflecting the lifecycle learning and co-evolution of robotic platforms and remote human operators, with knowledge sharing for mutual gain. Our methodology enables the run-time verification of safety, reliability and resilience during autonomous missions. We synchronize digital models of the robot, environment and infrastructure, and integrate front-end analytics and bidirectional communication for autonomous adaptive mission planning and situation reporting to a remote operator. A reliability ontology for the deployed robot, based on our holistic hierarchical-relational model, supports computationally efficient platform data analysis. We analyze the mission status and diagnostics of critical sub-systems within the robot to provide automatic updates to our run-time reliability ontology, enabling faults to be translated into failure modes for decision making during the mission. We demonstrate an asset inspection mission within a confined space and employ millimeter-wave sensing to enhance situational awareness by detecting the presence of obscured personnel, mitigating risk. Our results demonstrate that a symbiotic system provides an enhanced resilience capability to BVLOS missions. A symbiotic system addresses the operational challenges and reprioritization of autonomous mission objectives. This advances the technology required to achieve fully trustworthy autonomous systems.
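The abstract describes a hierarchical reliability model in which diagnostics are translated into failure modes at run time. It does not detail the authors' ontology, so the following is only a hypothetical sketch of the general idea:

- Components belong to sub-systems.
- Each diagnostic fault code maps to a failure mode with an assumed severity.
- The worst active severity drives a mission-level decision.

Every class name, fault code, severity and decision rule here is invented for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical severity scale and decision rule (higher = more serious).
SEVERITY_DECISION = {0: "continue mission", 1: "continue and report to operator",
                     2: "replan mission", 3: "abort and return to base"}


@dataclass
class Component:
    name: str
    # Hypothetical mapping: diagnostic fault code -> (failure mode, severity).
    fault_modes: dict[str, tuple[str, int]]
    active_faults: set[str] = field(default_factory=set)

    def active_failure_modes(self) -> list[tuple[str, int]]:
        """Translate currently active faults into known failure modes."""
        return [self.fault_modes[f] for f in self.active_faults if f in self.fault_modes]


@dataclass
class Subsystem:
    name: str
    components: list[Component]

    def worst_severity(self) -> int:
        severities = [sev for c in self.components for _, sev in c.active_failure_modes()]
        return max(severities, default=0)


@dataclass
class Robot:
    subsystems: list[Subsystem]

    def report_fault(self, component_name: str, fault_code: str) -> None:
        """Run-time update: attach a diagnostic fault to the named component."""
        for subsystem in self.subsystems:
            for component in subsystem.components:
                if component.name == component_name:
                    component.active_faults.add(fault_code)
                    return
        raise KeyError(component_name)

    def decision(self) -> str:
        """Mission decision driven by the worst active failure mode."""
        worst = max((s.worst_severity() for s in self.subsystems), default=0)
        return SEVERITY_DECISION[worst]


battery = Component("battery", {"CELL_OVERTEMP": ("thermal runaway risk", 3),
                                "LOW_SOC": ("insufficient energy", 2)})
lidar = Component("lidar", {"NO_RETURNS": ("loss of perception", 2)})
robot = Robot([Subsystem("power", [battery]), Subsystem("sensing", [lidar])])

print(robot.decision())
robot.report_fault("lidar", "NO_RETURNS")
print(robot.decision())
```

Keeping the fault-to-failure-mode mapping in the component and aggregating upwards means a new diagnostic only touches one node of the hierarchy.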

BayLIME: Bayesian Local Interpretable Model-Agnostic Explanations

Dec 05, 2020

Abstract: A key impediment to the use of AI is its lack of transparency, especially in safety- and security-critical applications. The black-box nature of AI systems prevents humans from obtaining direct explanations of how the AI makes predictions, which has stimulated Explainable AI (XAI), a research field that aims to improve the trust and transparency of AI systems. In this paper, we introduce a novel XAI technique, BayLIME, which is a Bayesian modification of the widely used XAI approach LIME. BayLIME exploits prior knowledge to improve both the consistency of repeated explanations of a single prediction and the robustness to kernel settings. Both theoretical analysis and extensive experiments are conducted to support our conclusions.
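The core difference from LIME can be illustrated in one dimension. LIME fits a kernel-weighted linear surrogate to perturbed samples around the instance being explained. A Bayesian variant places a prior on the surrogate's coefficient, so the explanation is pulled towards prior knowledge when the weighted evidence is weak.

The sketch below makes several simplifying assumptions that are not BayLIME's actual implementation:

- a single feature with no intercept;
- a Gaussian prior with mean `mu0` and precision `alpha`;
- Gaussian noise with known precision `beta`;
- an exponential kernel;
- a `sin` stand-in for the black-box model.

BayLIME itself handles multiple features.

```python
import math
import random


def kernel_weight(distance: float, width: float) -> float:
    """Exponential kernel in the spirit of LIME: nearby samples count more."""
    return math.exp(-(distance ** 2) / width ** 2)


def bayesian_weighted_slope(xs, ys, weights, mu0, alpha, beta):
    """Posterior (mean, variance) of slope b in y = b * x + noise.

    Prior: b ~ N(mu0, 1 / alpha). Sample i has noise precision beta * weights[i].
    """
    precision = alpha + beta * sum(w * x * x for x, w in zip(xs, weights))
    mean = (alpha * mu0 + beta * sum(w * x * y for x, y, w in zip(xs, ys, weights))) / precision
    return mean, 1.0 / precision


def black_box(x: float) -> float:
    """Hypothetical model being explained."""
    return math.sin(x)


x0 = 0.5                      # instance to explain
rng = random.Random(0)        # fixed seed for reproducible perturbations
offsets = [rng.gauss(0.0, 0.3) for _ in range(10)]
deltas = [black_box(x0 + d) - black_box(x0) for d in offsets]
weights = [kernel_weight(d, width=0.25) for d in offsets]

for alpha in (1e-6, 1.0, 100.0):  # weak -> strong prior centred on mu0 = 1.0
    mean, var = bayesian_weighted_slope(offsets, deltas, weights,
                                        mu0=1.0, alpha=alpha, beta=100.0)
    print(f"alpha={alpha:g}: slope {mean:.3f} +/- {math.sqrt(var):.3f}")
```

With a negligible prior, the slope approaches the plain weighted least-squares (LIME-style) estimate, close to the local derivative `cos(0.5)`. As `alpha` grows, the estimate moves towards `mu0` and its posterior variance shrinks.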

Assessing Safety-Critical Systems from Operational Testing: A Study on Autonomous Vehicles

Aug 19, 2020

Abstract: Context: Demonstrating high reliability and safety for safety-critical systems (SCSs) remains a hard problem. Diverse evidence needs to be combined in a rigorous way: in particular, results of operational testing with other evidence from design and verification. Growing use of machine learning in SCSs, by precluding most established methods for gaining assurance, makes operational testing even more important for supporting safety and reliability claims. Objective: We use Autonomous Vehicles (AVs) as a current example to revisit the problem of demonstrating high reliability. AVs are making their debut on public roads: methods for assessing whether an AV is safe enough are urgently needed. We demonstrate how to answer five questions that would arise in assessing an AV type, starting with those proposed by a highly cited study. Method: We apply new theorems extending Conservative Bayesian Inference (CBI), which exploit the rigour of Bayesian methods while reducing the risk of involuntary misuse associated with now-common applications of Bayesian inference; we define additional conditions needed for applying these methods to AVs. Results: Prior knowledge can bring substantial advantages if the AV design allows strong expectations of safety before road testing. We also show how naive attempts at conservative assessment may lead to over-optimism instead; why extrapolating the trend of disengagements is not suitable for safety claims; and how to use knowledge that an AV has moved to a less stressful environment. Conclusion: While some reliability targets will remain too high to be practically verifiable, CBI removes a major source of doubt: it allows use of prior knowledge without inducing dangerously optimistic biases. For certain ranges of required reliability and prior beliefs, CBI thus supports feasible, sound arguments. Useful conservative claims can be derived from limited prior knowledge.
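It helps to recall the classical calculation that Bayesian approaches such as CBI are usually contrasted with. That calculation shows why some reliability targets remain too high to be verified by testing alone. It is frequentist and non-Bayesian, and it is not CBI itself.

Assume failures occur as a Poisson process with rate `lam` per mile, and `n` miles are driven without failure. The claim `rate < lam` then holds at confidence `C` once `exp(-lam * n) <= 1 - C`, that is, once `n >= -ln(1 - C) / lam`. The target rates below are hypothetical round numbers.

```python
import math


def failure_free_miles(target_rate_per_mile: float, confidence: float) -> float:
    """Failure-free miles needed to claim rate < target at the given confidence.

    Under a Poisson model, P(no failures in n miles | rate = target) = exp(-target * n);
    requiring this to be at most 1 - confidence gives n = -ln(1 - confidence) / target.
    """
    if not 0.0 < confidence < 1.0 or target_rate_per_mile <= 0.0:
        raise ValueError("need 0 < confidence < 1 and a positive target rate")
    return -math.log(1.0 - confidence) / target_rate_per_mile


for rate in (1e-6, 1e-7, 1e-8):  # hypothetical failure-rate targets per mile
    print(f"rate < {rate:g}/mile at 95%: {failure_free_miles(rate, 0.95):.3g} miles")
```

The required mileage scales as `1 / lam`, so each tenfold tightening of the target needs ten times more failure-free driving. Prior knowledge, used conservatively, is what CBI brings to reduce that burden.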

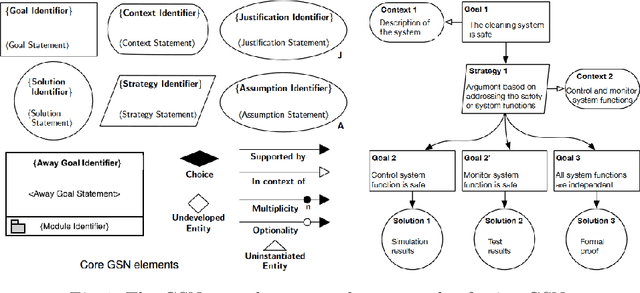

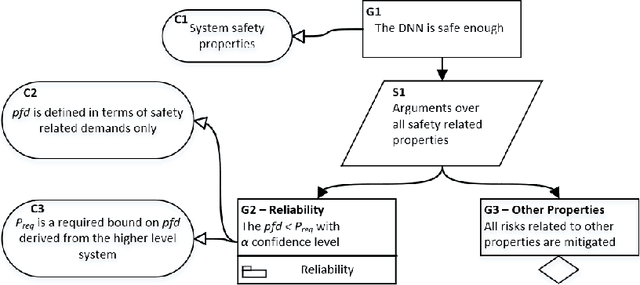

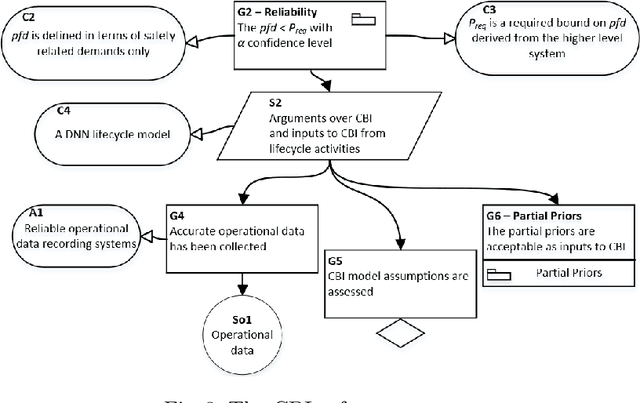

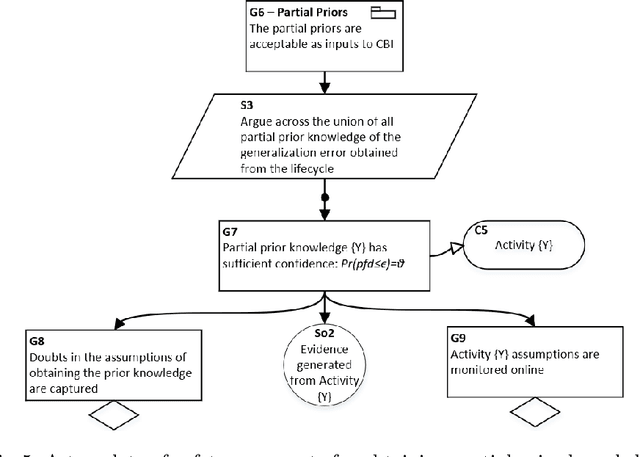

A Safety Framework for Critical Systems Utilising Deep Neural Networks

Mar 07, 2020

Abstract: Increasingly sophisticated mathematical modelling processes from Machine Learning are being used to analyse complex data. However, the performance and explainability of these models within practical critical systems require rigorous and continuous verification of their safe utilisation. Working towards addressing this challenge, this paper presents a novel, principled safety argument framework for critical systems that utilise deep neural networks. The approach allows various forms of predictions, e.g., the future reliability of passing some demands, or confidence in a required reliability level. It is supported by a Bayesian analysis using operational data and recent verification and validation techniques for deep learning. The prediction is conservative: it starts with partial prior knowledge obtained from lifecycle activities and then determines the worst-case prediction. Open challenges are also identified.
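Here is a simple instance of a conservative, worst-case prediction from partial prior knowledge. Suppose the only prior knowledge is that the probability of failure on demand (pfd) satisfies `P(pfd <= eps) >= theta`, and no operational data is used. The reliability of passing the next `k` independent demands is `E[(1 - pfd)^k]`.

Since `(1 - x)^k` is decreasing in `x`, the worst prior consistent with that knowledge puts mass `theta` at `pfd = eps` and the remaining mass at `pfd = 1`. This gives the lower bound `theta * (1 - eps)^k`.

This is an illustrative sketch under those assumptions, not the paper's framework. The paper's framework also incorporates operational data and deep-learning verification evidence. The values of `theta`, `eps` and `k` are hypothetical.

```python
def worst_case_reliability(theta: float, eps: float, k: int) -> float:
    """Lower bound on P(next k demands all succeed) given only P(pfd <= eps) >= theta.

    E[(1 - pfd)^k] >= P(pfd <= eps) * (1 - eps)^k >= theta * (1 - eps)^k,
    attained by the two-point prior {eps: theta, 1: 1 - theta}.
    """
    if not (0.0 <= theta <= 1.0 and 0.0 <= eps <= 1.0 and k >= 0):
        raise ValueError("theta and eps must be in [0, 1] and k non-negative")
    return theta * (1.0 - eps) ** k


def expected_reliability(prior: dict[float, float], k: int) -> float:
    """E[(1 - pfd)^k] for a discrete prior {pfd value: probability}."""
    return sum(p * (1.0 - x) ** k for x, p in prior.items())


# Hypothetical prior knowledge: 90% confidence that pfd <= 1e-4.
theta, eps, k = 0.9, 1e-4, 1000
bound = worst_case_reliability(theta, eps, k)
worst_prior = {eps: theta, 1.0: 1.0 - theta}
print(f"worst-case P(pass next {k} demands) = {bound:.4f}")
print(f"check with worst-case prior: {expected_reliability(worst_prior, k):.4f}")
```

Any other prior satisfying the same constraint yields a reliability at least as high. This is what makes the bound conservative.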

Consider ethical and social challenges in smart grid research

Nov 26, 2019

Abstract: Artificial Intelligence and Machine Learning are increasingly seen as key technologies for building more decentralised and resilient energy grids, but researchers must consider the ethical and social implications of their use.

* Preprint of paper published in Nature Machine Intelligence, vol. 1 (25 Nov. 2019)

Towards Integrating Formal Verification of Autonomous Robots with Battery Prognostics and Health Management

Aug 22, 2019

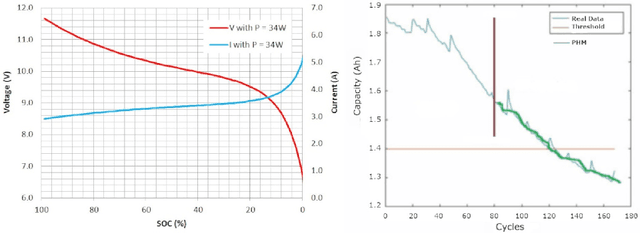

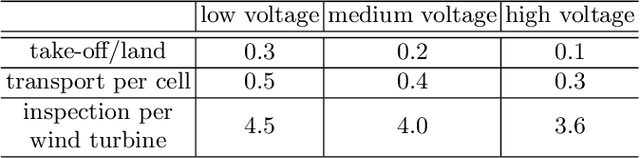

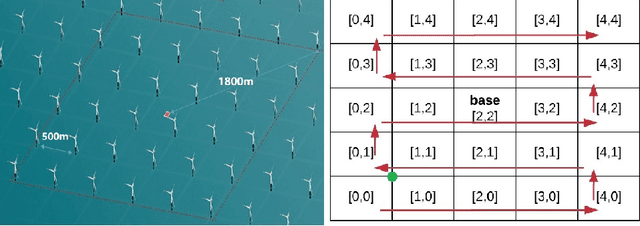

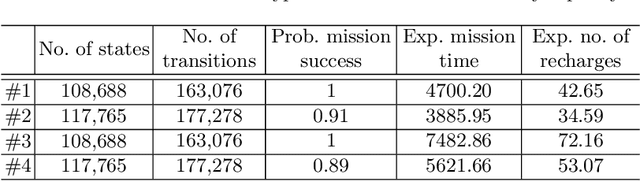

Abstract: The battery is a key component of autonomous robots. Its performance limits the robot's safety and reliability. Unlike liquid fuel, a battery, as a chemical device, exhibits complicated features, including (i) capacity fade over successive recharges and (ii) an increasing discharge rate as the state of charge (SOC) goes down for a given power demand. Existing formal verification studies of autonomous robots, when considering energy constraints, formalise the energy component in a generic manner, so that these battery features are overlooked. In this paper, we model an unmanned aerial vehicle (UAV) inspection mission on a wind farm and, via probabilistic model checking in PRISM, show (i) how the battery features may significantly affect the verification results in practical cases; and (ii) how the battery features, together with dynamic environments and battery safety strategies, jointly affect the verification results. Potential solutions to explicitly integrate battery prognostics and health management (PHM) with formal verification of autonomous robots are also discussed to motivate future work.
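The two battery features named above can be illustrated with a toy discharge model. The sketch assumes two hypothetical models, neither taken from the paper:

- an open-circuit voltage that falls linearly with SOC;
- a capacity that fades by a fixed fraction per recharge cycle.

Real cells need a fitted voltage curve and degradation model.

Under a constant power demand `P`, the current is `I = P / V(SOC)`. The discharge rate therefore rises as SOC drops, and a faded battery reaches a reserve threshold sooner than a generic fixed-energy model would suggest. All parameter values are hypothetical.

```python
def ocv(soc: float, v_empty: float = 3.0, v_full: float = 4.2) -> float:
    """Hypothetical linear open-circuit voltage curve for one cell (V)."""
    return v_empty + (v_full - v_empty) * soc


def faded_capacity(initial_ah: float, cycles: int, fade_per_cycle: float = 0.0005) -> float:
    """Hypothetical capacity fade: a fixed fraction of capacity lost per cycle."""
    return initial_ah * (1.0 - fade_per_cycle) ** cycles


def flight_time_min(capacity_ah: float, power_w: float, soc_start: float = 1.0,
                    soc_reserve: float = 0.2, dt_s: float = 1.0) -> float:
    """Minutes until SOC falls to the reserve level under constant power demand.

    Each step draws I = P / V(SOC), so the current grows as SOC (and voltage) drops.
    """
    soc, t = soc_start, 0.0
    while soc > soc_reserve:
        current_a = power_w / ocv(soc)
        soc -= current_a * dt_s / 3600.0 / capacity_ah  # coulomb counting
        t += dt_s
    return t / 60.0


power = 60.0  # hypothetical constant power draw per cell (W)
for cycles in (0, 200, 400):
    cap = faded_capacity(5.0, cycles)
    print(f"after {cycles} cycles: {cap:.2f} Ah, "
          f"{flight_time_min(cap, power):.1f} min to 20% SOC")
```

In a probabilistic model, effects like these would enter as SOC- and age-dependent transition rates rather than a single fixed energy budget.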
