James Sharp

Abstraction and Symbolic Execution of Deep Neural Networks with Bayesian Approximation of Hidden Features

Mar 05, 2021

Abstract:Intensive research has been conducted on the verification and validation of deep neural networks (DNNs), aiming to understand if, and how, DNNs can be applied to safety critical applications. However, existing verification and validation techniques are limited by their scalability, over both the size of the DNN and the size of the dataset. In this paper, we propose a novel abstraction method which abstracts a DNN and a dataset into a Bayesian network (BN). We make use of dimensionality reduction techniques to identify hidden features that have been learned by hidden layers of the DNN, and associate each hidden feature with a node of the BN. On this BN, we can conduct probabilistic inference to understand the behaviours of the DNN processing data. More importantly, we can derive a runtime monitoring approach to detect in operational time rare inputs and covariate shift of the input data. We can also adapt existing structural coverage-guided testing techniques (i.e., based on low-level elements of the DNN such as neurons), in order to generate test cases that better exercise hidden features. We implement and evaluate the BN abstraction technique using our DeepConcolic tool available at https://github.com/TrustAI/DeepConcolic.

Formal Verification of Robustness and Resilience of Learning-Enabled State Estimation Systems for Robotics

Oct 16, 2020

Abstract:This paper presents a formal verification guided approach for a principled design and implementation of robust and resilient learning-enabled systems. We focus on learning-enabled state estimation systems (LE-SESs), which have been widely used in robotics applications to determine the current state (e.g., location, speed, direction, etc.) of a complex system. The LE-SESs are networked systems composed of a set of connected components including Bayes filters for localisation, and neural networks for processing sensory input. We study LE-SESs from the perspective of formal verification, which determines the satisfiability of a system model against the specified properties. Over LE-SESs, we investigate two key properties - robustness and resilience - and provide their formal definitions. To enable formal verification, we reduce the LE-SESs to a novel class of labelled transition systems, named {PO}2-LTS in the paper, and formally express the properties as constrained optimisation objectives. We prove that the robustness verification is NP-complete. Based on {PO}2-LTS and the optimisation objectives, practical verification algorithms are developed to check the satisfiability of the properties on the LE-SESs. As a major case study, we interrogate a real-world dynamic tracking system which uses a single Kalman Filter (KF) - a special case of Bayes filter - to localise and track a ground vehicle. Its perception system, based on convolutional neural networks, processes a high-resolution Wide Area Motion Imagery (WAMI) data stream. Experimental results show that our algorithms can not only verify the properties of the WAMI tracking system but also provide representative examples, the latter of which inspired us to take an enhanced LE-SESs design where runtime monitors or joint-KFs are required. Experimental results confirm the improvement of the robustness of the enhanced design.

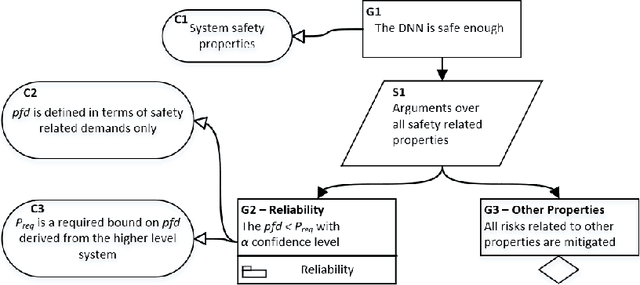

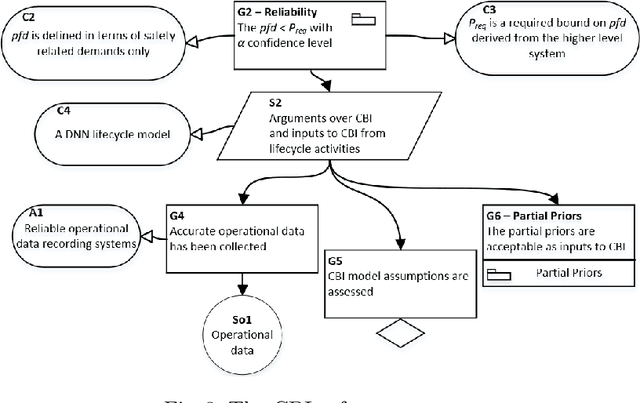

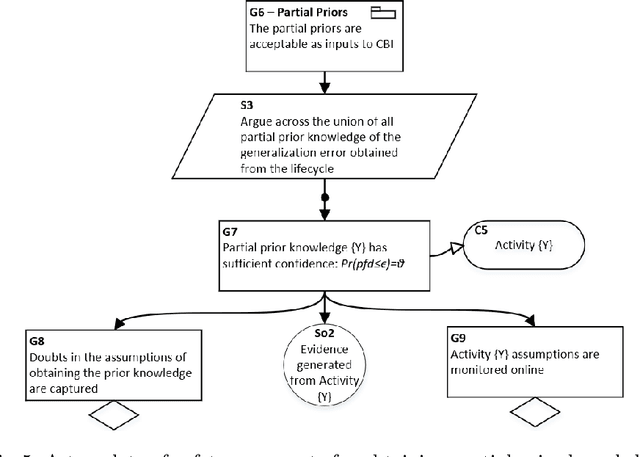

A Safety Framework for Critical Systems Utilising Deep Neural Networks

Mar 07, 2020

Abstract:Increasingly sophisticated mathematical modelling processes from Machine Learning are being used to analyse complex data. However, the performance and explainability of these models within practical critical systems requires a rigorous and continuous verification of their safe utilisation. Working towards addressing this challenge, this paper presents a principled novel safety argument framework for critical systems that utilise deep neural networks. The approach allows various forms of predictions, e.g., future reliability of passing some demands, or confidence on a required reliability level. It is supported by a Bayesian analysis using operational data and the recent verification and validation techniques for deep learning. The prediction is conservative -- it starts with partial prior knowledge obtained from lifecycle activities and then determines the worst-case prediction. Open challenges are also identified.

Reliability Validation of Learning Enabled Vehicle Tracking

Feb 06, 2020

Abstract:This paper studies the reliability of a real-world learning-enabled system, which conducts dynamic vehicle tracking based on a high-resolution wide-area motion imagery input. The system consists of multiple neural network components -- to process the imagery inputs -- and multiple symbolic (Kalman filter) components -- to analyse the processed information for vehicle tracking. It is known that neural networks suffer from adversarial examples, which make them lack robustness. However, it is unclear if and how the adversarial examples over learning components can affect the overall system-level reliability. By integrating a coverage-guided neural network testing tool, DeepConcolic, with the vehicle tracking system, we found that (1) the overall system can be resilient to some adversarial examples thanks to the existence of other components, and (2) the overall system presents an extra level of uncertainty which cannot be determined by analysing the deep learning components only. This research suggests the need for novel verification and validation methods for learning-enabled systems.

Test Metrics for Recurrent Neural Networks

Nov 05, 2019

Abstract:Recurrent neural networks (RNNs) have been applied to a broad range of application areas such as natural language processing, drug discovery, and video recognition. This paper develops a coverage-guided test framework, including three test metrics and a mutation-based test case generation method, for the validation of a major class of RNNs, i.e., long short-term memory networks (LSTMs). The test metrics are designed with respect to the internal structures of the LSTM layers to quantify the information of the forget gate, the one-step information change of an aggregate hidden state, and the multi-step information evolution of positive and negative aggregate hidden state, respectively. We apply the test framework to several typical LSTM applications, including a network trained on IMDB movie reviews for sentiment analysis, a network trained on MNIST dataset for image classification, and a network trained on a lipophilicity dataset for scientific machine learning. Our experimental results show that the coverage-guided testing can be used to not only extensively exploit the behaviour of the LSTM layer in order to discover the safety loopholes (such as adversarial examples) but also help understand the internal mechanism of how the LSTM layer processes data.

testRNN: Coverage-guided Testing on Recurrent Neural Networks

Jun 20, 2019

Abstract:Recurrent neural networks (RNNs) have been widely applied to various sequential tasks such as text processing, video recognition, and molecular property prediction. We introduce the first coverage-guided testing tool, coined testRNN, for the verification and validation of a major class of RNNs, long short-term memory networks (LSTMs). The tool implements a generic mutation-based test case generation method, and it empirically evaluates the robustness of a network using three novel LSTM structural test coverage metrics. Moreover, it is able to help the model designer go through the internal data flow processing of the LSTM layer. The tool is available through: https://github.com/TrustAI/testRNN under the BSD 3-Clause licence.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge