Jie Meng

A Survey of New Mid-Band/FR3 for 6G: Channel Measurement, Characterization and Modeling in Outdoor Environment

Apr 09, 2025

Abstract:The new mid-band (6-24 GHz) has attracted significant attention from both academia and industry, as it offers continuous bandwidth and combines the coverage benefits of low frequencies with the capacity advantages of high frequencies. Since outdoor environments represent the primary application scenario for mobile communications, this paper presents the first comprehensive review and summary of multi-scenario and multi-frequency channel characteristics based on extensive outdoor new mid-band channel measurement data, including UMa, UMi, and O2I. Specifically, a survey of the progress of the channel characteristics is presented, such as path loss, delay spread, angular spread, channel sparsity, capacity and near-field spatial non-stationary characteristics. Then, considering that satellite communication will be an important component of future communication systems, we examine the impact of clutter loss in air-ground communications. Our analysis of the frequency dependence of mid-band clutter loss suggests that its impact is not significant. Additionally, given that penetration loss is frequency-dependent, we summarize its variation within the FR3 band. Based on experimental results, comparisons with the standard model reveal that while the 3GPP TR 38.901 model remains a useful reference for penetration loss in wood and glass, it shows significant deviations for concrete and glass, indicating the need for further refinement. In summary, the findings of this survey provide both empirical data and theoretical support for the deployment of mid-band in future communication systems, as well as guidance for optimizing mid-band base station deployment in the outdoor environment. This survey offers a reference for improving standard models and advancing channel modeling.

Safeguarding Large Language Models: A Survey

Jun 03, 2024

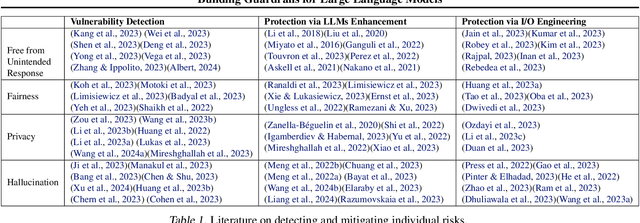

Abstract:In the burgeoning field of Large Language Models (LLMs), developing a robust safety mechanism, colloquially known as "safeguards" or "guardrails", has become imperative to ensure the ethical use of LLMs within prescribed boundaries. This article provides a systematic literature review on the current status of this critical mechanism. It discusses its major challenges and how it can be enhanced into a comprehensive mechanism dealing with ethical issues in various contexts. First, the paper elucidates the current landscape of safeguarding mechanisms that major LLM service providers and the open-source community employ. This is followed by the techniques to evaluate, analyze, and enhance some (un)desirable properties that a guardrail might want to enforce, such as hallucinations, fairness, privacy, and so on. Based on them, we review techniques to circumvent these controls (i.e., attacks), to defend against these attacks, and to reinforce the guardrails. While the techniques mentioned above represent the current status and the active research trends, we also discuss several challenges that cannot be easily dealt with by these methods and present our vision of how to implement a comprehensive guardrail through full consideration of a multi-disciplinary approach, neural-symbolic methods, and the systems development lifecycle.

Building Guardrails for Large Language Models

Feb 02, 2024

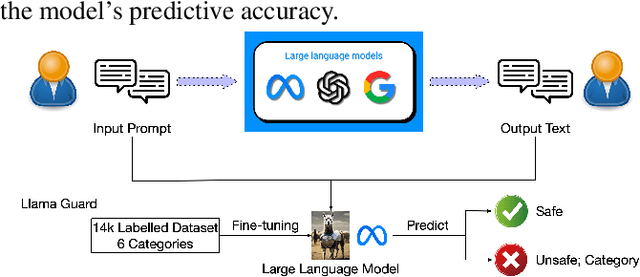

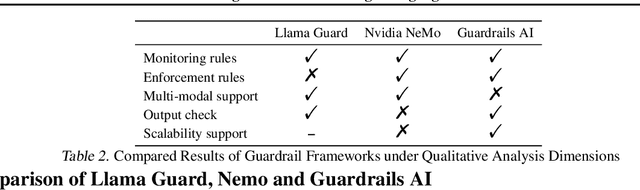

Abstract:As Large Language Models (LLMs) become more integrated into our daily lives, it is crucial to identify and mitigate their risks, especially when the risks can have profound impacts on human users and societies. Guardrails, which filter the inputs or outputs of LLMs, have emerged as a core safeguarding technology. This position paper takes a deep look at current open-source solutions (Llama Guard, Nvidia NeMo, Guardrails AI), and discusses the challenges and the road towards building more complete solutions. Drawing on robust evidence from previous research, we advocate for a systematic approach to construct guardrails for LLMs, based on comprehensive consideration of diverse contexts across various LLM applications. We propose employing socio-technical methods through collaboration with a multi-disciplinary team to pinpoint precise technical requirements, exploring advanced neural-symbolic implementations to embrace the complexity of the requirements, and developing verification and testing to ensure the utmost quality of the final product.

Formal Verification of Robustness and Resilience of Learning-Enabled State Estimation Systems for Robotics

Oct 16, 2020

Abstract:This paper presents a formal-verification-guided approach for a principled design and implementation of robust and resilient learning-enabled systems. We focus on learning-enabled state estimation systems (LE-SESs), which have been widely used in robotics applications to determine the current state (e.g., location, speed, direction, etc.) of a complex system. The LE-SESs are networked systems composed of a set of connected components including Bayes filters for localisation, and neural networks for processing sensory input. We study LE-SESs from the perspective of formal verification, which determines the satisfiability of a system model against the specified properties. Over LE-SESs, we investigate two key properties - robustness and resilience - and provide their formal definitions. To enable formal verification, we reduce the LE-SESs to a novel class of labelled transition systems, named {PO}^2-LTS in the paper, and formally express the properties as constrained optimisation objectives. We prove that the robustness verification is NP-complete. Based on {PO}^2-LTS and the optimisation objectives, practical verification algorithms are developed to check the satisfiability of the properties on the LE-SESs. As a major case study, we interrogate a real-world dynamic tracking system which uses a single Kalman Filter (KF) - a special case of Bayes filter - to localise and track a ground vehicle. Its perception system, based on convolutional neural networks, processes a high-resolution Wide Area Motion Imagery (WAMI) data stream. Experimental results show that our algorithms can not only verify the properties of the WAMI tracking system but also provide representative examples, the latter of which inspired us to adopt an enhanced LE-SES design where runtime monitors or joint-KFs are required. Experimental results confirm the improvement of the robustness of the enhanced design.

CasGCN: Predicting future cascade growth based on information diffusion graph

Sep 10, 2020

Abstract:Sudden bursts of information cascades can lead to unexpected consequences such as extreme opinions, changes in fashion trends, and uncontrollable spread of rumors. Effectively predicting a cascade's future size has become an important problem, especially for large-scale cascades on social media platforms such as Twitter and Weibo. However, existing methods are insufficient in dealing with this challenging prediction problem. Conventional methods heavily rely on either handcrafted features or unrealistic assumptions. End-to-end deep learning models, such as recurrent neural networks, are not suitable to work with graphical inputs directly and cannot handle structural information that is embedded in the cascade graphs. In this paper, we propose a novel deep learning architecture for cascade growth prediction, called CasGCN, which employs the graph convolutional network to extract structural features from a graphical input, followed by the application of the attention mechanism on both the extracted features and the temporal information before conducting cascade size prediction. We conduct experiments on two real-world cascade growth prediction scenarios (i.e., retweet popularity on Sina Weibo and academic paper citations on DBLP), with the experimental results showing that CasGCN achieves superior performance over several baseline methods, particularly when the cascades are of large scale.
