Simos Gerasimou

Quantifying Adversarial Uncertainty in Evidential Deep Learning using Conflict Resolution

Jun 06, 2025Abstract:Reliability of deep learning models is critical for deployment in high-stakes applications, where out-of-distribution or adversarial inputs may lead to detrimental outcomes. Evidential Deep Learning, an efficient paradigm for uncertainty quantification, models predictions as Dirichlet distributions of a single forward pass. However, EDL is particularly vulnerable to adversarially perturbed inputs, making overconfident errors. Conflict-aware Evidential Deep Learning (C-EDL) is a lightweight post-hoc uncertainty quantification approach that mitigates these issues, enhancing adversarial and OOD robustness without retraining. C-EDL generates diverse, task-preserving transformations per input and quantifies representational disagreement to calibrate uncertainty estimates when needed. C-EDL's conflict-aware prediction adjustment improves detection of OOD and adversarial inputs, maintaining high in-distribution accuracy and low computational overhead. Our experimental evaluation shows that C-EDL significantly outperforms state-of-the-art EDL variants and competitive baselines, achieving substantial reductions in coverage for OOD data (up to 55%) and adversarial data (up to 90%), across a range of datasets, attack types, and uncertainty metrics.

Adaptive Human-Robot Collaborative Missions using Hybrid Task Planning

Apr 09, 2025Abstract:Producing robust task plans in human-robot collaborative missions is a critical activity in order to increase the likelihood of these missions completing successfully. Despite the broad research body in the area, which considers different classes of constraints and uncertainties, its applicability is confined to relatively simple problems that can be comfortably addressed by the underpinning mathematically-based or heuristic-driven solver engines. In this paper, we introduce a hybrid approach that effectively solves the task planning problem by decomposing it into two intertwined parts, starting with the identification of a feasible plan and followed by its uncertainty augmentation and verification yielding a set of Pareto optimal plans. To enhance its robustness, adaptation tactics are devised for the evolving system requirements and agents' capabilities. We demonstrate our approach through an industrial case study involving workers and robots undertaking activities within a vineyard, showcasing the benefits of our hybrid approach both in the generation of feasible solutions and scalability compared to native planners.

Safe Reinforcement Learning in Black-Box Environments via Adaptive Shielding

May 28, 2024Abstract:Empowering safe exploration of reinforcement learning (RL) agents during training is a critical impediment towards deploying RL agents in many real-world scenarios. Training RL agents in unknown, black-box environments poses an even greater safety risk when prior knowledge of the domain/task is unavailable. We introduce ADVICE (Adaptive Shielding with a Contrastive Autoencoder), a novel post-shielding technique that distinguishes safe and unsafe features of state-action pairs during training, thus protecting the RL agent from executing actions that yield potentially hazardous outcomes. Our comprehensive experimental evaluation against state-of-the-art safe RL exploration techniques demonstrates how ADVICE can significantly reduce safety violations during training while maintaining a competitive outcome reward.

DeepKnowledge: Generalisation-Driven Deep Learning Testing

Mar 25, 2024

Abstract:Despite their unprecedented success, DNNs are notoriously fragile to small shifts in data distribution, demanding effective testing techniques that can assess their dependability. Despite recent advances in DNN testing, there is a lack of systematic testing approaches that assess the DNN's capability to generalise and operate comparably beyond data in their training distribution. We address this gap with DeepKnowledge, a systematic testing methodology for DNN-based systems founded on the theory of knowledge generalisation, which aims to enhance DNN robustness and reduce the residual risk of 'black box' models. Conforming to this theory, DeepKnowledge posits that core computational DNN units, termed Transfer Knowledge neurons, can generalise under domain shift. DeepKnowledge provides an objective confidence measurement on testing activities of DNN given data distribution shifts and uses this information to instrument a generalisation-informed test adequacy criterion to check the transfer knowledge capacity of a test set. Our empirical evaluation of several DNNs, across multiple datasets and state-of-the-art adversarial generation techniques demonstrates the usefulness and effectiveness of DeepKnowledge and its ability to support the engineering of more dependable DNNs. We report improvements of up to 10 percentage points over state-of-the-art coverage criteria for detecting adversarial attacks on several benchmarks, including MNIST, SVHN, and CIFAR.

Robust Uncertainty Quantification using Conformalised Monte Carlo Prediction

Aug 18, 2023

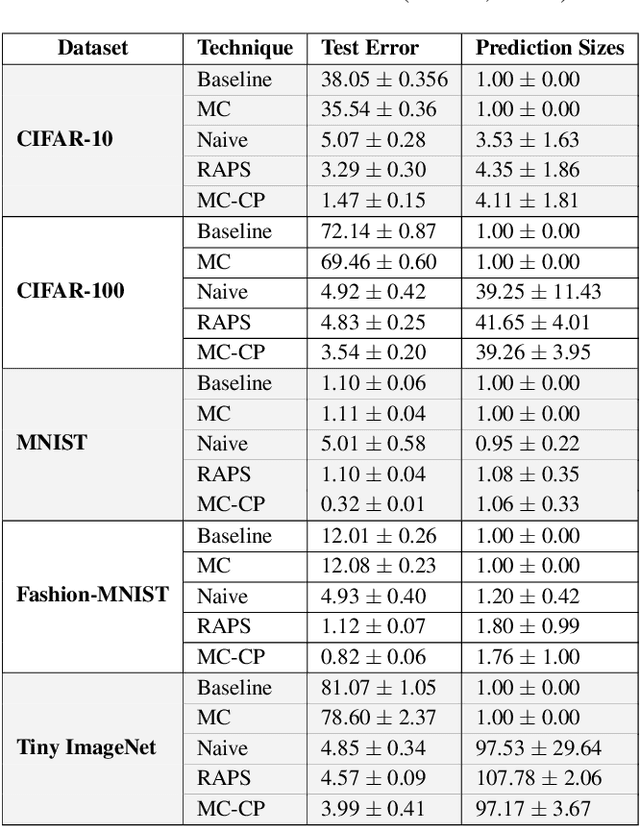

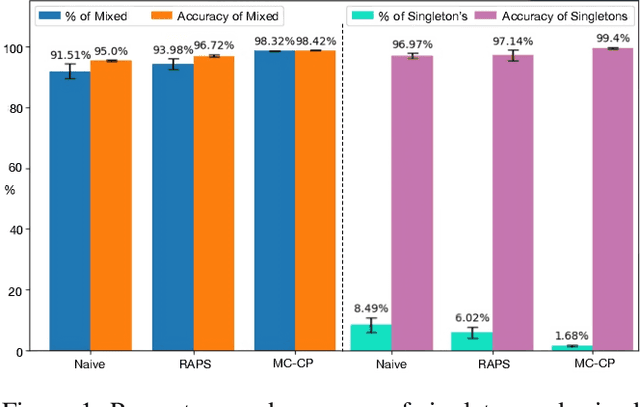

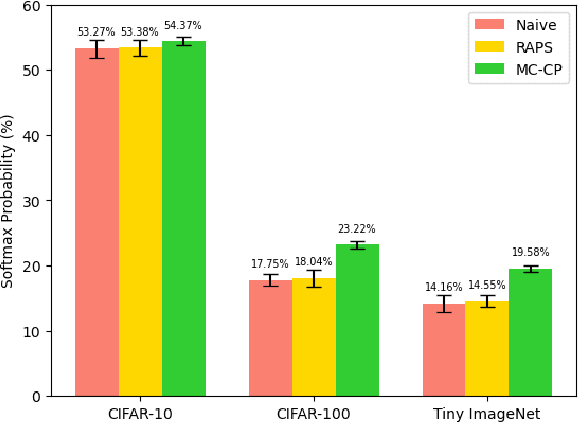

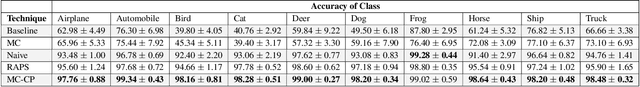

Abstract:Deploying deep learning models in safety-critical applications remains a very challenging task, mandating the provision of assurances for the dependable operation of these models. Uncertainty quantification (UQ) methods estimate the model's confidence per prediction, informing decision-making by considering the effect of randomness and model misspecification. Despite the advances of state-of-the-art UQ methods, they are computationally expensive or produce conservative prediction sets/intervals. We introduce MC-CP, a novel hybrid UQ method that combines a new adaptive Monte Carlo (MC) dropout method with conformal prediction (CP). MC-CP adaptively modulates the traditional MC dropout at runtime to save memory and computation resources, enabling predictions to be consumed by CP, yielding robust prediction sets/intervals. Throughout comprehensive experiments, we show that MC-CP delivers significant improvements over advanced UQ methods, like MC dropout, RAPS and CQR, both in classification and regression benchmarks. MC-CP can be easily added to existing models, making its deployment simple.

Bayesian Learning for the Robust Verification of Autonomous Robots

Mar 15, 2023Abstract:We develop a novel Bayesian learning framework that enables the runtime verification of autonomous robots performing critical missions in uncertain environments. Our framework exploits prior knowledge and observations of the verified robotic system to learn expected ranges of values for the occurrence rates of its events. We support both events observed regularly during system operation, and singular events such as catastrophic failures or the completion of difficult one-off tasks. Furthermore, we use the learnt event-rate ranges to assemble interval continuous-time Markov models, and we apply quantitative verification to these models to compute expected intervals of variation for key system properties. These intervals reflect the uncertainty intrinsic to many real-world systems, enabling the robust verification of their quantitative properties under parametric uncertainty. We apply the proposed framework to the case study of verification of an autonomous robotic mission for underwater infrastructure inspection and repair.

Fast Parametric Model Checking through Model Fragmentation

Feb 02, 2021

Abstract:Parametric model checking (PMC) computes algebraic formulae that express key non-functional properties of a system (reliability, performance, etc.) as rational functions of the system and environment parameters. In software engineering, PMC formulae can be used during design, e.g., to analyse the sensitivity of different system architectures to parametric variability, or to find optimal system configurations. They can also be used at runtime, e.g., to check if non-functional requirements are still satisfied after environmental changes, or to select new configurations after such changes. However, current PMC techniques do not scale well to systems with complex behaviour and more than a few parameters. Our paper introduces a fast PMC (fPMC) approach that overcomes this limitation, extending the applicability of PMC to a broader class of systems than previously possible. To this end, fPMC partitions the Markov models that PMC operates with into \emph{fragments} whose reachability properties are analysed independently, and obtains PMC reachability formulae by combining the results of these fragment analyses. To demonstrate the effectiveness of fPMC, we show how our fPMC tool can analyse three systems (taken from the research literature, and belonging to different application domains) with which current PMC techniques and tools struggle.

Supporting Robotic Software Migration Using Static Analysis and Model-Driven Engineering

Aug 05, 2020Abstract:The wide use of robotic systems contributed to developing robotic software highly coupled to the hardware platform running the robotic system. Due to increased maintenance cost or changing business priorities, the robotic hardware is infrequently upgraded, thus increasing the risk for technology stagnation. Reducing this risk entails migrating the system and its software to a new hardware platform. Conventional software engineering practices such as complete re-development and code-based migration, albeit useful in mitigating these obsolescence issues, they are time-consuming and overly expensive. Our RoboSMi model-driven approach supports the migration of the software controlling a robotic system between hardware platforms. First, RoboSMi executes static analysis on the robotic software of the source hardware platform to identify platform-dependent and platform-agnostic software constructs. By analysing a model that expresses the architecture of robotic components on the target platform, RoboSMi establishes the hardware configuration of those components and suggests software libraries for each component whose execution will enable the robotic software to control the components. Finally, RoboSMi through code-generation produces software for the target platform and indicates areas that require manual intervention by robotic engineers to complete the migration. We evaluate the applicability of RoboSMi and analyse the level of automation and performance provided from its use by migrating two robotic systems deployed for an environmental monitoring and a line following mission from a Propeller Activity Board to an Arduino Uno.

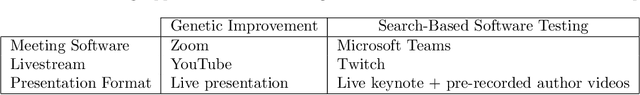

Genetic Improvement @ ICSE 2020

Jul 31, 2020

Abstract:Following Prof. Mark Harman of Facebook's keynote and formal presentations (which are recorded in the proceedings) there was a wide ranging discussion at the eighth international Genetic Improvement workshop, GI-2020 @ ICSE (held as part of the 42nd ACM/IEEE International Conference on Software Engineering on Friday 3rd July 2020). Topics included industry take up, human factors, explainabiloity (explainability, justifyability, exploitability) and GI benchmarks. We also contrast various recent online approaches (e.g. SBST 2020) to holding virtual computer science conferences and workshops via the WWW on the Internet without face-2-face interaction. Finally we speculate on how the Coronavirus Covid-19 Pandemic will affect research next year and into the future.

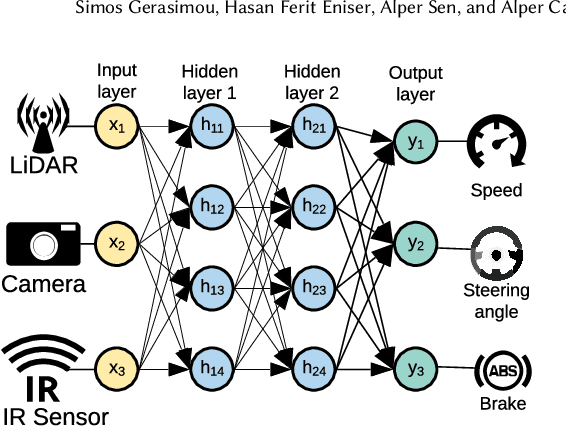

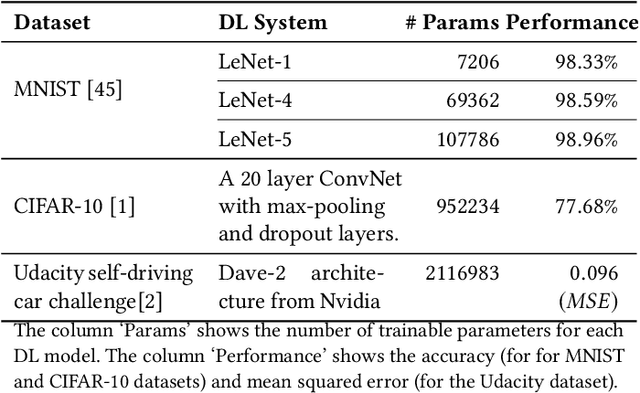

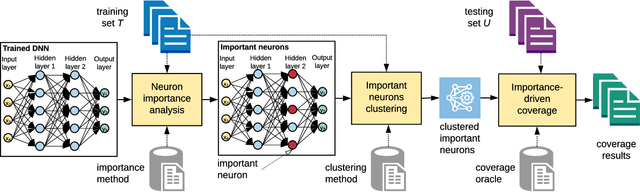

Importance-Driven Deep Learning System Testing

Feb 09, 2020

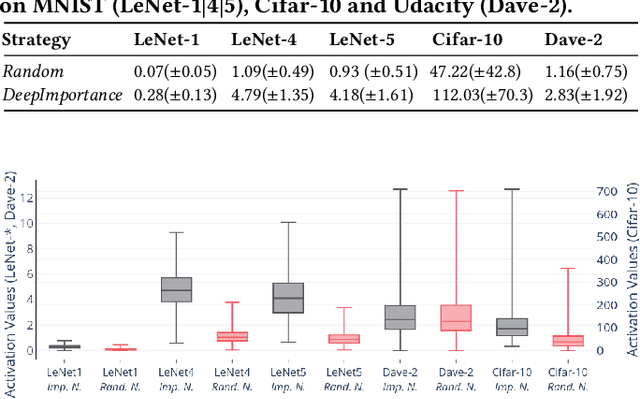

Abstract:Deep Learning (DL) systems are key enablers for engineering intelligent applications due to their ability to solve complex tasks such as image recognition and machine translation. Nevertheless, using DL systems in safety- and security-critical applications requires to provide testing evidence for their dependable operation. Recent research in this direction focuses on adapting testing criteria from traditional software engineering as a means of increasing confidence for their correct behaviour. However, they are inadequate in capturing the intrinsic properties exhibited by these systems. We bridge this gap by introducing DeepImportance, a systematic testing methodology accompanied by an Importance-Driven (IDC) test adequacy criterion for DL systems. Applying IDC enables to establish a layer-wise functional understanding of the importance of DL system components and use this information to assess the semantic diversity of a test set. Our empirical evaluation on several DL systems, across multiple DL datasets and with state-of-the-art adversarial generation techniques demonstrates the usefulness and effectiveness of DeepImportance and its ability to support the engineering of more robust DL systems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge