Theodore Lim

Addressing Non-Intervention Challenges via Resilient Robotics utilizing a Digital Twin

Mar 29, 2022

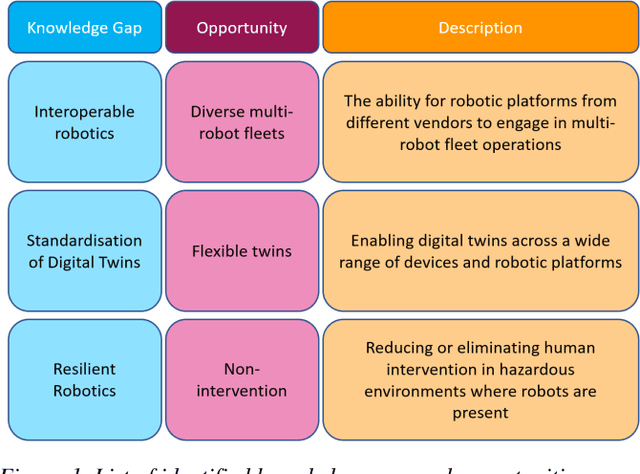

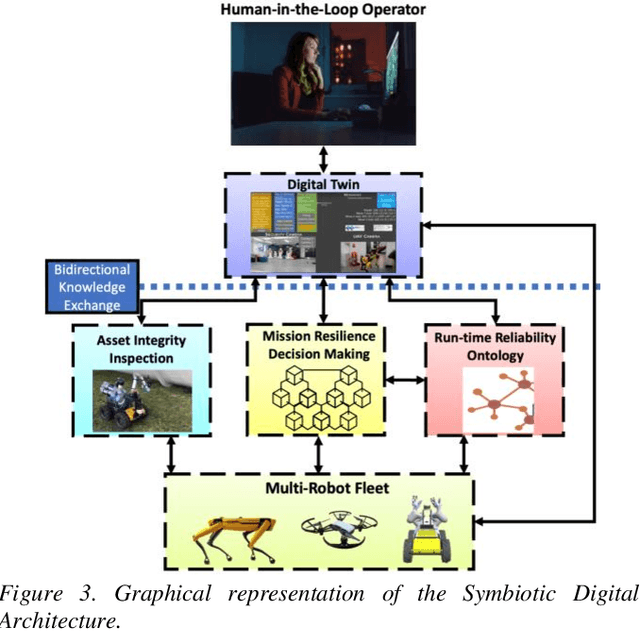

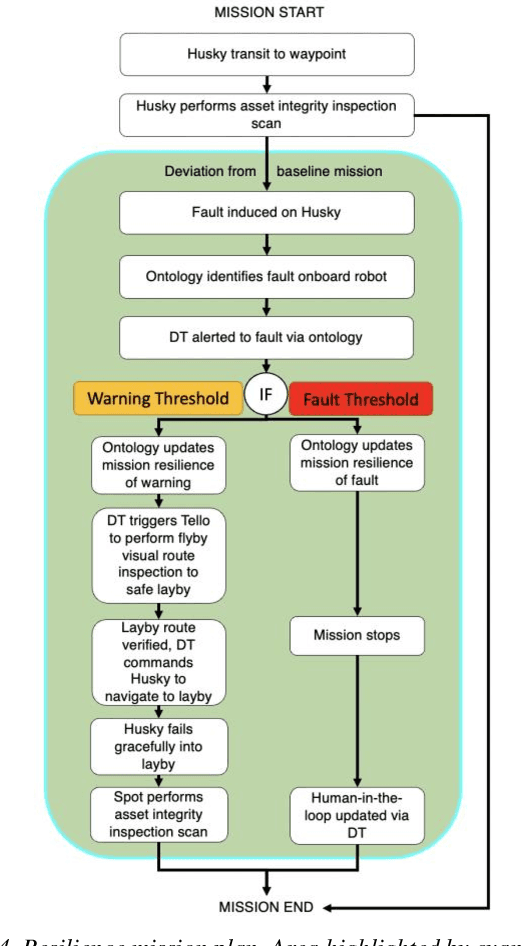

Abstract: Multi-robot systems face challenges in reducing human interventions, as they are often deployed in dangerous environments. It is therefore necessary to include a methodology to assess robot failure rates and so reduce the requirement for costly human intervention. To prevent such intervention, robots should be able to work together to ensure mission resilience. However, robotic platforms generally lack built-in interconnectivity with platforms from other vendors. This work aims to tackle this issue by enabling that interconnectivity through a bidirectional digital twin. The twin enables the human operator to transmit and receive information to and from the multi-robot fleet. This digital twin incorporates mission resilience, decision making and a run-time reliability ontology for failure detection to make the multi-robot fleet resilient. This enables the cooperation, corroboration, and collaboration of diverse robots, leveraging their capabilities to support the recovery of a failed robot.
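The failure-to-recovery loop described above can be sketched as a small twin that receives a robot's failure report and either asks a capable teammate to help or escalates to the operator. This is a minimal illustration under stated assumptions, not the paper's implementation: the `DigitalTwin` and `RobotState` classes, the `report_failure` method, the robot names and the capability labels are all hypothetical, and the reliability ontology and decision-making logic are reduced to a simple capability lookup.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class RobotState:
    """Twin-side record of one robot's last reported status (hypothetical schema)."""
    name: str
    capabilities: Set[str]
    healthy: bool = True
    failed_subsystem: Optional[str] = None


class DigitalTwin:
    """Minimal bidirectional twin: robots report in, the twin sends requests out."""

    def __init__(self) -> None:
        self.fleet: Dict[str, RobotState] = {}
        # Outbound messages as (recipient, text); recipients are robots or "operator".
        self.outbox: List[Tuple[str, str]] = []

    def register(self, robot: RobotState) -> None:
        self.fleet[robot.name] = robot

    def report_failure(self, name: str, subsystem: str, needed: str) -> Optional[str]:
        """Inbound failure report; returns the helper's name, or None if escalated."""
        robot = self.fleet[name]
        robot.healthy = False
        robot.failed_subsystem = subsystem
        helper = self._find_helper(needed, exclude=name)
        if helper is None:
            # No healthy robot can assist, so fall back to human intervention.
            self.outbox.append(("operator", f"intervention needed for {name}"))
            return None
        # Outbound request asking the helper to support recovery of the failed robot.
        self.outbox.append((helper.name, f"assist {name} with {subsystem}"))
        return helper.name

    def _find_helper(self, capability: str, exclude: str) -> Optional[RobotState]:
        # First healthy robot (in registration order) that offers the capability.
        for robot in self.fleet.values():
            if robot.name != exclude and robot.healthy and capability in robot.capabilities:
                return robot
        return None


if __name__ == "__main__":
    twin = DigitalTwin()
    twin.register(RobotState("quadruped", {"manipulator", "camera"}))
    twin.register(RobotState("wheeled", {"lidar"}))
    print(twin.report_failure("wheeled", "battery", needed="manipulator"))
    print(twin.outbox)
```

Escalating to the operator only when no robot can help mirrors the stated goal of reducing, rather than eliminating, human intervention.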

Millimeter-wave Foresight Sensing for Safety and Resilience in Autonomous Operations

Mar 24, 2022

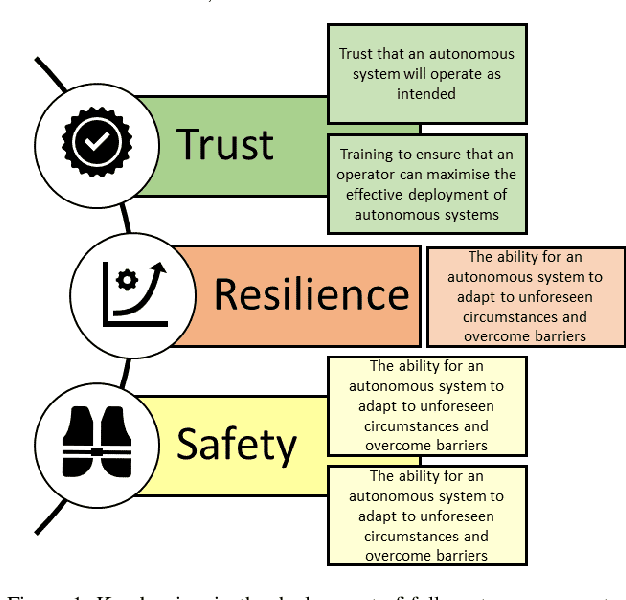

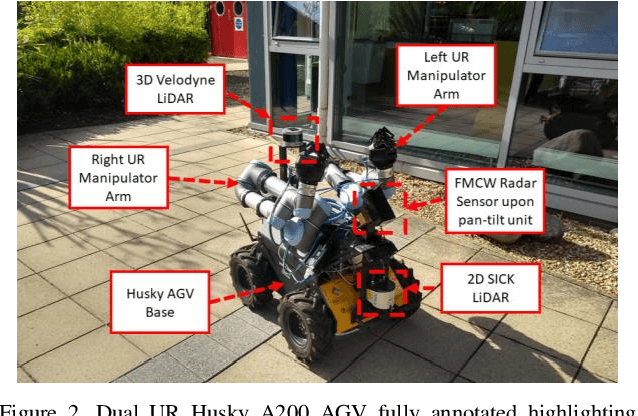

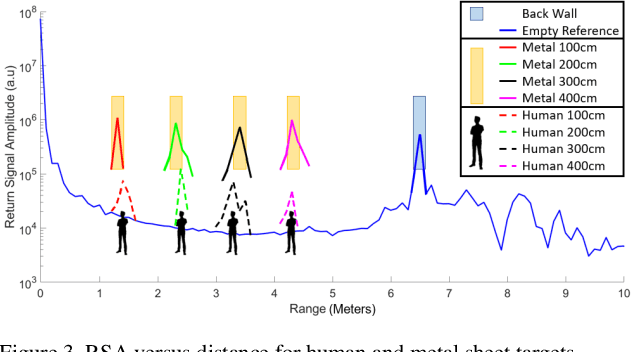

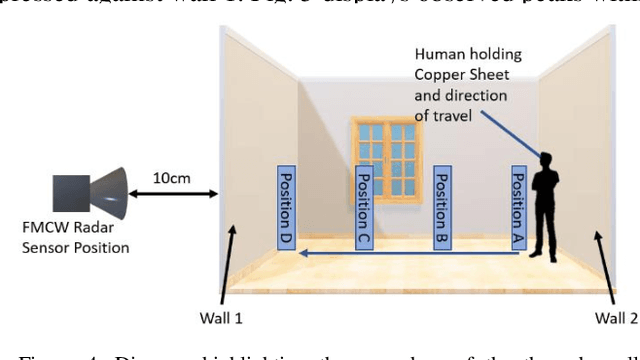

Abstract: Robotic platforms are highly programmable, scalable and versatile, making them suitable for many tasks including Inspection, Maintenance and Repair (IMR). Mobile robots face fewer restrictions on their operating environments, giving greater flexibility for operation at height, in dangerous areas and in repetitive tasks. Cyber physical infrastructures have been identified by the UK Robotics Growth Partnership as a key enabler in how we utilize and interact with sensors and machines via the virtual and physical worlds. Cyber Physical Systems (CPS) allow robotics and artificial intelligence to adapt and be repurposed at pace, so that new challenges can be addressed. A challenge exists within robotics to secure an effective partnership in a wide range of areas, including shared workspaces and Beyond Visual Line of Sight (BVLOS) operations. Improved manipulation abilities have increased robots' accessibility by enabling them to open doorways; however, challenges remain in how a robot decides whether it is safe to move into a new workspace. Current sensing methods are limited to line of sight and cannot capture data beyond doorways or walls, so a robot is unable to sense whether it is safe to open a door. A further limitation is that robots are unable to detect whether a human is within a shared workspace; if a human could be detected, extended safety precautions could be taken to ensure the safe autonomous operation of the robot. These challenges, framed as safety, trust and resilience, inhibit the successful advancement of CPS. This paper evaluates frequency modulated continuous wave (FMCW) radar sensing for human detection and through-wall detection to increase situational awareness. The results validate the sensor's ability to distinguish between a person and infrastructure, and show increased situational awareness for navigation via foresight monitoring through walls.
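The range measurement underlying FMCW radar can be illustrated with the standard chirp relations. A reflector at range R returns each chirp after a round-trip delay of 2R/c; mixing the received and transmitted signals produces a beat frequency f_b = S × 2R/c, where S = B / T_c is the chirp slope for sweep bandwidth B and chirp duration T_c. Solving for range gives R = c f_b / (2S), and two reflectors can be separated in range only if they are at least c / (2B) apart. The sketch below encodes these relations; the 4 GHz, 40 microsecond chirp in the usage block is a hypothetical example, not the configuration of the sensor evaluated in the paper, and human detection and through-wall processing are not shown.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def chirp_slope(bandwidth_hz: float, chirp_duration_s: float) -> float:
    """Frequency sweep rate S = B / T_c of a linear chirp, in Hz/s."""
    return bandwidth_hz / chirp_duration_s


def range_from_beat(beat_hz: float, bandwidth_hz: float, chirp_duration_s: float) -> float:
    """Range of a reflector from the beat (IF) frequency: R = c * f_b / (2 * S)."""
    return SPEED_OF_LIGHT * beat_hz / (2.0 * chirp_slope(bandwidth_hz, chirp_duration_s))


def range_resolution(bandwidth_hz: float) -> float:
    """Smallest separable difference in range: dR = c / (2 * B)."""
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    # Hypothetical chirp: 4 GHz sweep over 40 microseconds.
    B, Tc = 4e9, 40e-6
    print(f"range for 1 MHz beat: {range_from_beat(1e6, B, Tc):.3f} m")  # 1.499 m
    print(f"range resolution: {range_resolution(B) * 100:.2f} cm")      # 3.75 cm
```

Note that resolution depends only on the swept bandwidth, which is why wide-band millimeter-wave chirps can separate closely spaced reflectors.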

Bio-inspired Multi-robot Autonomy

Mar 15, 2022

Abstract: Increasingly, high value industrial markets are driving trends towards improved functionality and resilience from resident autonomous systems. This has led to growth in multi-robot fleets that aim to leverage the complementary attributes of diverse platforms. In this paper we introduce a novel bio-inspired Symbiotic System of Systems Approach (SSOSA) for designing the operational governance of a multi-robot (MR) fleet consisting of ground-based quadruped and wheeled platforms. SSOSA couples the MR-fleet to the resident infrastructure monitoring systems in one collaborative digital commons. The hyper visibility of the integrated distributed systems, achieved through a low-latency bidirectional communication network, supports collaboration, coordination and corroboration (3C) across the integrated systems. In our experiment, we demonstrate how an operator can activate a pre-determined autonomous mission and use SSOSA to overcome intrinsic and external risks to the autonomous mission. We demonstrate how resilience can be enhanced by local collaboration between SPOT and Husky: we detect a replacement battery and use the manipulator arm of SPOT to support a Clearpath Husky A200 wheeled robotic platform. This increases the resilience of an autonomous mission, as robots can collaborate to maintain the battery state of the Husky robot. Overall, these initial results demonstrate the value of an SSOSA approach in addressing a key operational barrier to scalable autonomy: resilience.

A Review: Challenges and Opportunities for Artificial Intelligence and Robotics in the Offshore Wind Sector

Dec 13, 2021

Abstract: A global trend towards larger wind turbines sited farther from shore is emerging within the rapidly growing offshore wind farm market. In the UK, the offshore wind sector produced its highest amount of electricity in 2019, a 19.6% increase on the year before. The UK is set to increase production further, targeting a 74.7% increase in installed turbine capacity, as reflected in recent Crown Estate leasing rounds. With such tremendous growth, the sector is now looking to Robotics and Artificial Intelligence (RAI) to tackle lifecycle service barriers so as to support sustainable and profitable offshore wind energy production. Today, RAI applications are predominantly used to support short-term objectives in operation and maintenance. However, RAI has the potential to play a critical role throughout the full lifecycle of offshore wind infrastructure, including surveying, planning, design, logistics, operational support, training and decommissioning. This paper presents one of the first systematic reviews of RAI for the offshore renewable energy sector. The state of the art in RAI, from both industry and academia, is analyzed with respect to current and future offshore energy requirements. Our review also includes a detailed evaluation of the investment, regulation and skills development required to support the adoption of RAI. The key trends identified through a detailed analysis of patent and academic publication databases provide insights into barriers such as certification of autonomous platforms for safety compliance and reliability, the need for digital architectures for scalability in autonomous fleets, adaptive mission planning for resilient resident operations, and optimization of human-machine interaction for trusted partnerships between people and autonomous assistants.

Symbiotic System Design for Safe and Resilient Autonomous Robotics in Offshore Wind Farms

Jan 23, 2021

Abstract: To reduce Operation and Maintenance (O&M) costs on offshore wind farms, where 80% of the O&M cost relates to deploying personnel, the offshore wind sector looks to robotics and Artificial Intelligence (AI) for solutions. Barriers to Beyond Visual Line of Sight (BVLOS) robotics include operational safety compliance and resilience, inhibiting the commercialization of autonomous services offshore. To address safety and resilience challenges we propose a symbiotic system, reflecting lifecycle learning and co-evolution with knowledge sharing for the mutual gain of robotic platforms and remote human operators. Our methodology enables the run-time verification of safety, reliability and resilience during autonomous missions. We synchronize digital models of the robot, environment and infrastructure, and integrate front-end analytics and bidirectional communication for autonomous adaptive mission planning and situation reporting to a remote operator. A reliability ontology for the deployed robot, based on our holistic hierarchical-relational model, supports computationally efficient analysis of platform data. We analyze the mission status and diagnostics of critical sub-systems within the robot to provide automatic updates to our run-time reliability ontology, enabling faults to be translated into failure modes for decision making during the mission. We demonstrate an asset inspection mission within a confined space and employ millimeter-wave sensing to enhance situational awareness by detecting the presence of obscured personnel to mitigate risk. Our results demonstrate that a symbiotic system provides enhanced resilience for BVLOS missions. A symbiotic system addresses operational challenges and the reprioritization of autonomous mission objectives. This advances the technology required to achieve fully trustworthy autonomous systems.
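A hierarchical-relational reliability model of the kind described above can be sketched as a tree of robot, sub-system and component nodes (the hierarchy), plus a lookup from (component, fault) pairs to failure modes (the relations). At run time, diagnostics attach faults to components and a traversal translates them into failure modes for decision making. The component names, fault codes, failure modes and function names are hypothetical placeholders; this is an illustrative structure, not the authors' ontology.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass
class Node:
    """Element of a hypothetical robot hierarchy: robot > sub-system > component."""
    name: str
    children: List["Node"] = field(default_factory=list)
    faults: Set[str] = field(default_factory=set)


# Hypothetical relational rules linking a (component, fault) pair to a failure mode.
FAILURE_MODES = {
    ("battery", "low_voltage"): "loss_of_power",
    ("lidar", "no_data"): "loss_of_perception",
    ("drive_motor", "overcurrent"): "loss_of_mobility",
}


def record_fault(node: Node, component: str, fault: str) -> bool:
    """Run-time update: attach a diagnostic fault to the named component."""
    if node.name == component:
        node.faults.add(fault)
        return True
    return any(record_fault(child, component, fault) for child in node.children)


def failure_modes(node: Node, path: Tuple[str, ...] = ()) -> List[Tuple[str, str]]:
    """Walk the hierarchy and translate each recorded fault into a failure mode."""
    path = path + (node.name,)
    modes = []
    for fault in sorted(node.faults):
        modes.append((" > ".join(path), FAILURE_MODES.get((node.name, fault), "unclassified")))
    for child in node.children:
        modes.extend(failure_modes(child, path))
    return modes


if __name__ == "__main__":
    robot = Node("robot", [
        Node("power", [Node("battery")]),
        Node("perception", [Node("lidar")]),
        Node("locomotion", [Node("drive_motor")]),
    ])
    record_fault(robot, "battery", "low_voltage")
    print(failure_modes(robot))  # [('robot > power > battery', 'loss_of_power')]
```

Keeping the hierarchy and the fault relations separate means new failure rules can be added without restructuring the robot model; faults with no rule are surfaced as "unclassified" rather than silently dropped.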

SMASH: One-Shot Model Architecture Search through HyperNetworks

Aug 17, 2017

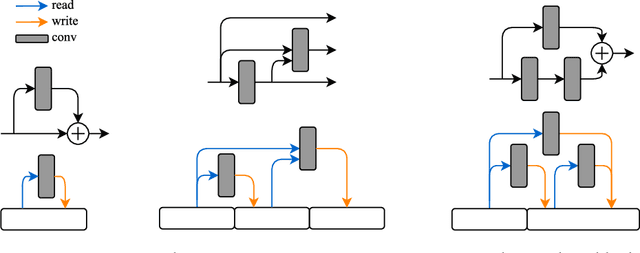

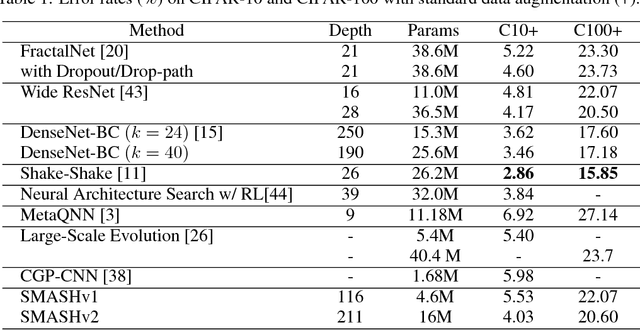

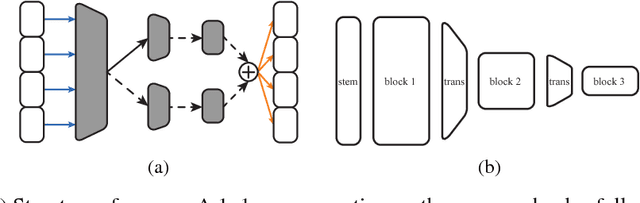

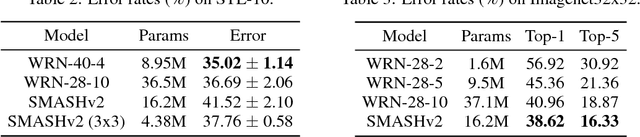

Abstract: Designing architectures for deep neural networks requires expert knowledge and substantial computation time. We propose a technique to accelerate architecture selection by learning an auxiliary HyperNet that generates the weights of a main model conditioned on that model's architecture. By comparing the relative validation performance of networks with HyperNet-generated weights, we can effectively search over a wide range of architectures at the cost of a single training run. To facilitate this search, we develop a flexible mechanism based on memory read-writes that allows us to define a wide range of network connectivity patterns, with ResNet, DenseNet, and FractalNet blocks as special cases. We validate our method (SMASH) on CIFAR-10 and CIFAR-100, STL-10, ModelNet10, and Imagenet32x32, achieving competitive performance with similarly-sized hand-designed networks. Our code is available at https://github.com/ajbrock/SMASH
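The one-shot search idea can be illustrated with a deliberately tiny toy. Here an "architecture" is assumed to be a binary mask over the input connections of a linear main model, and the HyperNet is assumed to be a linear map from that mask to the main model's weights. A single training run updates only the HyperNet, sampling a random architecture at each step; every candidate is then scored on validation data using generated weights, without being trained itself. SMASH's memory-bank encoding and convolutional HyperNet are not reproduced, and all function names and the synthetic task are hypothetical.

```python
import itertools
import random

N_INPUTS = 3  # candidate input connections of the toy main model


def make_data(n, rng):
    """Synthetic regression task (assumed): only inputs 0 and 2 carry signal."""
    data = []
    for _ in range(n):
        x = [rng.uniform(-1.0, 1.0) for _ in range(N_INPUTS)]
        data.append((x, 2.0 * x[0] - 1.0 * x[2]))
    return data


def generate_weights(H, arch):
    """HyperNet: map a binary architecture mask to main-model weights.

    Weight j is a linear function of the mask (plus a bias term) and is forced
    to zero when connection j is absent from the architecture.
    """
    feats = [1.0] + [float(a) for a in arch]
    return [arch[j] * sum(h * f for h, f in zip(H[j], feats)) for j in range(N_INPUTS)]


def predict(weights, x):
    return sum(w * xi for w, xi in zip(weights, x))


def train_hypernet(data, steps, lr, rng):
    """Single training run: sample a random architecture per step, update only H."""
    H = [[0.0] * (N_INPUTS + 1) for _ in range(N_INPUTS)]
    for step in range(steps):
        step_lr = lr * (1.0 - step / steps)  # linear decay damps SGD noise late in the run
        arch = [rng.randint(0, 1) for _ in range(N_INPUTS)]
        x, y = rng.choice(data)
        err = predict(generate_weights(H, arch), x) - y
        feats = [1.0] + [float(a) for a in arch]
        for j in range(N_INPUTS):
            if arch[j]:
                # d(0.5 * err^2) / dH[j][k] = err * x[j] * feats[k]
                for k, f in enumerate(feats):
                    H[j][k] -= step_lr * err * x[j] * f
    return H


def rank_architectures(H, val):
    """Score every mask with HyperNet-generated weights; lowest validation MSE first."""
    ranked = []
    for arch in itertools.product((0, 1), repeat=N_INPUTS):
        w = generate_weights(H, arch)
        mse = sum((predict(w, x) - y) ** 2 for x, y in val) / len(val)
        ranked.append((mse, arch))
    return sorted(ranked)


if __name__ == "__main__":
    rng = random.Random(0)
    train, val = make_data(500, rng), make_data(200, rng)
    H = train_hypernet(train, steps=8000, lr=0.05, rng=rng)
    for mse, arch in rank_architectures(H, val)[:3]:
        print(arch, round(mse, 4))
```

Because inputs 0 and 2 carry all the signal in this synthetic task, the two masks that include both of them should rank ahead of the rest, even though no candidate was ever trained on its own.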

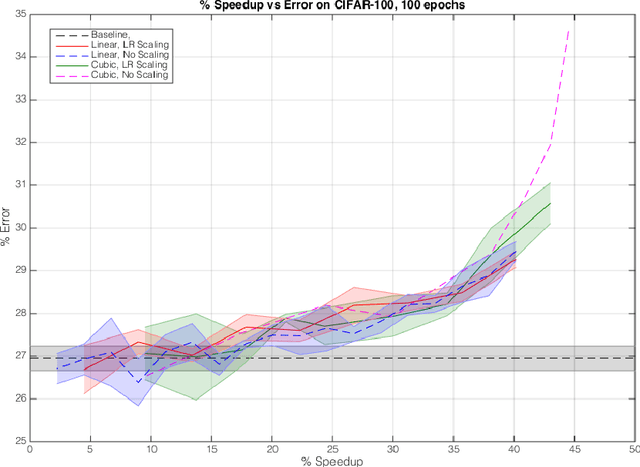

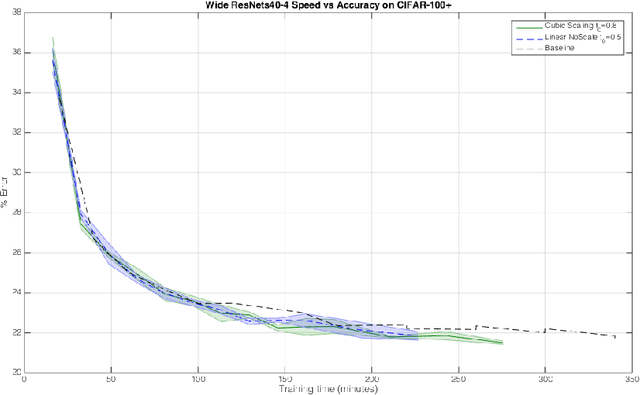

FreezeOut: Accelerate Training by Progressively Freezing Layers

Jun 18, 2017

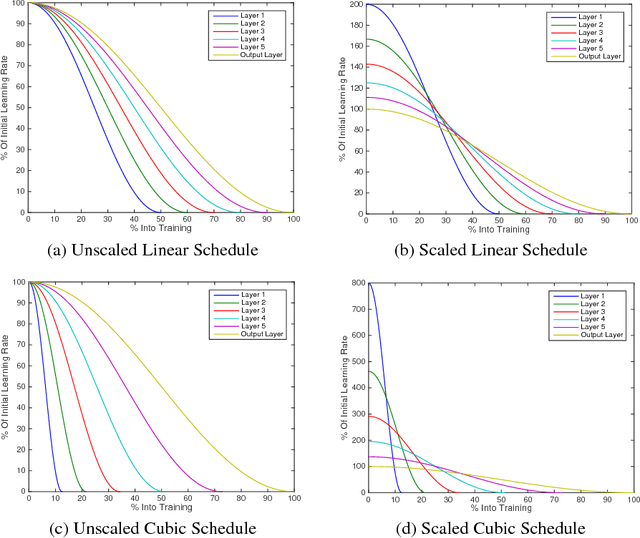

Abstract: The early layers of a deep neural net have the fewest parameters but take up the most computation. In this extended abstract, we propose to train the hidden layers for only a set portion of the training run, freezing them out one by one and excluding them from the backward pass. Through experiments on CIFAR, we empirically demonstrate that FreezeOut yields savings of up to 20% wall-clock time during training with a 3% loss in accuracy for DenseNets, a 20% speedup without loss of accuracy for ResNets, and no improvement for VGG networks. Our code is publicly available at https://github.com/ajbrock/FreezeOut
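The abstract does not state how each layer's training time is set. The sketch below assumes one plausible schedule: each layer's learning rate is cosine-annealed from its base value to zero at its own cutoff t_i (a fraction of the run), with cutoffs spaced linearly from a hyperparameter t0 for the first layer to 1.0 for the last, so the earliest layers freeze first. Once training progress t passes t_i, the layer receives no updates and can be dropped from the backward pass. The function names and the default t0 are assumptions.

```python
import math
from typing import List


def freeze_times(num_layers: int, t0: float = 0.5) -> List[float]:
    """Fraction of training after which each layer is frozen (assumed linear spacing).

    Layer 0 (the earliest) freezes at t0 and the last layer trains until 1.0.
    """
    if num_layers == 1:
        return [1.0]
    return [t0 + (1.0 - t0) * i / (num_layers - 1) for i in range(num_layers)]


def layer_lr(base_lr: float, t: float, t_i: float) -> float:
    """Cosine-anneal a layer's learning rate from base_lr to 0 at t_i; 0 once frozen."""
    if t >= t_i:
        return 0.0
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * t / t_i))


def active_layers(t: float, times: List[float]) -> List[int]:
    """Layers still updated (and kept in the backward pass) at training progress t."""
    return [i for i, t_i in enumerate(times) if t < t_i]


if __name__ == "__main__":
    times = freeze_times(5, t0=0.5)
    for t in (0.0, 0.6, 0.8, 0.95):
        lrs = [round(layer_lr(0.1, t, t_i), 4) for t_i in times]
        print(f"t={t:.2f} active={active_layers(t, times)} lr={lrs}")
```

Since the earliest layers are frozen first, backpropagation can stop before reaching them for the rest of the run, which is where the wall-clock savings would come from.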

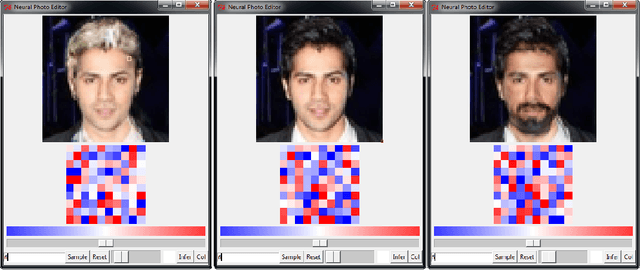

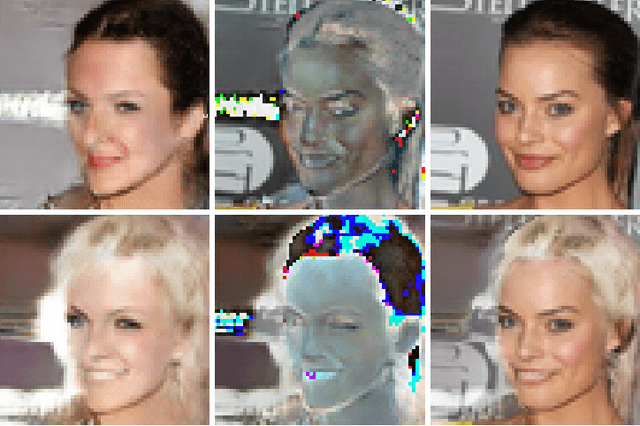

Neural Photo Editing with Introspective Adversarial Networks

Feb 06, 2017

Abstract: The increasingly photorealistic sample quality of generative image models suggests their feasibility in applications beyond image generation. We present the Neural Photo Editor, an interface that leverages the power of generative neural networks to make large, semantically coherent changes to existing images. To tackle the challenge of achieving accurate reconstructions without loss of feature quality, we introduce the Introspective Adversarial Network, a novel hybridization of the VAE and GAN. Our model efficiently captures long-range dependencies through use of a computational block based on weight-shared dilated convolutions, and improves generalization performance with Orthogonal Regularization, a novel weight regularization method. We validate our contributions on CelebA, SVHN, and CIFAR-100, and produce samples and reconstructions with high visual fidelity.
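The abstract names Orthogonal Regularization but does not give its formula. A common way to encourage orthogonal weights, assumed here, is to penalize the squared Frobenius norm of (W W^T − I), which is zero exactly when the rows of W are orthonormal; its gradient is 4 (W W^T − I) W. The paper's exact norm and weighting may differ, and the helper names below are hypothetical.

```python
def gram(W):
    """W W^T for a matrix stored as a list of rows."""
    return [[sum(a * b for a, b in zip(ri, rj)) for rj in W] for ri in W]


def orthogonal_penalty(W):
    """Squared Frobenius norm of (W W^T - I); zero exactly when the rows of W are orthonormal."""
    G = gram(W)
    n = len(W)
    return sum((G[i][j] - (1.0 if i == j else 0.0)) ** 2 for i in range(n) for j in range(n))


def penalty_grad(W):
    """Gradient of the penalty with respect to W: 4 (W W^T - I) W."""
    G = gram(W)
    n, m = len(W), len(W[0])
    A = [[G[i][j] - (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    return [[4.0 * sum(A[i][k] * W[k][c] for k in range(n)) for c in range(m)] for i in range(n)]


def regularize_step(W, lr):
    """One gradient step on the penalty alone (in training it is added to the task loss)."""
    g = penalty_grad(W)
    return [[w - lr * gw for w, gw in zip(row, grow)] for row, grow in zip(W, g)]


if __name__ == "__main__":
    W = [[1.0, 1.0], [0.0, 1.0]]
    print(orthogonal_penalty(W))  # 3.0
    for _ in range(100):
        W = regularize_step(W, lr=0.01)
    print(round(orthogonal_penalty(W), 6))
```

In practice the penalty would be scaled by a small coefficient and summed over layers, so it nudges weights toward orthogonality without overriding the main objective.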

Generative and Discriminative Voxel Modeling with Convolutional Neural Networks

Aug 16, 2016

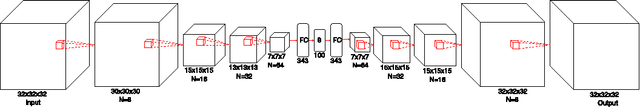

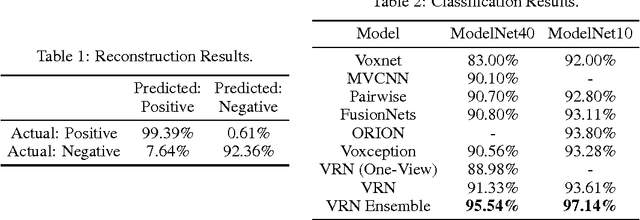

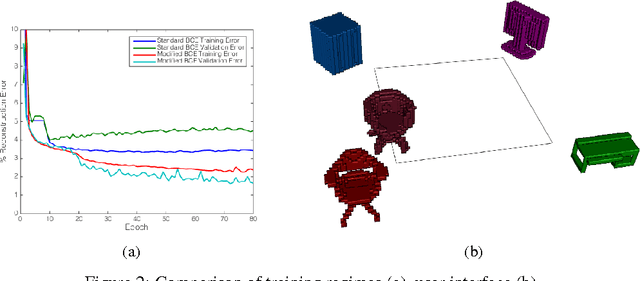

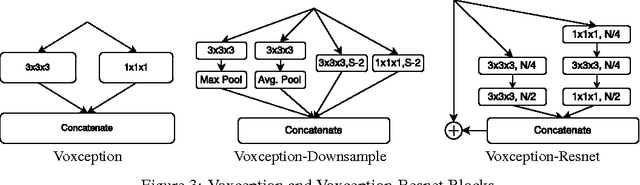

Abstract: When working with three-dimensional data, the choice of representation is key. We explore voxel-based models and present evidence for the viability of voxellated representations in applications including shape modeling and object classification. Our key contributions are methods for training voxel-based variational autoencoders, a user interface for exploring the latent space learned by the autoencoder, and a deep convolutional neural network architecture for object classification. We address challenges unique to voxel-based representations, and empirically evaluate our models on the ModelNet benchmark, where we demonstrate a 51.5% relative improvement over the state of the art for object classification.
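The models above operate on voxel grids. A minimal sketch of turning a point sample of a shape into a binary occupancy grid follows; the normalization to the unit cube, the resolution and the function names are assumptions for illustration, since the abstract does not describe how the ModelNet data were preprocessed.

```python
def voxelize(points, resolution):
    """Binary occupancy grid from (x, y, z) points inside the unit cube [0, 1]^3."""
    grid = [[[0] * resolution for _ in range(resolution)] for _ in range(resolution)]
    for x, y, z in points:
        if not all(0.0 <= c <= 1.0 for c in (x, y, z)):
            raise ValueError(f"point {(x, y, z)} lies outside the unit cube")
        # Scale to cell indices; clamp so a coordinate of exactly 1.0 lands in the last cell.
        i, j, k = (min(int(c * resolution), resolution - 1) for c in (x, y, z))
        grid[i][j][k] = 1
    return grid


def occupied_cells(grid):
    """Number of filled voxels in the grid."""
    return sum(v for plane in grid for row in plane for v in row)


if __name__ == "__main__":
    pts = [(0.0, 0.0, 0.0), (0.01, 0.01, 0.01), (0.99, 0.5, 0.1), (1.0, 1.0, 1.0)]
    grid = voxelize(pts, resolution=4)
    print(occupied_cells(grid))  # 3: the first two points share cell (0, 0, 0)
```

Because memory grows with the cube of the resolution, voxel models typically trade fine geometric detail for a fixed-size input that standard 3D convolutions can consume.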
